Best AI tools for< Ai Security Engineer >

Infographic

20 - AI tool Sites

VIDOC

VIDOC is an AI-powered security engineer that automates code review and penetration testing. It continuously scans and reviews code to detect and fix security issues, helping developers deliver secure software faster. VIDOC is easy to use, requiring only two lines of code to be added to a GitHub Actions workflow. It then takes care of the rest, providing developers with a tailored code solution to fix any issues found.

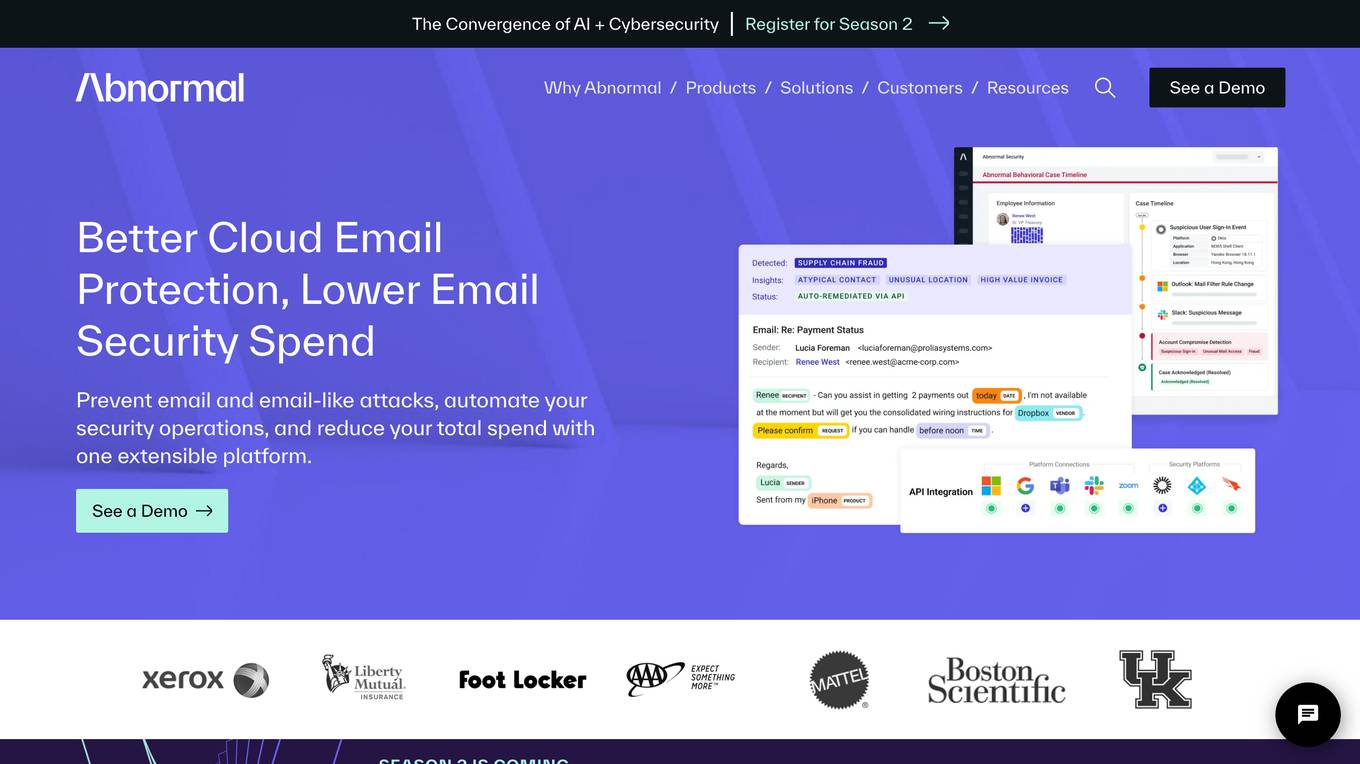

Abnormal Security

Abnormal Security is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email threats such as phishing, social engineering, and account takeovers. The platform is trusted by over 3,000 customers, including 25% of the Fortune 500 companies. Abnormal Security offers a comprehensive cloud email security solution, behavioral anomaly detection, SaaS security, and autonomous AI security agents to provide multi-layered protection against advanced email attacks. The platform is recognized as a leader in email security and AI-native security, delivering unmatched protection and reducing the risk of phishing attacks by 90%.

Rebuff AI

Rebuff AI is an AI tool designed as a self-hardening prompt injection detector. It is built to strengthen its prompt detection capabilities as it faces more attacks. The tool aims to protect the AI community by providing a reliable and robust solution for prompt injection detection. Rebuff AI offers an API for developers to integrate its functionality into their applications, ensuring enhanced security measures against malicious prompt injections.

Cyguru

Cyguru is an all-in-one cloud-based AI Security Operation Center (SOC) that offers a comprehensive range of features for a robust and secure digital landscape. Its Security Operation Center is the cornerstone of its service domain, providing AI-Powered Attack Detection, Continuous Monitoring for Vulnerabilities and Misconfigurations, Compliance Assurance, SecPedia: Your Cybersecurity Knowledge Hub, and Advanced ML & AI Detection. Cyguru's AI-Powered Analyst promptly alerts users to any suspicious behavior or activity that demands attention, ensuring timely delivery of notifications. The platform is accessible to everyone, with up to three free servers and subsequent pricing that is more than 85% below the industry average.

MLSecOps

MLSecOps is an AI tool designed to drive the field of MLSecOps forward through high-quality educational resources and tools. It focuses on traditional cybersecurity principles, emphasizing people, processes, and technology. The MLSecOps Community educates and promotes the integration of security practices throughout the AI & machine learning lifecycle, empowering members to identify, understand, and manage risks associated with their AI systems.

Abnormal

Abnormal is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email attacks such as phishing, social engineering, and account takeovers. The platform offers unified protection across email and cloud applications, behavioral anomaly detection, account compromise detection, data security, and autonomous AI agents for security operations. Abnormal is recognized as a leader in email security and AI-native security, trusted by over 3,000 customers, including 20% of the Fortune 500. The platform aims to autonomously protect humans, reduce risks, save costs, accelerate AI adoption, and provide industry-leading security solutions.

Vectra AI

Vectra AI is an advanced AI-driven cybersecurity platform that helps organizations detect, prioritize, investigate, and respond to sophisticated cyber threats in real-time. The platform provides Attack Signal Intelligence to arm security analysts with the necessary intel to stop attacks fast. Vectra AI offers integrated signal for extended detection and response (XDR) across various domains such as network, identity, cloud, and endpoint security. Trusted by 1,500 enterprises worldwide, Vectra AI is known for its patented AI security solutions that deliver the best attack signal intelligence on the planet.

Lakera

Lakera is the world's most advanced AI security platform that offers cutting-edge solutions to safeguard GenAI applications against various security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks to ensure top-notch security standards. Lakera is suitable for security teams, product teams, and LLM builders looking to secure their AI applications effectively and efficiently.

Giskard

Giskard is an AI Red Teaming & LLM Security Platform designed to continuously secure LLM agents by preventing hallucinations and security issues in production. It offers automated testing to catch vulnerabilities before they happen, trusted by enterprise AI leaders to ensure data and reputation protection. The platform provides comprehensive protection against various security attacks and vulnerabilities, offering end-to-end encryption, data residency & isolation, and compliance with GDPR, SOC 2 Type II, and HIPAA. Giskard helps in uncovering AI vulnerabilities, stopping business failures at the source, unifying testing across teams, and saving time with continuous testing to prevent regressions.

Darktrace

Darktrace is an essential AI cybersecurity platform that offers proactive protection, cloud-native AI security, comprehensive risk management, and user protection across various devices. It accelerates triage by 10x, defends with confidence, and connects with various integrations. Darktrace ActiveAI Security Platform spots novel threats across organizations, providing solutions for ransomware, APTs, phishing, data loss, and more. With a focus on defense, Darktrace aims to transform cybersecurity by detecting and responding to known and novel threats in real-time.

Huntr

Huntr is the world's first bug bounty platform for AI/ML. It provides a single place for security researchers to submit vulnerabilities, ensuring the security and stability of AI/ML applications, including those powered by Open Source Software (OSS).

hCaptcha Enterprise

hCaptcha Enterprise is a comprehensive AI-powered security platform designed to detect and deter human and automated threats, including bot detection, fraud protection, and account defense. It offers highly accurate bot detection, fraud protection without false positives, and account takeover detection. The platform also provides privacy-preserving abuse detection with zero personally identifiable information (PII) required. hCaptcha Enterprise is trusted by category leaders in various industries worldwide, offering universal support, comprehensive security, and compliance with global privacy standards like GDPR, CCPA, and HIPAA.

Darktrace

Darktrace is a cybersecurity platform that leverages AI technology to provide proactive protection against cyber threats. It offers cloud-native AI security solutions for networks, emails, cloud environments, identity protection, and endpoint security. Darktrace's AI Analyst investigates alerts at the speed and scale of AI, mimicking human analyst behavior. The platform also includes services such as 24/7 expert support and incident management. Darktrace's AI is built on a unique approach where it learns from the organization's data to detect and respond to threats effectively. The platform caters to organizations of all sizes and industries, offering real-time detection and autonomous response to known and novel threats.

Ambient.ai

Ambient.ai is an AI-powered physical security software that helps prevent security incidents by detecting threats in real-time, auto-clearing false alarms, and accelerating investigations. The platform uses computer vision intelligence to monitor cameras for suspicious activities, decrease alarms, and enable rapid investigations. Ambient.ai offers rich integration ecosystem, detections for a spectrum of threats, unparalleled operational efficiency, and enterprise-grade privacy to ensure maximum security and efficiency for its users.

VOLT AI

VOLT AI is a cloud-based enterprise security application that utilizes advanced AI technology to intercept threats in real-time. The application offers solutions for various industries such as education, corporate, and cities, focusing on perimeter security, medical emergencies, and weapons detection. VOLT AI provides features like unified cameras, video intelligence, real-time notifications, automated escalations, and digital twin creation for advanced situational awareness. The application aims to enhance safety and security by detecting security risks and notifying users promptly.

Veriti

Veriti is an AI-driven platform that proactively monitors and safely remediates exposures across the entire security stack, without disrupting the business. It helps organizations maximize their security posture while ensuring business uptime. Veriti offers solutions for safe remediation, MITRE ATT&CK®, healthcare, MSSPs, and manufacturing. The platform correlates exposures to misconfigurations, continuously assesses exposures, integrates with various security solutions, and prioritizes remediation based on business impact. Veriti is recognized for its role in exposure assessments and remediation, providing a consolidated security platform for businesses to neutralize threats before they happen.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

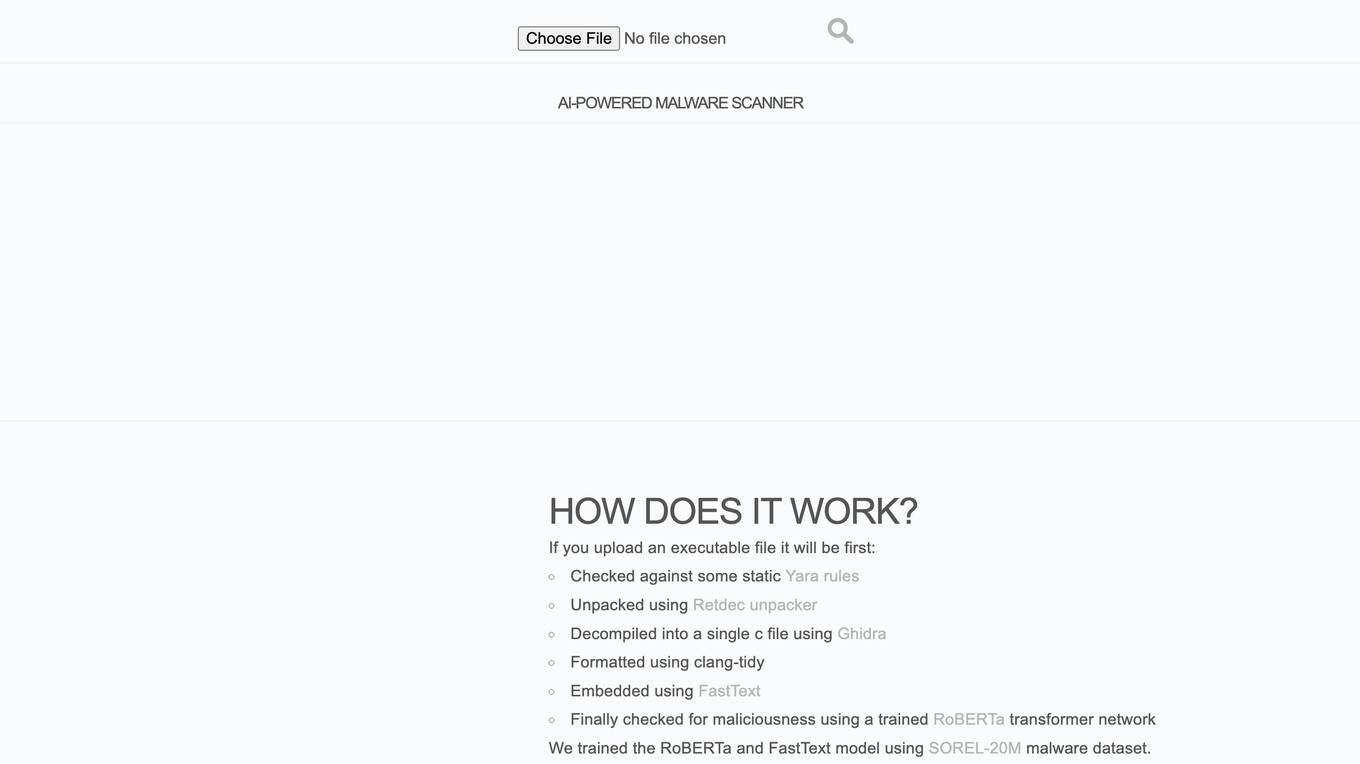

SecureWoof

SecureWoof is an AI-powered Malware Scanner that utilizes advanced technologies such as Yara rules, Retdec unpacker, Ghidra decompiler, clang-tidy formatter, FastText embedding, and RoBERTa transformer network to scan and detect malicious content in executable files. The tool is trained on the SOREL-20M malware dataset to enhance its detection capabilities.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

AppSec Assistant

AppSec Assistant is an AI-powered application designed to provide automated security recommendations in Jira Cloud. It focuses on ensuring data security by enabling secure-by-design software development. The tool simplifies setup by allowing users to add their OpenAI API key and organization, encrypts and stores data using Atlassian's Storage API, and provides tailored security recommendations for each ticket to reduce manual AppSec reviews. AppSec Assistant empowers developers by keeping up with their pace and helps in easing the security review bottleneck.

0 - Open Source Tools

20 - OpenAI Gpts

CISO GPT

Specialized LLM in computer security, acting as a CISO with 20 years of experience, providing precise, data-driven technical responses to enhance organizational security.

Smart Contract Audit Assistant by Keybox.AI

Get your Ethereum and L2 EVMs smart contracts audited updated knowledge base of vulnerabilities and exploits. Updated: Nov 14th 23

AdversarialGPT

Adversarial AI expert aiding in AI red teaming, informed by cutting-edge industry research (early dev)

ethicallyHackingspace (eHs)® (Full Spectrum)™

Full Spectrum Space Cybersecurity Professional ™ AI-copilot (BETA)

ethicallyHackingspace (eHs)® METEOR™ STORM™

Multiple Environment Threat Evaluation of Resources (METEOR)™ Space Threats and Operational Risks to Mission (STORM)™ non-profit product AI co-pilot

HackingPT

HackingPT is a specialized language model focused on cybersecurity and penetration testing, committed to providing precise and in-depth insights in these fields.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

Phoenix Vulnerability Intelligence GPT

Expert in analyzing vulnerabilities with ransomware focus with intelligence powered by Phoenix Security

CISO AI

Team of experts assisting CISOs, CIOs, Exec Teams, and Board Directors in cyber risk oversight and security program management, providing actionable strategic, operational, and tactical support. Enhanced with advanced technical security architecture and engineering expertise.

Guardian AI VPN

I'm GPTGuardian VPN, enhancing your GPT experience with top security and connectivity.

Securia

AI-powered audit ally. Enhance cybersecurity effortlessly with intelligent, automated security analysis. Safe, swift, and smart.

🛡️ CodeGuardian Pro+ 🛡️

Your AI-powered sentinel for code! Scans for vulnerabilities, offers security tips, and educates on best practices in cybersecurity. 🔍🔐

cloud exams coach

AI Cloud Computing (Engineering, Architecture, DevOps ) Certifications Coach for AWS, GCP, and Azure. I provide timed mock exams.

IoE - Internet of Everything Advisor

Advanced IoE-focused GPT, excelling in domain knowledge, security awareness, and problem-solving, powered by OpenAI

AI Implementation Guide for Sensitive/Private Data

Guide on AI implementation for secure data, with a focus on best practices and tools.

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"