Best AI tools for transcoding video

3 AI tool sites

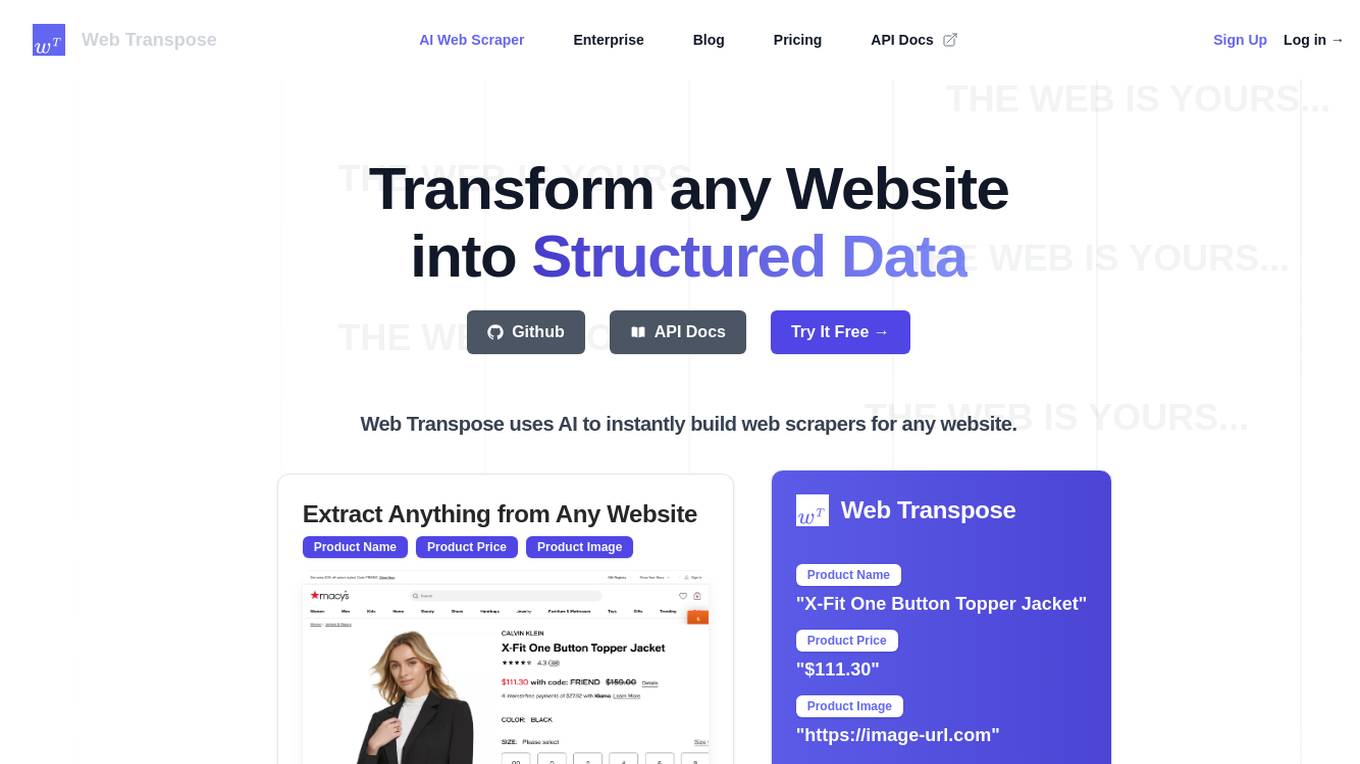

Web Transpose

Web Transpose is an AI-powered web scraping and web crawling API that turns websites into structured data. It uses AI to build a web scraper for any website automatically, so users can extract information efficiently and accurately without writing the scraper by hand. The tool is designed for production use, with low latency and built-in proxy handling. Web Transpose learns the structure of the target website, which reduces latency and helps avoid hallucinated (fabricated) output. Users can query any website as if it were an API and quickly build products on top of the scraped data.
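To make "structured data" concrete, here is a minimal hand-written scraper of the kind Web Transpose says it can build automatically. It uses only Python's standard `html.parser` module, involves no AI, and is not Web Transpose's API; the product-list markup and the `ProductParser` and `scrape` names are hypothetical, chosen only for illustration.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect name/price records from a hypothetical product-list markup.

    Assumed markup (illustrative only):
      <li class="product"><span class="name">...</span><span class="price">...</span></li>
    """

    def __init__(self):
        super().__init__()
        self.records = []   # one dict per <li class="product">
        self._field = None  # field the current text belongs to, if any

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.records.append({})
        elif tag == "span" and self.records:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        # Text after a closing </span> is not part of any field
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field and self.records:
            self.records[-1][self._field] = data.strip()


def scrape(html):
    """Return a list of dicts extracted from the given HTML string."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.records
```

Feeding it a page that follows the assumed markup returns a list such as `[{"name": "Widget", "price": "9.99"}]`. A scraper like this breaks whenever the site's markup changes, which is the maintenance burden that automatically generated scrapers aim to remove.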

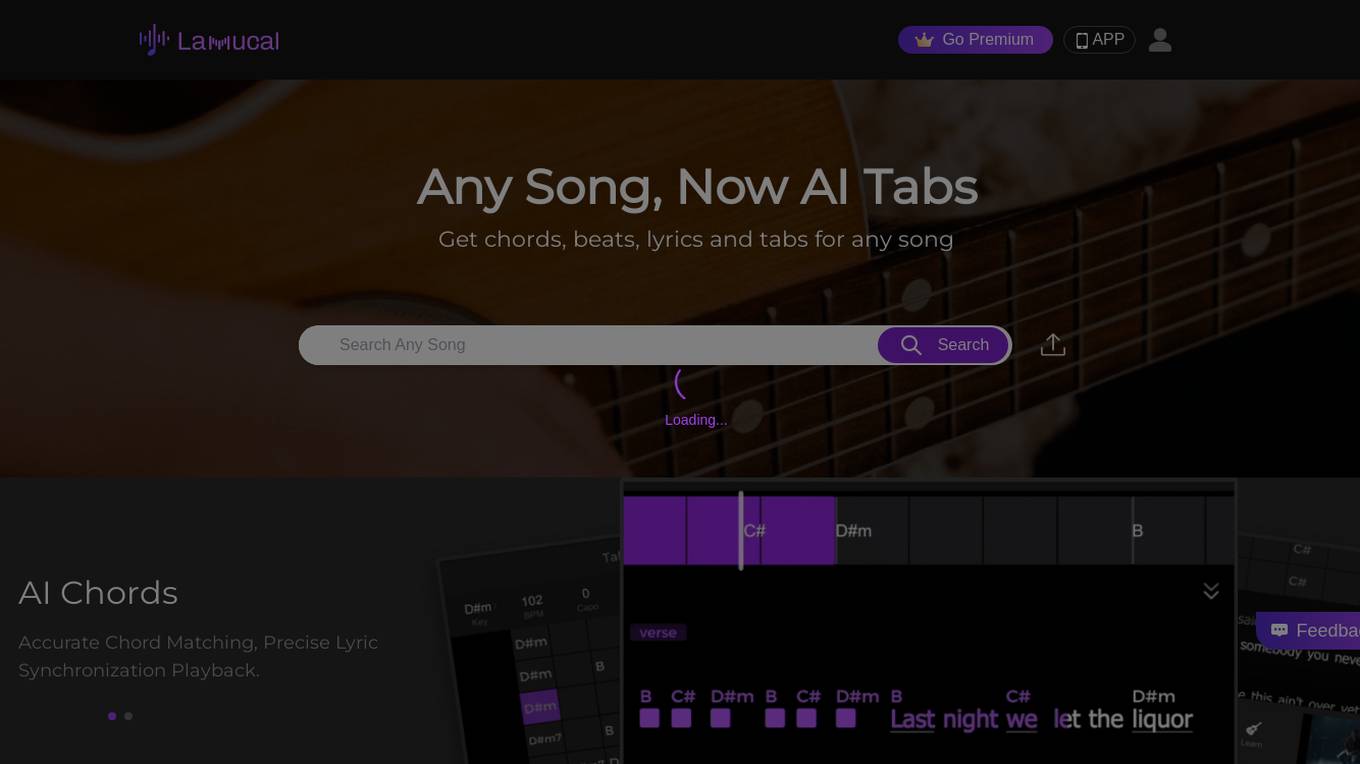

Lamucal

Lamucal is an AI-powered music application that provides users with accurate chords, beats, lyrics, and tabs for any song. It features AI-generated rhythm patterns and precise lyric synchronization, making it an invaluable tool for musicians and music enthusiasts alike. With Lamucal, users can easily find and play their favorite songs, explore new music, and improve their musical skills.

Lamucal

Lamucal is an AI-powered platform that provides real-time chords, lyrics, tabs, and melody for any song, making it a valuable tool for musicians and music enthusiasts. Users can upload a song or search for one to access its chords and other musical elements. With a user-friendly interface and a wide range of features, Lamucal aims to improve how its users learn and play music.

1 open-source AI tool

bmf

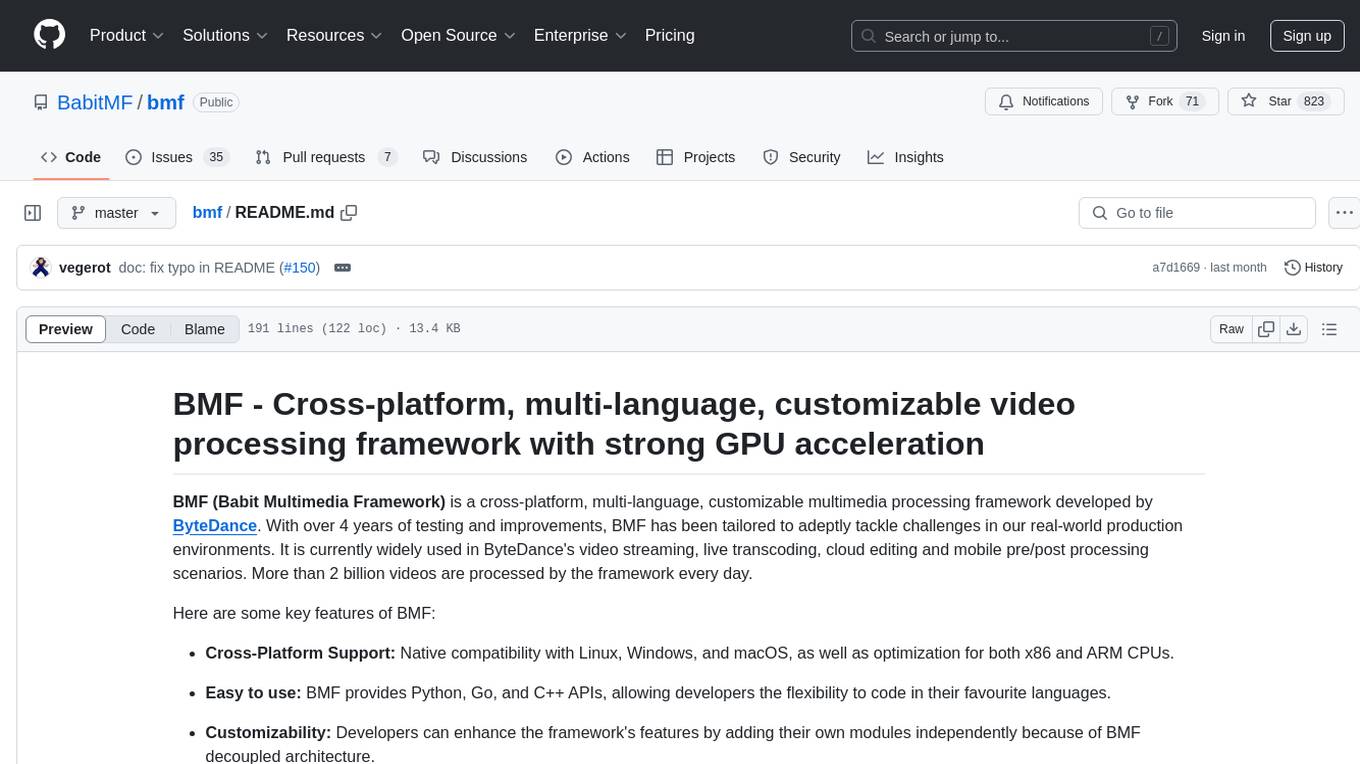

BMF (Babit Multimedia Framework) is a cross-platform, multi-language, customizable multimedia processing framework developed by ByteDance. It runs natively on Linux, Windows, and macOS, provides Python, Go, and C++ APIs, and delivers high performance with strong GPU acceleration. Developers can extend its features independently with their own modules, and it provides efficient data conversion across popular frameworks and hardware devices. BMFLite, a lightweight client-side framework, is used in apps such as Douyin and Xigua and serves over one billion users daily. BMF is widely used for video streaming, live transcoding, cloud editing, and mobile pre- and post-processing.
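As a rough illustration of how BMF expresses a transcode through its Python API, the sketch below builds a decode-to-encode graph modeled on the transcoding examples in the BMF repository. It assumes the `bmf` Python package is installed; the option names (`input_path`, `video_params`, `crf`, and so on) follow those examples and may differ between releases, and `build_encode_params` and `transcode` are hypothetical helper names, not part of BMF.

```python
def build_encode_params(output_path, width=320, height=240, crf=23):
    """Hypothetical helper: the option dict passed to bmf.encode.

    Key names follow the BMF transcoding examples (an assumption;
    check the docs for your BMF version).
    """
    return {
        "output_path": output_path,
        "video_params": {
            "codec": "h264",
            "width": width,
            "height": height,
            "crf": crf,
            "preset": "veryfast",
        },
        "audio_params": {
            "codec": "aac",
            "bit_rate": 128000,
            "sample_rate": 44100,
            "channels": 2,
        },
    }


def transcode(input_path, output_path):
    """Hypothetical helper: decode input_path, re-encode to H.264/AAC."""
    import bmf  # imported lazily so build_encode_params works without BMF

    graph = bmf.graph()
    # The decoder node's outputs are selected by name: 'video' and 'audio'
    streams = graph.decode({"input_path": input_path})
    # encode() consumes both streams; run() executes the whole graph
    bmf.encode(
        streams["video"],
        streams["audio"],
        build_encode_params(output_path),
    ).run()
```

Under these assumptions, calling `transcode("input.mp4", "output.mp4")` should re-encode the input to 320x240 H.264 video with AAC audio. Keeping the encoder options in a plain dict makes them easy to inspect or adjust before the graph runs.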