Best AI tools for: Improve System Performance

20 - AI tool Sites

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

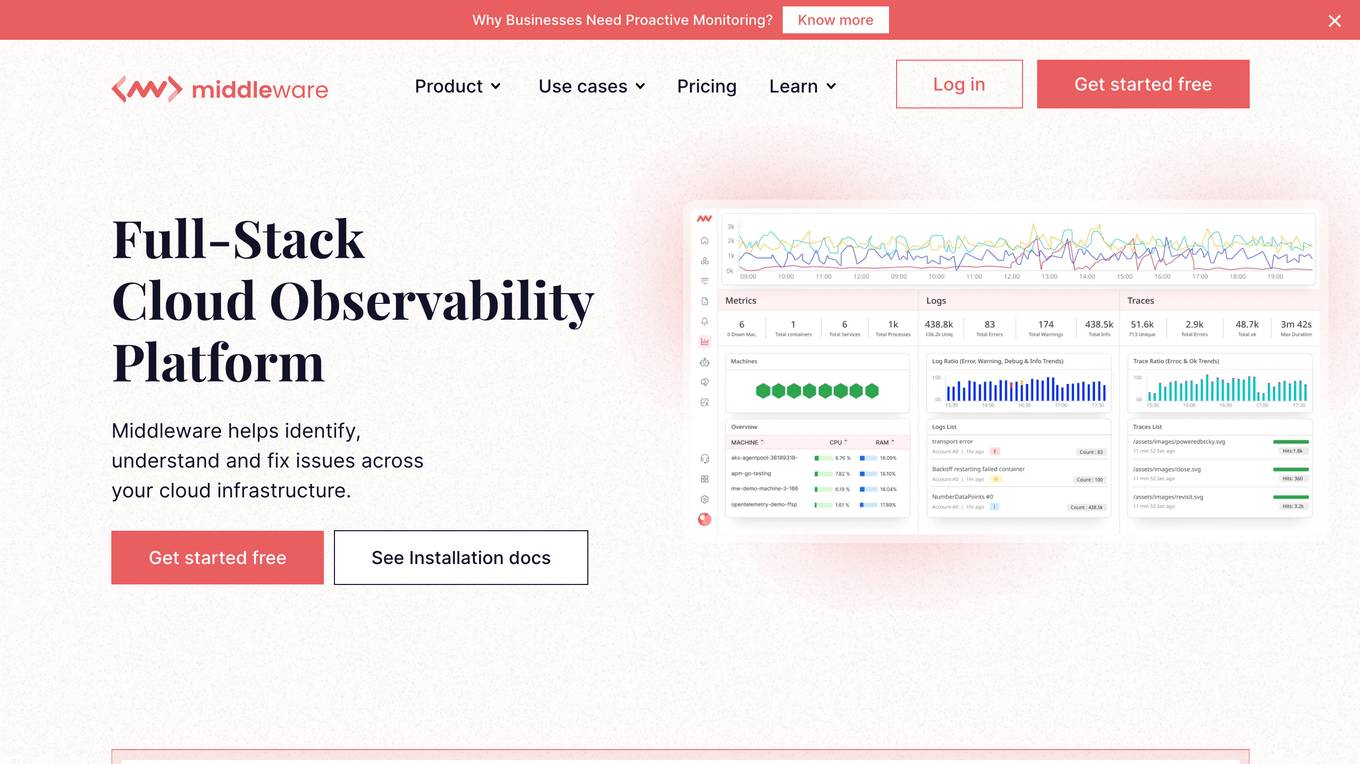

Cloud Observability Middleware

The website provides Full-Stack Cloud Observability services with a focus on Middleware. It offers comprehensive monitoring and analysis tools to help businesses optimize their cloud infrastructure performance. The platform enables users to gain insights into their middleware applications, identify bottlenecks, and improve overall system efficiency.

Webb.ai

Webb.ai is an AI-powered platform that offers automated troubleshooting for Kubernetes. It is designed to assist users in identifying and resolving issues within their Kubernetes environment efficiently. By leveraging AI technology, Webb.ai provides insights and recommendations to streamline the troubleshooting process, ultimately improving system reliability and performance. The platform is user-friendly and caters to both beginners and experienced users in the field of Kubernetes management.

未来简历

未来简历 is an AI-powered HR solution that helps businesses automate their hiring processes and improve their candidate experience. It uses AI to screen resumes, schedule interviews, and make hiring decisions. 未来简历 also provides a suite of tools to help businesses manage their employees, including a performance management system, a learning management system, and a payroll system.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Futr Energy

Futr Energy is a solar asset management platform designed to help manage solar power plants efficiently. It offers a range of tools and features such as remote monitoring, CMMS, inventory management, performance monitoring, and automated reports. Futr Energy aims to provide clean energy developers, operators, and investors with intelligent solutions to optimize the generation and performance of solar assets.

Nyle

Nyle is an AI-powered operating system for e-commerce growth. It provides tools to generate higher profits and increase team productivity. Nyle's platform includes advanced market analysis, quantitative assessment of customer sentiment, and automated insights. It also offers collaborative dashboards and interactive modeling to facilitate decision-making and cross-functional alignment.

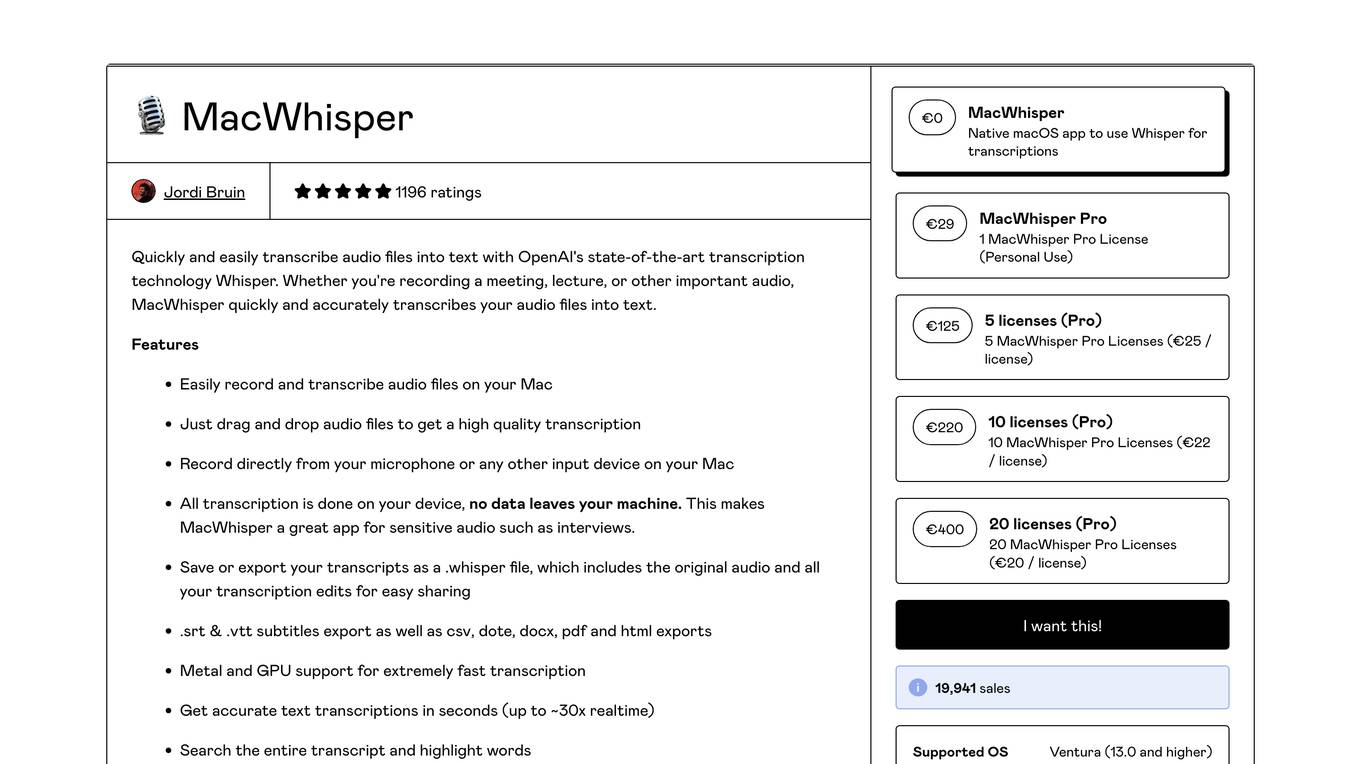

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

1case.io

1case.io is a website that utilizes Cloudflare to restrict access to certain users based on their IP address. The site displays an 'Access denied' message along with an error code and a link for troubleshooting. Users are prompted to enable cookies and are provided with information regarding the ban. The website aims to enhance security and performance by leveraging Cloudflare's services.

GetMerlin

GetMerlin is a website that focuses on security verification and performance optimization. It ensures a secure connection by reviewing the security of the user's connection before proceeding. The platform verifies the user's identity to prevent unauthorized access and provides a seamless browsing experience. GetMerlin uses Cloudflare for performance and security enhancements, ensuring a safe and efficient online environment for users.

BugFree.ai

BugFree.ai is an AI-powered platform for practicing system design and behavioral interviews, similar to LeetCode. It helps users prepare for technical interviews with mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

aqua

aqua is a comprehensive Quality Assurance (QA) management tool designed to streamline testing processes and enhance testing efficiency. It offers a wide range of features such as AI Copilot, bug reporting, test management, requirements management, user acceptance testing, and automation management. aqua serves industries including banking, insurance, manufacturing, government, technology, and healthcare, helping organizations improve testing productivity, software quality, and defect detection ratios. The tool integrates with popular platforms such as Jira, Jenkins, and JMeter, and offers both cloud and on-premise deployment options. With AI-enhanced capabilities, aqua aims to make testing faster, more efficient, and less error-prone.

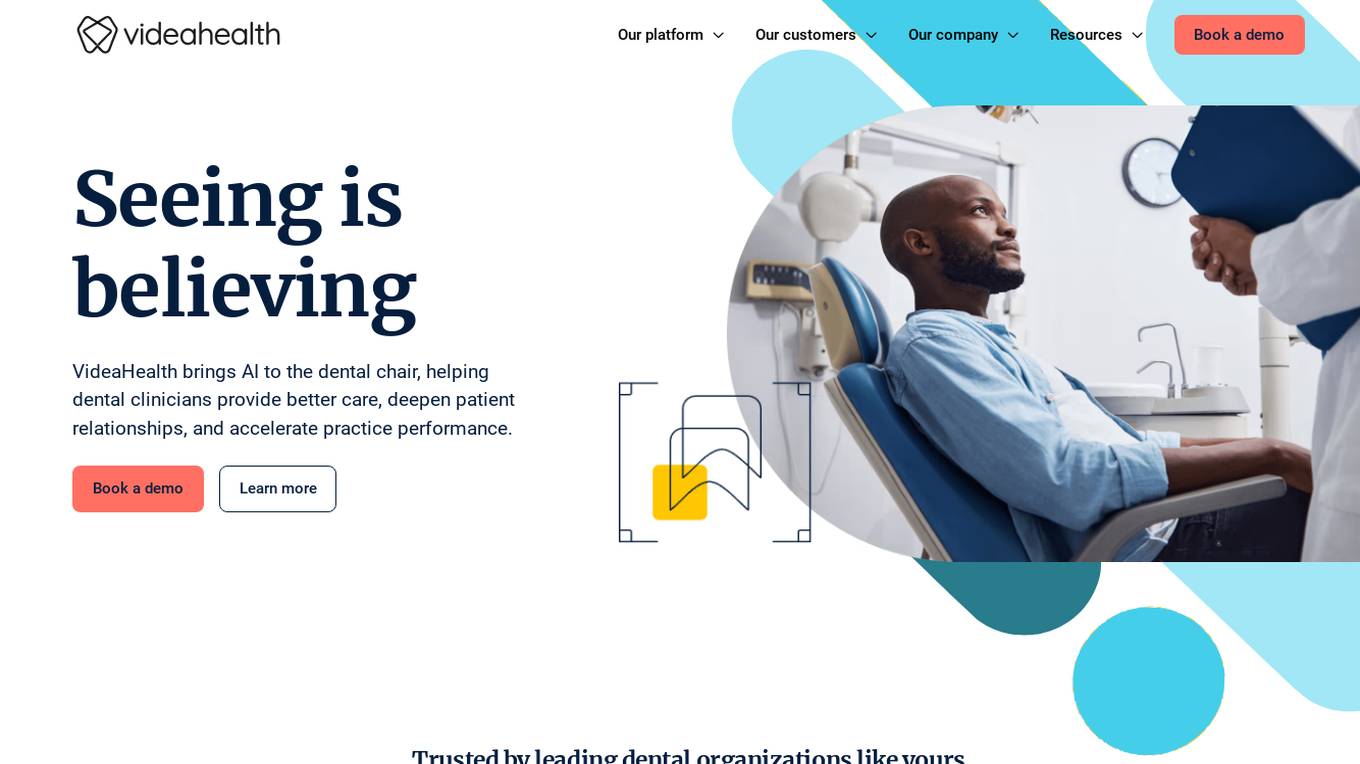

VideaHealth

VideaHealth is a dental AI platform trusted by dentists and DSOs. It enhances diagnostics and streamlines workflows using clinical AI to identify and convert treatments across major oral conditions. The platform combines practice management system data with AI insights to elevate patient care and empower dental practices. VideaHealth offers advanced FDA-cleared detection algorithms to detect suspect diseases, provides AI-powered insights for data-driven decisions, and delivers real-time chairside assistance to dentists.

DORA

DORA is a research program by Google Cloud that focuses on understanding the capabilities driving software delivery and operations performance. It helps teams apply these capabilities to enhance organizational performance. The program introduces the DORA AI Capabilities Model, identifying key technical and cultural practices that amplify the positive impacts of AI on performance. DORA offers resources, guides, and tools like the DORA Quick Check to help organizations meet their software delivery goals.

Cuecard

Cuecard is an AI-powered sales co-pilot tool designed to revolutionize the sales process by providing AI-driven knowledge and personalized experiences to help sales teams close deals faster. It offers features such as interactive outreach, efficient research, real-time answers, centralized knowledge access, and improved sales velocity. Cuecard is trusted by leading brands of all sizes and offers a live demo for users to experience its innovative features firsthand.

Jochem

Jochem is an AI tool designed to provide accurate answers quickly and enhance knowledge on-the-go. It helps users get instant answers to their questions, connects them with experts within the company, and continuously learns to improve performance. Jochem eliminates the need to search through files and articles by offering a smart matching system based on expertise. It also allows users to easily add and update the knowledge base, ensuring full control and transparency.

Convin

Convin is an omnichannel contact center platform powered by conversation intelligence. It offers a full-stack conversations QA platform for contact centers, AI learning management system for faster agent onboarding, real-time agent assist for improved conversions, automated agent coaching for personalized training, supervisor assist for tracking and assistance, and insights to collect 100% of customer intelligence. The platform also provides automated QA to audit and score customer conversations, analytics for quality management, and a mobile app for on-the-go access. Convin helps businesses in various industries like sales, support, compliance, collection, retention, healthtech, fintech, insurtech, edtech, real estate, hospitality & travel, and BPO to enhance customer interactions and drive revenue.

promptsplitter.com

The website promptsplitter.com encountered a Cloudflare Tunnel error, preventing access to the requested page. Users are advised to try again in a few minutes. The site is hosted on the Cloudflare network, and the error may be due to configuration issues. The error message provides guidance for both visitors and website owners on potential solutions to resolve the issue.

Offline for Maintenance

The website is currently offline for maintenance. It is undergoing updates and improvements to enhance user experience. Please check back later for the latest information and services.

Server Error Analyzer

The website is experiencing a 500 Internal Server Error, which indicates a problem with the server hosting the website. This error message is generated by the server when it is unable to fulfill a request from a client. The OpenResty software may be involved in the server configuration. Users encountering this error should contact the website administrator for assistance in resolving the issue.

1 - Open Source AI Tools

llm_note

The llm_note repository contains detailed analysis of transformer models, language model compression, inference and deployment, high-performance computing, and system optimization methods. It includes discussions of algorithms, frameworks, and performance analysis related to large language models and high-performance computing. The repository serves as a resource for understanding and optimizing language models and computing systems.

20 - OpenAI GPTs

FAANG.AI

Get into FAANG. Practice with an AI expert in algorithms, data structures, and system design. Do a mock interview and improve.

High-Quality Review Analyzer

Analyzes review-type web content and gives actionable feedback based on Google's Reviews System guidelines and Google's Quality Rater Guidelines.

Design System Technical Specialist

Expert in Technical Design System Foundations and Components

TB Order Recommendation System

Given a set of parameters, provides a set of order recommendations.

Government Contract Guidance System

This GPT helps navigate the world of government contract procurement ... and will guide and advise you through the process.

Design Transformer

Design Transformer delivers a concise, expert analysis of key design system components, blending global trends and professional insights for a comprehensive overview.

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.