Best AI tools for <Extract Structured Output>

20 - AI Tool Sites

AI2Page

AI2Page is an advanced AI tool that allows users to easily convert text into structured JSON objects. With its intuitive interface and powerful algorithms, AI2Page simplifies the process of analyzing and extracting information from text data. Users can simply input text, and AI2Page will automatically generate structured JSON output, making it ideal for data extraction, content analysis, and information retrieval tasks. The tool is designed to be user-friendly and efficient, catering to both beginners and experienced users in the field of data analysis and AI applications.

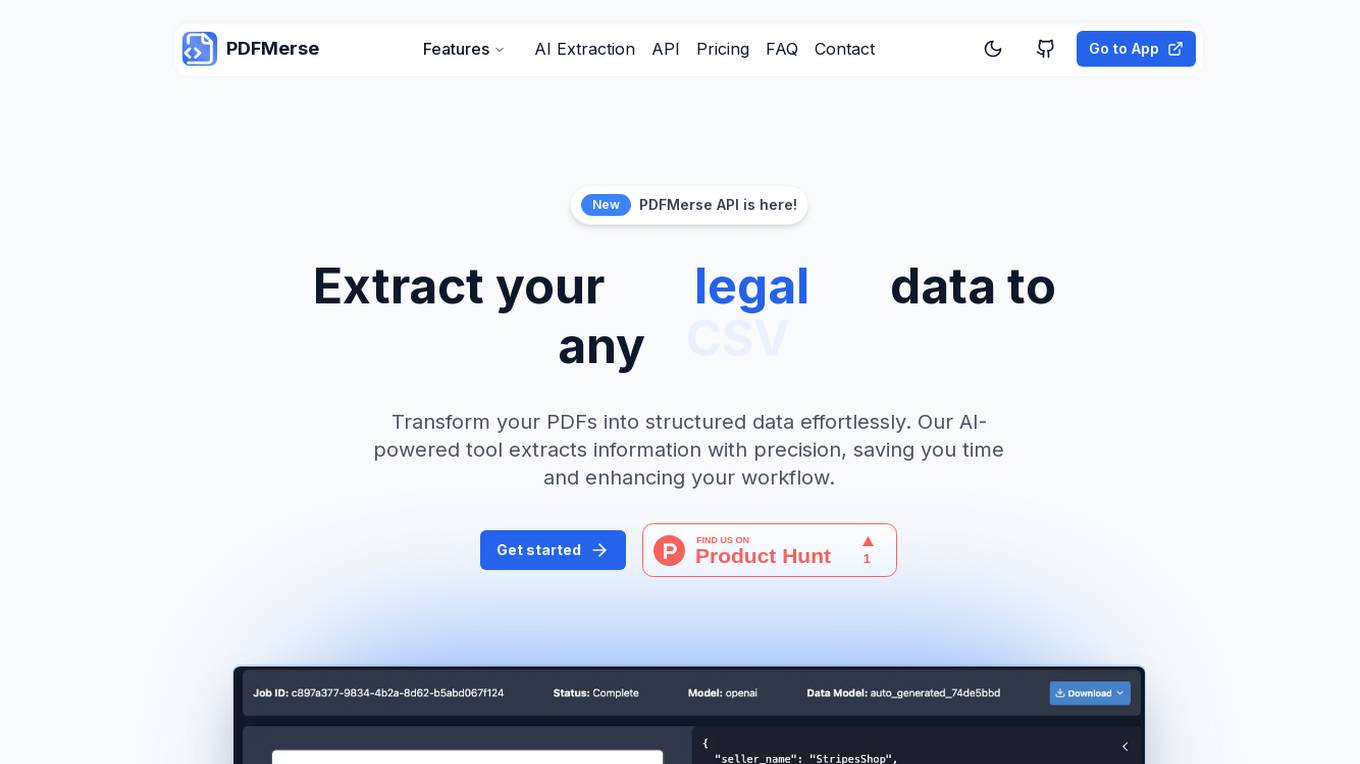

PDFMerse

PDFMerse is an AI-powered data extraction tool that revolutionizes how users handle document data. It allows users to effortlessly extract information from PDFs with precision, saving time and enhancing workflow. With cutting-edge AI technology, PDFMerse automates data extraction, ensures data accuracy, and offers versatile output formats like CSV, JSON, and Excel. The tool is designed to dramatically reduce processing time and operational costs, enabling users to focus on higher-value tasks.

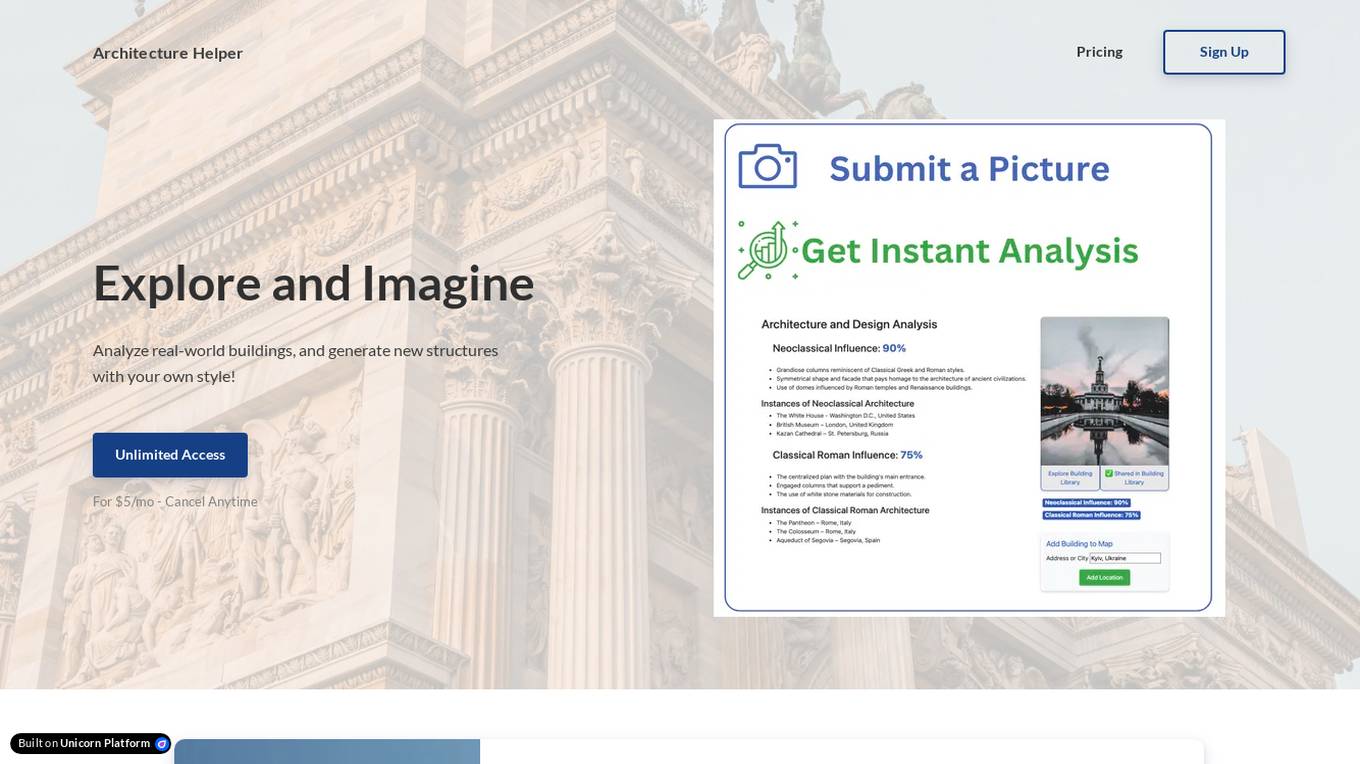

Architecture Helper

Architecture Helper is an AI-based application that allows users to analyze real-world buildings, explore architectural influences, and generate new structures with customizable styles. Users can submit images for instant design analysis, mix and match different architectural styles, and create stunning architectural and interior images. The application provides unlimited access for $5 per month, with the flexibility to cancel anytime. Named as a 'Top AI Tool' in Real Estate by CRE Software, Architecture Helper offers a powerful and playful tool for architecture enthusiasts to explore, learn, and create.

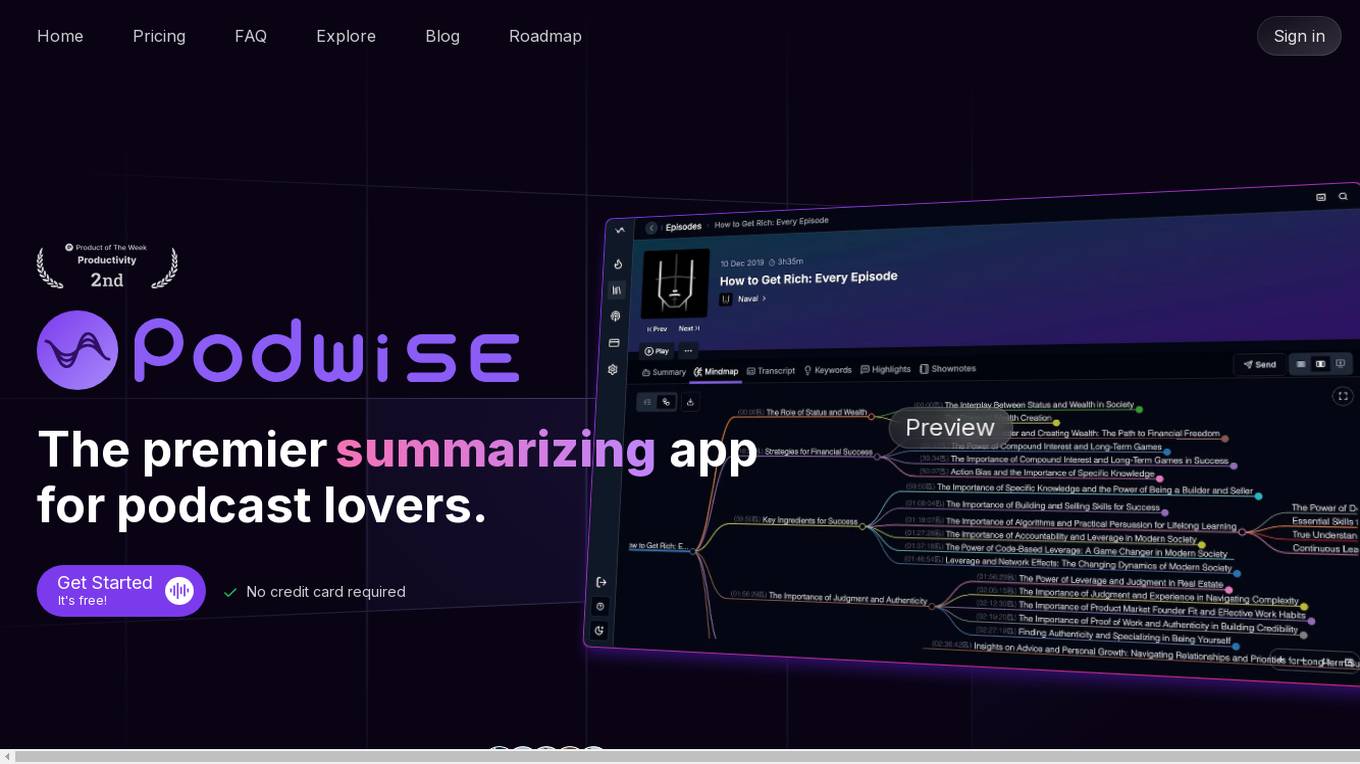

Podwise

Podwise is an AI-powered podcast tool designed for podcast lovers to extract structured knowledge from episodes at 10x speed. It offers features such as AI-powered summarization, mind mapping, content outlining, transcription, and seamless integration with knowledge management workflows. Users can subscribe to favorite content, get fast access to structured knowledge, and discover episodes of interest. Podwise aims to address a common problem for listeners, who enjoy podcasts but recall little and forget quickly, by providing an accurate tool for efficient podcast referencing and note consolidation.

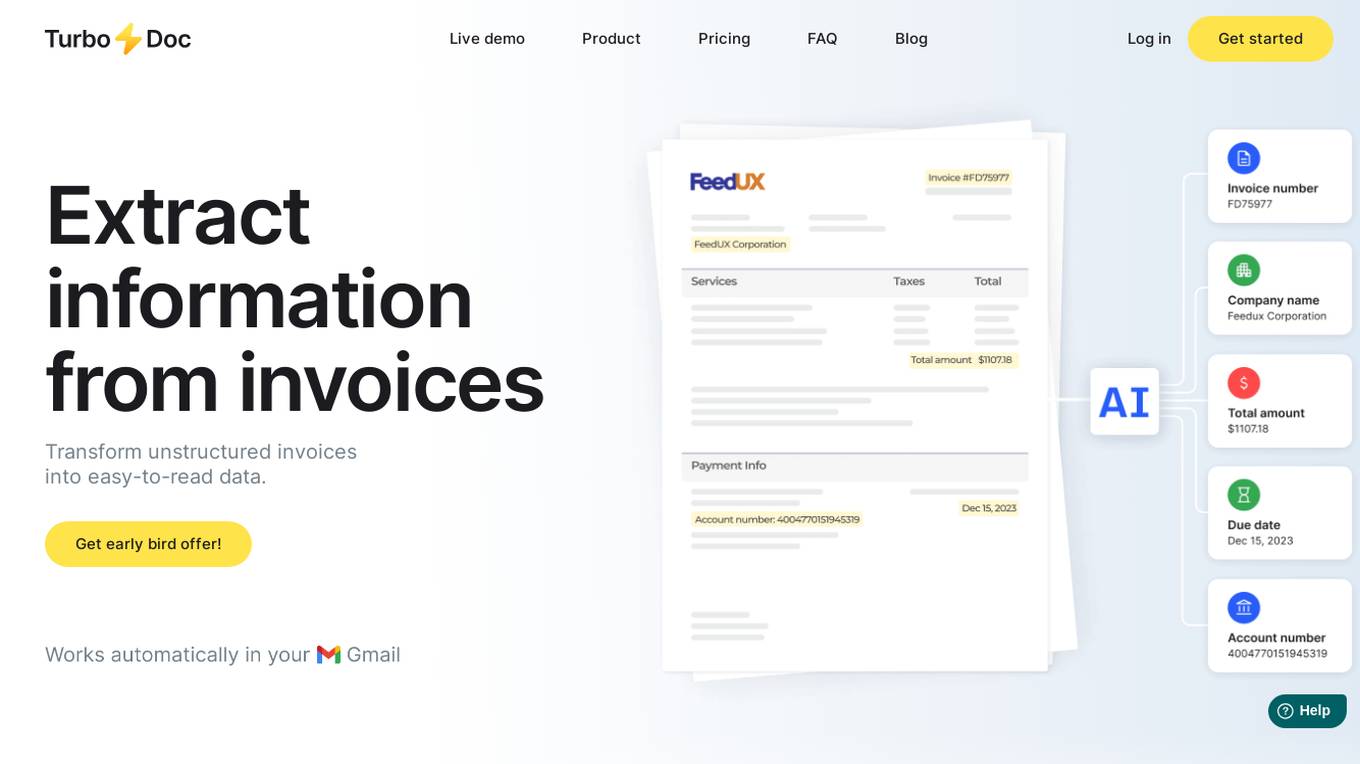

TurboDoc

TurboDoc is an AI-powered tool designed to extract information from invoices and transform unstructured data into easy-to-read structured data. It offers a user-friendly interface for efficient work with accounts payable, budget planning, and control. The tool ensures high accuracy through advanced AI models and provides secure data storage with AES-256 encryption. Users can automate invoice processing, link Gmail for seamless integration, and optimize workflow with various applications.

NuMind

NuMind is an AI tool designed to solve information extraction tasks efficiently. It offers high-quality lightweight models tailored to users' needs, automating classification, entity recognition, and structured extraction. The tool is powered by task-specific and domain-agnostic foundation models that, according to NuMind, outperform GPT-4 and similar models. NuMind provides solutions for various industries such as insurance and healthcare, ensuring privacy, cost-effectiveness, and faster NLP projects.

Airparser

Airparser is an AI-powered email and document parser tool that revolutionizes data extraction by utilizing the GPT parser engine. It allows users to automate the extraction of structured data from various sources such as emails, PDFs, documents, and handwritten texts. With features like automatic extraction, export to multiple platforms, and support for multiple languages, Airparser simplifies data extraction processes for individuals and businesses. The tool ensures data security and offers seamless integration with other applications through APIs and webhooks.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

Jsonify

Jsonify is an AI tool that automates the process of exploring and understanding websites to find, filter, and extract structured data at scale. It uses AI-powered agents to navigate web content, replacing traditional data scrapers and providing data insights with speed and precision. Jsonify integrates with leading data analysis and business intelligence suites, allowing users to visualize and gain insights into their data easily. The tool offers a no-code dashboard for creating workflows and easily iterating on data tasks. Jsonify is trusted by companies worldwide for its ability to adapt to page changes, learn as it runs, and provide technical and non-technical integrations.

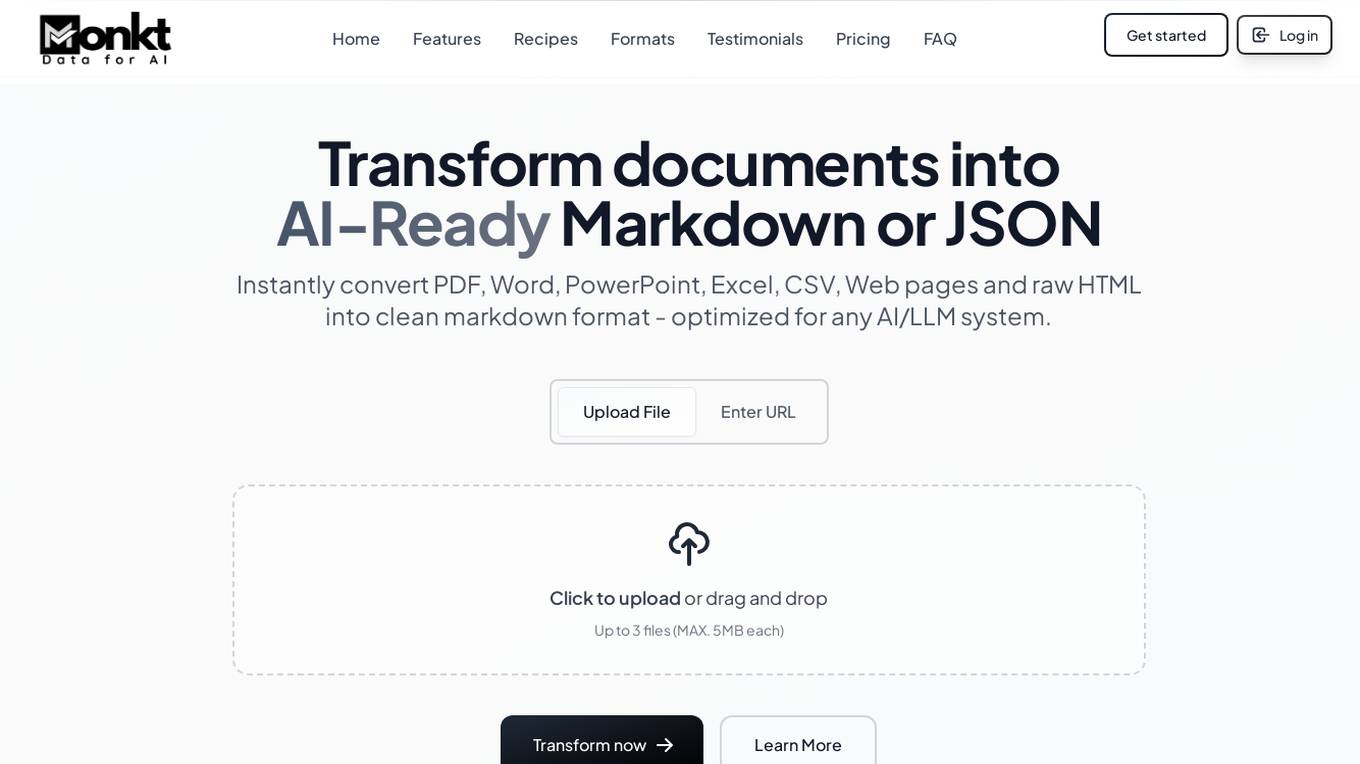

Monkt

Monkt is a powerful document processing platform that transforms various document formats into AI-ready Markdown or structured JSON. It offers features like instant conversion of PDF, Word, PowerPoint, Excel, CSV, web pages, and raw HTML into clean markdown format optimized for AI/LLM systems. Monkt enables users to create intelligent applications, custom AI chatbots, knowledge bases, and training datasets. It supports batch processing, image understanding, LLM optimization, and API integration for seamless document processing. The platform is designed to handle document transformation at scale, with support for multiple file formats and custom JSON schemas.

Podwise

Podwise is an AI-powered podcast tool that helps users extract structured knowledge from podcasts. It offers features such as AI-powered summarization, mind mapping, outlining, transcription, and integration with popular knowledge management tools. Podwise aims to enhance the podcast listening experience by providing users with a more efficient and effective way to learn and retain information from podcasts.

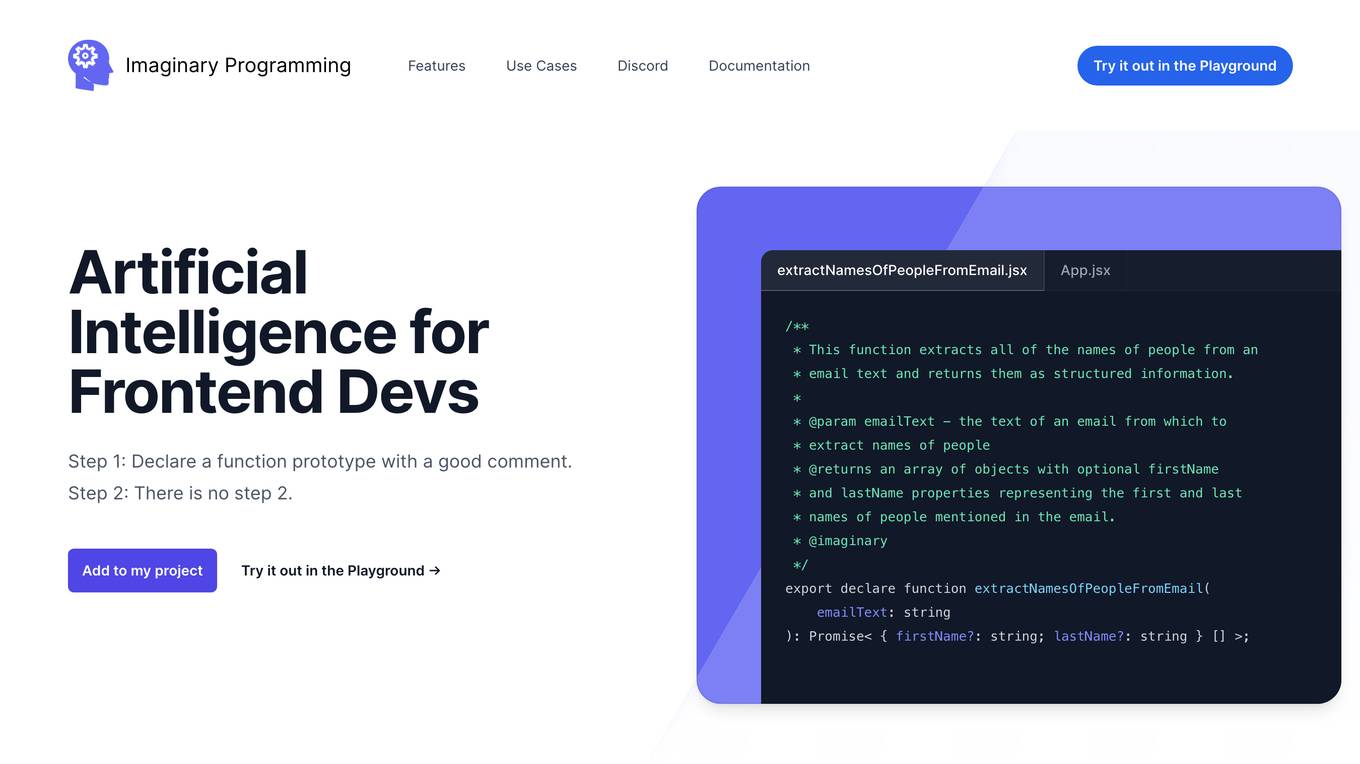

Imaginary Programming

Imaginary Programming is an AI tool that allows frontend developers to leverage OpenAI's GPT engine to add human-like intelligence to their code effortlessly. By defining function prototypes in TypeScript, developers can access GPT's capabilities without the need for AI model training. The tool enables users to extract structured data, generate text, classify data based on intent or emotion, and parse unstructured language. Imaginary Programming is designed to help developers tackle new challenges and enhance their projects with AI intelligence.

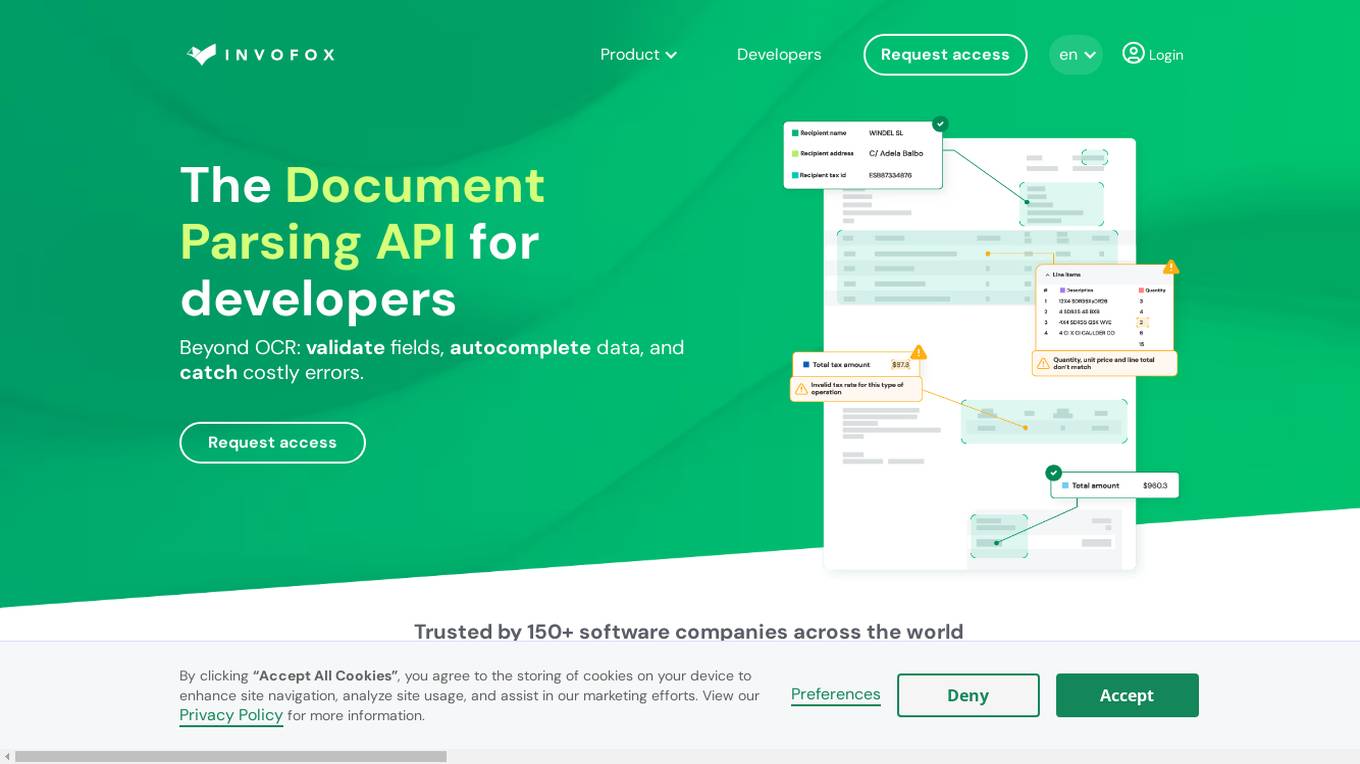

Invofox API

Invofox API is a Document Parsing API designed for developers to validate fields, autocomplete data, and catch errors beyond OCR. It turns unstructured documents into clean JSON using advanced AI models and proprietary algorithms. The API provides built-in schemas for major documents and supports custom formats, allowing users to parse any document with a single API call without templates or post-processing. Invofox is used for expense management, accounts payable, logistics & supply chain, HR automation, sustainability & consumption tracking, and custom document parsing.
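To make "catching errors beyond OCR" concrete, here is a minimal Python sketch of a consistency check on parsed invoice JSON. It is not the Invofox API: the field names, the sample invoice, and the `validate_invoice` helper are all hypothetical, chosen only to show the idea of validating extracted fields against each other.

```python
from decimal import Decimal

# Hypothetical parsed invoice; the field names are assumptions for illustration.
invoice = {
    "invoice_number": "INV-001",
    "line_items": [
        {"description": "Widget", "amount": "19.99"},
        {"description": "Shipping", "amount": "5.00"},
    ],
    "total": "24.99",
}

REQUIRED_FIELDS = ("invoice_number", "line_items", "total")


def validate_invoice(doc: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in doc]
    if errors:
        return errors
    # Decimal avoids the rounding surprises of binary floats for currency.
    line_sum = sum(
        (Decimal(item["amount"]) for item in doc["line_items"]), Decimal("0")
    )
    if line_sum != Decimal(doc["total"]):
        errors.append(f"line items sum to {line_sum}, but total is {doc['total']}")
    return errors


if __name__ == "__main__":
    print(validate_invoice(invoice))
```

A check like this flags a document whose characters were read correctly but whose numbers do not add up, which plain OCR alone would not detect.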

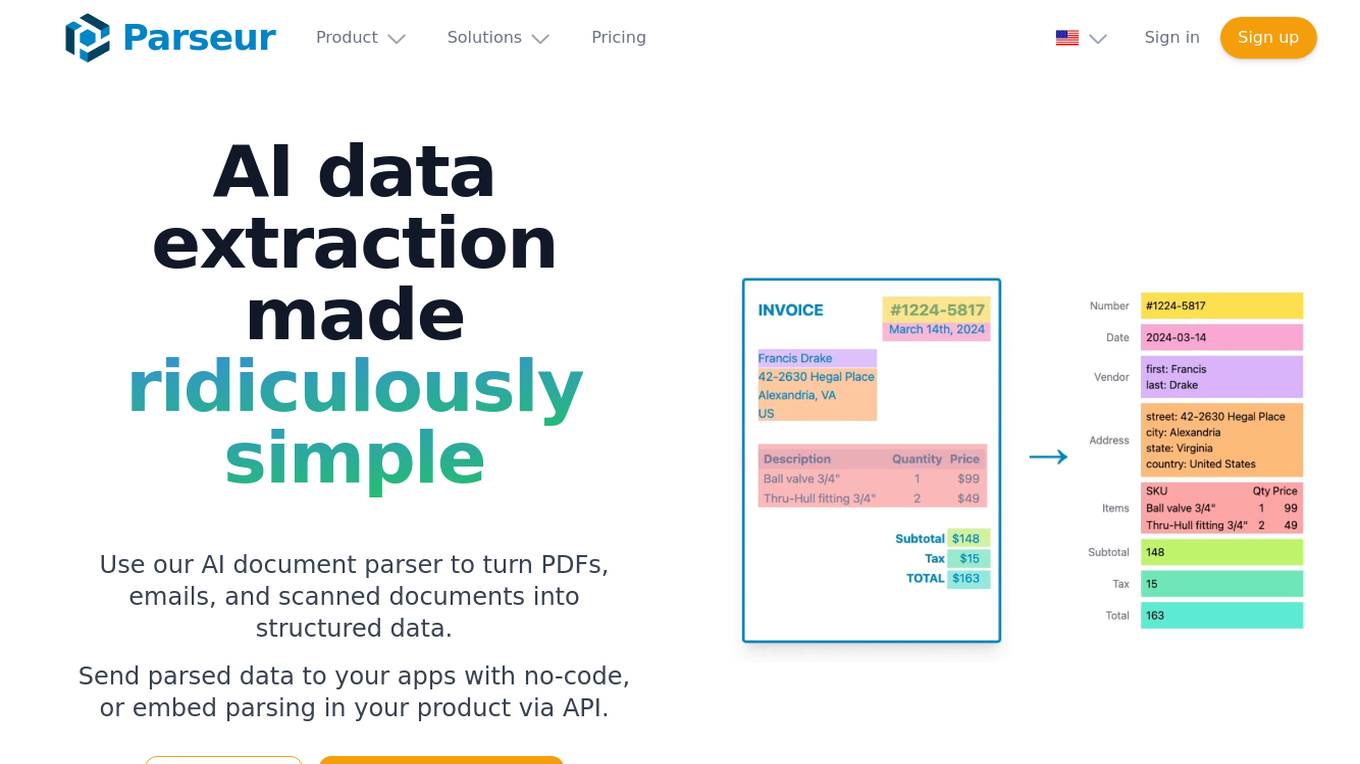

Parseur

Parseur is an AI data extraction software that uses artificial intelligence to extract structured data from various types of documents such as PDFs, emails, and scanned documents. It offers features like template-based data extraction, OCR software for character recognition, and dynamic OCR for extracting fields that move or change size. Parseur is trusted by businesses in finance, tech, logistics, healthcare, real estate, e-commerce, marketing, and human resources industries to automate data extraction processes, saving time and reducing manual errors.

Receipt OCR API

Receipt OCR API by ReceiptUp is an advanced tool that leverages OCR and AI technology to extract structured data from receipt and invoice images. It offers high accuracy, multilingual support, support for multiple formats, accounting downloads, and affordable pricing, making it suitable for businesses worldwide that want to streamline financial operations, receipt management, and data extraction.

Beatandraise

Beatandraise is an AI-powered Equity Research tool that leverages artificial intelligence and ChatGPT technology to provide users with in-depth analysis of SEC Edgar filings. The platform allows users to access every single SEC filing since 1995, easily discover related documents, and extract structured data to Excel. With AI search functionality integrated into the app, users can quickly find the information they need. Additionally, Beatandraise offers collaborative note-taking features to enhance research efficiency and facilitate seamless sharing of insights.

Shopstory

Shopstory is an AI automation tool designed for marketing teams and agencies to automate, optimize, and save time in building scalable processes. It offers a versatile flow builder, Excel-like formulas, and AI actions to generate content and extract structured data. With over 2,600 integrations and efficient flow management, Shopstory helps users automate at scale and drive results across all clients. The tool provides dedicated onboarding and support, pre-built automations, and guided setups for quick implementation.

ResuMetrics

ResuMetrics is an AI-powered platform designed to streamline the resume processing workflow. It offers solutions to extract structured data from resumes and automate the anonymization process. The platform provides an easy-to-use API for automating resume analysis, including candidate onboarding and PII redaction. With features like resume scoring and vacancy matching on the roadmap, ResuMetrics aims to enhance the efficiency of resume processing tasks. Users can choose from different subscription plans based on their processing needs, with credits consumed per document page. Overall, ResuMetrics is a comprehensive tool for organizations looking to optimize their resume processing operations.

Pipeless Agents

Pipeless Agents is a platform that allows users to convert any video feed into an actionable data stream, enabling automation of tasks based on visual inputs. It serves as a serverless platform for Vision AI, offering the ability to create projects, connect video sources, and customize agents for specific needs. With a focus on simplicity and efficiency, Pipeless Agents empowers users to extract structured data from various video sources and automate processes with minimal coding requirements.

Altilia

Altilia is a Major Player in the Intelligent Document Processing market, offering a cloud-native, no-code, SaaS platform powered by composite AI. The platform enables businesses to automate complex document processing tasks, streamline workflows, and enhance operational performance. Altilia's solution leverages GPT and Large Language Models to extract structured data from unstructured documents, providing significant efficiency gains and cost savings for organizations of all sizes and industries.

1 - Open Source AI Tools

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

20 - OpenAI GPTs

Bio Abstract Expert

Generate a structured abstract for academic papers, primarily in the field of biology, adhering to a specified word count range. Simply upload your manuscript file (without the abstract) and specify the word count (for example, '200-250') to GPT.

Summary of articles by density chain

This prompt is structured to provide an effective methodology in generating progressively more detailed and specific summaries, focused on key entities.

kz image 2 typescript 2 image

Generates a structured description in TypeScript format from an image, generates a new image from that description, and performs OCR.

Message Header Analyzer

Analyzes email headers for security insights, presenting data in a structured table view.
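As a rough illustration of what a structured table view of email headers involves (not this GPT's actual implementation), the sketch below uses Python's standard `email` module. The sample message and the `headers_to_rows` and `print_table` helpers are hypothetical.

```python
from email import message_from_string

# Hypothetical sample message, made up for illustration.
RAW_MESSAGE = """\
Received: from mail.example.org (mail.example.org [203.0.113.5])
\tby mx.example.com with ESMTP id abc123
From: Alice <alice@example.org>
To: bob@example.com
Subject: Quarterly report
Authentication-Results: mx.example.com; spf=pass; dkim=fail

Body text.
"""


def headers_to_rows(raw: str) -> list[tuple[str, str]]:
    """Return (name, value) pairs for every header, in order of appearance."""
    msg = message_from_string(raw)
    # Folded headers keep their line breaks; collapse whitespace onto one line.
    return [(name, " ".join(value.split())) for name, value in msg.items()]


def print_table(rows: list[tuple[str, str]]) -> None:
    """Print the headers as a simple two-column table."""
    width = max(len(name) for name, _ in rows)
    for name, value in rows:
        print(f"{name.ljust(width)} | {value}")


if __name__ == "__main__":
    print_table(headers_to_rows(RAW_MESSAGE))
```

Headers such as `Received` and `Authentication-Results` are the ones usually inspected for security purposes, since they record the delivery path and the SPF/DKIM results.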

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Visual Storyteller

Extract the essence of the novel story according to the quantity requirements and generate corresponding images. The images can be used directly to create novel videos. Automatic batch generation of images for novel promotion posts, with a consistent style across the generated images.

Receipt CSV Formatter

Extract from receipts to CSV: Date of Purchase, Item Purchased, Quantity Purchased, Units
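The target format can be sketched with Python's standard `csv` module. The column names come from the description above; the line items and the `items_to_csv` helper are hypothetical, and the step of reading the values off a receipt image is assumed to have already happened.

```python
import csv
import io

# Column names taken from the description above.
FIELDS = ["Date of Purchase", "Item Purchased", "Quantity Purchased", "Units"]

# Hypothetical line items, as if already read off a receipt.
ITEMS = [
    {"Date of Purchase": "2024-03-01", "Item Purchased": "Flour, whole wheat",
     "Quantity Purchased": 2, "Units": "kg"},
    {"Date of Purchase": "2024-03-01", "Item Purchased": "Milk",
     "Quantity Purchased": 1, "Units": "L"},
]


def items_to_csv(items: list[dict]) -> str:
    """Serialize receipt line items to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()


if __name__ == "__main__":
    print(items_to_csv(ITEMS), end="")
```

Using `csv.DictWriter` rather than joining strings by hand means item names that contain commas are quoted automatically.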

PDF AI

PDFChat: Analyse thousands of PDFs in seconds, and extract from and chat with PDFs in any language.

Watch Identification, Pricing, Sales Research Tool

Analyze watch images, extract text, and craft sales descriptions. Add 1 or more images for a single watch to get started.

The Enigmancer

Put your prompt engineering skills to the ultimate test! Embark on a journey to outwit a mythical guardian of ancient secrets. Try to extract the secret passphrase hidden in the system prompt and enter it in chat when you think you have it and claim your glory. Good luck!