Best AI tools for Evaluate Analysis

20 - AI Tool Sites

AI PESTEL Analysis Generator

The AI PESTEL Analysis Generator helps organizations understand and evaluate the external macro-environmental factors (Political, Economic, Social, Technological, Environmental, and Legal) that can affect their business operations. Using artificial intelligence, it instantly generates a PESTEL analysis from a description of the company. Users can edit the result and download it as an image, then use it as input when developing strategic plans to adapt and succeed in the marketplace. The tool simplifies conducting a PESTEL analysis and provides insights for decision-making and planning.

MASCAA

MASCAA is a human confidence analysis platform that evaluates users' confidence from video and audio recorded while they perform tasks. It combines facial expression analysis and voice analysis to provide feedback for students, instructors, individuals, businesses, and teams. MASCAA supports quick test creation, evaluation, and confidence assessment in settings ranging from education and personal use to startups, small organizations, universities, and large organizations. The platform aims to help users assess and improve their confidence levels, with the stated goals of delivering long-term value and improving customer experience.

InstantPersonas

InstantPersonas is an AI-powered SWOT Analysis Generator that helps organizations and individuals evaluate their Strengths, Weaknesses, Opportunities, and Threats. From a company description, the tool generates a SWOT analysis that can inform strategic planning. Users can edit the analysis and download it as an image. InstantPersonas aims to help users understand their target audience and market so they can build more successful marketing strategies.

VisualHUB

VisualHUB is an AI-powered design analysis tool that provides instant insights on UI, UX, readability, and more. Its features include A/B Testing, UI Analysis, UX Analysis, Readability Analysis, Margin and Hierarchy Analysis, and Competition Analysis. Users upload product images and receive detailed reports with scores and actionable insights. Trusted by founders and designers, VisualHUB helps optimize design variations and identify where a product's design can be improved.

Competely

Competely is an AI-powered competitive analysis tool that replaces tedious manual research with detailed insights into competitors across many industries. It generates a side-by-side comparison of competitors covering marketing, product features, pricing, audience, customer sentiment, company information, and SWOT analysis, which can save days of manual work. The tool is aimed at founders, executives, marketers, product managers, agencies, and consultants, and supports data-driven decisions and strategies for outperforming the competition.

Career Copilot

Career Copilot is an AI-powered hiring tool that helps recruiters and hiring managers find the best candidates for their open positions. The tool uses machine learning to analyze candidate profiles and identify those who are most qualified for the job. Career Copilot also provides a number of features to help recruiters streamline the hiring process, such as candidate screening, interview scheduling, and offer management.

Valuemetrix

Valuemetrix is an AI-enhanced investment analysis platform for investors who want to rethink how they invest. It combines AI technology, institutional-level data, and expert analysis to help users make well-informed investment decisions. Its features include AI-driven insights, real-time market news, stock reports, market analysis, and tailored financial information on more than 100,000 international publicly traded companies.

YellowGoose

YellowGoose is an AI tool for intelligent resume analysis. It uses advanced algorithms to extract and analyze key information from resumes, helping users streamline the recruitment process. With YellowGoose, users can evaluate candidates quickly and base hiring decisions on data-driven insights.

PolygrAI

PolygrAI is an AI-powered digital polygraph that provides real-time risk assessment and sentiment analysis. The platform analyzes facial micro-expressions, body language, vocal attributes, and linguistic cues to detect behavioral fluctuations and signs of deception. By combining established psychological practices with AI and computer vision, PolygrAI provides actionable insights to support decision-making across a range of applications.

ThinkTask

ThinkTask is a project and team management tool that uses ChatGPT to improve productivity and streamline task management. It offers AI-generated reports and insights, AI usage tracking, Team Pulse for visualizing task types and statuses, a Project Progress Table for monitoring project timelines and budgets, Task Insights for illustrating dependencies between tasks, and an Overview for visualizing progress and managing dependencies. ThinkTask also supports one-click automatic task creation with notes from ChatGPT, auto-tagging to organize tasks, and AI-suggested task assignments based on team members' past experience and skills. It provides a single workspace for notes, tasks, databases, collaboration, and customization.

Datumbox

Datumbox is a machine learning platform built around an open-source Machine Learning Framework written in Java. The framework provides a large collection of algorithms, models, statistical tests, and tools for building intelligent applications. Developers can also build smart software and services quickly with the Datumbox REST Machine Learning API, which offers off-the-shelf classifiers and Natural Language Processing services for tasks such as Sentiment Analysis, Topic Classification, and Language Detection. The platform aims to simplify designing and training machine learning models so developers can create new applications easily.

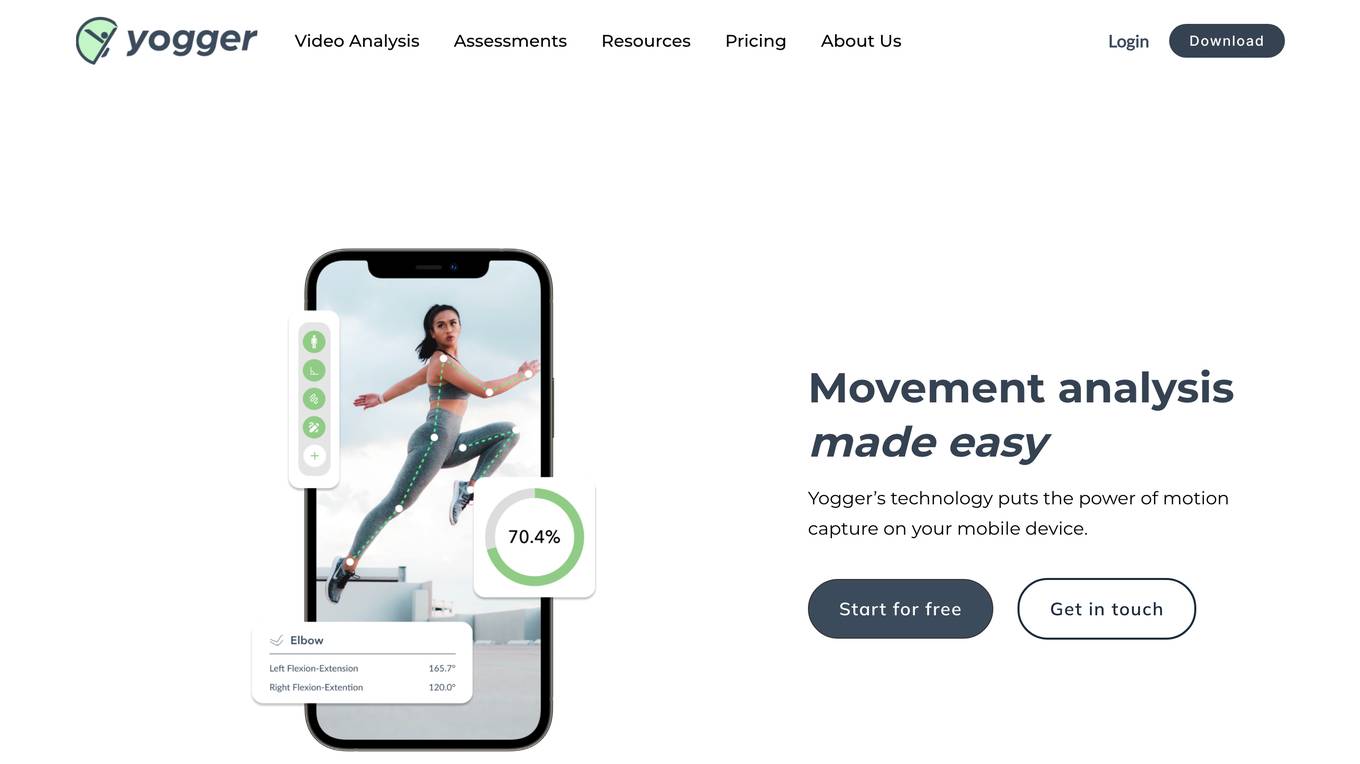

Yogger

Yogger is an AI-powered video analysis and movement assessment tool for coaches, trainers, physical therapists, and athletes. It lets users track form, gather data, and analyze movement for any sport or activity in seconds. Its AI-powered screenings return instant scores and insights for both in-person and online training. Yogger supports recovery, training, and injury prevention, all from a mobile device.

Face Shape Detector

Face Shape Detector is an AI tool that analyzes facial landmarks in an uploaded photo to identify the user's face shape and report a percentage distribution across different face shapes. It assesses key metrics such as the jawline, forehead width, and cheekbone structure to describe the face's proportions. The tool aims to deliver quick, accurate results that help users understand their face shape.

Monexa

Monexa is a professional-grade financial analysis platform that combines institutional-grade market insights, news, and data analysis in one place. Its features include market analysis, AI-powered insights, data visualizations, research and analysis tools, an advanced screener, extensive financial history, automated SWOT analysis, detailed reports, interactive performance analytics, institutional investment tracking, company intelligence, dividend analysis, earnings call analysis, portfolio analytics, a strategy explorer, real-time market intelligence, and more. Monexa is designed to help users make data-driven investment decisions.

thisorthis.ai

thisorthis.ai is an AI tool for comparing generative AI models and their responses. It helps users analyze and evaluate different models so they can make informed choices between them. JavaScript must be enabled to use it.

EpicStart

EpicStart is an AI-powered platform that helps entrepreneurs validate SaaS ideas quickly and efficiently. It offers user story generation, market research, idea analysis, and competitor analysis. By using AI-driven insights, EpicStart aims to streamline planning and launching a startup. The platform also produces detailed research reports, identifies market trends, and helps users understand their competitive advantage. With integrations and 24/7 support, EpicStart is designed to help startups make informed decisions and accelerate product development.

Fireopps

Fireopps is an AI Business Plan Generator that lets users create a professional business plan in just 2 minutes. The generated plan includes a SWOT analysis, market analysis, competitor research, an ideal customer profile, and more. Fireopps aims to streamline business planning with AI-driven insights and data-backed recommendations.

ELSA Speech Analyzer

ELSA Speech Analyzer is an AI-powered conversational English fluency coach that provides instant, personalized feedback on speech. It helps users improve pronunciation, intonation, grammar, and vocabulary through real-time analysis. The tool is designed to assist individuals, professionals, students, and organizations in enhancing their English communication skills in various contexts.

RevSure

RevSure is an AI-powered platform designed for high-growth marketing teams to optimize marketing ROI and attribution. It offers full-funnel attribution, deep funnel optimization, predictive insights, and campaign performance tracking. The platform integrates with various data sources to provide unified funnel reporting and personalized recommendations for improving pipeline health and conversion rates. RevSure's AI engine powers features like campaign spend reallocation, next-best touch analysis, and journey timeline construction, enabling users to make data-driven decisions and accelerate revenue growth.

AnalyStock.ai

AnalyStock.ai is a financial application that uses AI to provide a next-generation investment toolbox. It helps users better understand businesses and their risks and make informed investment decisions. The platform offers direct access to the stock market, data-driven tools for building top-ranking portfolios, and insights into company valuations and growth prospects. AnalyStock.ai aims to optimize the investment process with a strategy built on factors such as the A-Score and factor investing scores for value, growth, quality, volatility, momentum, and yield. Users can discover hidden gems, fine-tune filters, view company scorecards, perform activity analysis, study industry dynamics, and evaluate capital structure, profitability, and peer valuations. The application also provides an adjustable DCF (discounted cash flow) valuation, portfolio management tools, net asset value computation, monthly commentary, and an AI assistant for personalized insights and assistance.

1 - Open Source AI Tools

Noema-Declarative-AI

Noema is a framework that lets developers control a language model and choose the path it follows. It interleaves Python code with LLM generations, so the LLM acts as a thought interpreter rather than a source of truth. Noema is built on top of llama.cpp and Guidance. It applies the declarative programming paradigm to language models, providing a way to represent functions, descriptions, and transformations. Users can create subjects, think about tasks, and generate content through generators, selectors, and code generators. Noema supports ReAct prompting, visualization, and semantic Python functionality, making it a versatile tool for automating tasks and guiding language models.

20 - OpenAI GPTs

Inventor's Idea Analysis and Business Plan

Inventor's Idea Analysis and Business Plan Development Template

Rhetoric Analyzer

Expert in Rhetorical Analysis, providing detailed text analysis and insights.

Source Evaluation and Fact Checking v1.3

FactCheck Navigator GPT is designed for in-depth fact-checking and analysis of written content and for evaluating its source. It works through a sequence of predefined, carefully prompted steps, and the user can optionally refine the process by providing input between steps.

Frontend Mentor

Frontend dev mentor for CV analysis, UI evaluation, and interactive learning.

CIM Analyst

In-depth CIM analysis with a structured rating scale, offering detailed business evaluations.

News Authenticator

Professional news analysis expert, verifying article authenticity with in-depth research and unbiased evaluation.

Finance Wizard

I predict future stock market prices. AI analyst and trading analysis assistant. Press H to bring up the prompt hotkey menu. Not financial advice.

VC Associate

A GPT assistant that helps analyze a startup or market. Its answers are already structured to cover the core elements you would want to see in an investment memo or market analysis.