Best AI tools for Tune Training Hyperparameters

20 - AI tool Sites

Kubeflow

Kubeflow is an open-source machine learning (ML) toolkit that makes deploying ML workflows on Kubernetes simple, portable, and scalable. It provides a unified interface for model training, serving, and hyperparameter tuning, and supports a variety of popular ML frameworks including PyTorch, TensorFlow, and XGBoost. Kubeflow is designed to be used with Kubernetes, a container orchestration system that automates the deployment, management, and scaling of containerized applications.
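As a rough illustration of what hyperparameter tuning involves, the sketch below runs a plain random search in standard-library Python. It is not Kubeflow code and uses no Kubeflow API. The `validation_loss` function is a hypothetical stand-in for a real train-and-evaluate run, and the search ranges are assumptions chosen for the example.

```python
import math
import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for a real train-and-evaluate run.

    The loss is lowest near learning_rate=1e-3 and batch_size=64.
    """
    lr_term = (math.log10(learning_rate) + 3.0) ** 2
    bs_term = (math.log2(batch_size) - 6.0) ** 2
    return lr_term + 0.1 * bs_term


def random_search(n_trials: int, seed: int = 0):
    """Sample configurations at random and keep the best one seen."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = {
            # Learning rate sampled log-uniformly between 1e-5 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


if __name__ == "__main__":
    config, loss = random_search(n_trials=50)
    print(f"best config: {config}, loss: {loss:.4f}")
```

In a real setup each trial would launch an actual training job, and a tuning service would typically run many trials in parallel rather than in a loop.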

madebymachines

madebymachines is an AI tool designed to assist users in various stages of the machine learning workflow, from data preparation to model development. The tool offers services such as data collection, data labeling, model training, hyperparameter tuning, and transfer learning. With a user-friendly interface and efficient algorithms, madebymachines aims to streamline the process of building machine learning models for both beginners and experienced users.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

Fine-Tune AI

Fine-Tune AI is a tool that lets users generate fine-tuning datasets from prompts. This can be useful for tasks such as improving the accuracy of machine learning models or creating new training data for AI applications.

prompteasy.ai

Prompteasy.ai is an AI tool that allows users to fine-tune AI models in less than 5 minutes. It simplifies the process of training AI models on user data, making it as easy as having a conversation. Users can fully customize GPT by fine-tuning it to meet their specific needs. The tool offers data-driven customization, interactive AI coaching, and seamless model enhancement, providing users with a competitive edge and simplifying AI integration into their workflows.

Sapien.io

Sapien.io is a decentralized data foundry that offers data labeling services powered by a decentralized workforce and a gamified platform. The platform provides high-quality training data for large language models through a human-in-the-loop labeling process, supplying the datasets needed to fine-tune performant AI models. Sapien combines AI and human intelligence to collect and annotate various data types for any model, offering customized data collection and labeling models across industries.

Cerebras

Cerebras is an AI tool that offers products and services related to AI supercomputers, cloud system processors, and applications for various industries. It provides high-performance computing solutions, including large language models, and caters to sectors such as health, energy, government, scientific computing, and financial services. Cerebras specializes in AI model services, offering state-of-the-art models and training services for tasks like multi-lingual chatbots and DNA sequence prediction. The platform also features the Cerebras Model Zoo, an open-source repository of AI models for developers and researchers.

Cerebras

Cerebras is a leading AI tool and application provider that offers cutting-edge AI supercomputers, model services, and cloud solutions for various industries. The platform specializes in high-performance computing, large language models, and AI model training, catering to sectors such as health, energy, government, and financial services. Cerebras empowers developers and researchers with access to advanced AI models, open-source resources, and innovative hardware and software development kits.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for various sectors including enterprise, government, and automotive industries. It offers solutions for training models, fine-tuning, generative AI, and model evaluations. Scale Data Engine and GenAI Platform enable users to leverage enterprise data effectively. The platform collaborates with leading AI models and provides high-quality data for public and private sector applications.

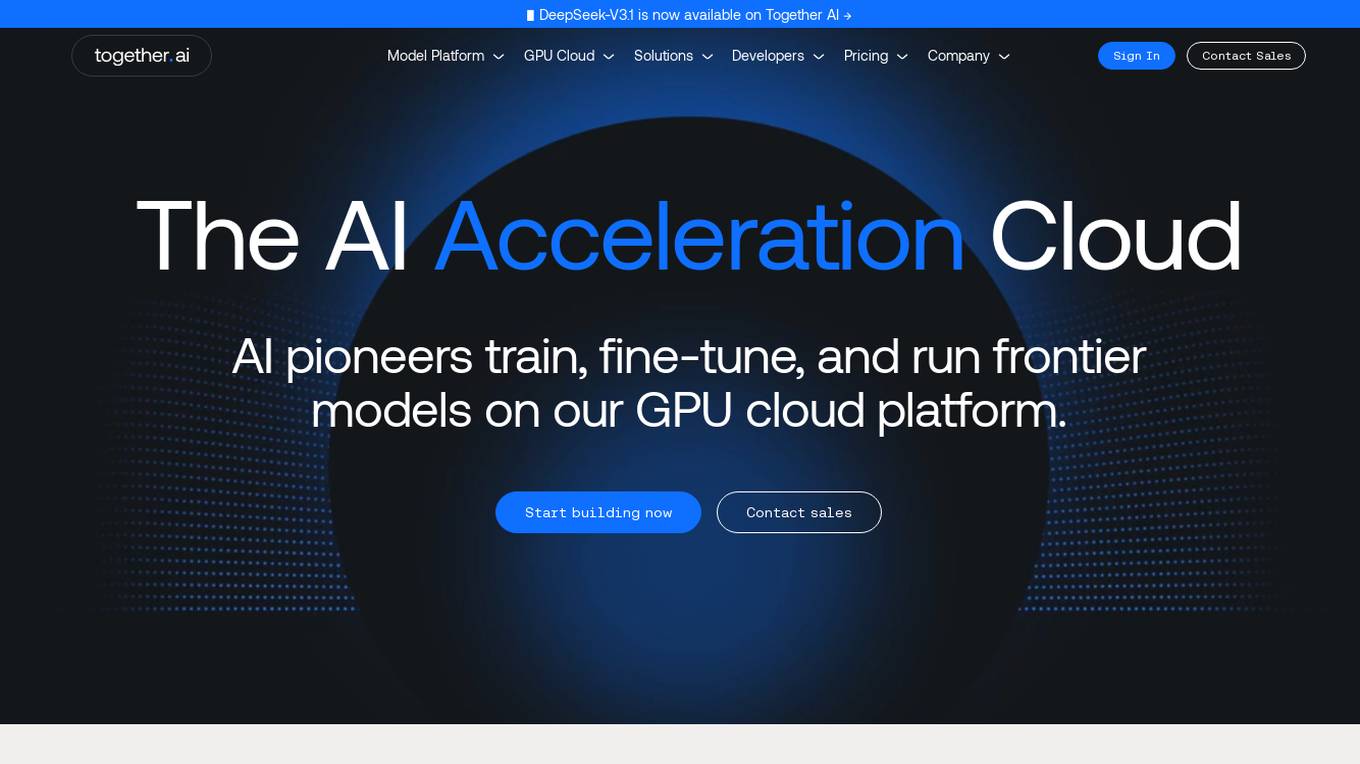

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

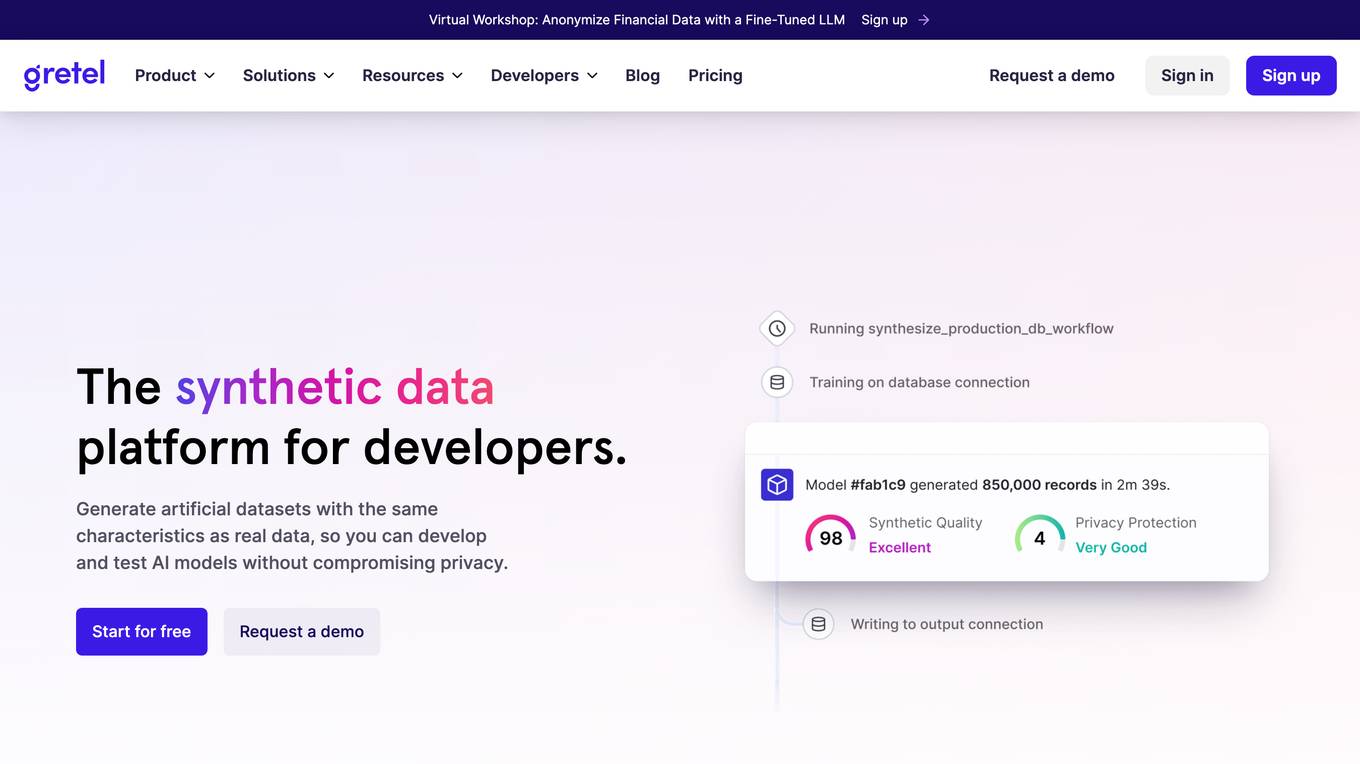

Gretel.ai

Gretel.ai is a synthetic data platform purpose-built for AI applications. It allows users to generate artificial, synthetic datasets with the same characteristics as real data, enabling the improvement of AI models without compromising privacy. The platform offers APIs for generating anonymized and safe synthetic data, training generative AI models, and validating models with quality and privacy scores. Users can deploy Gretel for enterprise use cases and run it on various cloud platforms or in their own environment.

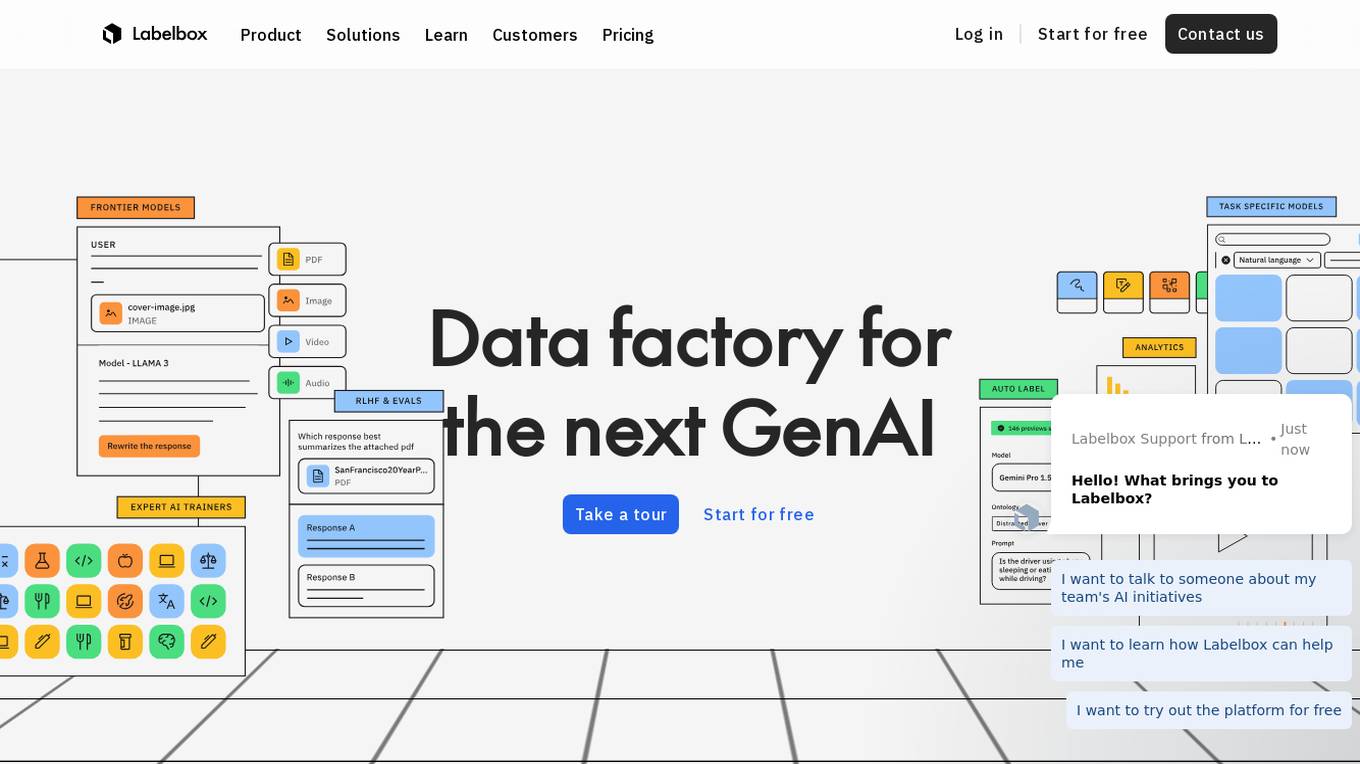

Labelbox

Labelbox is a data factory platform that helps AI teams manage data labeling, train models, and create better data with an internet-scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates global data labeling infrastructure and operations for AI workloads, providing a network of human experts for data labeling in various domains. The platform also includes AI-assisted alignment, data curation, model training, and labeling services.

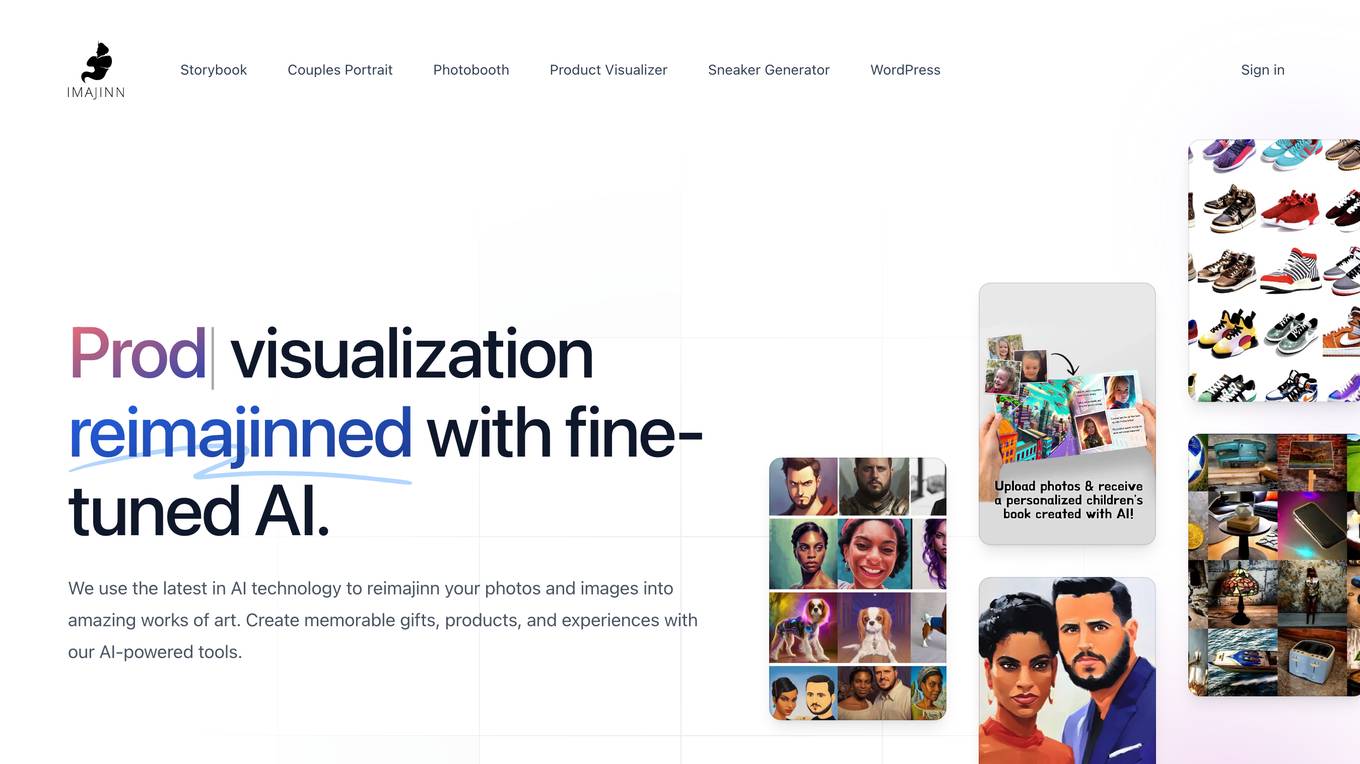

Imajinn AI

Imajinn AI is a cutting-edge visualization tool that utilizes fine-tuned AI technology to reimagine photos and images into stunning works of art. The platform offers a suite of AI-powered tools for creating personalized children's books, printed couples portraits, profile picture photobooth, product photo visualizer, and sneaker generator. Users can also generate concept images for various purposes, such as eCommerce, virtual photoshoots, and more. Imajinn AI provides a fun and creative way to bring ideas to life with high-quality outputs and a user-friendly interface.

Prem AI

Prem is an AI platform that empowers developers and businesses to build and fine-tune generative AI models with ease. It offers a user-friendly development platform for developers to create AI solutions effortlessly. For businesses, Prem provides tailored model fine-tuning and training to meet unique requirements, ensuring data sovereignty and ownership. Trusted by global companies, Prem accelerates the advent of sovereign generative AI by simplifying complex AI tasks and enabling full control over intellectual capital. With a suite of foundational open-source SLMs, Prem supercharges business applications with cutting-edge research and customization options.

TractoAI

TractoAI is an AI platform that offers deep learning solutions for various industries. It provides batch inference with no rate limits and DeepSeek offline inference, and it supports training open-source AI models. TractoAI simplifies training infrastructure setup, accelerates workflows with GPUs, and automates deployment and scaling for tasks like ML training and big data processing. The platform supports model fine-tuning, sandboxed code execution, and building custom AI models with a distributed training launcher. It is developer-friendly and scalable, and it offers a solution library and expert guidance for AI projects.

ITSoli

ITSoli is an AI consulting firm that specializes in AI adoption, transformation, and data intelligence services. They offer custom AI models, data services, and strategic partnerships to help organizations innovate, automate, and accelerate their AI journey. With expertise in fine-tuning AI models and training custom agents, ITSoli aims to unlock the power of AI for businesses across various industries.

Live Portrait Ai Generator

Live Portrait Ai Generator is an AI application that transforms static portrait images into lifelike videos using advanced animation technology. Users can effortlessly animate their portraits, fine-tune animations, unleash artistic styles, and make memories move with text, music, and other elements. The tool offers a seamless stitching technology and retargeting capabilities to achieve perfect results. Live Portrait Ai enhances generation quality and generalization ability through a mixed image-video training strategy and network architecture upgrades.

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and high-quality training datasets for AI models. The platform offers services such as supervised fine-tuning, reinforcement learning from human feedback, data generation, expert evaluations, and a data quality framework to support the development of AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver training and performance evaluation solutions.

Databricks

Databricks is a data and AI company that offers a Data Intelligence Platform to help users succeed with AI by developing generative AI applications, democratizing insights, and driving down costs. The platform maintains data lineage, quality, control, and privacy across the entire AI workflow, enabling users to create, tune, and deploy generative AI models. Databricks caters to industry leaders, providing tools and integrations to speed up success in data and AI. The company offers resources such as support, training, and community engagement to help users succeed in their data and AI journey.

1 - Open Source AI Tools

ai-robotics

AI Robotics tutorials for hobbyists focusing on training a virtual humanoid/robot to perform football tricks using Reinforcement Learning. The tutorials cover tuning training hyperparameters, optimizing reward functions, and achieving results in a short training time without the need for GPUs.
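The tutorials' own environment and training code are not reproduced here. As a CPU-only stand-in, the sketch below grid-searches two training hyperparameters (step size and exploration rate) for an epsilon-greedy agent on a toy three-armed bandit. The reward probabilities in `ARM_MEANS`, the grid values, and all function names are assumptions made for illustration.

```python
import itertools
import random

# Hypothetical Bernoulli reward probability for each arm of a toy bandit.
ARM_MEANS = [0.2, 0.5, 0.8]


def train_agent(step_size, epsilon, n_steps=500, seed=0):
    """Run epsilon-greedy value learning and return the average reward."""
    rng = random.Random(seed)
    values = [0.0] * len(ARM_MEANS)
    total = 0.0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(ARM_MEANS))  # explore a random arm
        else:
            # Exploit the arm with the highest current value estimate.
            arm = max(range(len(ARM_MEANS)), key=values.__getitem__)
        reward = 1.0 if rng.random() < ARM_MEANS[arm] else 0.0
        # Move the estimate toward the observed reward.
        values[arm] += step_size * (reward - values[arm])
        total += reward
    return total / n_steps


def grid_search(step_sizes, epsilons, seeds=(0, 1, 2)):
    """Score every (step_size, epsilon) pair, averaged over several seeds."""
    results = {}
    for step_size, epsilon in itertools.product(step_sizes, epsilons):
        scores = [train_agent(step_size, epsilon, seed=s) for s in seeds]
        results[(step_size, epsilon)] = sum(scores) / len(scores)
    best = max(results, key=results.get)
    return best, results


if __name__ == "__main__":
    best, results = grid_search([0.01, 0.1, 0.5], [0.01, 0.1, 0.3])
    print("best (step_size, epsilon):", best, "avg reward:", results[best])
```

Averaging over a few seeds keeps a single lucky run from deciding the winner, which matters when each training run is short.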

20 - OpenAI GPTs

Tune Tailor: Playlist Pal

I find and create playlists based on mood, genre, and activities.

Text Tune Up GPT

I edit articles, improving clarity and respectfulness, maintaining your style.

The Name That Tune Game - from lyrics

Joyful music expert in song lyrics, offering trivia, insights, and engaging music discussions.

Joke Smith | Joke Edits for Standup Comedy

A witty editor to fine-tune stand-up comedy jokes.

Rewrite This Song: Lyrics Generator

I rewrite song lyrics to new themes, keeping the tune and essence of the original.

Dr. Tuning your Sim Racing doctor

Your quirky pit crew chief for top-notch sim racing advice

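アダチさん12号(Oracle RDBMS篇) (Adachi-san No. 12, Oracle RDBMS edition)

You can ask about the Oracle RDBMS knowledge and know-how that Mr. Adachi (安達孝一) accumulated during his time as a systems engineer (Oracle 7/8.1.6/8.1.7/9iR1/9iR2/10gR1/10gR2/11gR2/12c/SQL tuning). It can also generate general-purpose example questions for ChatGPT (GPT-4) based on the conversation.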

Drone Buddy

An FPV drone specialist aiding in building, tuning, and learning about the hobby.

Pytorch Trainer GPT

Your purpose is to create PyTorch code to train language models.

BrandChic Strategic

I'm Chic Strategic, your ally in carving out a distinct brand position and fine-tuning your voice. Let's make your brand's presence robust and its message clear in a bustling market.