Best AI tools for troubleshooting cloud issues

20 - AI tool Sites

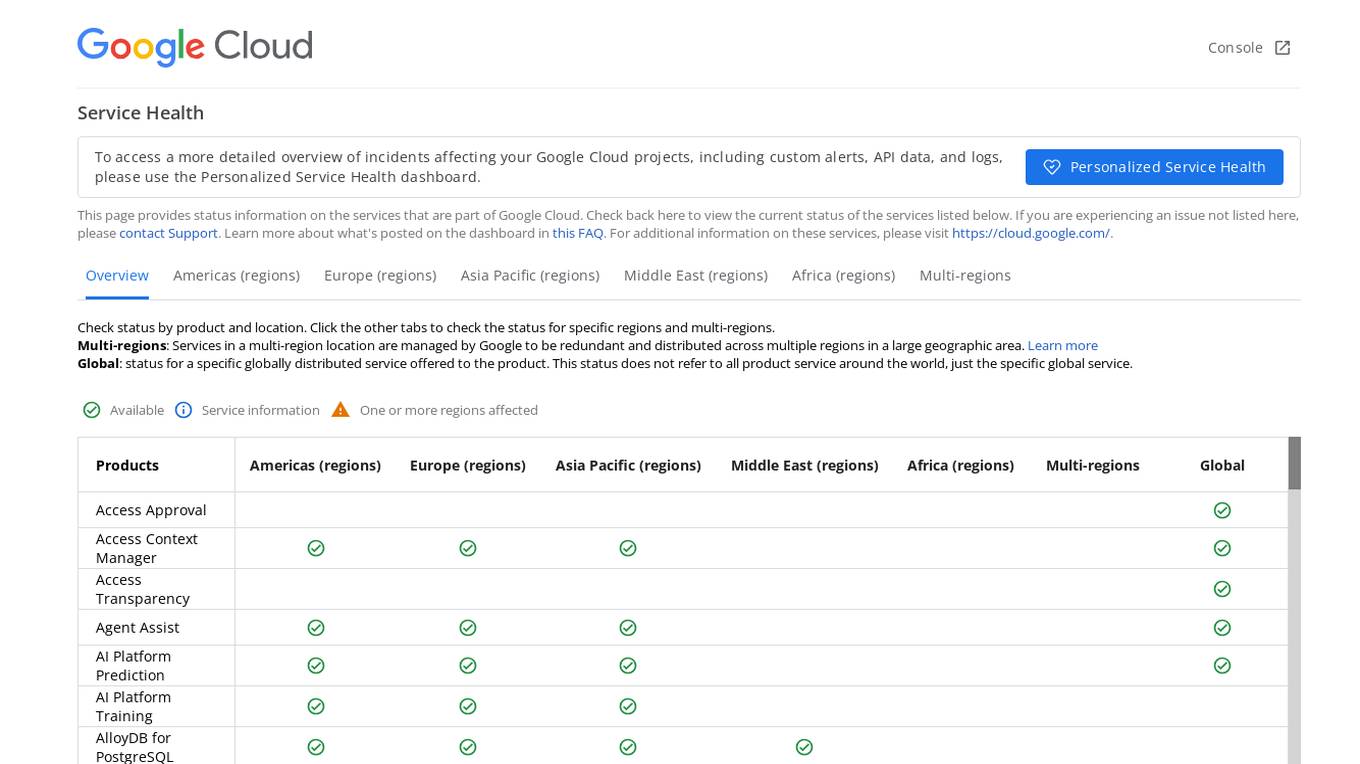

Google Cloud Service Health Console

Google Cloud Service Health Console provides status information on the services that are part of Google Cloud. It allows users to check the current status of services, view detailed overviews of incidents affecting their Google Cloud projects, and access custom alerts, API data, and logs through the Personalized Service Health dashboard. The console also offers a global view of the status of specific globally distributed services and allows users to check the status by product and location.

Arize AI

Arize AI is an AI observability tool designed to monitor and troubleshoot AI models in production. It provides configurable and sophisticated observability features to ensure the performance and reliability of next-gen AI stacks. With a focus on ML observability, Arize offers automated setup, a simple API, and a lightweight package for tracking model performance over time. The tool is trusted by top companies for its ability to surface insights, simplify root-cause analysis, and provide a dedicated customer success manager. Arize is battle-hardened for real-world scenarios, offering performance, scalability, security, and compliance with industry standards like SOC 2 Type II and HIPAA.

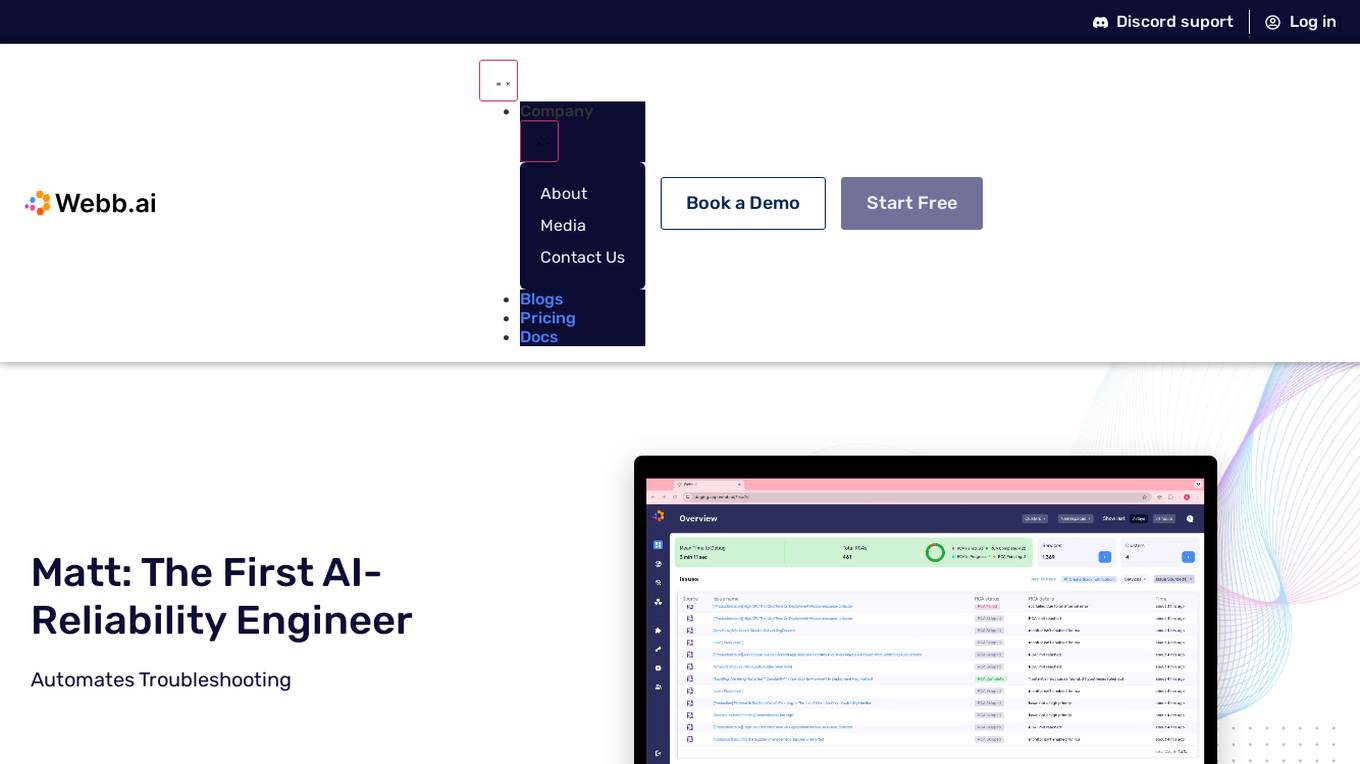

Webb.ai

Webb.ai is an AI-powered platform that offers automated troubleshooting for Kubernetes. It is designed to assist users in identifying and resolving issues within their Kubernetes environment efficiently. By leveraging AI technology, Webb.ai provides insights and recommendations to streamline the troubleshooting process, ultimately improving system reliability and performance. The platform is user-friendly and caters to both beginners and experienced users in the field of Kubernetes management.

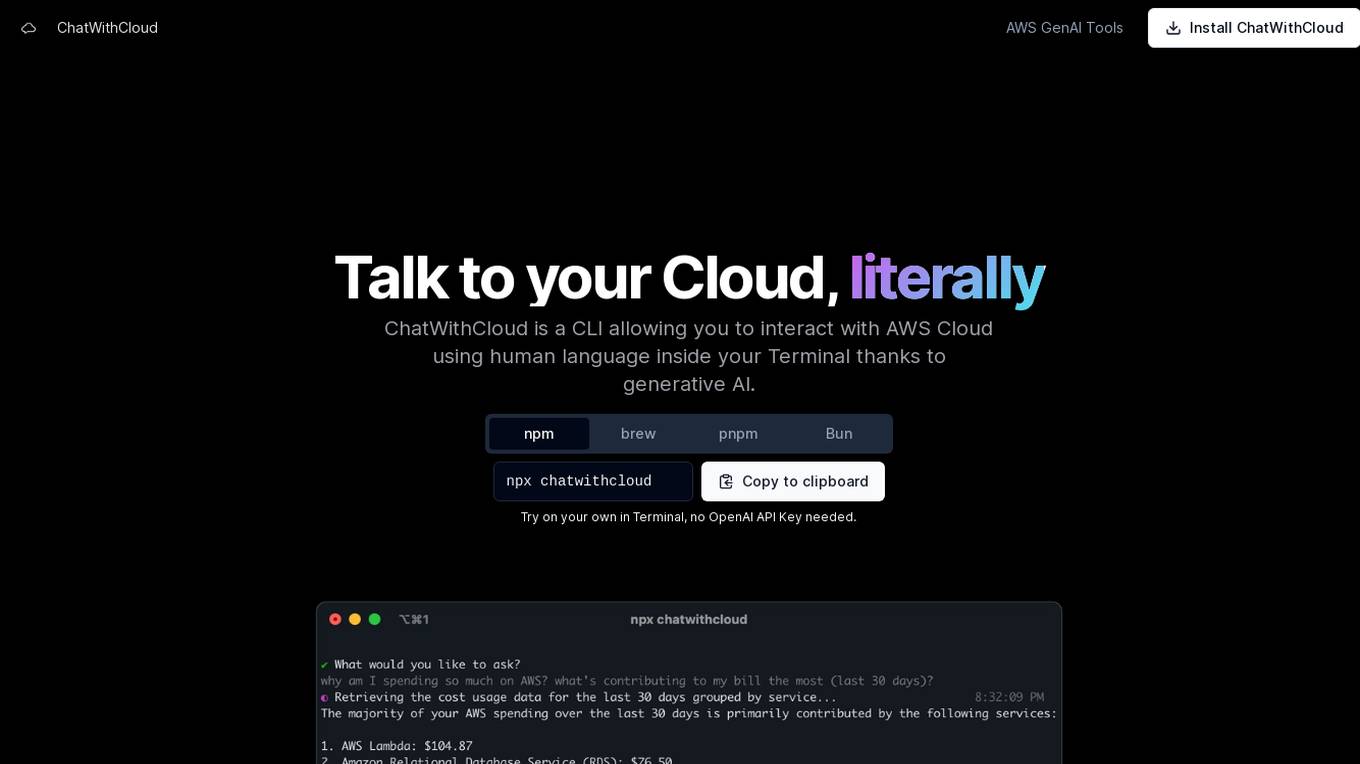

ChatWithCloud

ChatWithCloud is a command-line interface (CLI) tool that enables users to interact with AWS Cloud using natural language within the Terminal, powered by generative AI. It allows users to perform various tasks such as cost analysis, security analysis, troubleshooting, and fixing infrastructure issues without the need for an OpenAI API Key. The tool offers both a lifetime license option and a managed subscription model for users' convenience.

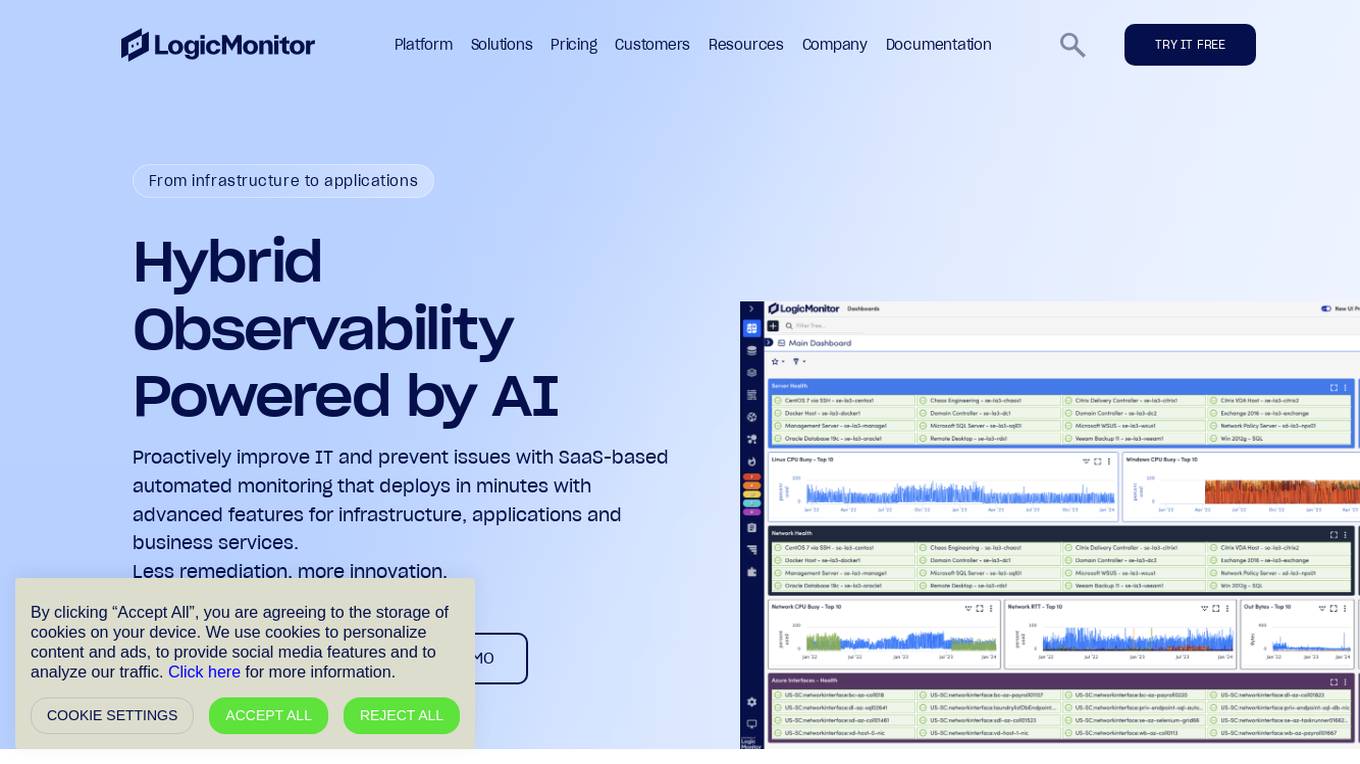

LogicMonitor

LogicMonitor is a cloud-based infrastructure monitoring platform that provides real-time insights and automation for comprehensive, seamless monitoring with agentless architecture. It offers a unified platform for monitoring infrastructure, applications, and business services, with advanced features for hybrid observability. LogicMonitor's AI-driven capabilities simplify complex IT ecosystems, accelerate incident response, and empower organizations to thrive in the digital landscape.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::vd75v-1770832320154-3c2268e79b55) for reference. Users are directed to consult the documentation for further information and troubleshooting.

1case.io

1case.io is a website that currently displays a Connection Timed Out error with the code 522. The error message suggests a problem with the connection between Cloudflare's network and the origin web server, which prevents the web page from being displayed. Visitors are advised to wait a few minutes and try again, while the website owner is advised to contact their hosting provider for assistance in resolving the issue.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

assetsai.art

assetsai.art is a website that currently faces an Invalid SSL certificate error (Error code 526). The error message suggests that the origin web server does not have a valid SSL certificate, leading to potential security risks for visitors. The error page advises visitors to try again in a few minutes and advises the owner to ensure that an up-to-date, valid SSL certificate issued by a Certificate Authority is configured. The website seems to be hosted on Cloudflare, as indicated by the troubleshooting information provided.

Cloudflare Origin DNS Error Resolver

The website www.deck.rocks encountered an Origin DNS error, which is a common issue related to the Cloudflare network. The error message indicates that the requested domain (www.deck.rocks) could not be resolved by Cloudflare's DNS. The page provides troubleshooting information for both visitors and website owners, advising them to wait a few minutes and check their DNS settings, especially if using a CNAME origin record. The error message also includes links to Cloudflare's support documentation for further details.

ai.prodi.gg

The website ai.prodi.gg encountered an Origin DNS error, which is a common issue related to the Cloudflare network. The error message indicates that the requested domain (ai.prodi.gg) could not be resolved by Cloudflare. The page provides troubleshooting information and suggestions for both visitors and website owners to resolve the DNS error. It also includes a link to Cloudflare's support documentation for further details. The website primarily serves as a platform for managing DNS settings and troubleshooting network-related issues.
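The two Origin DNS entries above both come down to a hostname that could not be resolved. As a rough illustration only, a site owner could check whether a hostname (for example, the target of a CNAME origin record) resolves from their own machine. This is a minimal sketch using Python's standard `socket` module; the function name `resolves` and the injectable `resolver` parameter are assumptions made for this example, and a successful local lookup does not prove that Cloudflare can resolve the same name.

```python
import socket


def resolves(hostname: str, resolver=socket.getaddrinfo) -> bool:
    """Return True if the hostname resolves to at least one address lookup result.

    `resolver` is injectable so the check can be exercised without network
    access; by default it uses the system resolver via socket.getaddrinfo.
    """
    try:
        resolver(hostname, None)
        return True
    except socket.gaierror:
        # Raised by getaddrinfo when the name cannot be resolved.
        return False
```

Calling `resolves("www.deck.rocks")` queries the local resolver, so the result depends on the network and on the current state of that domain's DNS records.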

Allwire Technologies

Allwire Technologies, LLC is a boutique IT consultancy firm that specializes in building intelligent IT infrastructure solutions. They offer services such as hybrid infrastructure management, security expertise, IT helpdesk support, operational insurance, and AI-driven solutions. The company focuses on empowering clients by providing tailored IT solutions without vendor lock-in. Allwire Technologies is known for fixing complex IT problems and modernizing existing tech stacks through a combination of cloud and data center solutions.

Server Error Analyzer

The website is experiencing a 500 Internal Server Error, which indicates a problem with the server hosting the website. This error message is generated by the server when it is unable to fulfill a request from a client. The OpenResty software may be involved in the server configuration. Users encountering this error should contact the website administrator for assistance in resolving the issue.

Cloudhumans

The website en.cloudhumans.com is experiencing a DNS issue where the IP address is prohibited, causing a conflict within Cloudflare's system. Users encountering this error are advised to refer to the provided link for troubleshooting details. The site is hosted on the Cloudflare network, and the owner is instructed to log in and change the DNS A records to resolve the issue. Additionally, the page shows the Cloudflare Ray ID and the user's IP address, along with a note on performance and security features by Cloudflare.

Cirroe AI

Cirroe AI is an intelligent chatbot designed to help users deploy and troubleshoot their AWS cloud infrastructure quickly and efficiently. With Cirroe AI, users can experience seamless automation, reduced downtime, and increased productivity by simplifying their AWS cloud operations. The chatbot allows for fast deployments, intuitive debugging, and cost-effective solutions, ultimately saving time and boosting efficiency in managing cloud infrastructure.

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

Office Kube Workflow

Office Kube Workflow is an AI-powered productivity tool that offers fully configured workspaces, a high degree of workflow automation, workflow extensibility, the ability to leverage cloud power, and support for team and organization workflows. It incorporates AI capabilities to boost productivity by enabling seamless creation of artifacts, troubleshooting, and code optimization within the workspace. The platform is designed with enterprise-grade quality, focusing on security, scalability, and resilience.

403 Forbidden Resolver

The website is currently displaying a '403 Forbidden' error, which means that the server understood the request but refuses to fulfill it. Common causes include insufficient permissions and server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. The issue must be troubleshot and resolved before access to the website can be regained.

Highcountry Toyota Internet Connection Troubleshooter

Highcountrytoyota.stage.autogo.ai is an AI tool designed to provide assistance and support for troubleshooting internet connection issues. The website offers guidance on resolving connection problems, including checking network settings, firewall configurations, and proxy server issues. Users can find step-by-step instructions and tips to troubleshoot and fix connection errors. The platform aims to help users quickly identify and resolve connectivity issues to ensure seamless internet access.

Web Server Error Resolver

The website is currently displaying a '403 Forbidden' error, which indicates that the server is refusing to respond to the request. This error message is typically displayed when the server understands the request made by the client but refuses to fulfill it. The 'openresty' mentioned in the text is likely the web server software being used. It is important to troubleshoot and resolve the 403 Forbidden error to regain access to the website's content.
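Several entries above are identified only by the error code their page returns (403, 404, 500, 522, 526). As a rough aid for reading them, here is a minimal sketch that maps a status code to a short description. The standard codes come from Python's built-in `http.HTTPStatus`; 522 and 526 are Cloudflare-specific and are not in that registry, so they are listed by hand using the wording from the entries above. The names `CLOUDFLARE_CODES` and `describe_status` are hypothetical and exist only for this example.

```python
from http import HTTPStatus

# Cloudflare-specific codes are not part of the standard HTTP registry,
# so they are listed by hand, following the error pages described above.
CLOUDFLARE_CODES = {
    522: "Connection Timed Out (Cloudflare could not reach the origin server)",
    526: "Invalid SSL Certificate (origin certificate is not valid)",
}


def describe_status(code: int) -> str:
    """Return a short human-readable description for an HTTP status code."""
    if code in CLOUDFLARE_CODES:
        return f"{code} {CLOUDFLARE_CODES[code]}"
    try:
        # HTTPStatus raises ValueError for codes it does not know.
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        return f"{code} Unknown status"
```

For example, `describe_status(404)` returns `"404 Not Found"`, and `describe_status(403)` returns `"403 Forbidden"`.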

0 - Open Source AI Tools

20 - OpenAI Gpts

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

cloud exams coach

AI Cloud Computing (Engineering, Architecture, DevOps) Certifications Coach for AWS, GCP, and Azure. I provide timed mock exams.

Nimbus Navigator

Cloud Engineer Expert, guiding in cloud tech, projects, career, and industry trends.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.

Architext

Architext is a sophisticated chatbot designed to guide users through the complexities of AWS architecture, leveraging the AWS Well-Architected Framework. It offers real-time, tailored advice, interactive learning, and up-to-date resources for both novices and experts in AWS cloud infrastructure.

Aws Guru

Your friendly coworker in AWS troubleshooting, offering precise, bullet-point advice. Leave feedback: https://dlmdby03vet.typeform.com/to/VqWNt8Dh

Azure Mentor

Expert in Azure's latest services, including Application Insights, API Management, and more.