Best AI tools for < Train RL Models >

20 - AI tool Sites

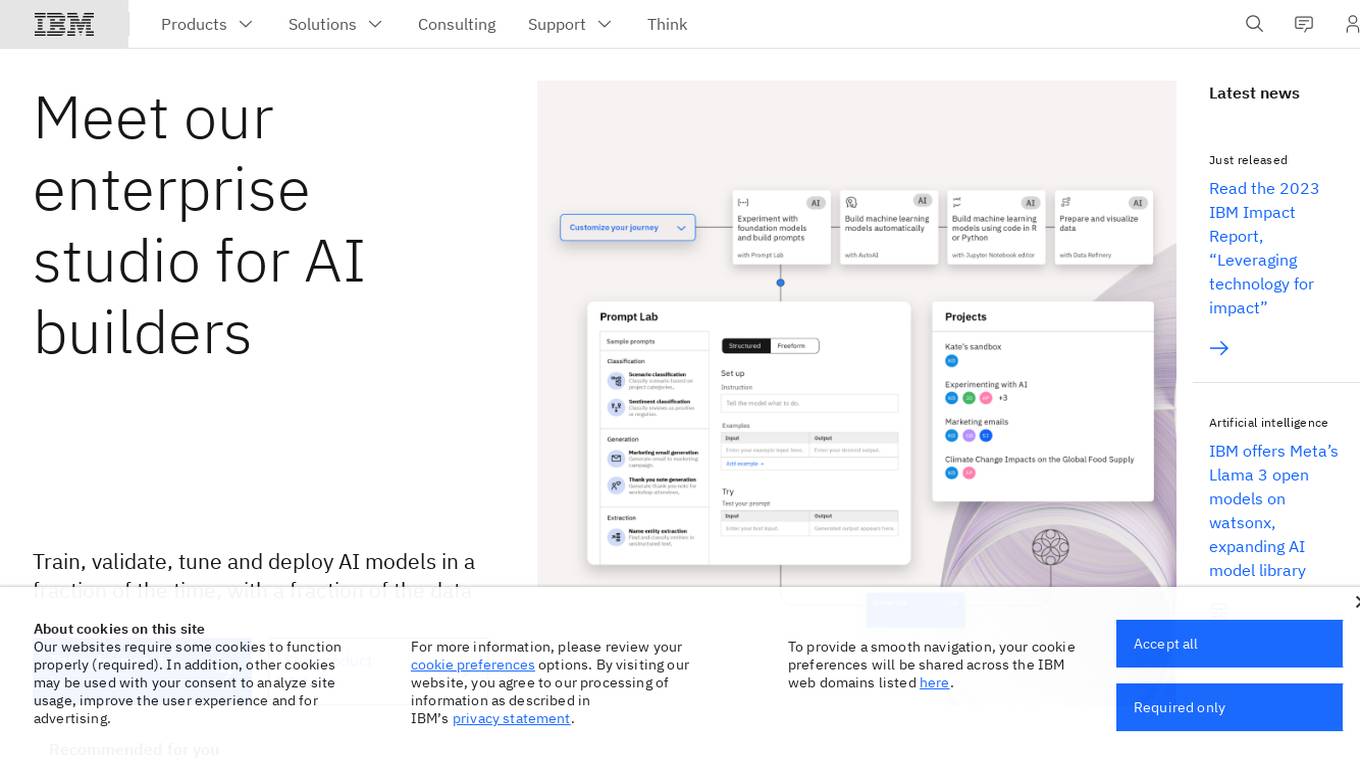

IBM Watsonx

IBM Watsonx is an enterprise studio for AI builders. It provides a platform to train, validate, tune, and deploy AI models quickly and efficiently. With Watsonx, users can access a library of pre-trained AI models, build their own models, and deploy them to the cloud or on-premises. Watsonx also offers a range of tools and services to help users manage and monitor their AI models.

Outlier AI

Outlier AI is a platform that connects subject matter experts to help build the world's most advanced Generative AI. It allows experts to work on various projects from generating training data to evaluating model performance. The platform offers flexibility, allowing contributors to work from home on their own schedule. Outlier AI aims to redefine how AI learns by leveraging the expertise of domain specialists across different fields.

Athletica AI

Athletica AI is an AI-powered athletic training and personalized fitness application that offers tailored coaching and training plans for various sports like cycling, running, duathlon, triathlon, and rowing. It adapts to individual fitness levels, abilities, and availability, providing daily step-by-step training plans and comprehensive session analyses. Athletica AI integrates seamlessly with workout data from platforms like Garmin, Strava, and Concept 2 to craft personalized training plans and workouts. The application aims to help athletes train smarter, not harder, by leveraging the power of AI to optimize performance and achieve fitness goals.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU usage and a pay-as-you-play model to maximize resource utilization.

Kaiden AI

Kaiden AI is an AI-powered training platform that offers personalized, immersive simulations to enhance skills and performance across various industries and roles. It provides feedback-rich scenarios, voice-enabled interactions, and detailed performance insights. Users can create custom training scenarios, engage with AI personas, and receive real-time feedback to improve communication skills. Kaiden AI aims to revolutionize training solutions by combining AI technology with real-world practice.

Endurance

Endurance is a platform designed for runners, swimmers, and cyclists to engage in group training activities with friends or local communities. Users can create or join teams, share structured workouts, and benefit from collective motivation and accountability. The platform aims to make training fun and effective by leveraging the power of group workouts and social connections.

ChatCube

ChatCube is an AI-powered chatbot maker that allows users to create chatbots for their websites without coding. It trains chatbots on any document or website within 60 seconds. ChatCube offers a range of features, including a user-friendly visual editor, fast integration, fine-tuning on specific data sources, data encryption and security, and customizable chatbots. ChatCube helps businesses improve customer support efficiency and reduce support tickets by up to 28%.

Workout Tools

Workout Tools is an AI-powered personal trainer that helps you train smarter and reach your fitness goals faster. It takes into account parameters such as your physique, the type of workout you're interested in, and your available equipment, then suggests a workout. Don't like the workout? Just generate another one. It's that simple.

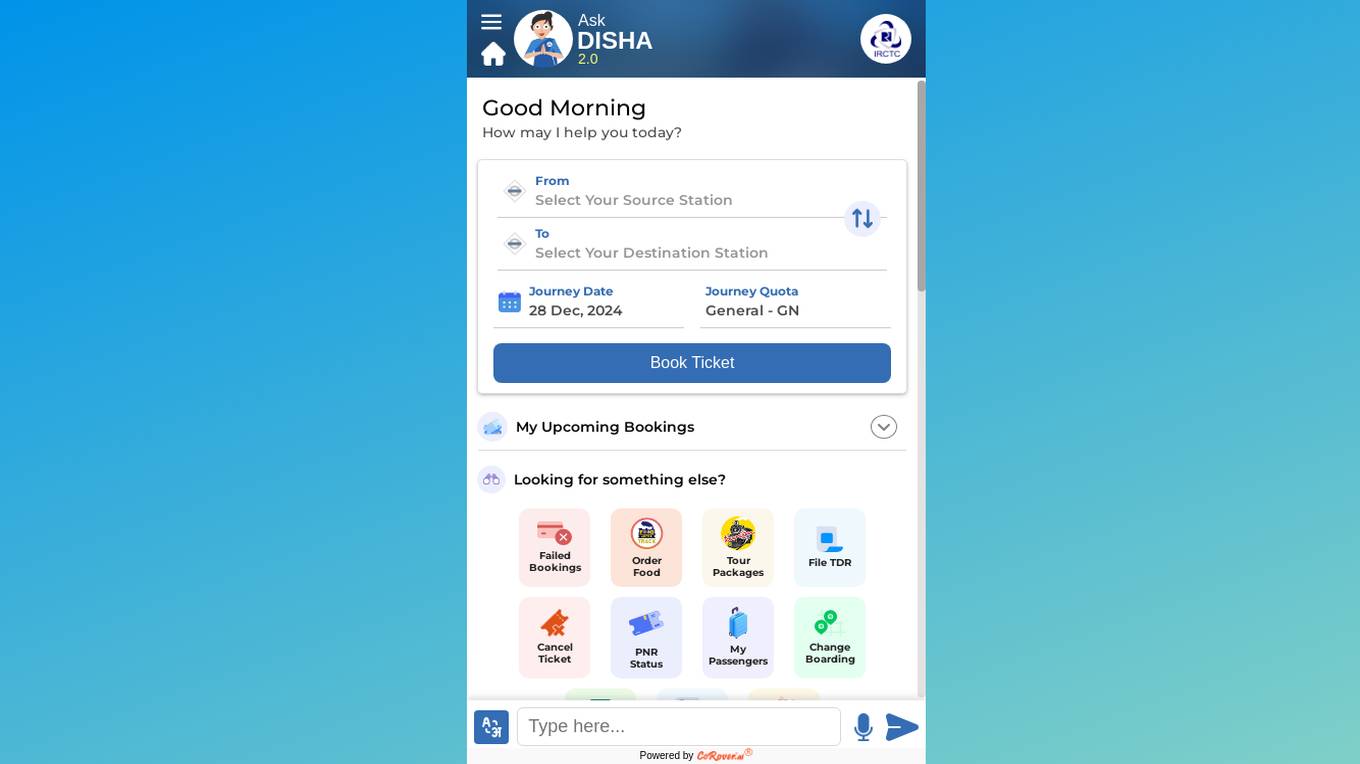

CoRover.ai

CoRover.ai is an AI-powered chatbot designed to help users book train tickets seamlessly through conversation. The chatbot, named AskDISHA, is integrated with the IRCTC platform, allowing users to inquire about train schedules, ticket availability, and make bookings effortlessly. CoRover.ai leverages artificial intelligence to provide personalized assistance and streamline the ticket booking process for users, enhancing their overall experience.

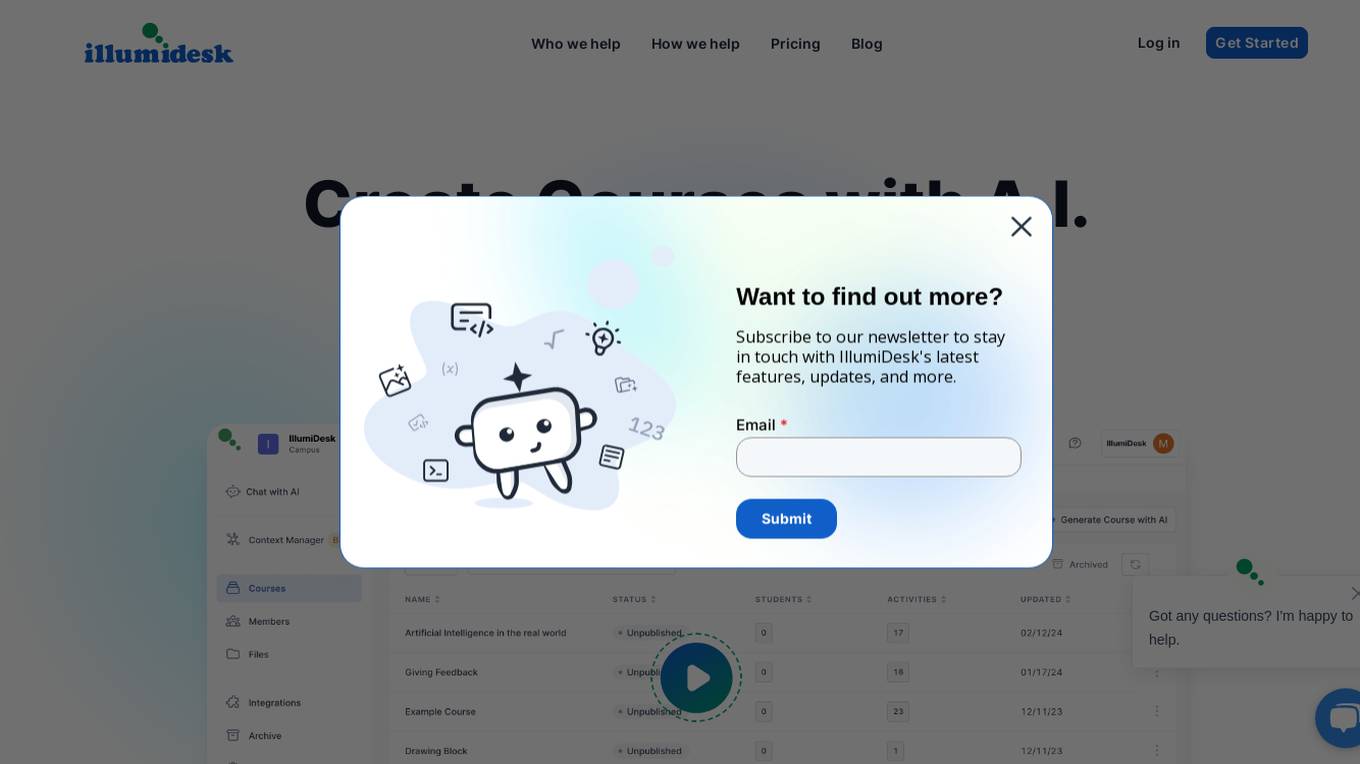

IllumiDesk

IllumiDesk is a generative AI platform for instructors and content developers that helps teams create and monetize tailored content 10X faster. With IllumiDesk, you can automate grading tasks, collaborate with your learners, create content at the speed of AI, and integrate with the services you know and love. IllumiDesk's AI will help you create, maintain, and structure your content into interactive lessons. You can also use IllumiDesk's flexible integration options, the RESTful API and/or LTI v1.3, to leverage existing content and flows. IllumiDesk is trusted by training agencies and universities around the world.

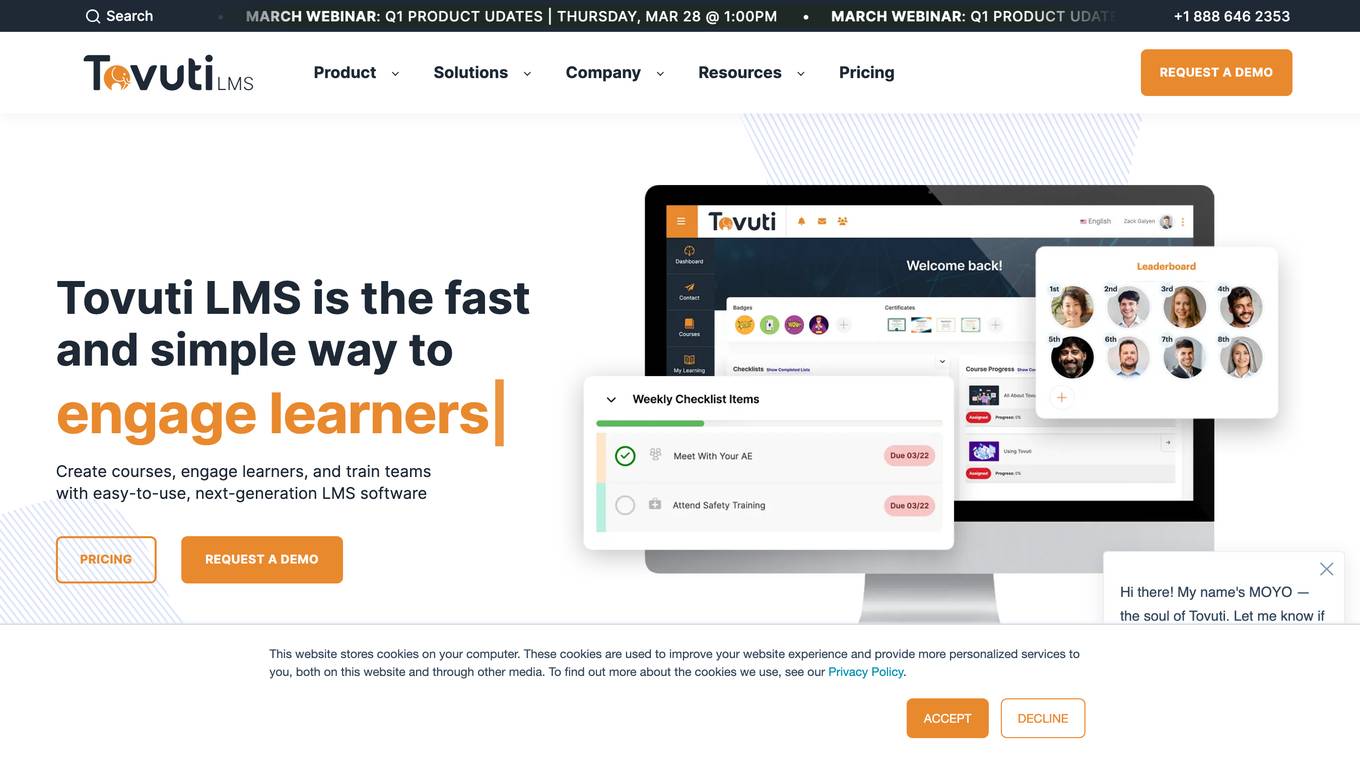

Tovuti LMS

Tovuti LMS is an adaptive, people-first learning platform that helps organizations create engaging courses, train teams, and track progress. With its easy-to-use interface and powerful features, Tovuti LMS makes learning fun and easy. Tovuti LMS is trusted by leading organizations around the world to provide their employees with the training they need to succeed.

Chatbond

Chatbond is an AI chatbot builder that enables users to create customized chatbots for websites and messaging platforms without the need for coding skills. With Chatbond, users can design conversational interfaces, integrate AI capabilities, and deploy chatbots to enhance customer engagement and streamline communication processes. The platform offers a user-friendly interface with drag-and-drop functionality, pre-built templates, and analytics tools to monitor chatbot performance and optimize interactions. Chatbond empowers businesses to automate customer support, lead generation, and sales processes, improving efficiency and scalability.

Teachable Machine

Teachable Machine is a web-based tool that makes it easy to create custom machine learning models, even if you don't have any coding experience. With Teachable Machine, you can train models to recognize images, sounds, and poses. Once you've trained a model, you can export it to use in your own projects.

Sherpa.ai

Sherpa.ai is a SaaS platform that enables data collaborations without sharing data. It allows businesses to build and train models with sensitive data from different parties, without compromising privacy or regulatory compliance. Sherpa.ai's Federated Learning platform is used in various industries, including healthcare, financial services, and manufacturing, to improve AI models, accelerate research, and optimize operations.

Surge AI

Surge AI is a data labeling platform that provides human-generated data for training and evaluating large language models (LLMs). It offers a global workforce of annotators who can label data in over 40 languages. Surge AI's platform is designed to be easy to use and integrates with popular machine learning tools and frameworks. The company's customers include leading AI companies, research labs, and startups.

Entry Point AI

Entry Point AI is a modern AI optimization platform for fine-tuning proprietary and open-source language models. It provides a user-friendly interface to manage prompts, fine-tunes, and evaluations in one place. The platform enables users to optimize models from leading providers, train across providers, work collaboratively, write templates, import/export data, share models, and avoid common pitfalls associated with fine-tuning. Entry Point AI simplifies the fine-tuning process, making it accessible to users without the need for extensive data, infrastructure, or insider knowledge.

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

DocsAI

DocsAI is an AI-powered document companion that helps you organize, search, and chat with your documents. It integrates with various sources, including websites, text files, PDFs, Docx, Notion, and Confluence. You can customize the companion's appearance to match your brand and suggest better answers to improve its accuracy. DocsAI also offers a chat widget that can be embedded on any website, allowing you to chat with your documents and get summaries, insights, and leads. It is mobile and tablet-friendly, and you can export chats and analyze data to identify trends and improve customer satisfaction. DocsAI is open source and offers custom prompts and multi-language support.

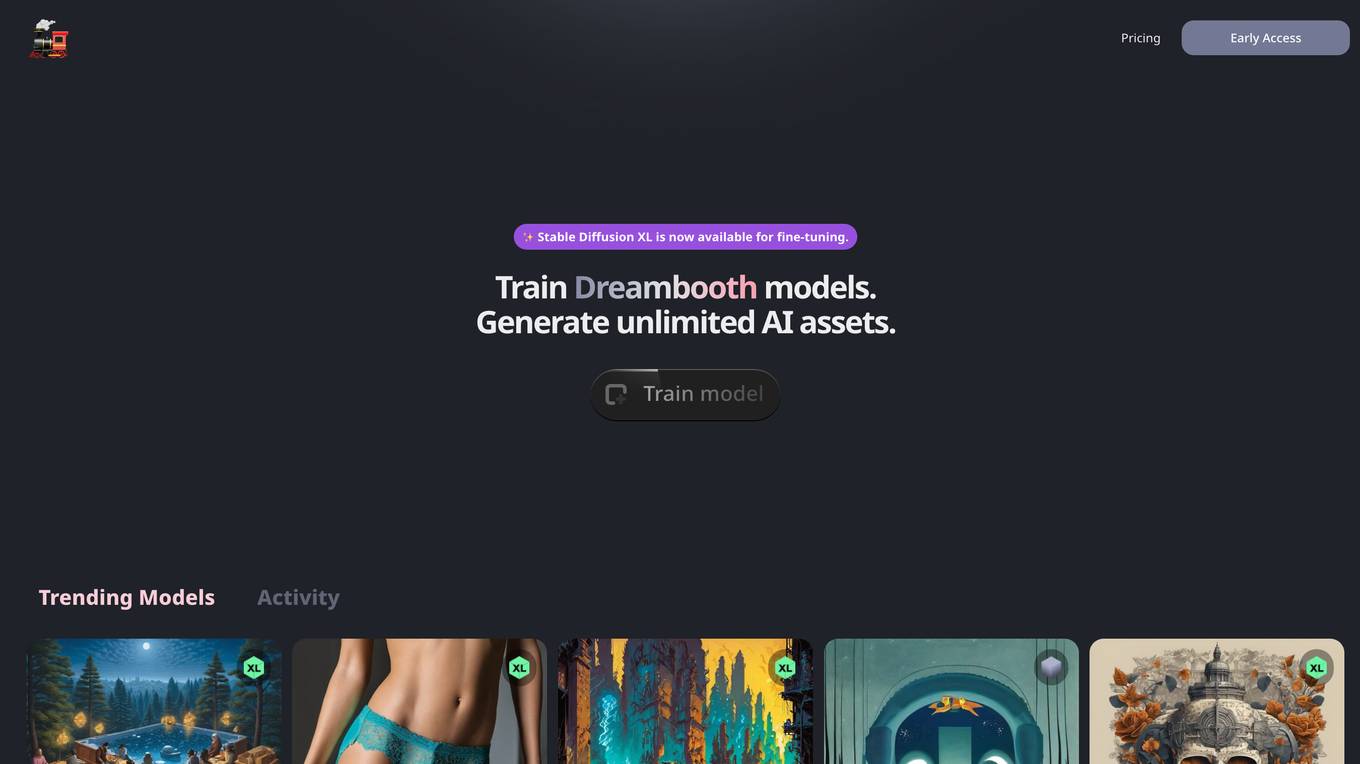

TrainEngine.ai

TrainEngine.ai is a powerful AI-powered image generation tool that allows users to create stunning, unique images from text prompts. With its advanced algorithms and vast dataset, TrainEngine.ai can generate images in a wide range of styles, from realistic to abstract, and in various formats, including photos, paintings, and illustrations. The platform is easy to use, making it accessible to both professional artists and hobbyists alike. TrainEngine.ai offers a range of features, including the ability to fine-tune models, generate unlimited AI assets, and access trending models. It also provides a marketplace where users can buy and sell AI-generated images.

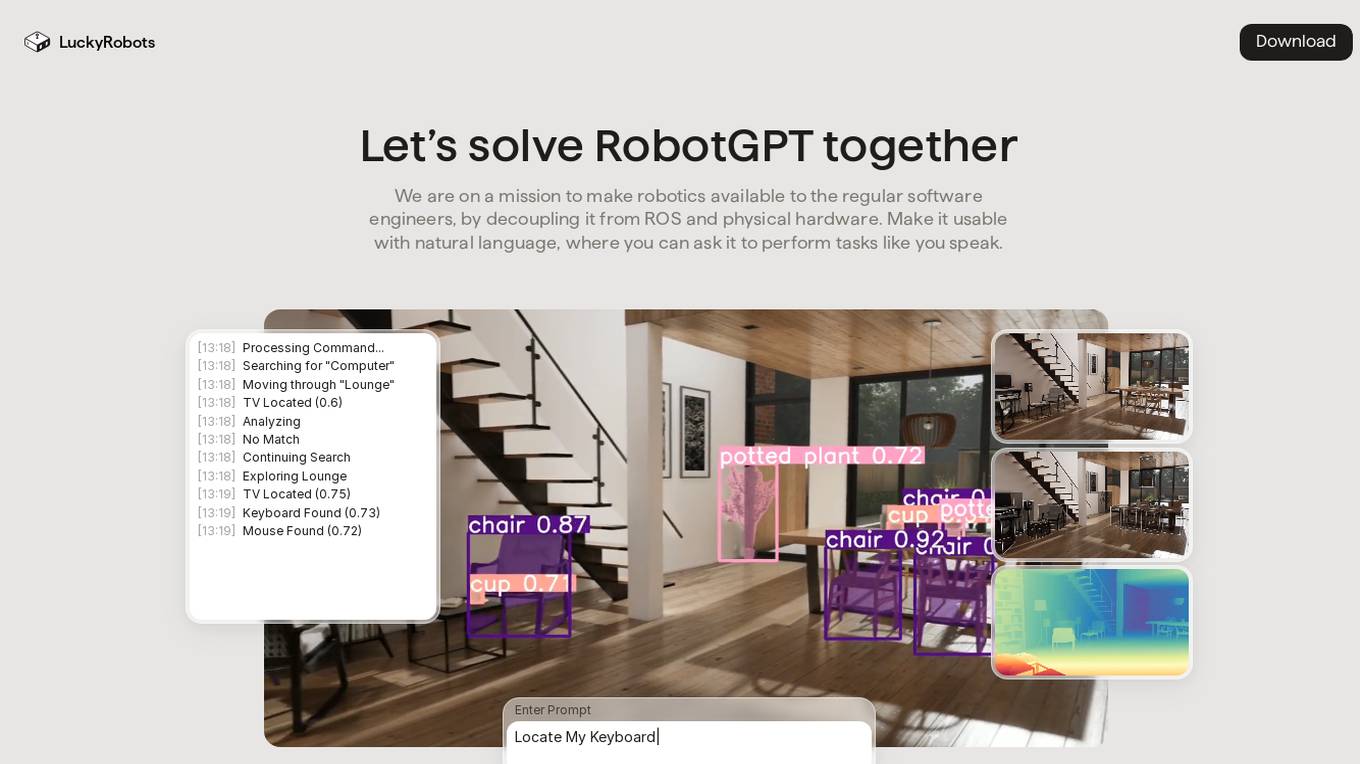

LuckyRobots

LuckyRobots is an AI tool designed to make robotics accessible to software engineers by providing a simulation platform for deploying end-to-end AI models. The platform allows users to interact with robots using natural language commands, explore virtual environments, test robot models in realistic scenarios, and receive camera feeds for monitoring. LuckyRobots aims to train AI models on real-world simulations and respond to natural language inputs, offering a user-friendly and innovative approach to robotics development.

2 - Open Source AI Tools

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides PyTorch- and **python-first**, low- and high-level abstractions for RL that are intended to be **efficient**, **modular**, **documented**, and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in Python in a highly modular way, such that researchers can easily swap components, transform them, or write new ones with little effort.

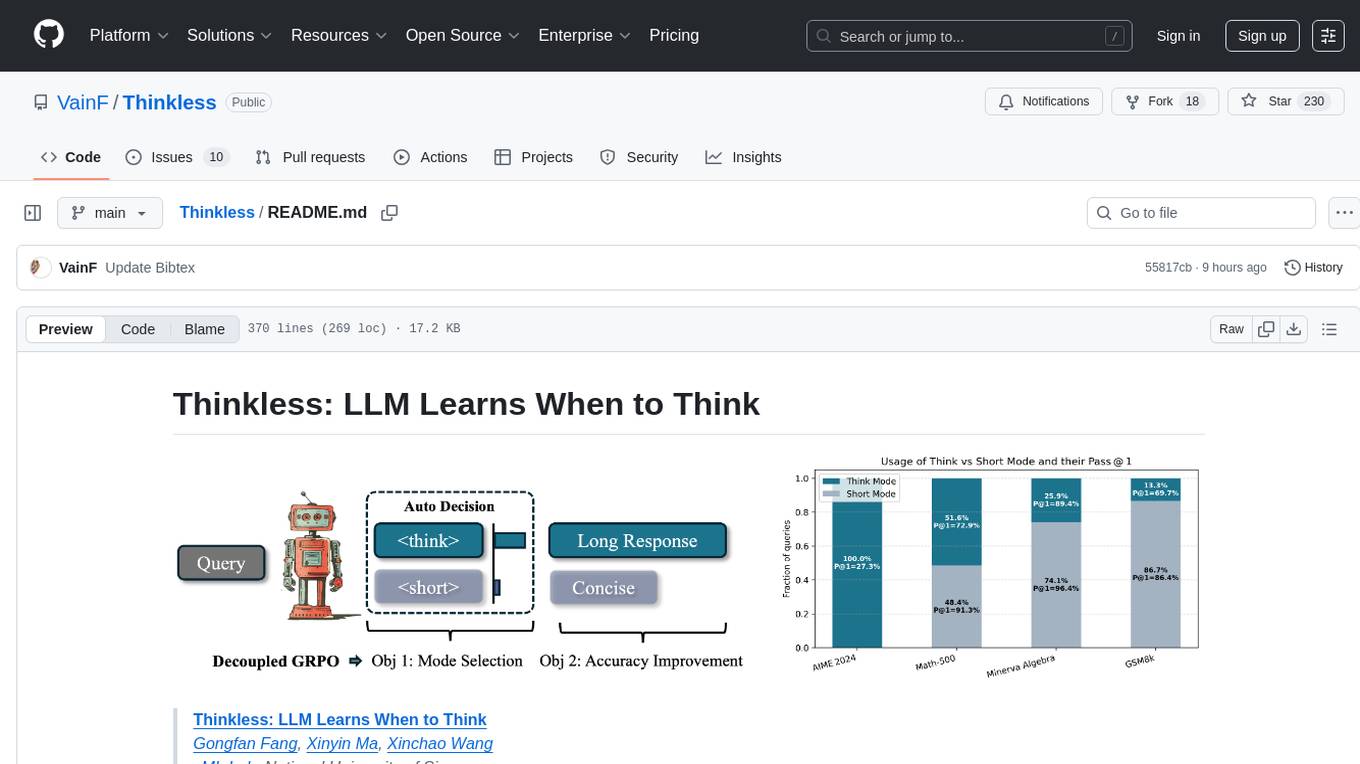

Thinkless

Thinkless is a learnable framework that empowers a large language model (LLM) to adaptively select between short-form and long-form reasoning based on task complexity and the model's own ability. It is trained under a reinforcement learning paradigm and uses two control tokens, one for concise responses and one for detailed reasoning. The core method is a Decoupled Group Relative Policy Optimization (DeGRPO) algorithm that governs reasoning-mode selection and improves answer accuracy, reducing long-chain thinking by 50% - 90% on benchmarks like Minerva Algebra and MATH-500. Thinkless thereby improves the computational efficiency of reasoning language models.
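To make the idea of a decoupled, group-relative objective more concrete, the following is a minimal sketch rather than the Thinkless implementation. It assumes the standard group-normalized advantage used in GRPO-style methods and omits the clipping ratio and KL penalty. The function names, the `alpha` weight, and the sample dictionary layout are hypothetical and chosen only for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each sampled response's reward against its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def decoupled_objective(samples, alpha=1.0):
    """Simplified policy-gradient surrogate with two decoupled terms.

    Each sample is a dict (hypothetical layout) with:
      reward            -- scalar reward for the sampled response
      control_logprob   -- log-probability of the chosen control token
      response_logprobs -- log-probabilities of the response tokens

    The control-token term is weighted by alpha (an assumed hyperparameter),
    and the response term is averaged over its length, so a long response
    does not outweigh the single mode-selection token.
    """
    advantages = group_relative_advantages([s["reward"] for s in samples])
    total = 0.0
    for sample, adv in zip(samples, advantages):
        mode_term = alpha * adv * sample["control_logprob"]
        response_term = adv * mean(sample["response_logprobs"])
        total += mode_term + response_term
    return total / len(samples)


# A group of two sampled answers to the same prompt (made-up numbers).
group = [
    {"reward": 1.0, "control_logprob": -0.5, "response_logprobs": [-1.0, -1.0]},
    {"reward": 0.0, "control_logprob": -0.5, "response_logprobs": [-2.0] * 4},
]
objective = decoupled_objective(group, alpha=1.0)
```

In this sketch, raising `alpha` would increase how strongly the reward signal shapes the choice of reasoning mode relative to the content of the response; the actual weighting used by Thinkless is not given in this listing.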

20 - OpenAI GPTs

How to Train a Chessie

Comprehensive training and wellness guide for Chesapeake Bay Retrievers.

The Train Traveler

Friendly train travel guide focusing on the best routes, essential travel information, and personalized travel insights, for both experienced and novice travelers.

How to Train Your Dog (or Cat, or Dragon, or...)

Expert in pet training advice, friendly and engaging.

TrainTalk

Your personal advisor for eco-friendly train travel. Let's plan your next journey together!

Monster Battle - RPG Game

Train monsters, travel the world, earn Arena Tokens and become the ultimate monster battling champion of earth!

Hero Master AI: Superhero Training

Train to become a superhero or a supervillain. Master your powers, make pivotal choices. Each decision you make in this action-packed game shapes not only your abilities but also your moral alignment in the battle between good and evil. Another GPT Simulator by Dave Lalande.

Pytorch Trainer GPT

Your purpose is to create the PyTorch code to train language models.

Design Recruiter

Job interview coach for product designers. Practice interviews and say stop when you need feedback. You got this!!

Pocket Training Activity Expert

Expert in engaging, interactive training methods and activities.

RailwayGPT

Technical expert on locomotives, trains, signalling, and railway technology. Can answer questions and draw designs specific to the transportation domain.

Railroad Conductors and Yardmasters Roadmap

Don’t know where to even begin? Let me help create a roadmap towards the career of your dreams! Type "help" for More Information