Best AI tools for Test Responses

20 - AI tool Sites

Repromptify

Repromptify is an AI tool that simplifies the process of creating AI prompts. It allows users to generate end-to-end optimized prompts for various AI models, such as GPT-4 and other LLMs, DALL·E 2, Midjourney, and ChatGPT. With Repromptify, users can easily test and generate images and responses tailored to their needs without worrying about ambiguity or details. The tool offers a free trial for users to explore its features upon signing up.

Fake Hacker News

The website is a platform where users can submit fake Hacker News posts for testing purposes. Users can log in to submit their titles and see how readers may respond to their posts. The website was built by Justin and Michael.

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

Leapwork

Leapwork is an AI-powered test automation platform that enables users to build, manage, maintain, and analyze complex data-driven testing across various applications, including AI apps. It offers a democratized testing approach with an intuitive visual interface, composable architecture, and generative AI capabilities. Leapwork supports testing of diverse application types, including web, mobile, and desktop applications as well as APIs. It allows for scalable testing with reusable test flows that adapt to changes in the application under test. Leapwork can be deployed in the cloud or on-premises, giving users full control.

bottest.ai

bottest.ai is an AI-powered chatbot testing tool that focuses on ensuring quality, reliability, and safety in AI-based chatbots. The tool offers automated testing capabilities without the need for coding, making it easy for users to test their chatbots efficiently. With features like regression testing, performance testing, multi-language testing, and AI-powered coverage, bottest.ai provides a comprehensive solution for testing chatbots. Users can record tests, evaluate responses, and improve their chatbots based on analytics provided by the tool. The tool also supports enterprise readiness by allowing scalability, permissions management, and integration with existing workflows.

Grit Brokerage

Grit Brokerage is a domain and website brokerage platform that facilitates the buying and selling of domains. The platform allows users to inquire about domain prices, submit offers, and connect with domain brokers. With a focus on domain transactions, Grit Brokerage provides a seamless experience for individuals and businesses looking to acquire or sell domain names.

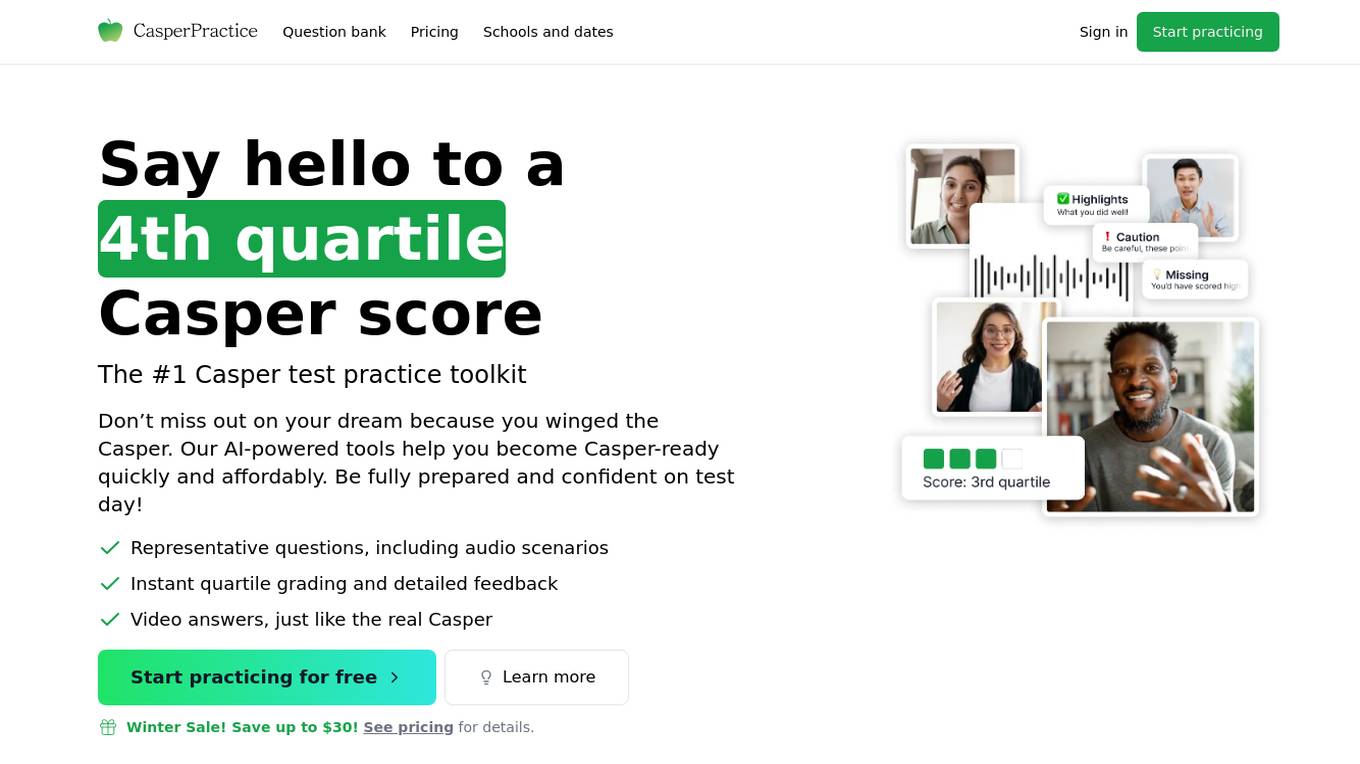

CasperPractice

CasperPractice is an AI-powered toolkit designed to help individuals prepare for the Casper test, an open-response situational judgment test used for admissions to various professional schools. The platform offers personalized practice scenarios, instant quartile grading, detailed feedback, video answers, and a fully simulated test environment. With a large question bank and unlimited practice options, users can improve their test-taking skills and aim for a top quartile score. CasperPractice is known for its affordability, instant feedback, and realistic simulation, making it a valuable resource for anyone preparing for the Casper test.

Promptitude.io

Promptitude.io is a platform that allows users to integrate GPT into their apps and workflows. It provides a variety of features to help users manage their prompts, personalize their AI responses, and publish their prompts for use by others. Promptitude.io also offers a library of pre-built prompts that users can use to get started quickly.

Ai Homework Helper

Ai Homework Helper is a free AI tool designed to assist students with their homework across various subjects such as Math, Geometry, Physics, Chemistry, and Statistics. Users can quickly get accurate and detailed responses for any academic level by uploading questions or images. The tool generates step-by-step explanations, helping users understand the logic behind the answers. It is a time-saving solution that provides end-to-end academic assistance, ensuring correct and original responses. The tool is user-friendly, free to use without sign-up requirements, and supports multiple input options like photos, pictures, and PDF files.

Teste.ai

Teste.ai is an AI-powered platform that allows users to create software testing scenarios and test cases using top-notch artificial intelligence technology. The platform offers a variety of tools based on AI to accelerate the software quality testing journey, helping testers cover a wide range of requirements with a vast array of test scenarios efficiently. Teste.ai's intelligent features enable users to save time and enhance efficiency in creating, executing, and managing software tests. With advanced AI integration, the platform provides automatic generation of test cases based on software documentation or specific requirements, ensuring comprehensive test coverage and precise responses to testing queries.

jsonAI

jsonAI is an AI tool that allows users to easily transform data into structured JSON format. Users can define their schema, add custom prompts, and receive AI-structured JSON responses. The tool enables users to create complex schemas with nested objects, control the response JSON on the fly, and test their JSON data in real-time. jsonAI offers a free trial plan, seamless integration with existing apps, and ensures data security by not storing user data on their servers.
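
As a rough illustration of the idea of defining a schema with nested objects and checking a structured JSON response against it, here is a minimal Python sketch. It does not use jsonAI's API: the `SCHEMA` format, the `conforms` helper, and the sample response are all hypothetical and chosen only for the example.

```python
import json

# Hypothetical schema format (not jsonAI's own): each field maps to an
# expected Python type, or to a nested dict describing a nested object.
SCHEMA = {
    "name": str,
    "price": float,
    "seller": {"id": int, "city": str},
}


def conforms(value, schema):
    """Return True if `value` has exactly the schema's keys and types."""
    if isinstance(schema, dict):
        if not isinstance(value, dict) or set(value) != set(schema):
            return False
        return all(conforms(value[key], sub) for key, sub in schema.items())
    # bool is a subclass of int in Python, so reject it for non-bool fields.
    if isinstance(value, bool) and schema is not bool:
        return False
    return isinstance(value, schema)


# Stand-in for a structured response returned as JSON text.
response_text = (
    '{"name": "Desk lamp", "price": 19.5, '
    '"seller": {"id": 7, "city": "Oslo"}}'
)

print(conforms(json.loads(response_text), SCHEMA))
```

A check like this is one simple way to test structured output before passing it to downstream code; a production setup would more likely rely on a standard such as JSON Schema.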

AdaraChatbot

AdaraChatbot is a platform that allows users to build their own chatbot using the OpenAI Assistants API. It offers seamless integration for incorporating a chatbot into websites. Users can test the chatbot assistant, ask questions, and receive responses powered by the OpenAI Assistants API. AdaraChatbot provides features such as easy integration with websites, user inquiry with lead collection, real-time analytics, file attachments, and compatibility with popular website platforms. The application offers different pricing plans suitable for personal projects, organizations, and tailored solutions for large-scale operations.

Smartbot

Smartbot is a 100% customizable AI assistant designed to boost productivity and seamlessly integrate into daily work life. It offers a prompt library with ready-to-use prompts, access to top AI models, and tailored support for employability. Developers can benefit from a code visualization interface to test and visualize generated code. Smartbot aims to optimize tasks, provide high-quality responses, and enhance skills through events and resources.

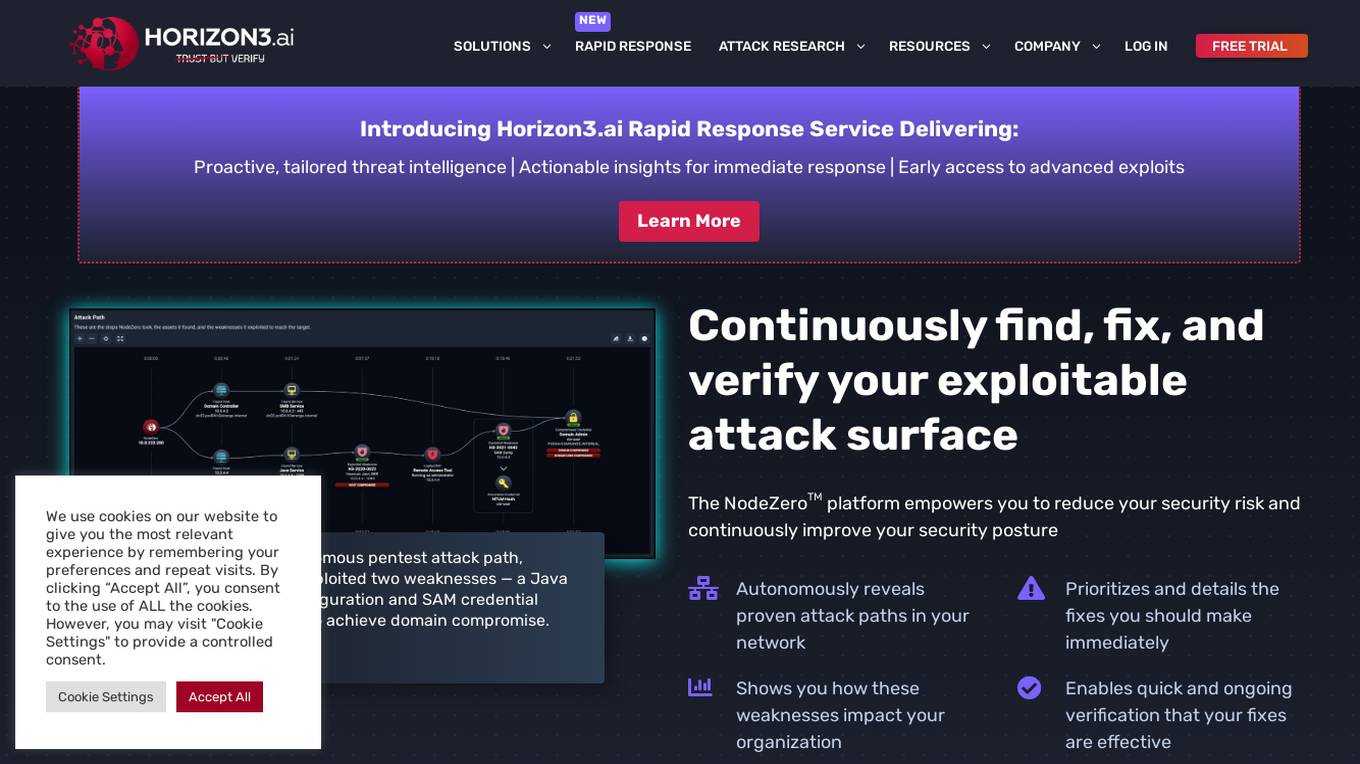

NodeZero™ Platform

Horizon3.ai Solutions offers the NodeZero™ Platform, an AI-powered autonomous penetration testing tool designed to enhance cybersecurity measures. The platform combines expert human analysis by Offensive Security Certified Professionals with automated testing capabilities to streamline compliance processes and proactively identify vulnerabilities. NodeZero empowers organizations to continuously assess their security posture, prioritize fixes, and verify the effectiveness of remediation efforts. With features like internal and external pentesting, rapid response capabilities, AD password audits, phishing impact testing, and attack research, NodeZero is a comprehensive solution for large organizations, ITOps, SecOps, security teams, pentesters, and MSSPs. The platform provides real-time reporting, integrates with existing security tools, reduces operational costs, and helps organizations make data-driven security decisions.

Human AI Marketing Software

The website is a digital marketing agency that offers AI marketing software to help companies grow smarter. They develop and battle-test AI marketing software to improve marketing results. The software allows for hyper-personalization of email and marketing campaigns, centralizes content strategy with Blueprint SEO, and provides real-time customer insights. The agency also offers articles on AI marketing trends and strategies.

Devath

Devath is the world's first AI-powered SmartHome platform that revolutionizes the way users interact with their smart devices. It eliminates the need for writing extensive lines of code by allowing users to simply give instructions to the AI for seamless device control. With features like splash resistance and responsive design, Devath offers a user-friendly experience for managing smart home functionalities. The platform also enables developers to preview and test their apps before submission, providing a 99% faster publishing process. Devath is continuously evolving with user feedback and aims to enhance the SmartHome experience through AI copilots and customizable features. With Devath, users can control their devices from the web and enjoy free unlimited access to the AI era of SmartHome.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

Framer

Framer is a design tool that allows users to create interactive prototypes for web and mobile applications. With Framer, designers can easily bring their ideas to life by adding animations, transitions, and interactions to their designs. The tool offers a range of features to streamline the design process and enhance collaboration among team members. Framer is popular among UI/UX designers for its intuitive interface and powerful capabilities.

Fyx.ai

Fyx.ai is a cutting-edge AI-powered SaaS platform that revolutionizes the advertising landscape by enabling marketers to create, test, and optimize ads through virtual audience simulations. The platform offers features such as custom virtual audience creation, ad simulation and testing, comprehensive analytics, AI-powered optimization, and integration with major ad platforms. Fyx.ai helps users save millions in ad spend by identifying and targeting responsive audience segments, optimizing campaign performance, and achieving higher ROI. The platform's user-friendly interface provides real-time updates and insights to stay ahead of the competition.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

0 - Open Source AI Tools

20 - OpenAI GPTs

IELTS Writing Test

Simulates the IELTS Writing Test, evaluates responses, and estimates band scores.

Coder Simulator

Provides realistic software developer responses. Asks for clarification, and won't make assumptions. Often begins responses with "It depends".

RansomChatGPT

I'm a ransomware negotiation simulation and analysis bot trained with over 131 real-life negotiations. Type "start negotiation" to begin! New feature: Type "threat actor personality test"

Doomsday Survivor: Social Dynamics Simulation (末日幸存者:社会动态模拟)

Observe, explore, and influence human society after an apocalyptic zombie disaster from a God's-eye perspective. Sponsor: 小红书 (Xiaohongshu) "ItsJoe就出行"

Web App Prototyper

Specializing in crafting cutting-edge web applications using Next.js, prioritizing responsive, accessible design and seamless GitHub Copilot integration.

Test Shaman

Test Shaman: Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Raven's Progressive Matrices Test

Provides Raven's Progressive Matrices test with explanations and calculates your IQ score.

IQ Test Assistant

An AI conducting 30-question IQ tests, assessing and providing detailed feedback.

Test Case GPT

I will provide guidance on testing, verification, and validation for QA roles.