Best AI tools for: Support Multiple GPUs

20 - AI Tool Sites

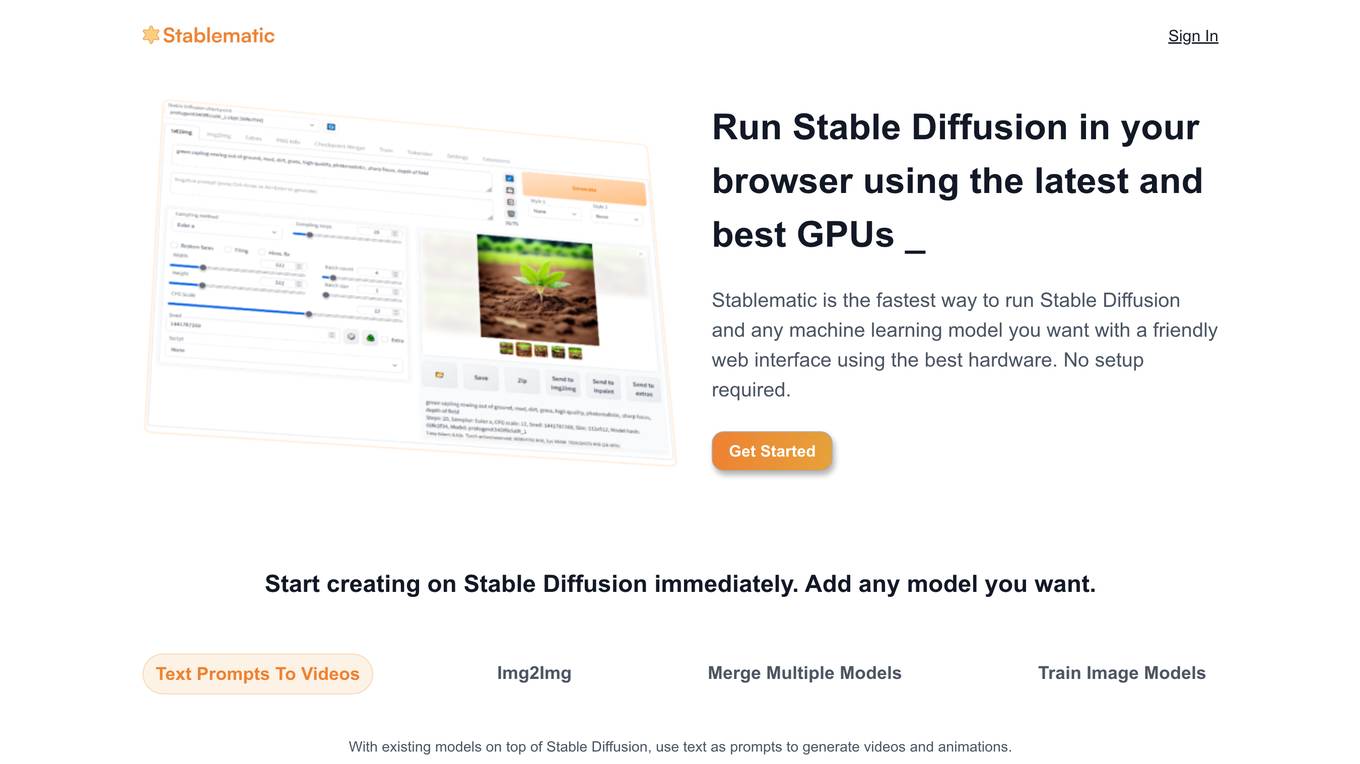

Stablematic

Stablematic is a web-based platform for running Stable Diffusion and other machine learning models without local setup or hardware limitations. It provides a user-friendly interface, pre-installed plugins, and dedicated GPU resources. Users can generate images and videos from text prompts, merge multiple models, train custom models, and access a range of pre-trained models, including DreamBooth and Civitai models. Stablematic also offers API access for developers and dedicated user support.

Juice Remote GPU

Juice Remote GPU is software that runs AI and graphics workloads on remote GPUs. It lets users offload GPU processing for any CUDA or Vulkan application to a remote host running the Juice agent. Because the software injects its CUDA and Vulkan implementations at runtime, the application needs no code changes. Juice supports multiple clients connecting to multiple GPUs, as well as multiple clients sharing a single GPU. This makes it useful for sharing one GPU across several workstations, dynamically allocating GPUs to CPU-only machines, and simplifying development workflows and deployments. When running in the same datacenter, Juice Remote GPU performs within 5% of a local GPU. It supports various APIs, including CUDA, Vulkan, DirectX, and OpenGL, and is compatible with PyTorch and TensorFlow. The team behind Juice Remote GPU includes engineers from Meta, Intel, and the gaming industry.

LiveChatAI

LiveChatAI is an AI chatbot application that works with your own data to provide interactive, personalized customer support. It blends AI and human support to deliver accurate responses, improving customer satisfaction and reducing support volume. With features such as AI Actions, custom questions and answers, and content import, LiveChatAI integrates with businesses across various platforms and languages. The application requires no AI expertise and offers instant localization in 95 languages.

AI Diff Checker Comparator Online

The AI Diff Checker Comparator Online is a comparison tool that uses AI to help users compare multiple text, JSON, and code files side by side. It offers both pairwise and baseline comparison modes. The tool processes files according to their content structure, supports various file types, and provides real-time editing, making it an easy-to-use way to spot differences between files.

15minuteplan.ai

15minuteplan.ai is an AI business plan generator that lets entrepreneurs create professional business plans in under 15 minutes. It guides users through a series of questions and uses language models such as GPT-3.5 and GPT-4 to generate comprehensive plans. It caters to entrepreneurs seeking investor funding or bank loans, as well as anyone who needs a business plan for other purposes, saving time and effort in the planning process.

AI Comic Translate

AI Comic Translate is a comic translation tool that provides fast, accurate, multi-language translation for comic enthusiasts and creators. It offers cost-effective pricing, an easy-to-use interface, and translation between multiple languages, helping comic works break language barriers and reach a global audience.

EmbedAI

EmbedAI is a platform that enables users to create custom AI chatbots powered by ChatGPT. Users can train the AI chatbot on their own data and embed it on their website. The platform allows for efficient management of information and offers automated responses to user queries. EmbedAI supports multiple languages and provides customization options for the chatbot's appearance. It integrates easily with other apps via API or Zapier, making it a versatile tool for enhancing customer engagement and streamlining processes.

ASKTOWEB

ASKTOWEB is an AI-powered service that adds AI search buttons to SaaS landing pages, software documentation pages, and other websites. Visitors can find information without needing specific keywords, making websites more user-friendly. ASKTOWEB also analyzes visitor questions to help improve site content and discover customer needs. The service offers multi-model accuracy verification, direct jump links to references, multilingual chatbot support, installation with a single line of script, and a simple UI without intrusive pop-ups. By answering questions about information already available on the website, ASKTOWEB reduces the load on customer support.

Kel

Kel is an AI assistant designed to operate within the command-line interface (CLI). It helps users automate repetitive tasks, boost productivity, and make their CLI experience smarter and more efficient. Kel supports large language models (LLMs) from multiple providers, including OpenAI, Anthropic, and Ollama. Users can upload files to interact with their artifacts and bring their own API key. The tool is free and open source, and the community can contribute on GitHub. For support, users can reach out to the Kel team.

Doclingo

Doclingo is an AI-powered document translation tool that supports translating documents in various formats such as PDF, Word, Excel, PowerPoint, SRT subtitles, ePub ebooks, AR&ZIP packages, and more. It utilizes large language models to provide accurate and professional translations, preserving the original layout of the documents. Users can enjoy a limited-time free trial upon registration, with the option to subscribe for more features. Doclingo aims to offer high-quality translation services through continuous algorithm improvements.

ttsMP3.com

ttsMP3.com is a free text-to-speech and text-to-MP3 tool that converts text, such as US English, into professional-sounding speech for e-learning, presentations, YouTube videos, website accessibility, and more. It offers a wide range of voices in different languages and accents, including both regular and AI voices. Users can download the generated speech as MP3 files and customize it with breaks, emphasis, speed and pitch adjustments, whispers, and conversations. Supported voice languages and regional variants include Arabic, English, Portuguese, Spanish, Chinese, Danish, Dutch, French, German, Icelandic, Indian, Italian, Japanese, Korean, Mexican, Norwegian, Polish, Romanian, Russian, Swedish, Turkish, and Welsh.

Humanize AI Text

Humanize AI Text is a free online tool that rewrites AI-generated content from ChatGPT, Google Bard, Jasper, QuillBot, Grammarly, or any other AI into human-sounding text without altering its meaning. The platform analyzes the input and produces output that mimics a human writing style. It offers several conversion modes and supports multiple languages. The tool aims to help content creators, bloggers, and writers improve content quality and search engine ranking by making AI-generated text read as if a person wrote it.

Paraphrasing.io

Paraphrasing.io is a free AI paraphrasing tool that helps users rewrite, edit, and adjust the tone of their content for better comprehension. It helps avoid plagiarism in blogs, research papers, and other types of content. The tool offers four paraphrasing modes, each producing a distinct writing style. Writers, bloggers, researchers, students, and general users can use it to make their content more unique, engaging, and readable.

CoeFont

CoeFont is a global AI voice hub offering AI voice solutions that help users get more out of their voices. With features such as a Text-to-Speech Editor, Voice Changer, and AI Voice Creation, CoeFont lets users turn written text into lifelike audio, experiment with voice effects, and monetize their voice talent. The application supports multiple languages, offers a wide range of voices, and provides natural-sounding interaction through real-time conversion. CoeFont promotes inclusivity and accessibility through initiatives such as the Voice for All project, which provides free AI voice services to people at risk of losing their voices.

Corti

Corti is an AI platform that supports clinicians during patient consultations. Its features include Co-Pilot, a proactive AI scribe and assistant for clinicians, and Mission Control, an AI-powered conversation recorder. Corti's AI tools support various healthcare tasks, including high-precision procedure and diagnosis coding, context-aware clinical assistance, and speech and text in multiple languages. The platform is trusted by major hospitals and healthcare providers worldwide.

Spinach

Spinach is an AI-powered tool that transforms meeting discussions into actionable notes and automates post-meeting tasks. It seamlessly integrates with existing tools, supports multiple languages, and ensures enterprise-grade security. Users can effortlessly capture decision points, action items, and status updates, enhancing team collaboration and productivity.

Rodin AI

Rodin AI is a free AI 3D model generator that allows users to instantly create stunning 3D assets, models, and objects powered by AI technology. The platform offers various subscription plans tailored for different user needs, such as business, education, and enterprise. Users can generate 3D designs using AI algorithms and access features like OmniCraft for texture generation, Mesh Editor for model editing, and HDRI Generation for high-quality panoramas. Rodin AI supports multiple languages and provides advanced options for subscribers to enhance their 3D modeling experience.

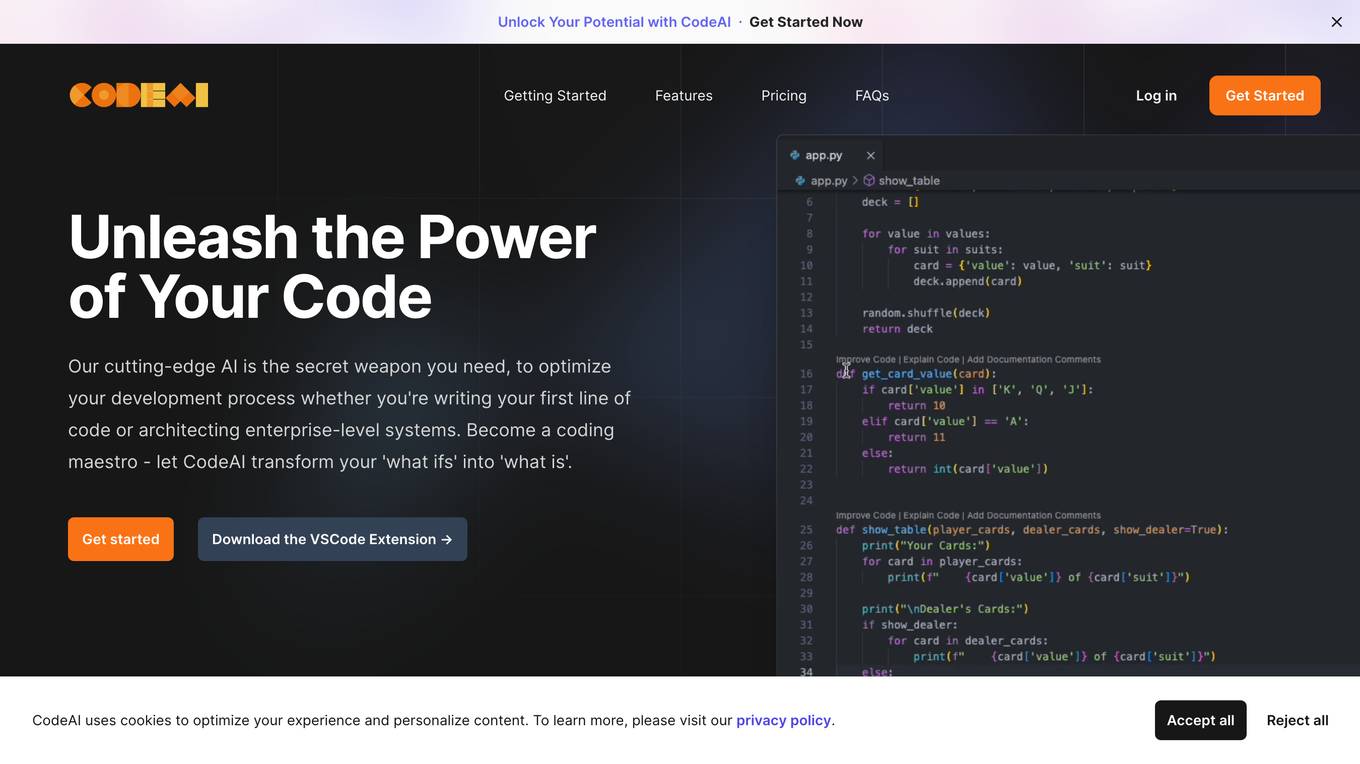

CodeAI

CodeAI is an advanced AI tool designed to optimize the development process for coders of all levels. It offers cutting-edge AI capabilities to enhance coding efficiency, provide real-time feedback, automate tasks like generating commit messages and updating changelogs, and boost productivity. CodeAI supports multiple programming languages and is suitable for individual developers, small teams, and professionals working on various projects.

Spicy Chat AI

Spicy Chat AI is an innovative AI application that allows users to engage in uncensored adult-themed conversations with lifelike NSFW Character AI. Users can create virtual personas, enjoy uninhibited chats, and experience emotional depth in a safe and expressive environment. The platform prioritizes user privacy with SSL encryption and compliance with data protection standards. Spicy Chat AI offers both free and premium plans, supporting multiple languages and unique features like AI voice response and chat-based image generation.

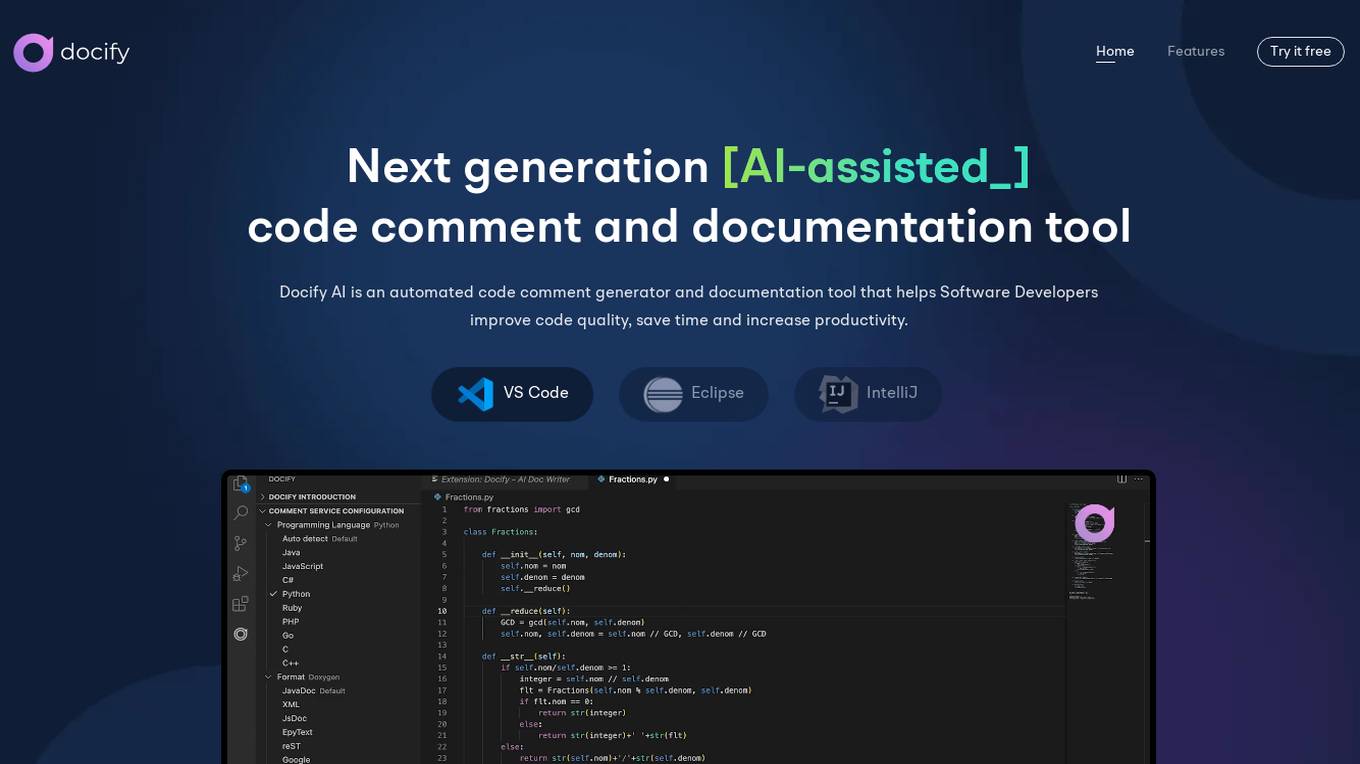

Docify AI

Docify AI is an AI-assisted code comment and documentation tool designed to help software developers improve code quality, save time, and increase productivity. It offers features such as automated documentation generation, comment translation, inline comments, and code coverage analysis. The tool supports multiple programming languages and provides a user-friendly interface for efficient code documentation. Docify AI is built on proprietary AI models, ensuring data privacy and high performance for professional developers.

1 - Open Source AI Tools

FluidFrames.RIFE

FluidFrames.RIFE is a Windows app powered by RIFE AI for generating intermediate video frames and creating slow-motion videos. It is written in Python and uses external packages such as torch, onnxruntime-directml, customtkinter, OpenCV, moviepy, and Nuitka. Features include an elegant GUI, frame generation at different speeds, video slow motion, video resizing, multiple GPU support, and compatibility with various video formats. Future versions aim to support different GPU types, improve the GUI, add audio processing, speed up video processing, and introduce new features such as saving AI-generated frames and supporting different RIFE AI models.

20 - OpenAI GPTs

Social Mentor One Gpt

I generate draft posts for sharing news articles on Facebook, Instagram, LinkedIn, X, and Threads, starting from a link. 👇 Just paste the link without writing anything else and press Enter.

Marketing Scribe

I'm a creative bot crafting engaging social posts in English and Dutch, informed by extensive copywriting resources.

Multiple Sclerosis MS Companion

Friendly and conversational MS companion, empathetic and informative.

Directv Packages - How To Guide 3 Months Free

Comprehensive guide to Directv packages and the various offers available.

LightingGPT

LightingGPT is an AI system created by Lightinology, specifically designed to answer a wide range of questions about lighting and optics. It supports multiple languages.

Learn WCAG2.2 (Web Accessibility)

This GPT is designed to help you learn the Web Content Accessibility Guidelines (WCAG) 2.2. It supports multiple languages.

CreceTube Experto

Multilingual assistant for video content creation, offering support and creative advice in multiple languages.

Ekko Support Specialist

How to be a master of surprise plays and unconventional strategies in the bot lane as a support role.

Backloger.ai - Support Log Analyzer and Summary

Drop your support log here to automatically generate concise summaries for reporting to the tech team.

Tech Support Advisor

From setting up a printer to troubleshooting a device, I’m here to help you step-by-step.