Best AI tools for Support Multimodal Models

20 - AI tool Sites

Roboflow

Roboflow is an AI tool designed for computer vision tasks, offering a platform that allows users to annotate, train, deploy, and perform inference on models. It provides integrations, ecosystem support, and features like notebooks, autodistillation, and supervision. Roboflow caters to various industries such as aerospace, agriculture, healthcare, finance, and more, with a focus on simplifying the development and deployment of computer vision models.

Sightengine

Sightengine offers content moderation and image analysis products whose APIs automatically assess, filter, and moderate images, videos, and text. It provides features such as image moderation, video moderation, text moderation, AI image detection, and video anonymization. It helps detect unwanted content, AI-generated images, and personal information in videos. It also offers tools to identify near-duplicates, spam, and abusive links, and to prevent phishing and circumvention attempts. The platform is described as fast, scalable, accurate, easy to integrate, and privacy compliant, making it suitable for industries such as marketplaces, dating apps, and news platforms.

Reka

Reka is a cutting-edge AI application offering next-generation multimodal AI models that empower agents to see, hear, and speak. Their flagship model, Reka Core, competes with industry leaders like OpenAI and Google, showcasing top performance across various evaluation metrics. Reka's models are natively multimodal, capable of tasks such as generating textual descriptions from videos, translating speech, answering complex questions, writing code, and more. With advanced reasoning capabilities, Reka enables users to solve a wide range of complex problems. The application provides end-to-end support for 32 languages, image and video comprehension, multilingual understanding, tool use, function calling, and coding, as well as speech input and output.

FuriosaAI

FuriosaAI is an AI hardware company that offers the RNGD accelerator for LLM and multimodal workloads, as well as WARBOY for computer vision. It provides a developer experience built around the Furiosa SDK, Model Zoo, and Dev Support. The company focuses on efficient AI inference, high-performance LLM and multimodal deployment, and sustainable mass adoption of AI. FuriosaAI features the Tensor Contraction Processor architecture, software for streamlined LLM deployment, and robust ecosystem support. It aims to deliver powerful and efficient deep learning acceleration while keeping the hardware programmable for future workloads.

Anthropic

Anthropic is a research and deployment company founded in 2021 by a group of former OpenAI researchers, including Dario Amodei and Daniela Amodei. The company develops large language models, including Claude, a multimodal AI model that can perform a variety of language-related tasks, such as answering questions, generating text, and translating languages.

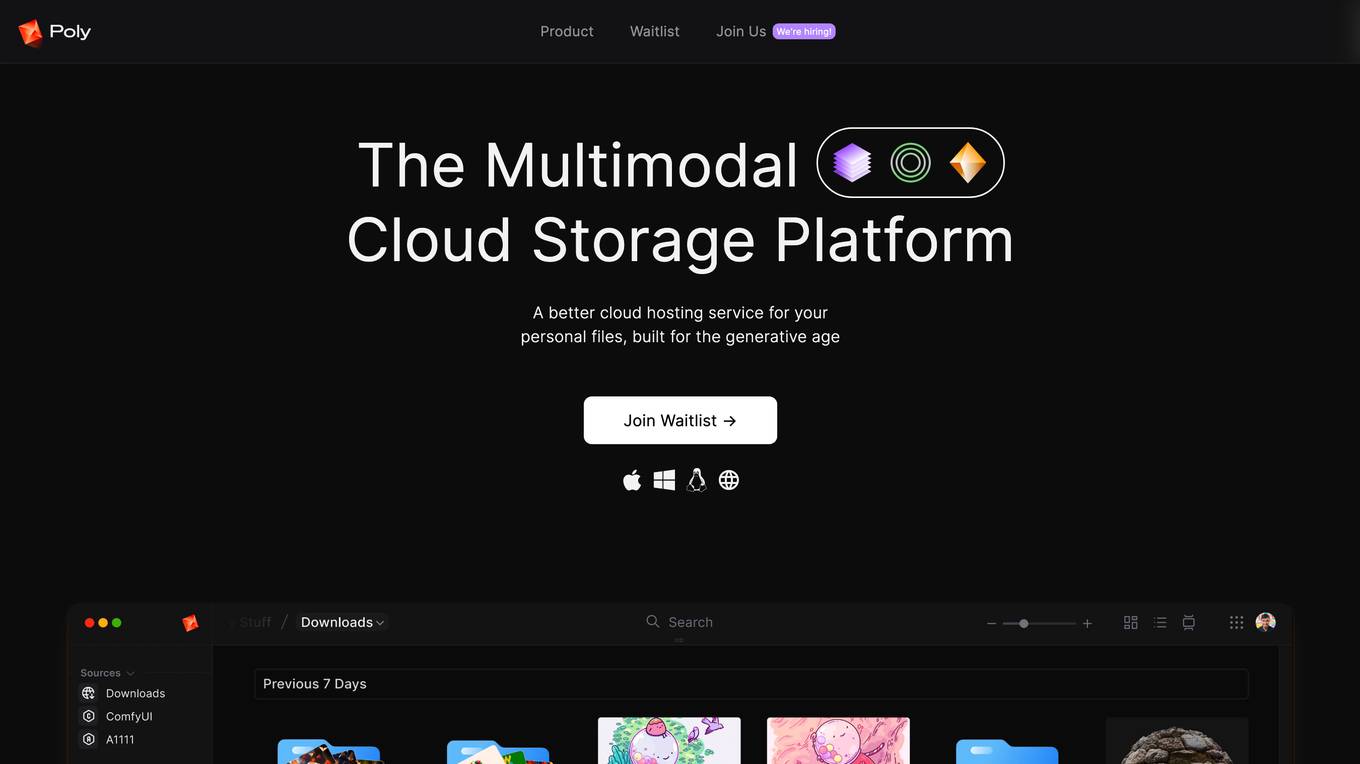

Poly

Poly is a next-generation intelligent cloud storage platform that is built for the generative age. It offers a better cloud hosting service for your personal files, with features such as AI-enabled multimodal search, customizable layouts, dynamic collections, and one-click asset conversion. Poly is also designed to support outputs from your preferred generative AI models, including Automatic1111, ComfyUI, DALL-E, and Midjourney. With Poly, you can browse, manage, and navigate all your media generated by AI, and seamlessly connect and auto-import your files from your favorite apps.

Free ChatGPT Omni (GPT4o)

Free ChatGPT Omni (GPT4o) is a user-friendly website that allows users to effortlessly chat with ChatGPT for free. It is designed to be accessible to everyone, regardless of language proficiency or technical expertise. GPT4o is OpenAI's groundbreaking multimodal language model that integrates text, audio, and visual inputs and outputs, revolutionizing human-computer interaction. The website offers real-time audio interaction, multimodal integration, advanced language understanding, vision capabilities, improved efficiency, and safety measures.

NEX

NEX is a controllable AI image generation suite for product and creative imagery. It offers a variety of multimodal controls to generate and reimagine images according to user preferences. With IP-consistent models and team workspaces, NEX helps users bring their creative ideas to life. The tool supports fine-grained controls like pose, color, and character consistency, making it suitable for creative tasks across industries such as media, entertainment, gaming, and fashion. NEX provides data-safe workspaces, private and custom-built AI models, and tailored generative media models for professional use.

ChatGPT4o

ChatGPT4o is OpenAI's latest flagship model, capable of processing text, audio, image, and video inputs, and generating corresponding outputs. It offers both free and paid usage options, with enhanced performance in English and coding tasks, and significantly improved capabilities in processing non-English languages. ChatGPT4o includes built-in safety measures and has undergone extensive external testing to ensure safety. It supports multimodal inputs and outputs, with advantages in response speed, language support, and safety, making it suitable for various applications such as real-time translation, customer support, creative content generation, and interactive learning.

GPT40

GPT40.net is a platform where users can interact with the latest GPT-4o model from OpenAI. The tool offers free and paid options for users to ask questions and receive answers in various formats such as text, audio, image, and video. GPT40 is designed to provide natural and intuitive human-computer interactions through its multimodal capabilities and fast response times. It ensures safety through built-in measures and is suitable for applications like real-time translation, customer support, content generation, and interactive learning.

NEX

NEX is a controllable AI image generation suite for product and creative imagery. It offers a variety of multimodal controls, IP-consistent models, and team workspaces to bring ideas to life. With fine-grained controls like pose, color, and character consistency, NEX supports a wide range of creative tasks. It provides tailored generative media models for various applications, private and custom-built AI models, and collaborative workspaces for secure data sharing. NEX is aimed at creative enterprises in media and entertainment, gaming, fashion, and more, and claims up to 10x cost reduction in model development compared to competitors.

Shaped

Shaped is an AI tool designed to provide relevant recommendations and search results to increase engagement, conversion, and revenue. It offers a configurable system that adapts in real-time, with features such as easy set-up, real-time adaptability, state-of-the-art model library, high customizability, and explainable results. Shaped is suitable for technical teams and offers white-glove support. It specializes in real-time ranking systems and supports multi-modal unstructured data understanding. The tool ensures secure infrastructure and has advantages like increased redemption rate, average order value, and diversity.

Ray 2

Ray 2 is an advanced AI video generation tool that offers a cutting-edge solution for creators and businesses to produce high-quality videos effortlessly. With features like realistic video outputs, text-to-video capability, multi-modal input support, and production-ready results, Ray 2 is designed to streamline the video creation process. Users can experience seamless coherent motion, high resolution output, advanced text understanding, dynamic aspect ratios, and fast processing, making it a game-changer in the field of video generation.

Wordware

Wordware is an AI toolkit that empowers cross-functional teams to build reliable high-quality agents through rapid iteration. It combines the best aspects of software with the power of natural language, freeing users from traditional no-code tool constraints. With advanced technical capabilities, multiple LLM providers, one-click API deployment, and multimodal support, Wordware offers a seamless experience for AI app development and deployment.

Gnani.ai

Gnani.ai is an AI application that offers a suite of agentic AI solutions for enterprises, including Inya Workforce for AI automation, Inya Assist for real-time agent support, Inya Shield for voice biometrics, and Inya Insights for call analytics. The platform enables businesses to build and deploy voice and chat agents quickly without the need for coding. With features like automated QA, voice biometrics, and real-time call analytics, Gnani.ai helps businesses improve customer experience, increase operational efficiency, and drive revenue growth across various industries.

GPT-4o

GPT-4o is an advanced multimodal AI platform developed by OpenAI, offering a comprehensive AI interaction experience across text, imagery, and audio. It excels in text comprehension, image analysis, and voice recognition, providing swift, cost-effective, and universally accessible AI technology. GPT-4o democratizes AI by balancing free access with premium features for paid subscribers, revolutionizing the way we interact with artificial intelligence.

Wan 2.5.AI

Wan 2.5.AI is a revolutionary native multimodal video generation platform that offers synchronized audio-visual generation with cinematic quality output. It features a unified framework for text, image, video, and audio processing, advanced image editing capabilities, and human preference alignment through RLHF. Wan 2.5.AI is designed to transform creative challenges, support AI research and development, enhance interactive education, and facilitate creative prototyping.

nunu.ai

nunu.ai is an AI-powered platform designed to revolutionize game testing by leveraging AI agents to conduct end-to-end tests at scale. The platform allows users to describe what they want to test in plain English, eliminating the need for coding or technical expertise. With powerful dashboards and detailed reports, nunu.ai provides real-time monitoring of test outcomes. The platform offers cost reduction, 24/7 availability, easy integration, human-like testing, multi-platform support, minimal maintenance, multiplayer testing capabilities, and enterprise-grade security.

Google Gemini Pro Chat Bot

Google Gemini Pro Chat Bot is an advanced AI tool designed to provide automated chatbot services for businesses. It utilizes artificial intelligence to engage with customers, answer queries, and assist in various tasks. The chatbot is highly customizable, allowing businesses to tailor the responses and interactions based on their specific needs. With its user-friendly interface and powerful AI capabilities, Google Gemini Pro Chat Bot is a valuable tool for enhancing customer support and streamlining communication processes.

CONVA

CONVA is a platform that allows developers to add voice-first AI copilot functionality to their mobile and web apps. It provides natural, multilingual, and multimodal conversational AI experiences for app users. CONVA's voice copilot can help users with tasks such as product discovery, search, navigation, and recommendations. It is easy to integrate and can be used across multiple platforms, including iOS, Android, Flutter, React, Web, and Shopify. CONVA also offers pre-trained category-specific voice copilot, cross-platform availability, multilingual support, demand insights, and a full-stack solution for voice and text-driven search, action, navigation, and recommendations.

0 - Open Source AI Tools

20 - OpenAI GPTs

Ekko Support Specialist

How to be a master of surprise plays and unconventional strategies in the bot lane as a support role.

Backloger.ai - Support Log Analyzer and Summary

Drop your support log here, and it automatically generates concise summaries for reporting to the tech team.

Tech Support Advisor

From setting up a printer to troubleshooting a device, I’m here to help you step-by-step.

Z Support

Expert in Nissan 370Z & 350Z modifications, offering tailored vehicle upgrade advice.

Emotional Support Copywriter

A creative copywriter you can hang out with and who won't do their timesheets either.

PCT 365 Support Bot

Microsoft 365 support agent, redirects admin-level requests to PCT Support.

Technischer Support Bot

A bot that provides basic technical support and troubleshooting for common software and hardware.

Military Support

Supportive and informative guide on military, veterans, and military assistance.

Dror Globerman's GPT Tech Support

Your go-to assistant for everyday tech support and guidance.

Customer Support Assistant

Expert in crafting empathetic, professional emails for customer support.