Best AI tools for Submit Evaluation

20 - AI tool Sites

Beauty.AI

Beauty.AI is an AI application that hosts an international beauty contest judged by artificial intelligence. The app allows humans to submit selfies for evaluation by AI algorithms that assess criteria linked to human beauty and health. The platform aims to challenge biases in perception and promote healthy aging through the use of deep learning and semantic analysis. Beauty.AI offers a unique opportunity for individuals to participate in a groundbreaking competition that combines technology and beauty standards.

Legal Benchmarks

Legal Benchmarks is a platform that provides independent, lawyer-led evaluations of AI tools for in-house legal work. The platform evaluates AI assistants on critical legal tasks such as contract drafting and information extraction. It ranks AI tools by how they perform on real-world legal tasks, helping legal teams understand and adopt AI solutions. Legal Benchmarks also allows legal AI vendors to submit their tools for evaluation and provides access to customized private reports, insights, and practical breakdowns of AI tools' performance.

The Future of Recruitment

The Future of Recruitment is an AI-powered platform that revolutionizes the job search process by allowing users to upload their resume, customize their dream job criteria, and receive feedback from an AI algorithm. The platform combines technology with satire to provide a unique and entertaining experience for job seekers. It ensures privacy by promptly discarding resumes after processing and focuses on improving recruitment practices through data analysis.

Ask AI Vet

Ask AI Vet is a free online service where users can ask veterinary questions and receive AI-generated answers within 5 minutes. The platform has already answered over 13,000 questions and is designed to provide general recommendations and preliminary evaluations for pet owners. Users can submit questions anonymously and receive responses from the AI Vet virtual assistant, which is not a replacement for professional care but can offer valuable insights on pet health and well-being.

LifeShack

LifeShack is an AI-powered job search tool that revolutionizes the job application process. It automates job searching, evaluation, and application submission, saving users time and increasing their chances of landing top-notch opportunities. With features like automatic job matching, AI-optimized cover letters, and tailored resumes, LifeShack streamlines the job search experience and provides peace of mind to job seekers.

The AI Art Magazine

The AI Art Magazine is a platform that celebrates the fusion of human creativity and intelligent machines, showcasing the evolution of art in the digital age. It brings AI art into print, crafting a shared narrative and highlighting the creative ways artists engage with AI. The magazine invites artists and non-artists to explore the intersection of art and technology, providing a central point for submissions and a platform for contributors to share their AI art and stories. With a jury that includes an AI member, the magazine selects artistic works that challenge and inspire, aiming to spread knowledge and passion for AI art to new audiences.

Quicklisting

Quicklisting is an AI-powered tool that helps startups submit their information to over 200 directories and 500 newsletters. It automates the submission process, saving startups time and effort. Quicklisting also provides startups with access to a database of directories and newsletters, making it easy for them to find the right ones to submit to. With Quicklisting, startups can increase their online visibility, reach a wider audience, and get more backlinks to their website.

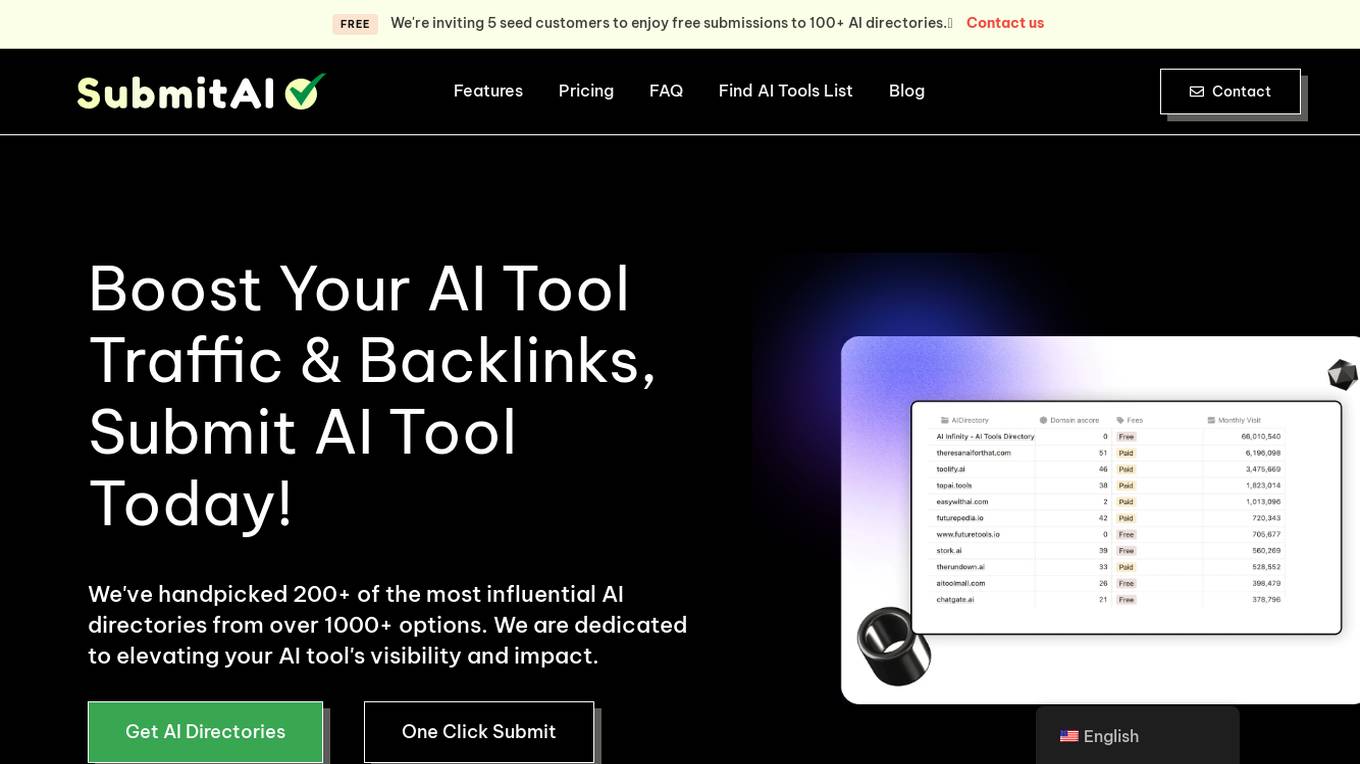

SubmitAI

SubmitAI is an AI tool that offers a service to submit AI tools to over 100 directories, aiming to enhance visibility and impact for AI products. The platform handpicks influential directories to optimize traffic and backlinks, providing a seamless submission process. Users can choose from different submission plans to save time and effort, with detailed reports and data insights included. SubmitAI also offers community engagement opportunities within the AI industry, fostering collaboration and networking. The tool prioritizes user satisfaction and data security, ensuring encrypted information for directory submissions.

Hair Shop Directory

Hair Shop Directory is an AI tool that helps users discover and submit hair stores. It provides a comprehensive list of hair products such as wigs, extensions, weaves, lace closures, and more. Users can easily compare different vendors and find their favorite hair stores. The platform is free to use and updates its hair shop lists daily. It also features an AI Hairstyle Changer tool that is currently under development.

Altern

Altern is a platform where users can discover and share the latest tools, products, and resources related to artificial intelligence (AI). Users can sign up to join the community and access a wide range of AI tools, companies, reviews, and newsletters. The platform features a curated list of top AI tools and products, as well as user-generated content. Altern aims to connect AI enthusiasts and professionals, providing a space for learning, collaboration, and innovation in the field of AI.

Orbic AI

Orbic AI is a premier AI listing directory that serves as the ultimate hub for developers, offering a wide range of AI tools, GPT stores, and AWS PartyRocks. With over 600,000 registered pages and counting, Orbic AI provides a platform for developers to discover and access cutting-edge AI technologies. The platform is designed to streamline the process of finding and utilizing AI tools, GPT stores, and applications, catering to the needs of developers across various domains. Built with NextGenAIKit, Orbic AI is a comprehensive resource for developers seeking innovative solutions in the AI space.

Roast My Job Application

Roast My Job Application is an AI-powered tool designed to provide brutally honest feedback on job applications. Users can submit their cover letters for review by Coda AI, specifically by Ubel, a sarcastic and unapologetic recruiter. The tool simulates a recruitment process at Omnicorp Inc., a fictional company, offering various positions to apply for. The application is AI-generated and securely stores user data for composing rejection letters.

Afroverse

Afroverse is an AI-powered music investment platform designed specifically for the Afrobeat industry. It provides tools for demo submissions, cross-border collaborations, music distribution, investment opportunities, community engagement, and more.

Aigclist

Aigclist is a website that provides a directory of AI tools and resources. The website is designed to help users find the right AI tools for their needs. Aigclist also provides information on AI trends and news.

Free AI Apps Directory

The Free AI Apps Directory is a curated list of all free AI applications available for immediate use. Users can easily find and explore various AI apps through this platform. The website provides information on app launch status, device compatibility, and allows users to submit new apps. It is a valuable resource for individuals interested in leveraging AI technology for different purposes.

SEO Roast

SEO Roast is an AI-powered SEO tool that offers SEO audits, action plans, and strategies to help websites improve their search engine rankings. The tool provides detailed video analysis, step-by-step implementation guides, priority lists for fixing issues, and a 6-month guarantee for results. Trusted by founders and businesses, SEO Roast simplifies complex SEO concepts into actionable insights, delivering immediate wins and long-term growth strategies. With a focus on data-driven analysis and tailored recommendations, SEO Roast helps businesses achieve a 300% increase in organic traffic and 5x more qualified leads. The tool uncovers hidden opportunities, provides backlink opportunities, and offers customized action plans to outrank competitors.

RestoGPT AI

RestoGPT AI is a Restaurant Marketing and Sales Platform designed to help local restaurants streamline their online ordering and delivery processes. It acts as an AI employee that manages the entire online business, from building a branded website to customer database management, order processing, delivery dispatch, menu maintenance, customer support, and data-driven marketing campaigns. The platform aims to increase customer retention, generate more direct orders, and improve overall efficiency in restaurant operations.

Brandity.ai

Brandity.ai is an AI-powered brand identity tool that helps users generate complete visual identities quickly and efficiently. The tool utilizes advanced algorithms to adapt to users' brand needs and preferences, maintaining a consistent style across all brand assets. Brandity's AI-driven identity generation ensures coherence and uniqueness in brand identities, from color schemes to art styles, tailored to fit each brand's unique requirements. The tool offers a range of pricing plans suitable for individuals, SMEs, agencies, and high-conversion entities, providing flexibility and scalability in generating logo, scenes, props, and patterns. With Brandity, users can kickstart their brand identity in less than 5 minutes, saving time and ensuring a compelling brand image across various applications.

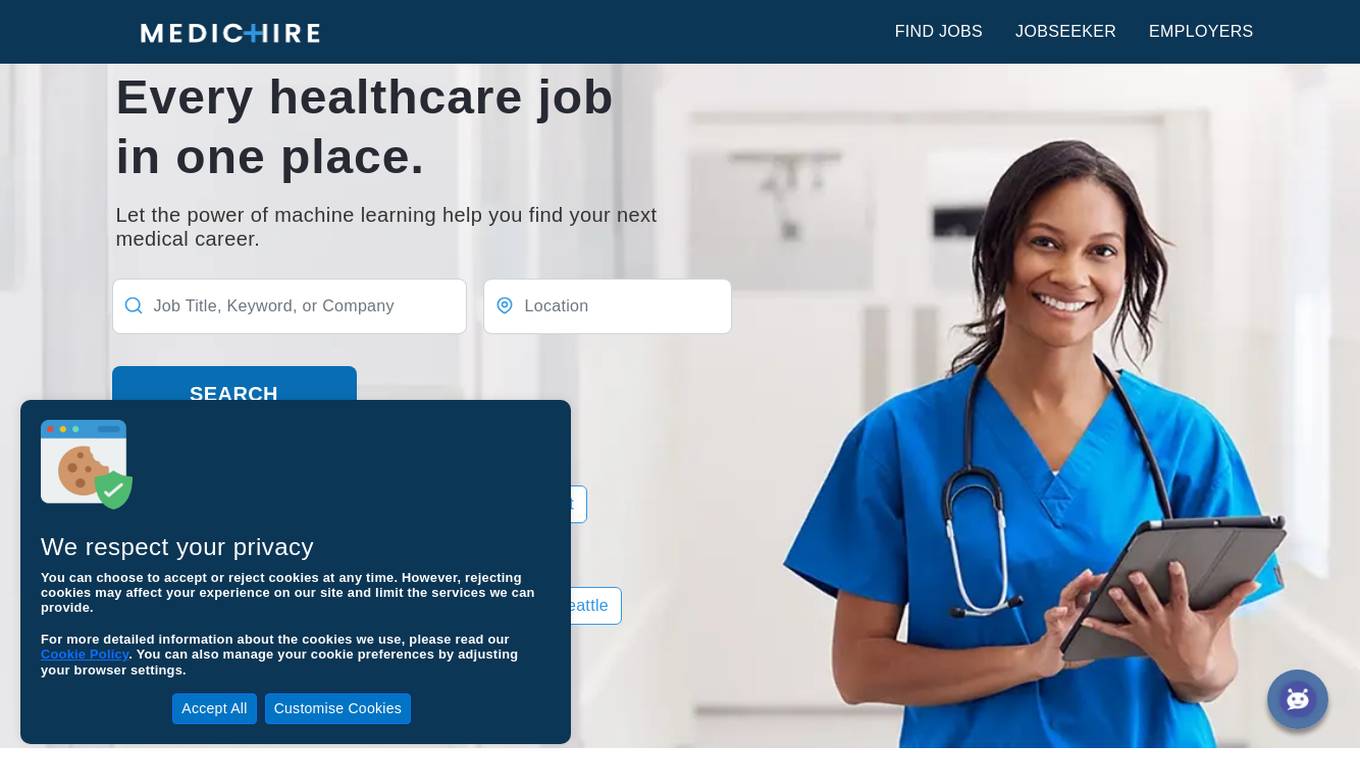

MedicHire

MedicHire is an AI-powered job search engine focused on medical and healthcare professions. It leverages machine learning to provide a comprehensive platform for job seekers and employers in the healthcare industry. The website offers a unique Web Story format for job listings, combining storytelling and technology to enhance the job discovery experience. MedicHire aims to simplify healthcare hiring by automating the recruitment process and connecting top talent with leading healthcare companies.

Content Assistant

Content Assistant is an AI-powered browser extension that revolutionizes content creation and interaction. It offers smart context retrieval, conversational capabilities, custom prompts, and unlimited use cases for enhancing content experiences. Users can effortlessly create, edit, review, and engage with content through speech-to-text functionality. The extension transforms the content experience by providing AI-generated responses based on prompts and context, ultimately improving content composition and review processes.

1 - Open Source AI Tools

CJA_Comprehensive_Jailbreak_Assessment

This public repository contains the paper 'Comprehensive Assessment of Jailbreak Attacks Against LLMs'. It provides a Python method for labeling results and lets users submit evaluation results to the leaderboard. The full code will be released after the paper is accepted.

20 - OpenAI GPTs

Metaverse Radio GPT

* Submit Your Music * Get Acquainted * Music * News * Talk * Broadcasting EVERYWHERE 24/7 * Metaverse Radio WMVR-db Chicago (www.Metaverse.Radio) * Ideal for music lovers and creators, it offers album art creation, music submission guidance, and a splash of humor.

EE-GPT

A search engine and troubleshooter for electrical engineers to promote an open-source community. Submit your questions, corrections and feedback to [email protected]

Pawtrait Creator

Creates cartoon pet portraits. Upload a photo of your pet, type its name, submit it, and watch the magic happen.

Better GPT Builder

Guides users in creating GPTs with a structured approach. Experimental! See https://github.com/allisonmorrell/gptbuilder for background, full prompts and files, and to submit ideas and issues.

Winternet - (Project Proposals)

Assists with Information Technology related project proposal creation and submission.

(Unofficial) Bullhorn Support Agent

I am not affiliated with Bullhorn, nor do I have rights to this software. For this, please visit Bullhorn.com as they are the owner. The rights holders may ask me to remove this test bot.

Project Deliverable Submission Advisor

Guides project teams towards successful deliverable submissions.

Borrower's Defense Assistant

Assistance in understanding and filling out the Borrower's Defense to Repayment Form provided by the United States Department of Education.

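Hur bra är remissvaret? (How good is the consultation response?)

Get feedback on how well a consultation response (remissvar) meets the guidelines of the Swedish Government Offices (Regeringskansliet) for how such responses should be written.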

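孙溢高级护理职称申报材料准备助手 (Sun Yi's Senior Nursing Title Application Materials Assistant)

An assistant that helps you prepare the materials needed to apply for a senior nursing professional title. Based on information such as the title level you are applying for, your specialty, and the institution you are applying through, it generates a checklist of application materials that meets the format and content requirements, including the application form, the assessment form, clinical achievements, and other documents. It can also provide references and sample texts to help you refine and improve your application materials.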