Best AI tools for Software Testing

20 - AI tool Sites

Testlio

Testlio is a trusted software testing partner that maximizes software testing impact by offering comprehensive solutions for quality challenges. They provide a range of services including manual and automated testing, tailored testing strategies for diverse industries, and a cutting-edge platform for seamless collaboration. Testlio's AI-enhanced solutions help reduce risk in high-stakes releases and ensure smarter decision-making. With a focus on quality, reliability, and efficiency, Testlio is a proven partner for mission-critical quality assurance.

Momentic

Momentic is a purpose-built AI tool for modern software testing, offering automation for E2E, UI, API, and accessibility testing. It leverages AI to streamline testing processes, from element identification to test generation, helping users shorten development cycles and enhance productivity. With an intuitive editor and the ability to describe elements in plain English, Momentic simplifies test creation and execution. It supports local testing without the need for a public URL, smart waiting for in-flight requests, and integration with CI/CD pipelines. Momentic is trusted by numerous companies for its efficiency in writing and maintaining end-to-end tests.

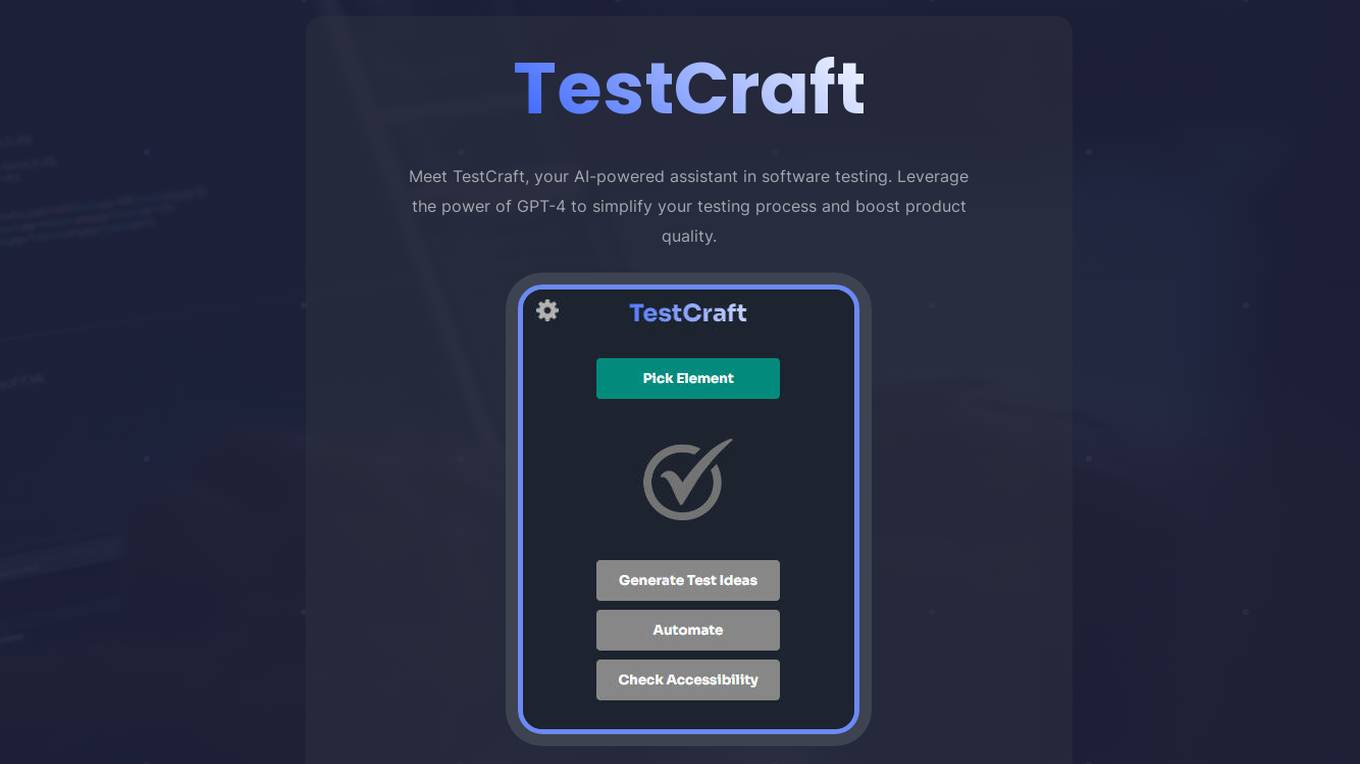

TestCraft

TestCraft is an AI-powered assistant in software testing that leverages the capabilities of GPT-4 to simplify the testing process and enhance product quality. It generates automated tests for various automation frameworks and programming languages, helps in ideation by producing innovative test ideas, ensures project accessibility by identifying potential issues, and streamlines the testing process by transforming test ideas into automated tests. TestCraft aims to make software testing more efficient and effective.

TestArmy

TestArmy is an AI-driven software testing platform that offers an army of testing agents to help users achieve software quality by balancing cost, speed, and quality. The platform leverages AI agents to generate Gherkin tests based on user specifications, automate test execution, and provide detailed logs and suggestions for test maintenance. TestArmy is designed for rapid scaling and adaptability to changes in the codebase, making it a valuable tool for both technical and non-technical users.

Teste.ai

Teste.ai is an AI-powered platform for creating software testing scenarios and test cases. It offers a variety of AI-based tools that accelerate software quality testing, helping testers cover a wide range of requirements with a broad set of test scenarios. Teste.ai's features help users save time when creating, executing, and managing software tests. The platform automatically generates test cases from software documentation or specific requirements, aiming for comprehensive test coverage and precise answers to testing queries.

Abstracta Solutions

Abstracta Solutions is an AI software development company that provides holistic solutions for software quality. They offer services such as AI software development, testing strategy, functional testing, test automation, performance testing, tool development, accessibility testing, security testing, and DevOps services. Abstracta Solutions empowers organizations with AI-driven solutions to streamline software development processes and enhance customer experiences. They focus on continuously delivering high-quality software by co-creating quality strategies and leveraging expertise in different areas of software development.

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

Parasoft

Parasoft is an intelligent automated testing and quality platform that offers a range of tools covering every stage of the software development lifecycle. It provides solutions for compliance standards, automated software testing, and various industries' needs. Parasoft helps users accelerate software delivery, ensure quality, and comply with safety and security standards.

BugRaptors

BugRaptors is an AI-powered quality engineering services company that offers a wide range of software testing services. They provide manual testing, compatibility testing, functional testing, UAT services, mobile app testing, web testing, game testing, regression testing, usability testing, crowdsourced testing, automation testing, and more. BugRaptors leverages AI and automation to deliver QA services, ensuring a seamless customer experience and aligning with DevOps automation goals. They have developed proprietary tools like MoboRaptors, BugBot, RaptorVista, RaptorGen, RaptorHub, RaptorAssist, RaptorSelect, and RaptorVision to enhance their services and provide quality engineering solutions.

Tricentis

Tricentis is an AI-powered testing tool that offers a comprehensive set of test automation capabilities to address various testing challenges. It provides end-to-end test automation solutions for a wide range of applications, including Salesforce, mobile testing, performance testing, and data integrity testing. Tricentis leverages advanced ML technologies to enable faster and smarter testing, ensuring quality at speed with reduced risk, time, and costs. The platform also offers continuous performance testing, change and data intelligence, and model-based, codeless test automation for mobile applications.

Supertest

Supertest is an AI copilot designed for software testing, offering a cutting-edge solution to revolutionize the way unit tests are written. By integrating seamlessly with VS Code, Supertest allows users to create unit tests in seconds with just one click. The tool automates various day-to-day QA engineering tasks using AI technology, providing a game-changing solution for development teams to save time and improve efficiency.

Momentic

Momentic is an AI testing tool that offers automated testing for software applications. It streamlines regression testing, production monitoring, and UI automation. Momentic is designed to be simple to set up and easy to maintain, and it accelerates team productivity by letting users create and deploy tests faster with its intuitive low-code editor. The tool adapts to application changes, saves time with automated test maintenance, and runs tests in the cloud, locally, or in CI/CD pipelines.

Autify

Autify is an AI testing company focused on solving challenges in automation testing. They aim to make software testing faster and easier, enabling companies to release faster and maintain application stability. Their flagship product, Autify No Code, allows anyone to create automated end-to-end tests for applications. Zenes, their new product, simplifies the process of creating new software tests through AI. Autify is dedicated to innovation in the automation testing space and is trusted by leading organizations.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

Qodex

Qodex is an AI-powered QA tool designed for end-to-end API testing, built by developers for developers. It offers enterprise-level QA efficiency with full automation and zero coding required. The tool auto-generates tests in plain English and adapts as the product evolves. Qodex also provides interactive API documentation and seamless integration, making it a cost-effective solution for enhancing productivity and efficiency in software testing.

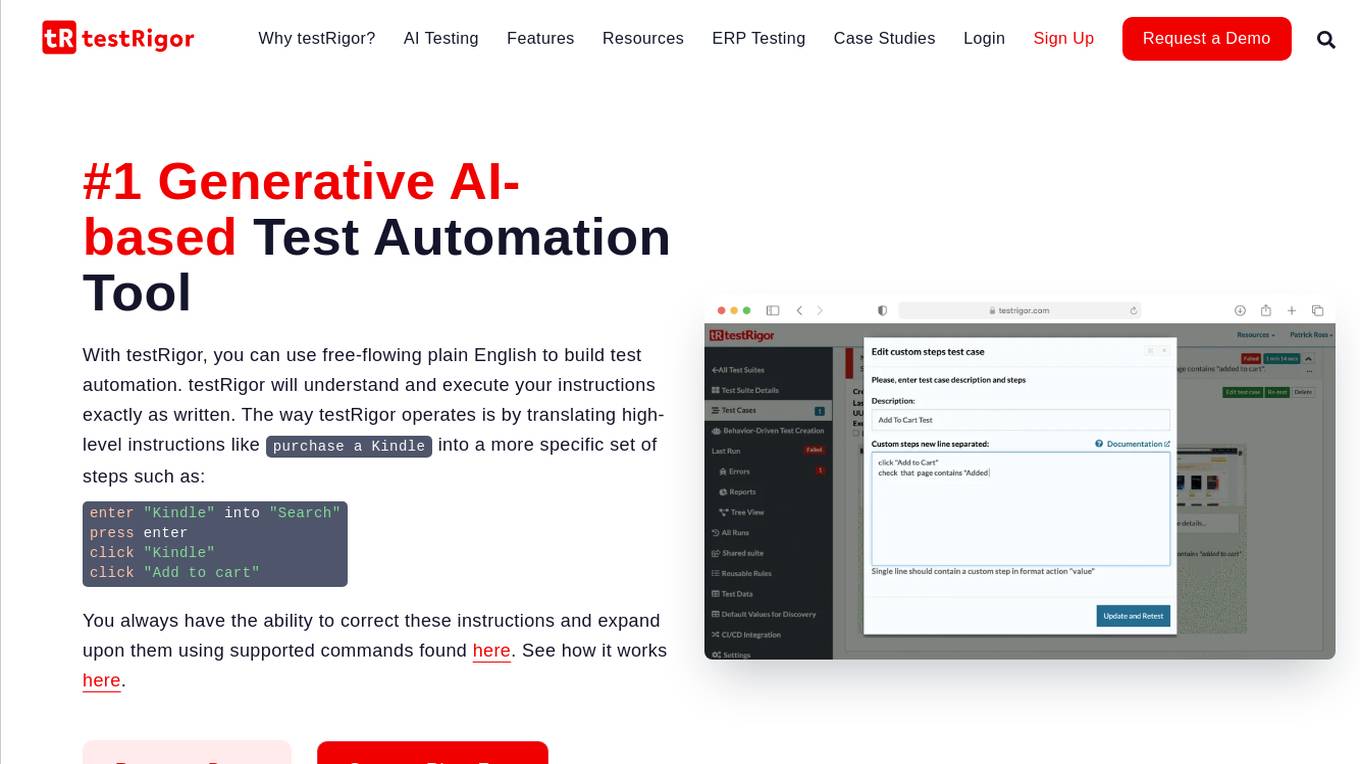

testRigor

testRigor is an AI-based test automation tool that allows users to create and execute test cases using plain English instructions. It leverages generative AI in software testing to automate test creation and maintenance, offering features such as no code/codeless testing, web, mobile, and desktop testing, Salesforce automation, and accessibility testing. With testRigor, users can achieve test coverage faster and with minimal maintenance, enabling organizations to reallocate QA engineers to build API tests and increase test coverage significantly. The tool is designed to simplify test automation, reduce QA headaches, and improve productivity by streamlining the testing process.

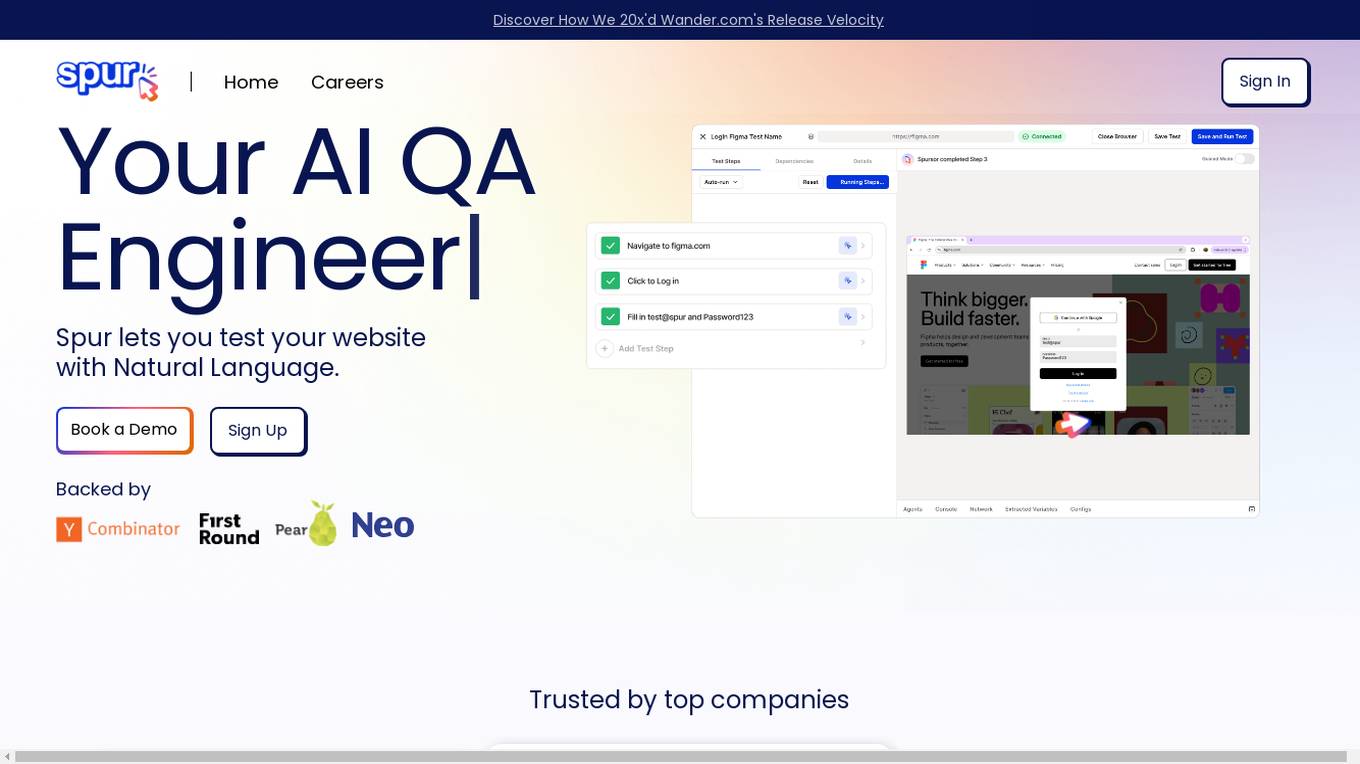

Spur

Spur is an AI QA tool that allows users to test websites using natural language, eliminating the need for complex test scripts. It offers reliable automated tests that adapt to UI changes, real-time playback for debugging, and powerful validations. Spur's AI-powered tests reduce manual testing time, improve software testing processes, and ensure the reliability of tests even with site changes. The tool is user-friendly, requires no coding skills, and supports API testing.

ACCELQ

ACCELQ is a powerful AI-driven test automation platform that offers codeless automation for web, desktop, mobile, and API testing. It provides a unified platform for continuous delivery, full-stack automation, and manual testing integration. ACCELQ is known for its industry-first no-code, no-setup mobile automation platform and comprehensive API automation capabilities. The platform is designed to handle real-world complexities with zero coding required, making it intuitive and scalable for businesses of all sizes.

Zeus Notebook

Zeus Notebook is an AI code assistant designed by Ying Hang Seah. It allows users to run a Python notebook entirely in their browser. Users can enter their OpenAI API key to enable chat functionality. The application helps developers and programmers get assistance with coding tasks and projects.

HST Solutions

HST Solutions is a trusted digital engineering and enterprise modernization partner that offers custom software development, AI applications, and data engineering services. They combine deep technical expertise and industry experience to help clients anticipate future needs and provide innovative solutions. The company focuses on delivering transformation and solving complex challenges with precision and innovation.

0 - Open Source AI Tools

20 - OpenAI GPTs

Expert Testers

Chat with Software Testing Experts. Ping Jason if you want to be an expert or have feedback.

Test Shaman

Test Shaman: Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Selenium Sage

Expert in Selenium test automation, providing practical advice and solutions.

Automation QA Interview Assistant

I provide Automation QA interview prep and conduct mock interviews.

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

Security Testing Advisor

Ensures software security through comprehensive testing techniques.

Tech Mentor

Expert software architect with experience in the design, construction, development, testing, and deployment of Web, Mobile, and Standalone software architectures.

React Native Testing Library Owl

Assists in writing React Native tests using the React Native Testing Library.

Vitest Expert Testing Framework Multilingual

Multilingual AI for Vitest unit testing management.

Product Testing Advisor

Ensures product quality through rigorous, systematic testing processes.