Best AI tools for Scale ML Infrastructure

20 - AI Tool Sites

PoplarML

PoplarML is a platform for deploying production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework-agnostic support. With PoplarML, users deploy ML models to a fleet of GPUs using a CLI tool and invoke them through a REST API endpoint. The platform supports TensorFlow, PyTorch, and JAX models.

Mystic.ai

Mystic.ai is an AI tool for deploying and scaling machine learning models. It offers a fully managed Kubernetes platform that runs in your own cloud, so users can deploy ML models in their own Azure, AWS, or GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, performance optimizations, and a simpler developer experience for serving AI models. With a pay-as-you-go API, cloud integration with AWS, Azure, and GCP, and a dashboard, Mystic.ai simplifies deploying and managing ML models for data scientists and AI engineers.

Tübingen AI Center

Tübingen AI Center is a hub for European AI research, hosted by the Eberhard Karls University of Tübingen in cooperation with the Max Planck Institute for Intelligent Systems. It comprises 20 machine learning research groups with more than 300 PhD students and postdocs. The center fosters AI talent through education and hands-on experience starting from elementary school. Its Machine Learning Cloud provides AI research infrastructure that supports collaborative work and large-scale ML simulations. The center is funded by the Federal Ministry of Education and Research and the Ministry of Science, Research and Arts Baden-Württemberg.

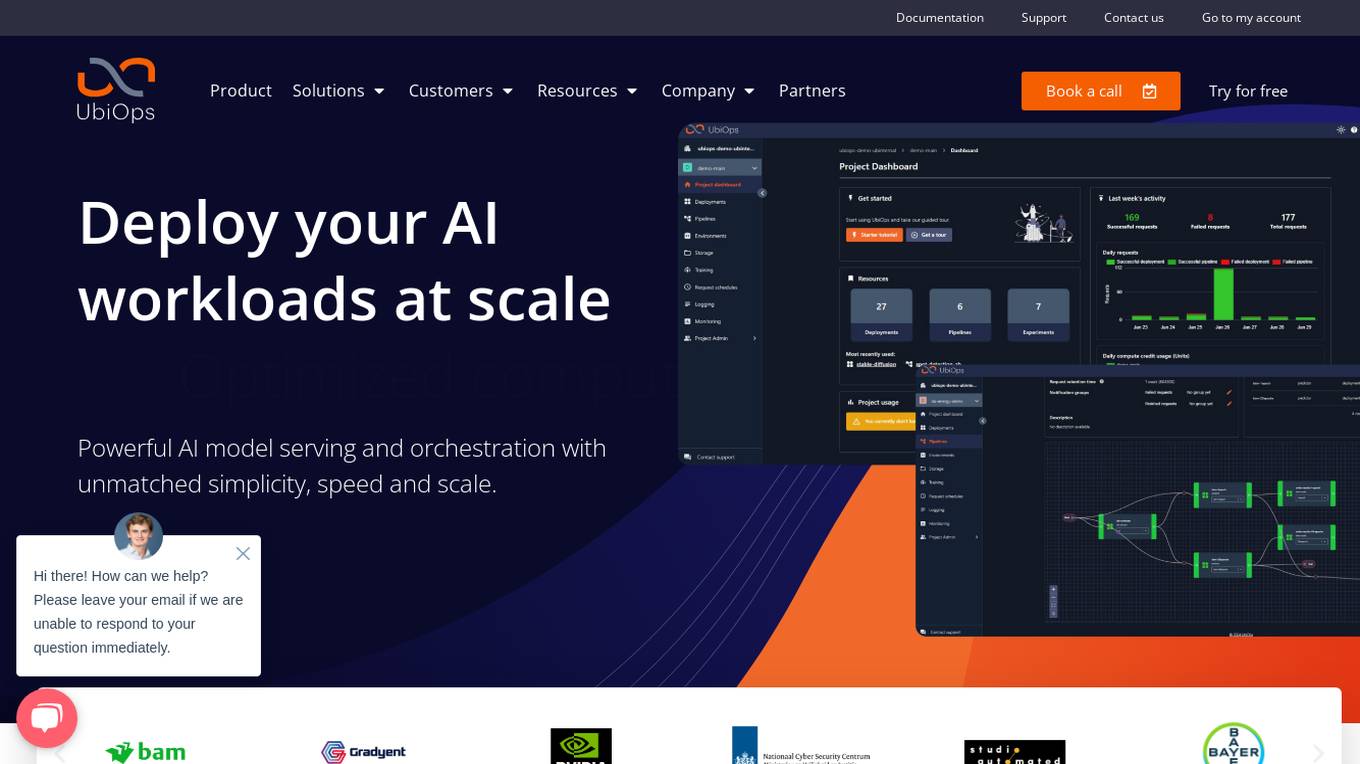

UbiOps

UbiOps is an AI infrastructure platform that helps teams run their AI and ML workloads as reliable, secure microservices. It provides AI model serving and orchestration. UbiOps lets users deploy models and functions in minutes, manage AI workloads from a single control plane, integrate with tools like PyTorch and TensorFlow, and build in security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications through its own workflow management system.

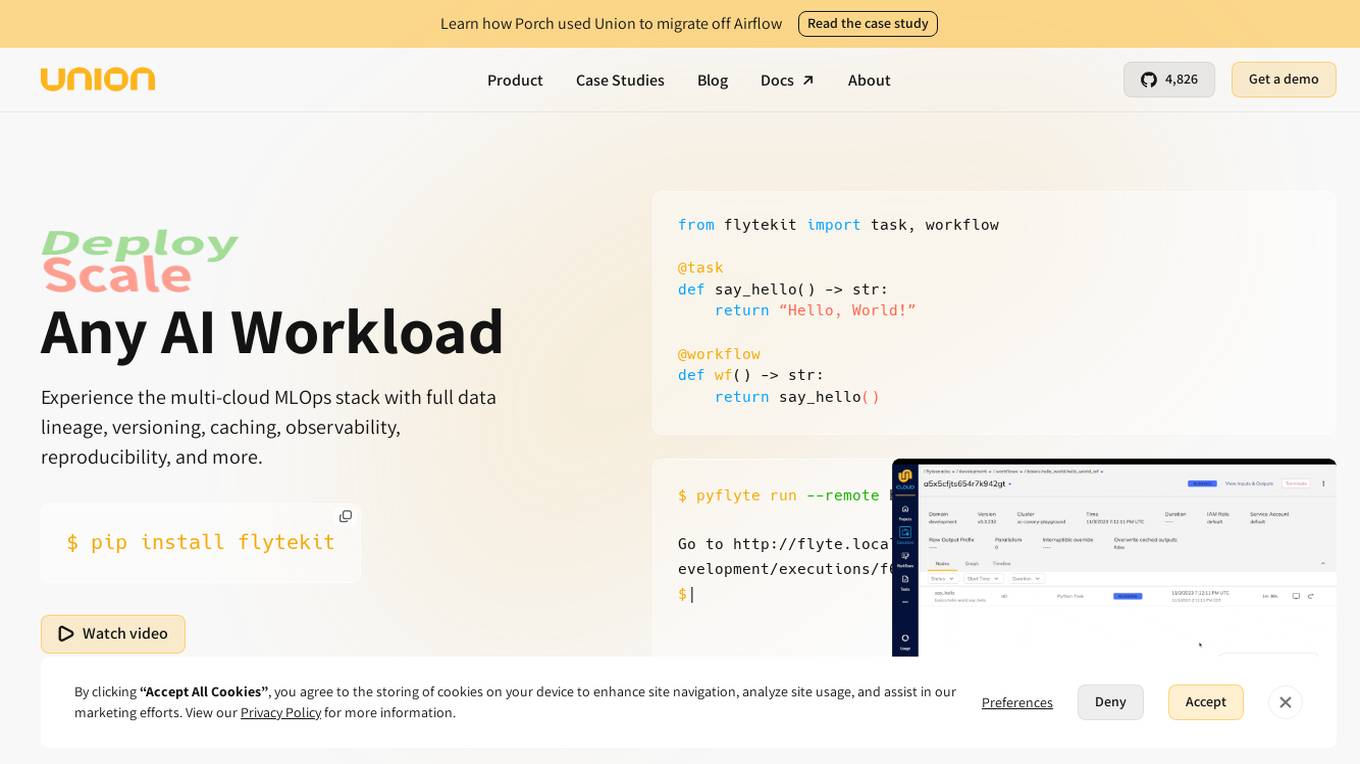

Union.ai

Union.ai is an infrastructure platform for AI, ML, and data workloads. It offers a scalable MLOps platform that optimizes resource use, reduces costs, and supports collaboration among team members. Union.ai provides features such as declarative infrastructure, data lineage tracking, and accelerated datasets to streamline AI orchestration on Kubernetes. It aims to simplify the management of AI, ML, and data workflows in production by reducing operational complexity and cost.

FinetuneFast

FinetuneFast is an AI tool that helps developers, indie makers, and businesses fine-tune machine learning models, process data, and deploy AI solutions quickly. With pre-configured training scripts, efficient data loading pipelines, and one-click model deployment, FinetuneFast streamlines building and deploying AI models. The tool is designed to be accessible to ML beginners and includes lifetime updates.

Center for AI Safety (CAIS)

The Center for AI Safety (CAIS) is a research and field-building nonprofit based in San Francisco. It conducts research and advocacy projects and provides resources to reduce societal-scale risks from artificial intelligence (AI). CAIS focuses on technical AI safety research and field-building projects, and it offers a compute cluster for AI/ML safety research. Its aim is to ensure AI is developed and used safely for the benefit of society, by addressing inherent risks and advocating for safety standards.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

ITHENA

ITHENA is an AI-powered platform offering solutions for smart manufacturing, digital shopfloor, manufacturing excellence, B2B eCommerce, smart infrastructure, smart EV charging, smart energy management, smart street lights, smart asset tracking, smart marketplace, social analytics, modern content app services, business transformation, systems of engagement, customer experience, manufacturing integration, manufacturing execution, industrial IoT, data management, big data & analytics, AI/ML, data science, cloud modernization, and cloud adoption. It caters to industries such as industrial manufacturing, automotive & mobility, healthcare & life sciences, energy, utility & government, and media & entertainment. ITHENA provides tailored solutions for both SMBs and enterprise-scale businesses.

UnfoldAI

UnfoldAI is a website offering articles, strategies, and tutorials for building production-grade ML systems. Authored by Simeon Emanuilov, the site covers topics such as deep learning, computer vision, LLMs, programming, MLOps, performance, scalability, and AI consulting. It aims to provide insights and best practices for professionals in the field of machine learning to create robust, efficient, and scalable systems.

SiMa.ai

SiMa.ai provides high-performance, power-efficient, and scalable edge machine learning solutions for industries such as automotive, industrial, healthcare, drones, and government. Its offerings include MLSoC™ boards, DevKit 2.0, Palette Software 1.2, and Edgematic™, which help developers accelerate complete applications and deploy AI-enabled solutions. SiMa.ai's Machine Learning System on Chip (MLSoC) enables full-pipeline implementations of real-world ML solutions at the edge.

xZactly.ai

xZactly.ai works with AI start-ups and high-growth companies to accelerate revenue growth. It provides AI-specific sales, business development, and marketing expertise; seed and venture capital financing; and support for scaling the business and expanding globally. It also connects businesses with investors, develops go-to-market strategies, and offers triage, transformation, and turnaround services for AI and ML companies. Drawing on over 25 years of experience in sales, marketing, and business development, xZactly.ai aims to accelerate sales and boost revenue for AI-driven businesses through expertise, strategy, and execution.

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features like Feature Store, LLMOps, and model monitoring, JFrog ML empowers AI teams to collaborate efficiently and optimize AI & ML models in production.

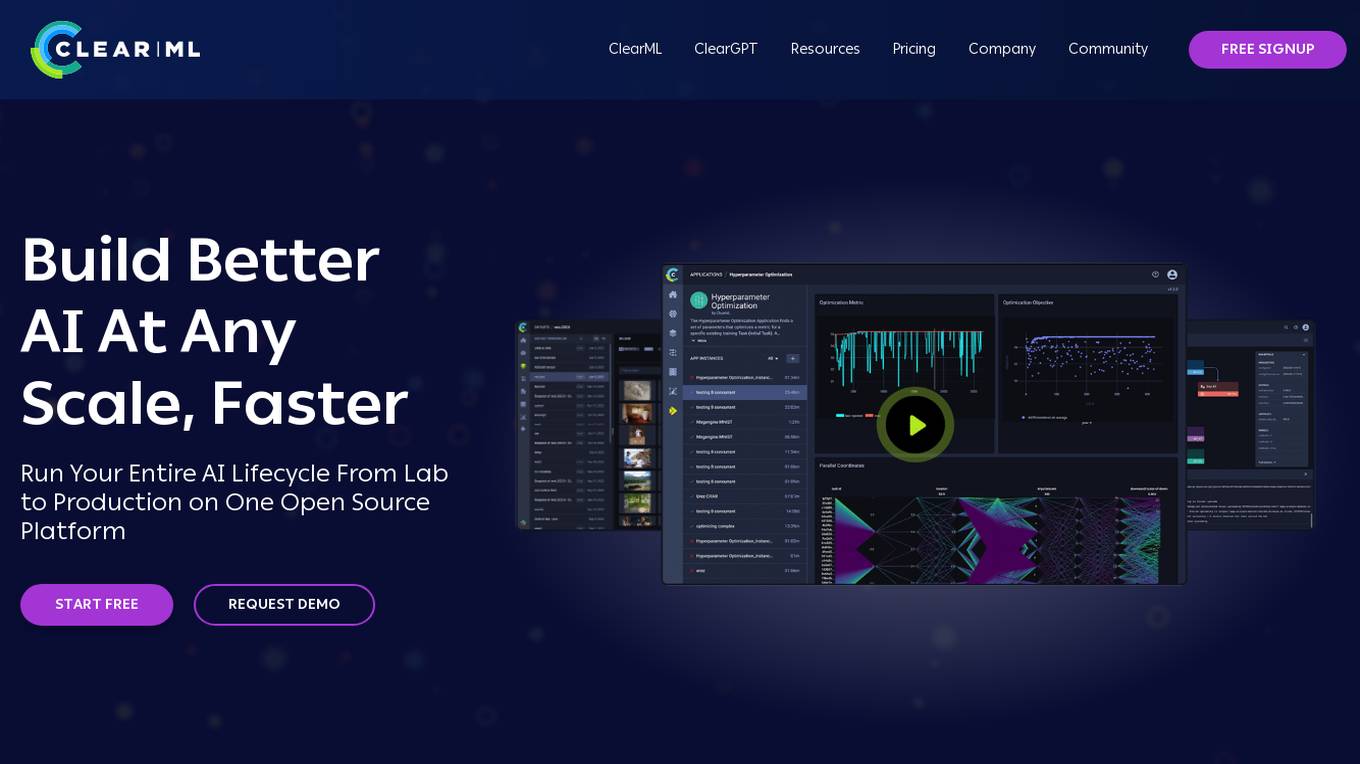

ClearML

ClearML is an open-source, end-to-end platform for continuous machine learning (ML). It provides a unified platform for data management, experiment tracking, model training, deployment, and monitoring. ClearML is designed to make it easy for teams to collaborate on ML projects and to ensure that models are deployed and maintained in a reliable and scalable way.

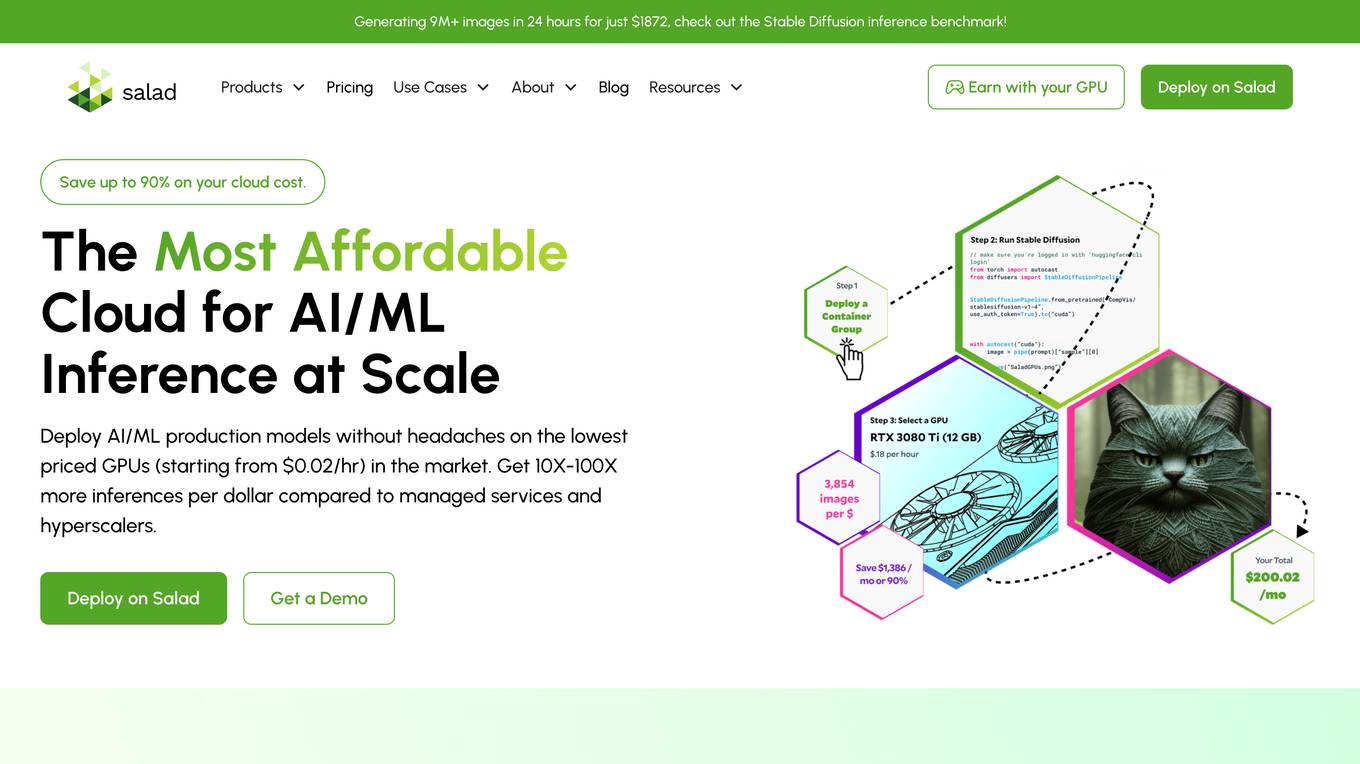

Salad

Salad is a distributed GPU cloud platform that offers fully managed, massively scalable services for AI applications. It advertises the lowest-priced AI transcription on the market and supports workloads such as image generation, voice AI, computer vision, data collection, and batch processing. Salad uses consumer GPUs to deliver cost-effective AI/ML inference at scale. According to the company, hundreds of machine learning and data science teams use the platform for its affordability, scalability, and ease of deployment.

MATH (ML & AI Technology Hub)

MATH (ML & AI Technology Hub) is an innovation hub and ecosystem enabler based at T-Hub, Hyderabad. It serves as a Centre of Excellence for Machine Learning and Artificial Intelligence, connecting startups, academia, government, and large industry players. MATH offers workspace, physical resources, and an ecosystem for incubating startups across various sectors. The hub aims to foster AI innovation, empower startups, and create job opportunities in the AI field by 2025.

QuData

QuData is an AI and ML solutions provider that helps businesses enhance their value through AI/ML implementation, product design, QA, and consultancy services. They offer a range of services including ChatGPT integration, speech synthesis, speech recognition, image analysis, text analysis, predictive analytics, big data analysis, innovative research, and DevOps solutions. QuData has extensive experience in machine learning and artificial intelligence, enabling them to create high-quality solutions for specific industries, helping customers save development costs and achieve their business goals.

Luma AI

Luma AI is an AI-powered platform that specializes in video generation using advanced models like Ray2 and Dream Machine. The platform offers director-grade control over style, character, and setting, allowing users to reshape videos with ease. Luma AI aims to build multimodal general intelligence that can generate, understand, and operate in the physical world, paving the way for creative, immersive, and interactive systems beyond traditional text-based approaches. The platform caters to creatives in various industries, offering powerful tools for worldbuilding, storytelling, and creative expression.

Luma AI

Luma AI is a 3D capture platform that allows users to create interactive 3D scenes from videos. With Luma AI, users can capture 3D models of people, objects, and environments, and then use those models to create interactive experiences such as virtual tours, product demonstrations, and training simulations.

Blackthorn AI

Blackthorn AI is an AI development company offering innovative solutions for businesses across various industries. With a focus on AI & ML development services, generative AI, big data analytics, and custom software development, Blackthorn AI aims to transform businesses with precision and measurable results. The company has a proven track record of successful projects and a team of certified experts, delivering enterprise-grade accuracy and results. Recognized as a top AI company, Blackthorn AI specializes in solving complex challenges and empowering businesses to innovate and excel in a competitive market.

0 - Open Source AI Tools

20 - OpenAI GPTs

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

R&D Process Scale-up Advisor

Optimizes production processes for efficient large-scale operations.

CIM Analyst

In-depth CIM analysis with a structured rating scale, offering detailed business evaluations.

Business Angel - Startup and Insights PRO

Business Angel provides expert startup guidance: funding, growth hacks, and pitch advice. Navigate the startup ecosystem, from seed to scale. Essential for entrepreneurs aiming for success. Master your strategy and launch with confidence. Your startup journey begins here!

Sysadmin

I help you with all your sysadmin tasks, from setting up a server to scaling an existing one. I can help you make sense of long log files and suggest solutions to the problems they reveal.

Seabiscuit Launch Lander

Startup Strong Within 180 Days: Tailored advice for launching, promoting, and scaling businesses of all types. It covers all stages from pre-launch to post-launch and develops strategies, including market research, branding, promotional tactics, and operational planning, unique to your business. (v1.8)

Startup Advisor

Startup advisor guiding founders through detailed idea evaluation, product-market fit, business model, go-to-market (GTM) strategy, and scaling.