Best AI Tools for Safeguarding Privacy in the AI Age

20 AI Tool Sites

SecureLabs

SecureLabs is an AI-powered platform that offers comprehensive security, privacy, and compliance management solutions for businesses. The platform integrates cutting-edge AI technology to provide continuous monitoring, incident response, risk mitigation, and compliance services. SecureLabs helps organizations stay current and compliant with major regulations such as HIPAA, GDPR, CCPA, and more. By leveraging AI agents, SecureLabs offers autonomous aids that tirelessly safeguard accounts, data, and compliance down to the account level. The platform aims to help businesses combat threats in an era of talent shortages while keeping costs down.

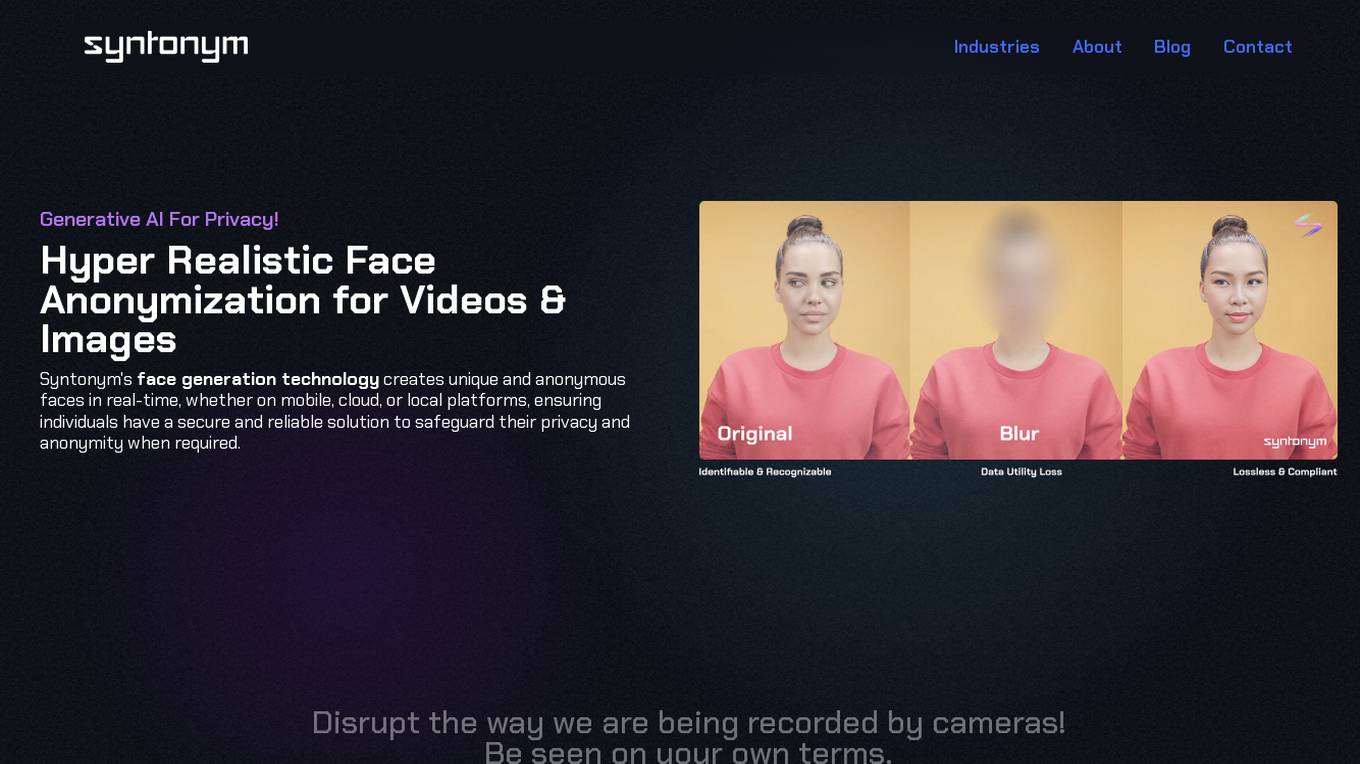

Syntonym

Syntonym is a generative AI tool focused on privacy, specifically offering hyper-realistic face anonymization for videos and images. The technology creates unique, anonymous faces in real time, giving individuals a secure and reliable way to safeguard their privacy and anonymity. Syntonym changes the way individuals are recorded by cameras, allowing them to be seen on their own terms, and integrates privacy layers into video platforms for interactive communication. The tool removes biometrics to enable video processing with real-time, lossless anonymization in compliance with privacy regulations.

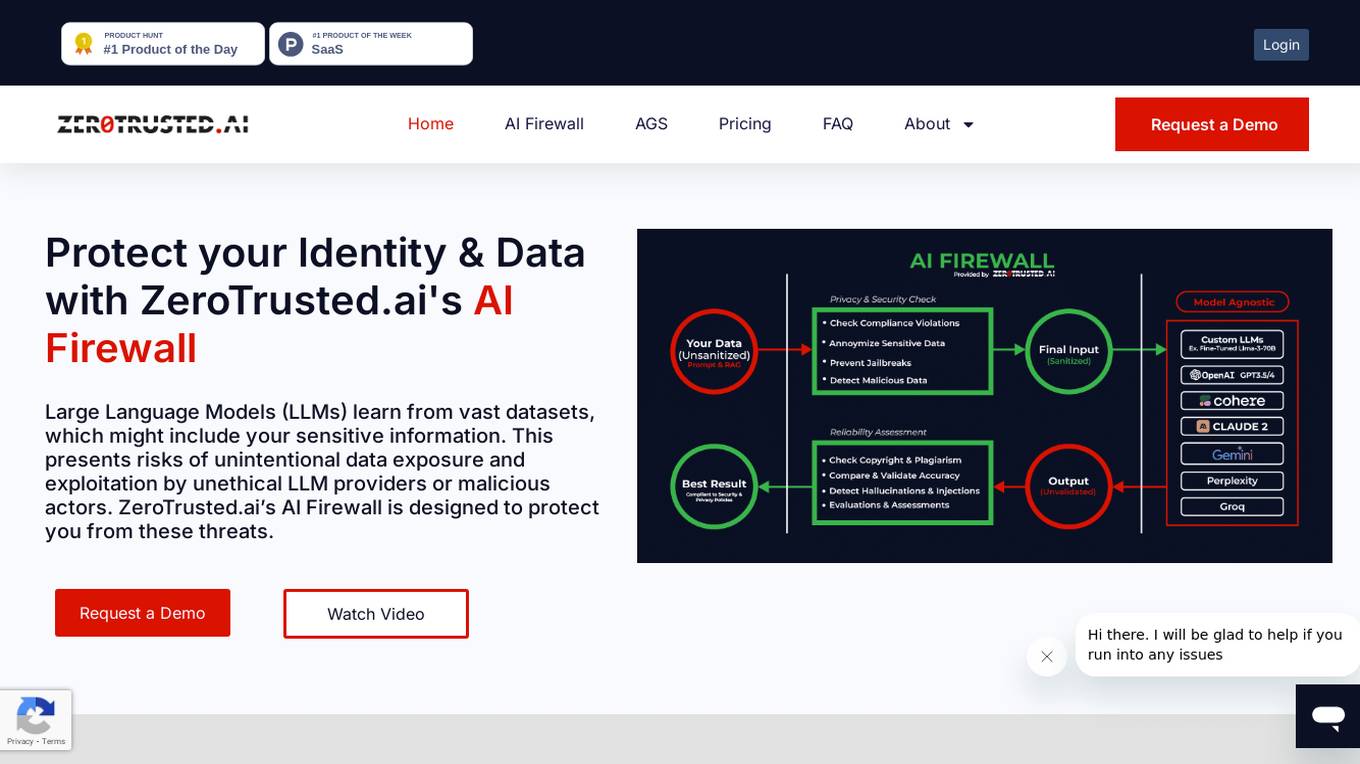

ZeroTrusted.ai

ZeroTrusted.ai is a cybersecurity platform that offers an AI Firewall to protect users from data exposure and exploitation by unethical providers or malicious actors. The platform provides features such as anonymity, security, reliability, integrations, and privacy to safeguard sensitive information. ZeroTrusted.ai empowers organizations with cutting-edge encryption techniques, AI & ML technologies, and decentralized storage capabilities for maximum security and compliance with regulations like PCI, GDPR, and NIST.

Graded Pro

Graded Pro is an advanced AI grading tool designed for teachers and educators worldwide. It offers automated assessment and grading of various academic works, including handwritten submissions, art, coding assignments, essays, and diagrams. The platform supports a wide range of educational standards and curriculums, providing detailed feedback to students based on customizable rubrics. Graded Pro prioritizes security and privacy, complying with GDPR and FERPA regulations to safeguard student data. With features like effortless grading, support for all subjects and file types, and integration with Google Classroom, Graded Pro streamlines the grading process and enhances the teaching experience.

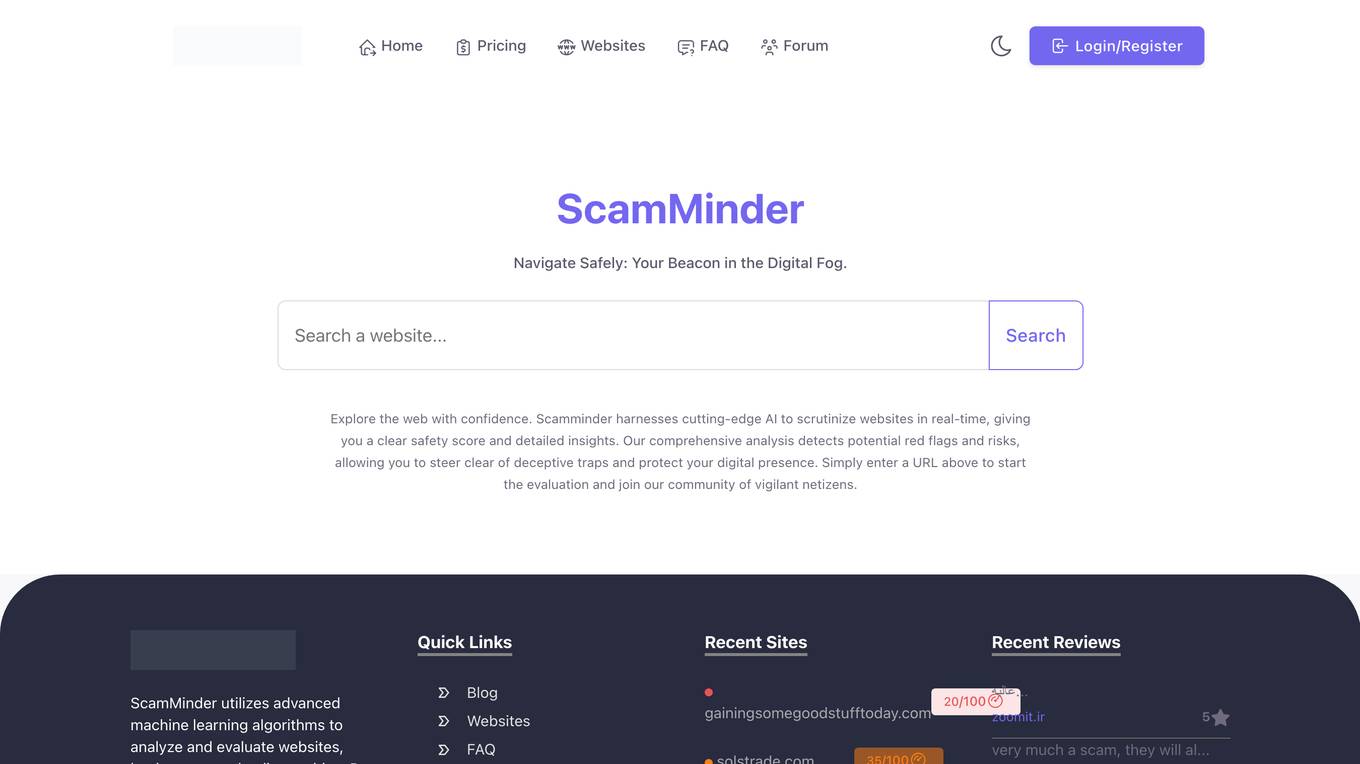

ScamMinder

ScamMinder is an AI-powered tool designed to enhance online safety by analyzing and evaluating websites in real time. It provides users with a safety score and detailed insights, helping them detect potential risks and red flags. Using machine learning algorithms, ScamMinder assists users in making informed decisions about engaging with websites, businesses, and online entities. With a focus on trustworthiness assessment, the tool aims to protect users from deceptive traps and safeguard their digital presence.

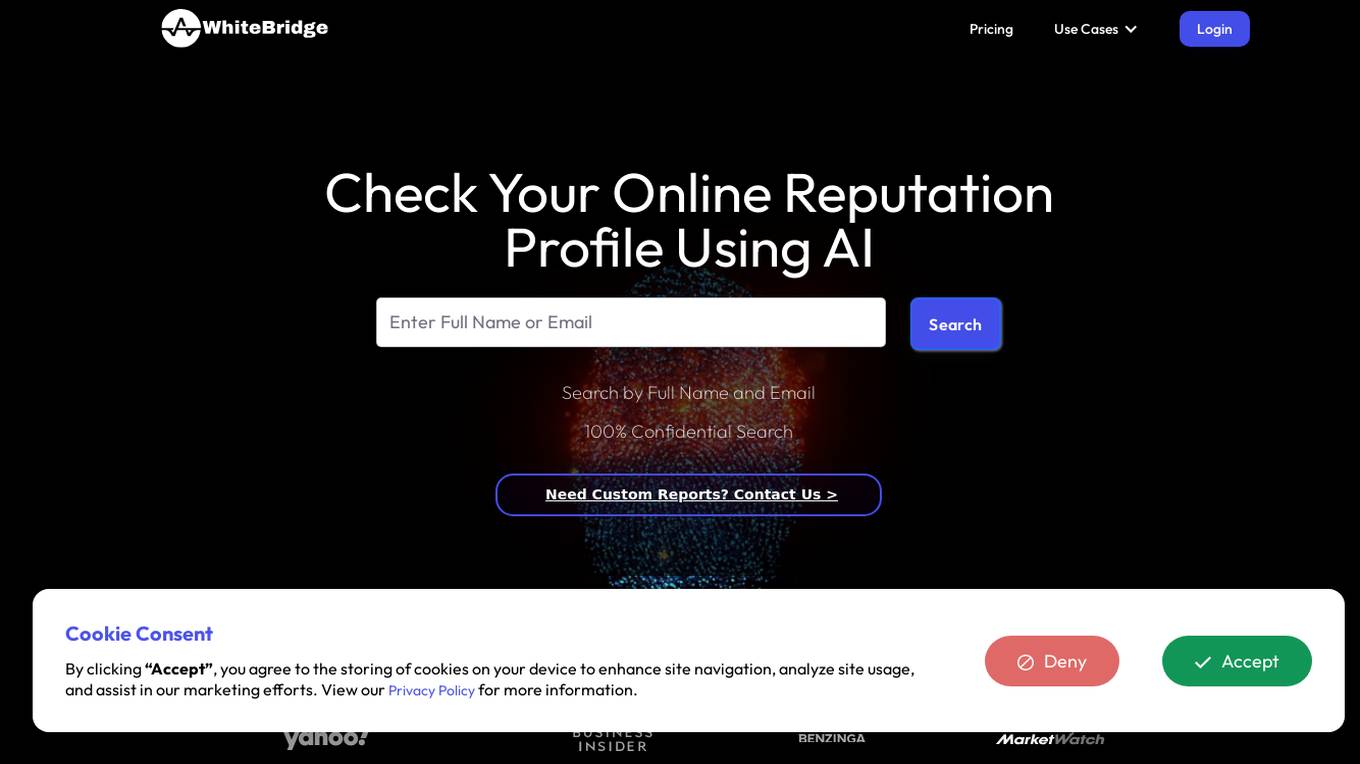

WhiteBridge

WhiteBridge is an AI-powered online reputation management tool that helps individuals and businesses transform scattered online data into a coherent narrative of their digital identity. By finding, verifying, and structuring information about someone into insightful reports, WhiteBridge enables users to safeguard their reputation, understand prospects, prepare for pitches, hire wisely, and verify authenticity. The tool offers real-time validation, background analysis, and access to over 100 public data APIs to provide unmatched quality of information. WhiteBridge is designed for recruiters, sales reps, business owners, and privacy-conscious individuals to streamline background checks, build better connections, verify information, and safeguard personal data.

AI Health World Summit

The AI Health World Summit is a biennial event organized by SingHealth that brings together global leaders in healthcare, academia, government, legal, and industry sectors to explore the latest advancements and applications of artificial intelligence in healthcare. The summit focuses on topics such as the adoption of AI technologies, clinical applications, data governance, privacy safeguards, advancements in vision and language models for health contexts, responsible AI practices, and AI education. The event aims to foster collaboration and innovation in AI-driven healthcare solutions.

Transparency Coalition

The Transparency Coalition is a platform dedicated to advocating for legislation and transparency in the field of artificial intelligence. It aims to create AI safeguards for the greater good by focusing on training data, accountability, and ethical practices in AI development and deployment. The platform emphasizes the importance of regulating training data to prevent misuse and harm caused by AI systems. Through advocacy and education, the Transparency Coalition seeks to promote responsible AI innovation and protect personal privacy.

Angelmatch.io

Angelmatch.io is protected by a security service that screens out malicious bots. Users may encounter a brief security verification step confirming they are not bots before accessing the site. The service is powered by Cloudflare, which provides performance and security features to safeguard user data and privacy.

t.ly

The website t.ly is protected by a security service that screens out malicious bots. Users may encounter a 'Just a moment...' page while the site verifies that they are not bots. The service is powered by Cloudflare, which provides performance and security features to safeguard user privacy.

Gummysearch

Gummysearch.com is protected by a security service that screens out malicious bots. Users may encounter a security verification page while the website confirms that they are not bots, a check that relies on JavaScript and cookies. The service is powered by Cloudflare, which provides performance and security features to safeguard user privacy.

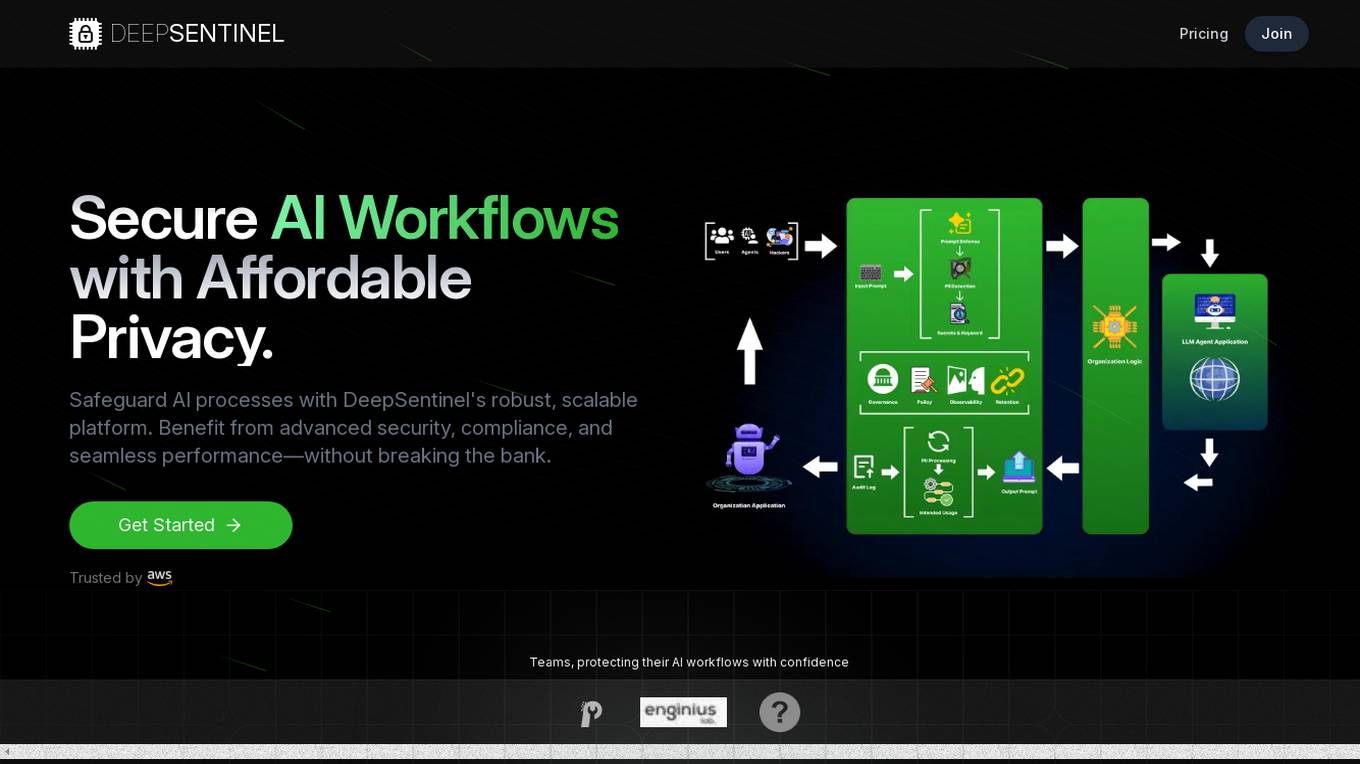

DeepSentinel

DeepSentinel is an AI application that provides secure AI workflows with affordable deep data privacy. It offers a robust, scalable platform for safeguarding AI processes with advanced security, compliance, and seamless performance. The platform allows users to track, protect, and control their AI workflows, ensuring secure and efficient operations. DeepSentinel also provides real-time threat monitoring, granular control, and global trust for securing sensitive data and ensuring compliance with international regulations.

Qypt AI

Qypt AI is an advanced tool designed to elevate privacy and empower security through secure file sharing and collaboration. It offers end-to-end encryption, AI-powered redaction, and privacy-preserving queries to ensure confidential information remains protected. With features like zero-trust collaboration and client confidentiality, Qypt AI is built by security experts to provide a secure platform for sharing sensitive data. Users can easily set up the tool, define sharing permissions, and invite collaborators to review documents while maintaining control over access. Qypt AI is a cutting-edge solution for individuals and businesses looking to safeguard their data and prevent information leaks.

Relyance AI

Relyance AI is a platform that offers 360 Data Governance and Trust solutions. It helps businesses safeguard against fines and reputation damage while enhancing customer trust to drive business growth. The platform provides visibility into enterprise-wide data processing, ensuring compliance with regulatory and customer obligations. Relyance AI uses AI-powered risk insights to proactively identify and address risks, offering a unified trust and governance infrastructure. It offers features such as data inventory and mapping, automated assessments, security posture management, and vendor risk management. The platform is designed to streamline data governance processes, reduce costs, and improve operational efficiency.

Lakera

Lakera is an AI security platform that safeguards GenAI applications against a range of security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks. Lakera is suitable for security teams, product teams, and LLM builders looking to secure their AI applications.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

Super Amplify

Super Amplify is an AI platform that aims to improve productivity and performance by transforming raw data into actionable insights. It offers security features such as data isolation, privacy guards, user management, and data encryption. The platform works closely with enterprises to scale operations, safeguard intellectual property, and maximize efficiency. Super Amplify uses Private GPTs to automate routine tasks so that employees can focus on strategic functions. It also provides AI agents to support workflows, and a range of AI models and tools to streamline business operations.

Lakera

Lakera is an AI security platform designed to protect organizations from AI threats. It offers solutions for prompt injection detection, unsafe content identification, PII and data loss prevention, data poisoning prevention, and insecure LLM plugin design. Lakera is used by enterprises, foundation model providers, and startups. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks.

Attestiv

Attestiv is an AI-powered digital content analysis and forensics platform that offers solutions to prevent fraud, losses, and cyber threats from deepfakes. The platform helps reduce costs through automated photo, video, and document inspection and analysis, protects company reputation, and supports monetizing trust in secure systems. Attestiv's technology provides validation and authenticity checks for digital assets, safeguarding against altered photos, videos, and documents that are increasingly easy to create but difficult to detect. The platform uses patented AI technology to verify the authenticity of uploaded media and offers sector-agnostic solutions for various industries.

iMobie

iMobie is a software company that offers a suite of AI-powered tools designed to optimize digital devices, manage data, and enhance user experiences. The company provides a range of applications such as AnyTrans, PhoneRescue, AnyUnlock, AnyFix, AnyDroid, PhoneClean, and MacClean, each catering to different needs of users. These tools are known for their efficiency, ease of use, and innovative features that make digital life easier and more secure.