Best AI tools for <Refer To Documentation>

20 - AI Tool Sites

Site Not Found

The page is a placeholder or error page showing the message 'Site Not Found'. It indicates that the user may not have deployed an app yet or may have an empty directory, and it suggests referring to the hosting documentation to deploy the first app. The site appears to be under construction or experiencing technical issues.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::zdhct-1723140771934-b5e5ad909fad'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::tszrz-1723627812794-26f3e29ebbda). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::6sb2w-1770832030424-6965d20399bc). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sfo1::g65rs-1771006489042-f14391d82645). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. Users are directed to refer to the documentation for more information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::hfkql-1741193256810-ca47dff01080). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are directed to refer to the documentation for more information and troubleshooting assistance.

Error 404 Not Found

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::t6mdp-1736442717535-3a5d4eeaf597'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Not Found

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (cle1::t5xdd-1771006563046-a762790f1009). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are directed to refer to the documentation for more information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the requested deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::lmmss-1741279839229-d64d8958cb1b'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::z2jxr-1736614143090-03728368920f'. Users are directed to refer to the documentation for further information and troubleshooting.

Error Resolver

The website displays a '404: NOT_FOUND' error message along with a code and ID indicating a deployment not found. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. Users encountering this error are advised to refer to the documentation for more information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::mk7hv-1736442739549-625ea5452a6a). The message advises users to refer to the documentation for further information and troubleshooting.

CloudFront Error Page

The website displays a 502 error because CloudFront could not resolve the origin domain name. The message indicates that CloudFront cannot connect to the server hosting the app or website, and it suggests possible causes such as high traffic volume or a configuration error. Users are advised to try again later or contact the app or website owner for assistance. Owners who serve content through CloudFront can refer to the CloudFront documentation for troubleshooting steps.

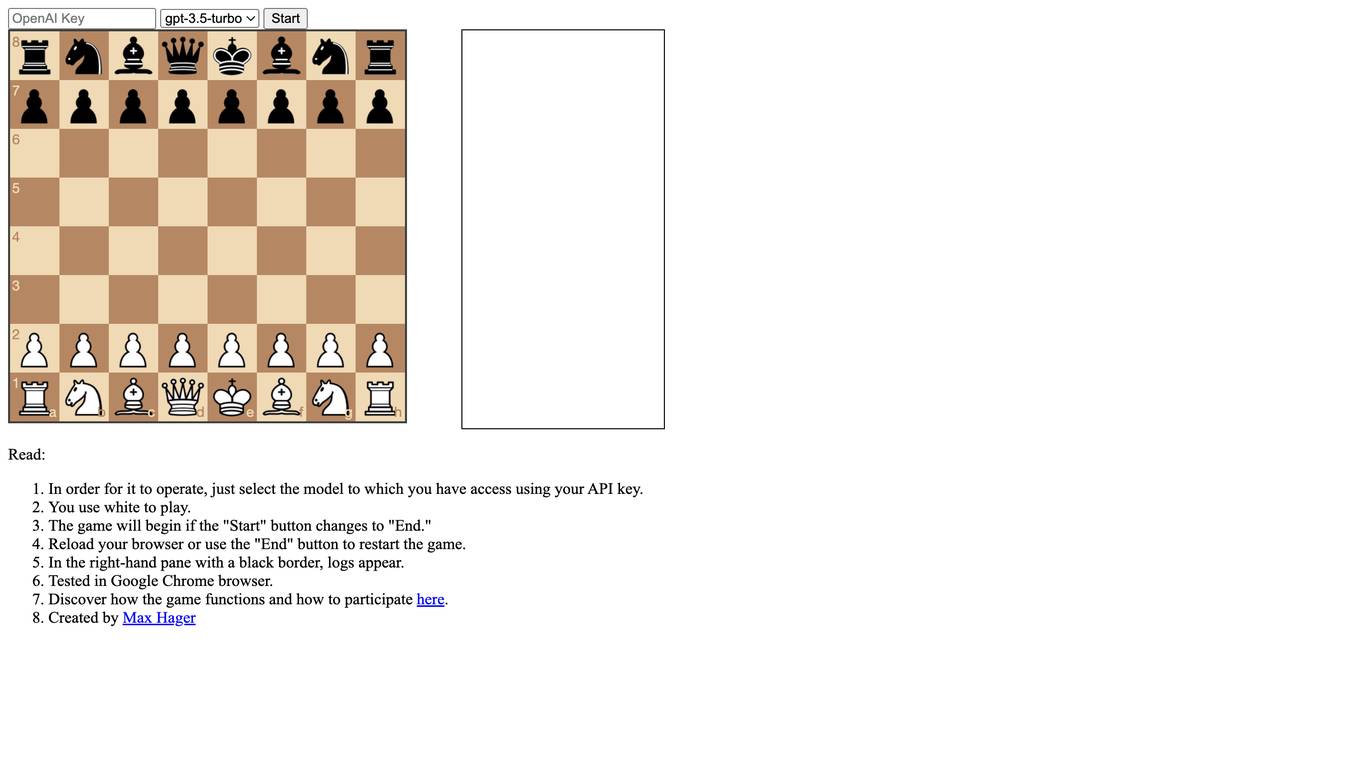

LLMChess

LLMChess is a web-based chess game in which the opponent is a large language model (LLM). Players select the LLM they wish to play against, and the game begins when they click the "Start" button. Game logs are displayed in a black-bordered pane on the right-hand side of the screen. LLMChess is compatible with the Google Chrome browser. For more information on the game's functionality and participation guidelines, refer to the provided link.

Winston AI

Winston AI is an AI-powered platform for detecting AI-generated content, and it offers an affiliate program. Affiliates sign up to refer paying customers and earn a 40% commission on all payments within the first 12 months. The program prohibits paid advertisements that compete with Winston AI's own marketing efforts. The affiliate program is powered by Rewardful.

Yomu AI

Yomu AI is an AI application that offers an Ambassador Program, through which users earn a commission for referring paid customers. Users must sign up or log in to access the program. The Yomu AI Ambassador Program is powered by Rewardful.

0 - Open Source AI Tools

5 - OpenAI GPTs

GPTLaudos

Hello, radiologist. To get started, type /prelim followed by the type of exam and your preliminary findings, and I will send you the complete report right away!

Chip

"Chip" refers to the chip on this bot's shoulder. He's... not friendly. But he's still helpful, even when he's insulting you.