Best AI Tools to Reduce Data Overhead

20 - AI Tool Sites

MindsDB

MindsDB is an AI development cloud platform that enables developers to customize AI for their specific needs and purposes. It provides a range of features and tools for building, deploying, and managing AI models, including integrations with various data sources, AI engines, and applications. MindsDB aims to make AI more accessible and useful for businesses and organizations by allowing them to tailor AI solutions to their unique requirements.

Thoughtful

Thoughtful is an AI-powered revenue cycle automation platform that offers efficiency reports, eligibility verification, patient intake automation, claims processing, and more. It deploys AI across healthcare organizations to maximize profitability, reduce errors, and enhance operational excellence. According to the company, its AI agents work around the clock and are 10x more efficient than humans. The platform helps providers improve revenue cycle management, financial health, HR processes, and healthcare IT operations through seamless integration, reduced overhead, and significant performance improvements. Thoughtful offers a white-glove service, a custom-built platform, seamless integration with all healthcare applications, and performance-based contracting with refund and value guarantees.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes through self-optimizing, lossless compression. It works seamlessly across various data platforms like Iceberg, Delta, Trino, Spark, Snowflake, BigQuery, and Databricks, offering significant cost savings and improved query performance. Granica is trusted by data and AI leaders globally for its ability to reduce data bloat, speed up queries, and enhance data lake optimization. The tool is built for structured AI, providing transparent deployment, continuous adaptation, hands-off orchestration, and trusted controls for data security and compliance.

Polymer DSPM

Polymer DSPM is an AI-driven Data Security Posture Management platform that offers Data Loss Prevention (DLP) and breach prevention solutions. It provides real-time data visibility, adaptive controls, and automated remediation to prevent data breaches. The platform helps users actively manage human-based risks and fosters enterprise-wide behavior change through real-time nudges and risk scoring. Polymer helps organizations secure their data in the age of AI by guiding employees in real time to prevent accidental sharing of confidential information. It integrates with popular chat, file storage, and GenAI tools to protect sensitive data while reducing alert noise and data exposure. The platform uses AI to contextualize risk, trigger security workflows, and nudge employees toward less risky behavior over time.

ClosedLoop

ClosedLoop is a healthcare data science platform that helps organizations improve outcomes and reduce unnecessary costs with accurate, explainable, and actionable predictions of individual-level health risks. The platform provides a comprehensive library of easily modifiable templates for healthcare-specific predictive models, machine learning (ML) features, queries, and data transformation, which accelerates time to value. ClosedLoop's AI/ML platform is designed exclusively for the data science needs of modern healthcare organizations and helps deliver measurable clinical and financial impact.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform uses artificial intelligence to anticipate threats, manage vulnerabilities, and protect resources across the entire enterprise ecosystem. With features such as Singularity XDR, Purple AI, and AI-SIEM, SentinelOne helps security teams detect and respond to cyber threats in real time. The platform is trusted by leading enterprises worldwide and has received industry recognition for its approach to cybersecurity.

SentinelOne

SentinelOne is an advanced enterprise cybersecurity AI platform that offers a comprehensive suite of AI-powered security solutions for endpoint, cloud, and identity protection. The platform leverages AI technology to anticipate threats, manage vulnerabilities, and protect resources across the enterprise ecosystem. SentinelOne provides real-time threat hunting, managed services, and actionable insights through its unified data lake, empowering security teams to respond effectively to cyber threats. With a focus on automation, efficiency, and value maximization, SentinelOne is a trusted cybersecurity solution for leading enterprises worldwide.

Pongo

Pongo is an AI-powered tool that, according to the company, reduces hallucinations in Large Language Models (LLMs) by up to 80%. It combines multiple state-of-the-art semantic similarity models with a proprietary ranking algorithm to return accurate and relevant search results. Pongo integrates with existing pipelines, whether they use a vector database or Elasticsearch, and re-processes the top search results to deliver refined, reliable information. Its distributed architecture keeps latency consistent across a wide range of request volumes. Pongo prioritizes data security: it operates at runtime with zero data retention, and no data leaves its secure AWS VPC.

Codimite

Codimite is an AI-assisted offshore development company that provides a range of services to help businesses accelerate their software development, reduce costs, and drive innovation. Codimite's team of experienced engineers and project managers use AI-powered tools and technologies to deliver exceptional results for their clients. The company's services include AI-assisted software development, cloud modernization, and data and artificial intelligence solutions.

Beebzi.AI

Beebzi.AI is an all-in-one AI content creation platform that offers a wide array of tools for generating content such as articles, blogs, emails, images, voiceovers, and more. The platform combines AI technology with behavioral science to support businesses and individuals in their marketing and sales efforts. With features like AI Article Wizard, AI Room Designer, AI Landing Page Generator, and AI Code Generation, Beebzi.AI provides customizable templates, multiple language support, and real-time data insights. The platform also offers subscription plans tailored to individual entrepreneurs, teams, and businesses, with pricing based on word-count allocations. Beebzi.AI aims to streamline content creation, enhance productivity, and drive organic traffic through SEO-optimized content.

Fyma

Fyma is an AI-driven analytics tool that helps property managers, asset operators, and urban planners optimize spaces, reduce costs, and enhance visitor experiences by turning existing cameras into AI-enabled sensors. It provides real-time insights on occupancy, space utilization, and tenant placement, allowing users to make data-driven decisions about property management and revenue optimization.

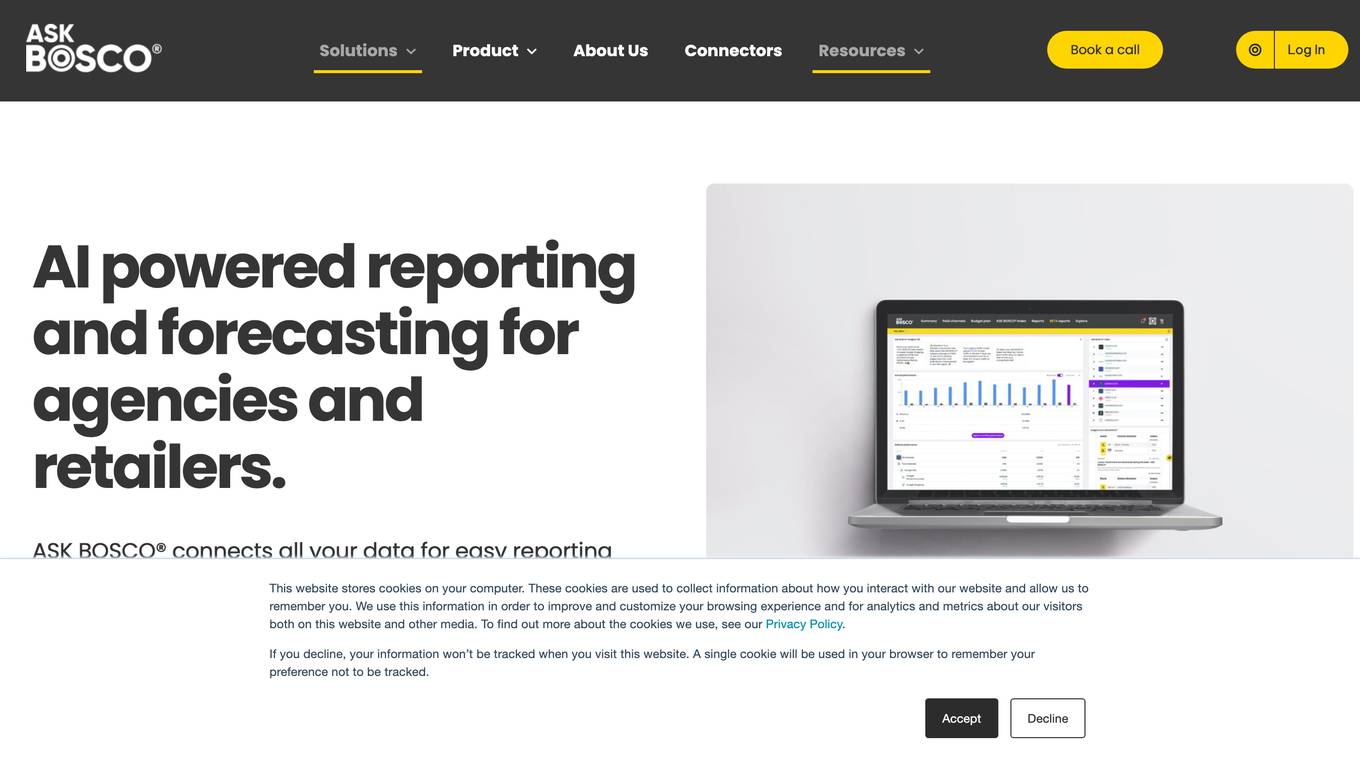

ASK BOSCO®

ASK BOSCO® is an AI reporting and forecasting tool designed for agencies and retailers. It uses AI technology to collect and analyze data from marketing and ecommerce sources, providing insights for better decision-making and forecasting. The platform offers features such as AI reporting, competitor benchmarking, AI budget planning, and data integrations, helping users streamline their processes and boost performance. Trusted by leading brands and agencies, ASK BOSCO® aims to simplify data analysis and reporting, enabling users to make data-driven decisions with confidence.

Trially AI

Trially AI is a HIPAA-compliant AI clinical trial platform that helps life science leaders enroll qualified patients faster. The platform uses AI algorithms to unlock rich medical data, match patients to trials, and improve enrollment rates. The company claims it delivers results 4x faster than other technology providers, citing higher enrollment, fewer screen failures, and more accurate eligibility assessment. It serves sponsors, CROs, research sites, and hospitals by improving enrollment rates, saving time on chart reviews, and improving site outcomes.

Value Chain Generator®

The Value Chain Generator® is an AI & Big Data platform for circular bioeconomy that helps companies, waste processors, and regions maximize the value and minimize the carbon footprint of by-products and waste. It uses global techno-economic and climate intelligence to identify circular opportunities, match with suitable partners and technologies, and create profitable and impactful solutions. The platform accelerates the circular transition by integrating local industries through technology, reducing waste, and increasing profits.

Defined.ai

Defined.ai is a leading provider of high-quality and ethical data for AI applications. Founded in 2015, Defined.ai has a global presence with offices in the US, Europe, and Asia. The company's mission is to make AI more accessible and ethical by providing a marketplace for buying and selling AI data, tools, and models. Defined.ai also offers professional services to help deliver success in complex machine learning projects.

Hermae Solutions

Hermae Solutions offers an AI Assistant for Enterprise Design Systems, providing onboarding acceleration, contractor efficiency, design system adoption support, knowledge distribution, and various AI documentation and Storybook assistants. The platform lets users train custom AI assistants, embed them into documentation sites, and query the knowledge base instantly. Hermae's process consists of gathering information sources, processing the data for use by the AI, customizing the integration, and supporting a successful rollout. The AI assistant helps reduce engineering costs and increase development efficiency across the board.

ASSIST

ASSIST is an AI-driven document management software designed to streamline financial paperwork processing and data entry tasks. The application offers features such as SmartDoc Entry for extracting information from invoices and receipts, Polyglot Processing for multilingual support, One-Tap Integration with accounting platforms, ExportEase for data export in CSV format, and AutoFlow Revolution for automated workflows. ASSIST aims to simplify document management, enhance efficiency, and drive digital transformation in businesses by leveraging AI technology.

Findem

Findem is AI talent acquisition software that offers a comprehensive platform for candidate sourcing, talent CRM, talent analytics, market intelligence, and more. It uses AI to automate talent acquisition workflows and consolidate top-of-funnel activities. Findem provides unique 3D data and attributes to help organizations transform their talent strategy. The platform is designed to attract, nurture, and hire with confidence, offering features such as Copilot for Sourcing, external talent sourcing, inbound management, candidate rediscovery, and talent CRM.

SnapMeasureAI

SnapMeasureAI is an AI application that specializes in automated AI image labeling, precise 3D body measurements, and video-based motion capture. It uses advanced AI technology to accurately understand and model the human body, working with any body type, skin tone, pose, or background. The application caters to various industries such as retail, fitness & health, AI training data, and security, offering a free demo for interested users.

GrapixAI

GrapixAI is a provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology supports a variety of AI applications in both local and cloud environments. GrapixAI says it offers the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

0 - Open Source AI Tools

20 - OpenAI GPTs

SAP Logistic Super Hero

SAP Logistics expert with a focus on data analysis, project management, and accurate information.

CDR Guru

Helps you master unified communications data across platforms such as Cisco, Avaya, Mitel, and Microsoft Teams by orchestrating a team of expert agents and providing actionable solutions.

Dalia

A material facts application that details the content, impact category data, and environmental rating of materials.

Process Optimization Advisor

Improves operational efficiency by optimizing processes and reducing waste.

Six Sigma Guru

No one knows more Six Sigma than us! You can try our GPT Six Sigma Guru for study or simply to find answers to your problems.

Greenlight Energy Guide

Illuminating the path to smarter energy use and effortless savings.

Robotic Insights Expert

RPA and Robotics Engineering expert, developed on OpenAI technology.

Gas Intellect Pro

Leading-edge gas analysis and optimization: adaptable, accurate, and advanced, built on OpenAI technology.

Cloud Networking Advisor

Optimizes cloud-based networks for efficient organizational operations.