Best AI tools for Quit Addiction

4 - AI tool Sites

Quit Porn

Quit Porn is a free GPT client designed to help individuals overcome porn addiction. The platform offers AI-powered therapy and counseling services to support users in their journey towards quitting pornography. By providing immediate access to professional guidance and resources, Quit Porn aims to address the harmful effects of pornography addiction on physical and mental health, relationships, and overall well-being. Users can benefit from personalized support and strategies to break free from the addiction and achieve long-term recovery.

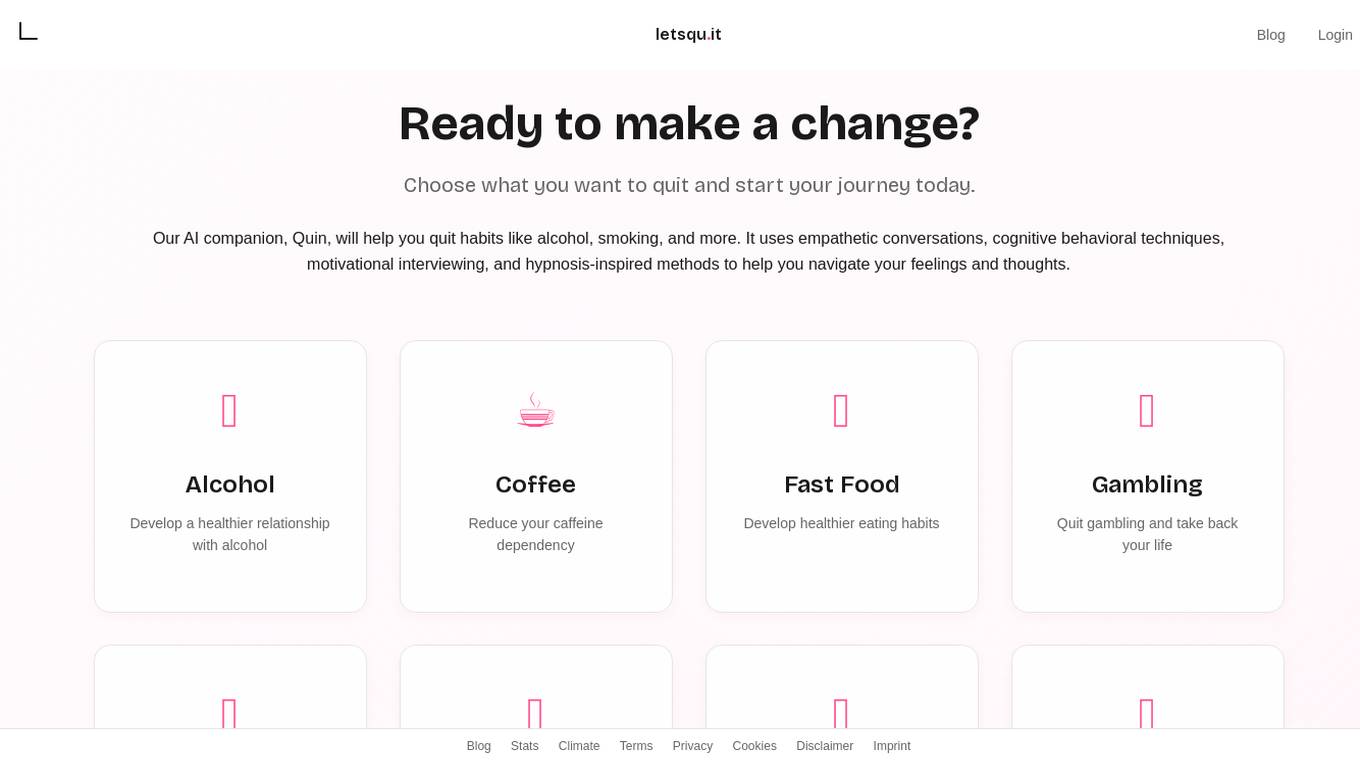

letsqu.it

letsqu.it is an AI companion application designed to help individuals quit various habits such as alcohol, smoking, gaming, and more. The application uses empathetic conversations, cognitive behavioral techniques, motivational interviewing, and hypnosis-inspired methods to help users work through their emotions and thoughts and move toward positive behavioral change.

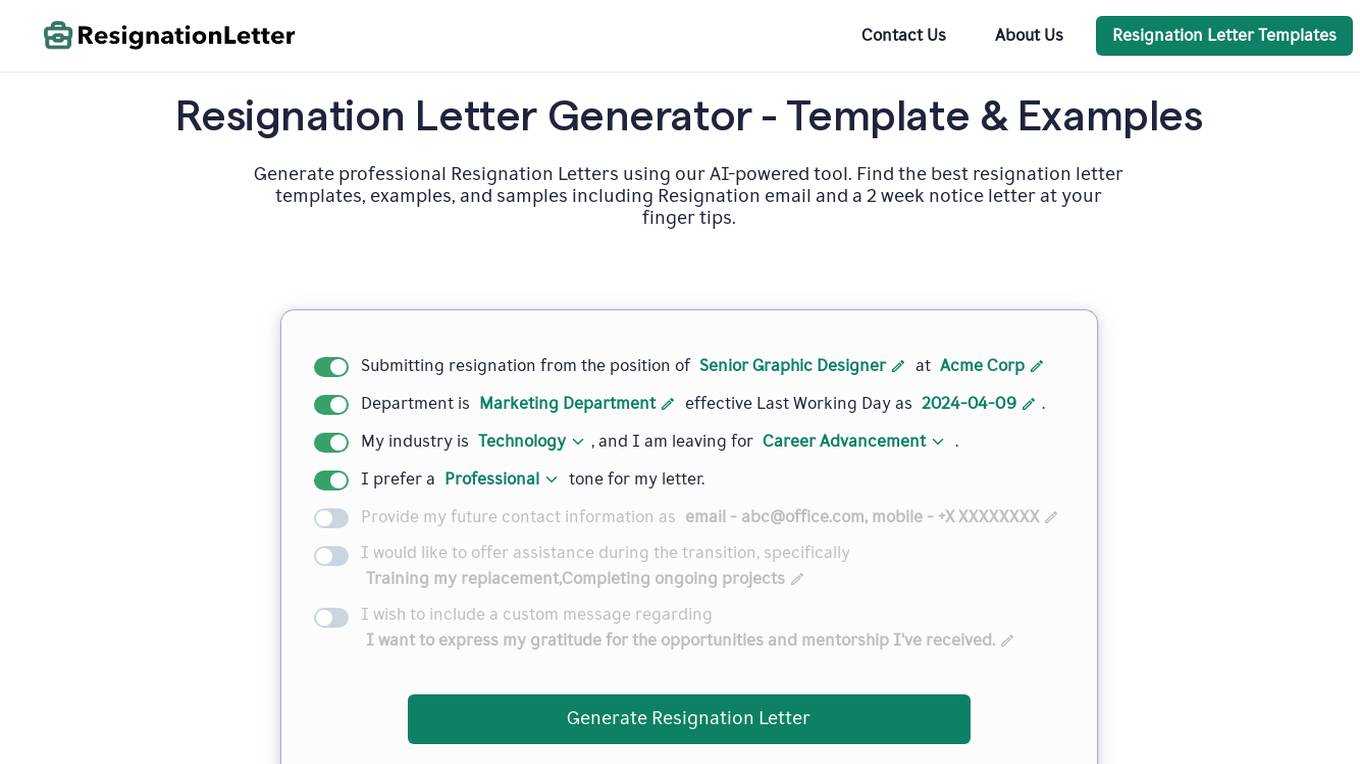

Resignation Letter Generator

This website provides a tool for generating professional resignation letters, along with templates, examples, and samples for various scenarios. It offers guidance on writing a formal, respectful letter of resignation: giving clear notice, expressing gratitude, offering support for the transition, and closing formally. It also gives tips on what to include and what to leave out. A frequently asked questions section covers whether a reason for resignation is compulsory, whether the letter needs to be signed, who to send it to, whether it is acceptable to resign by email, the best day to resign, the appropriate notice period, and valid reasons for immediate resignation.

Instant Resign

Instant Resign is an AI-powered application designed to assist individuals in resigning from their jobs quickly and professionally. The platform offers a stress-free process by generating tailored resignation letters instantly and handling the delivery to the employer. With a user-friendly interface, Instant Resign aims to streamline the job resignation process and provide full exit support to users worldwide.

0 - Open Source AI Tools

5 - OpenAI GPTs

BreatheEasy

A supportive assistant for quitting smoking, integrating psychological techniques.

Smoke-Free Buddy

A supportive companion for smoke-free living, tracking progress and offering motivation.

Auto-Hypnosis Coach Master

The bot provides guidance and contact for auto-hypnosis sessions.