Best AI tools for: Prevent Harmful Content

20 - AI tool Sites

Redflag AI

Redflag AI is a leading provider of content and brand protection solutions. Its mission is to help businesses protect their brands and reputations from online threats. It offers a range of services to help businesses identify, remove, and prevent harmful content from appearing online.

Secur3D

Secur3D is an AI tool designed for automated 3D asset analysis and moderation. It focuses on protecting user-generated content (UGC) on creator marketplaces by utilizing advanced algorithms to detect and prevent unauthorized or harmful content. With Secur3D, creators can ensure the safety and integrity of their content, providing a secure environment for both creators and users.

Lakera

Lakera is the world's most advanced AI security platform that offers cutting-edge solutions to safeguard GenAI applications against various security threats. Lakera provides real-time security controls, stress-testing for AI systems, and protection against prompt attacks, data loss, and insecure content. The platform is powered by a proprietary AI threat database and aligns with global AI security frameworks to ensure top-notch security standards. Lakera is suitable for security teams, product teams, and LLM builders looking to secure their AI applications effectively and efficiently.

Alphy

Alphy is a modern AI tool for communication compliance that helps companies detect and prevent harmful and unlawful language in their communication. Its AI classifier has a 94% accuracy rate and can identify over 40 high-risk categories of harmful language. By using Alphy's Reflect AI, companies can shield themselves from reputational, ethical, and legal risks, ensuring compliance and preventing costly litigation.

Modulate

Modulate is a voice intelligence tool that provides proactive voice chat moderation solutions for various platforms, including gaming, delivery services, and social platforms. It uses advanced AI technology to detect and prevent harmful behaviors, ensuring a safer and more positive user experience. Modulate helps organizations comply with regulations, enhance user safety, and improve community interactions through its customizable and intelligent moderation tools.

Responsible AI Licenses (RAIL)

Responsible AI Licenses (RAIL) is an initiative that empowers developers to restrict the use of their AI technology to prevent irresponsible and harmful applications. They provide licenses with behavioral-use clauses to control specific use-cases and prevent misuse of AI artifacts. The organization aims to standardize RAIL Licenses, develop collaboration tools, and educate developers on responsible AI practices.

Giskard

Giskard is an automated Red Teaming platform designed to prevent security vulnerabilities and business compliance failures in AI agents. It offers advanced features for detecting AI vulnerabilities, proactive monitoring, and aligning AI testing with real business requirements. The platform integrates with observability stacks, provides enterprise-grade security, and ensures data protection. Giskard is trusted by enterprise AI teams and has been used to detect over 280,000 AI vulnerabilities.

Nightfall AI

Nightfall AI is an all-in-one data loss prevention platform that helps organizations prevent data leaks by putting data loss prevention on autopilot across SaaS & Gen AI apps, endpoints, and browsers. It offers features such as data exfiltration prevention, data detection & response, and data discovery & classification. Nightfall AI uses AI-powered LLM & behavioral models to deeply understand content sensitivity and data lineage, providing complete coverage across various applications and devices. The platform ensures frictionless deployment & maintenance with API-based integrations and lightweight agents, offering a streamlined user experience for quick understanding of exposure and user intent. Nightfall AI also involves and coaches end users to self-remediate, reducing the burden on SOC teams.

EchoMark

EchoMark is a cloud-based data leak prevention solution that uses invisible forensic watermarks to protect sensitive information from unauthorized access and exfiltration. It allows organizations to securely share and collaborate on documents and emails without compromising privacy and security. EchoMark's advanced investigation tools can trace the source of a leaked document or email, even if it has been shared via printout or photo.

Scopey

Scopey is an AI-powered scope management tool designed to help businesses manage shifting client demands and prevent scope creep. It offers real-time tracking of project changes, detailed scopes of work creation, seamless integration with team workflows, and upselling opportunities. Scopey aims to save time, increase revenue, ensure transparency, stop scope creep, and boost project success effortlessly.

Rootly

Rootly is an AI-native incident management platform designed to help prevent and resolve incidents faster by leveraging AI technology. It offers features such as AI SRE, on-call incident response, retrospectives, platform integrations, and incident communications. Rootly is loved by fast-growing startups and Fortune 500 companies for its reliability, scalability, and extensibility. The platform automates workflows, provides real-time collaboration, and offers proactive suggestions to streamline incident management processes.

AI Scam Detective

AI Scam Detective is an AI tool designed to help users detect and prevent online scams. Users can input messages or conversations into the tool, which then provides a scam likelihood score from 1 to 10. The tool aims to empower users to identify potential scams and protect themselves from fraudulent activities. It was created by Sam Meehan.

TrueBees

TrueBees is a deepfake detection application that uses Artificial Intelligence to detect and prevent the spread of AI-generated images on social media. It provides a reliable solution for media professionals and individuals to verify the trustworthiness of images, combat deepfakes, and ensure the authenticity of visual content shared online. By combining digital media forensics and blockchain technology, TrueBees offers a secure and accurate platform for image authentication, making it a valuable tool in the fight against disinformation and fraudulent content.

AI Spend

AI Spend is an AI application designed to help users monitor their AI costs and prevent surprises. It allows users to keep track of their OpenAI usage and costs, providing fast insights, a beautiful dashboard, cost insights, notifications, usage analytics, and details on models and tokens. The application ensures simple pricing with no additional costs and securely stores API keys. Users can easily remove their data if needed, emphasizing privacy and security.

Facia.ai

Facia.ai is an AI-powered platform that specializes in liveness detection and deepfake detection solutions for businesses and governments. It offers cutting-edge technology to prevent identity fraud, verify authenticity, and enhance security measures. The platform provides accurate face matching, facial recognition, and age verification services, along with customizable integration options and reliable support. Facia.ai aims to combat misinformation, identity fraud, and evolving threats in various industries by leveraging AI algorithms and real-time detection capabilities.

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Polymer DSPM

Polymer DSPM is an AI-driven Data Security Posture Management platform that offers Data Loss Prevention (DLP) and Breach Prevention solutions. It provides real-time data visibility, adaptive controls, and automated remediation to prevent data breaches. The platform empowers users to actively manage human-based risks and fosters enterprise-wide behavior change through real-time nudges and risk scoring. Polymer helps organizations secure their data in the age of AI by guiding employees in real-time to prevent accidental sharing of confidential information. It integrates with popular chat, file storage, and GenAI tools to protect sensitive data and reduce noise and data exposure. The platform leverages AI to contextualize risk, trigger security workflows, and actively nudge employees to reduce risky behavior over time.

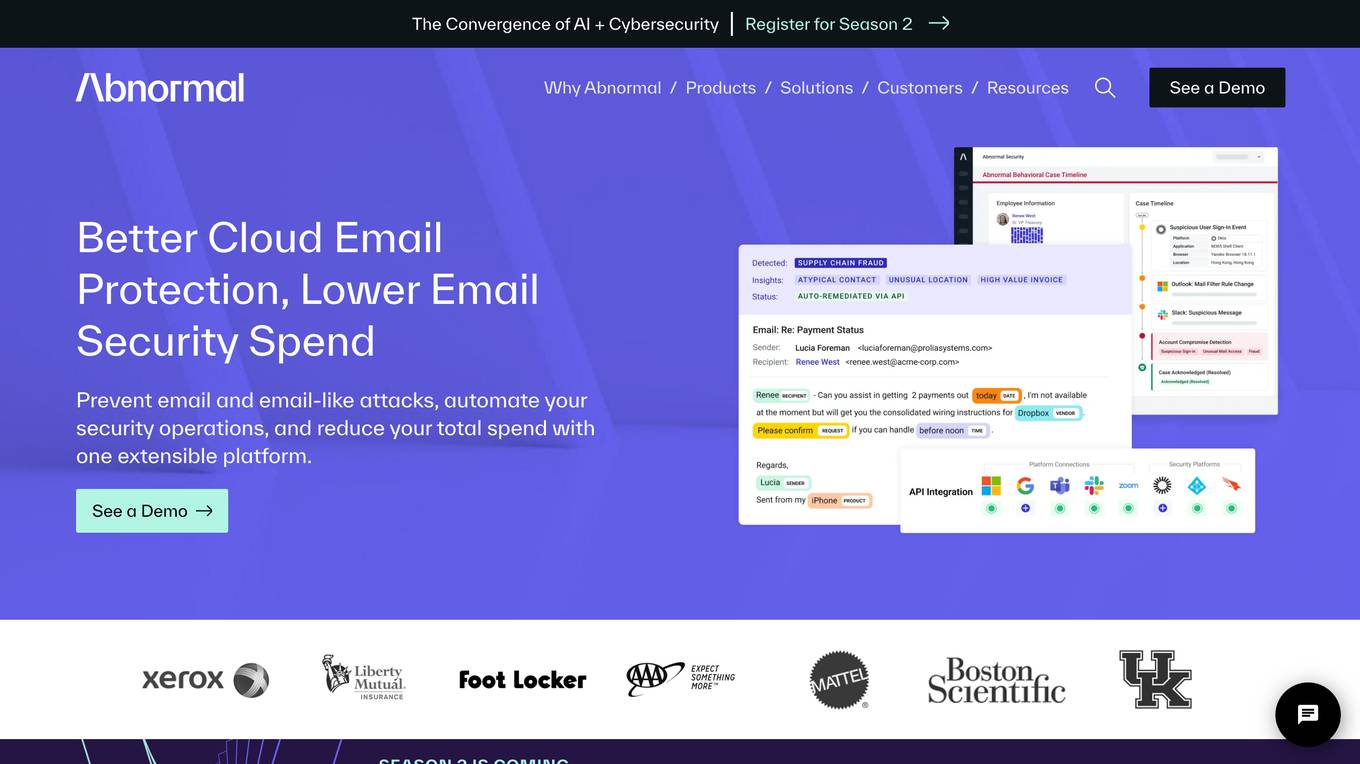

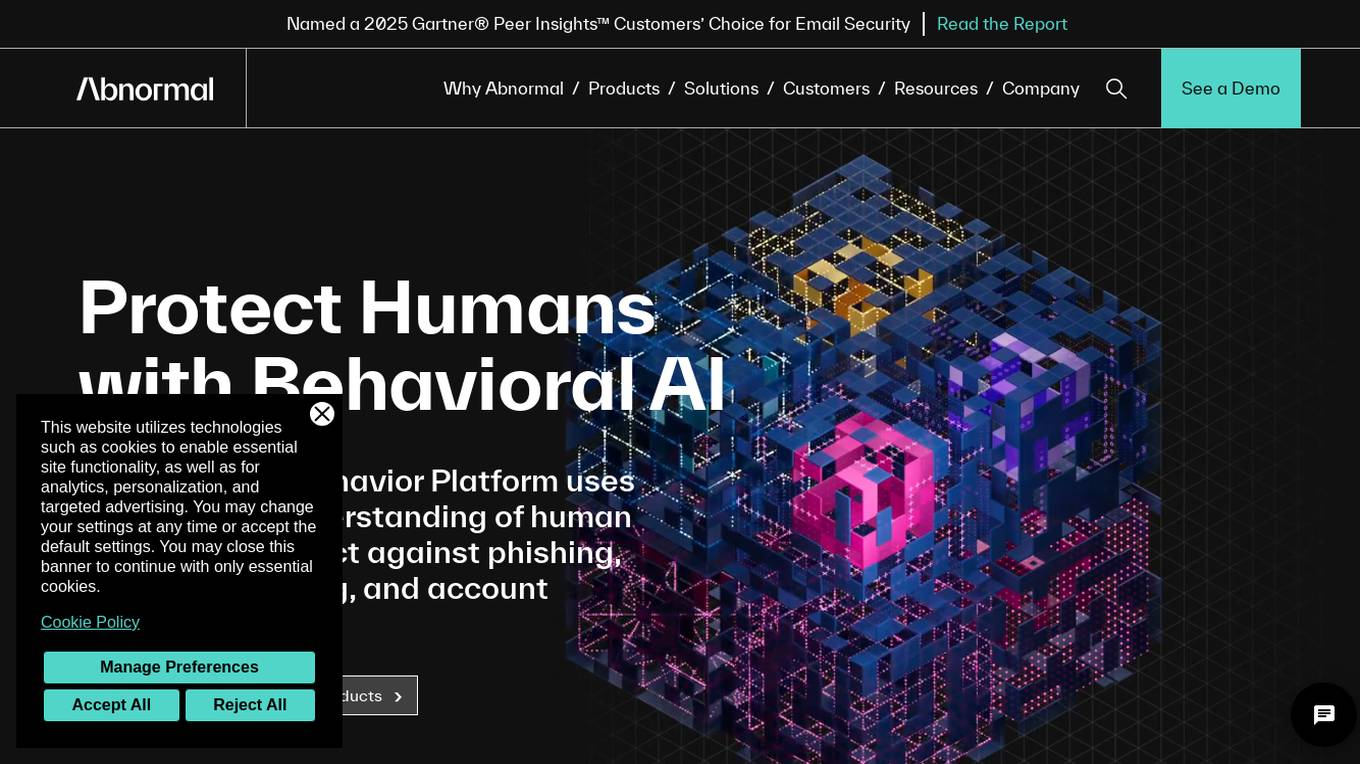

Abnormal

Abnormal is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email attacks such as phishing, social engineering, and account takeovers. The platform offers unified protection across email and cloud applications, behavioral anomaly detection, account compromise detection, data security, and autonomous AI agents for security operations. Abnormal is recognized as a leader in email security and AI-native security, trusted by over 3,000 customers, including 20% of the Fortune 500. The platform aims to autonomously protect humans, reduce risks, save costs, accelerate AI adoption, and provide industry-leading security solutions.

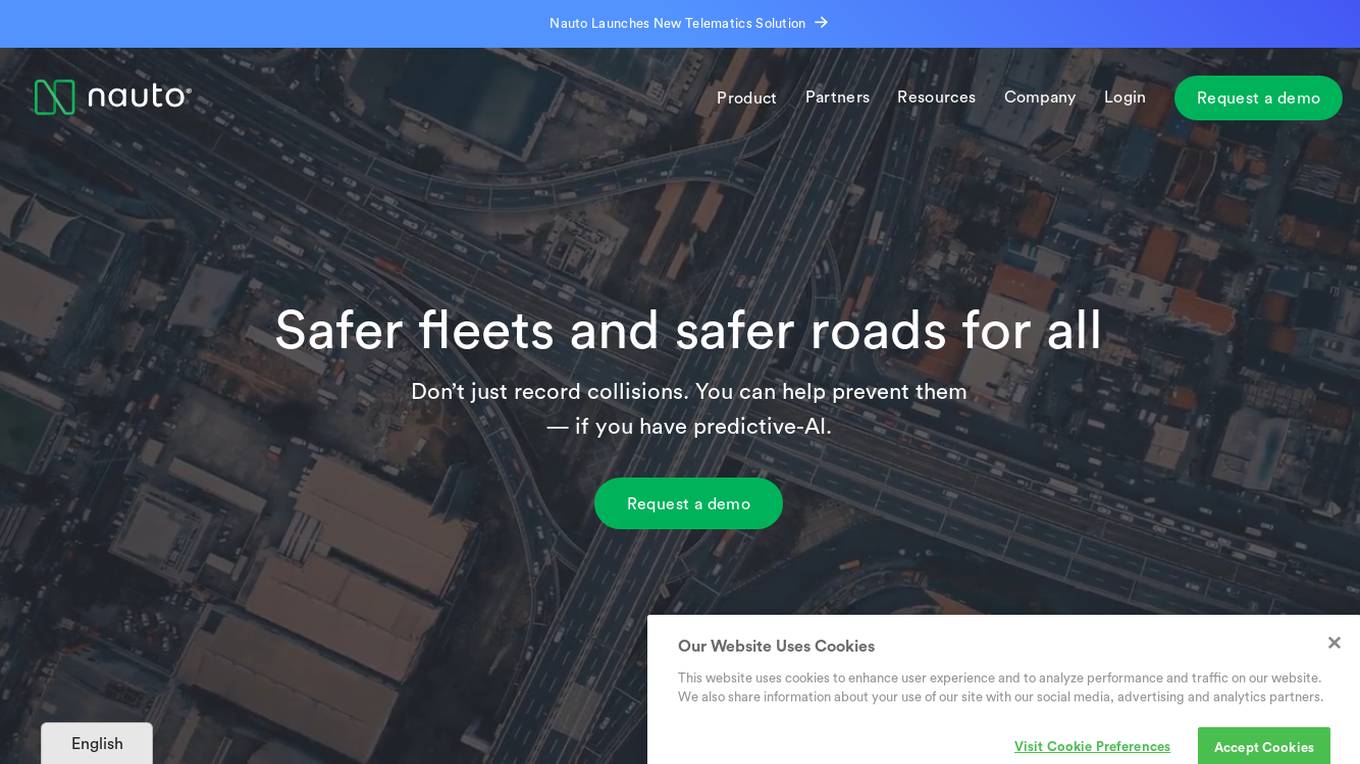

Nauto

Nauto is an AI-powered fleet management software that helps businesses improve driver safety and reduce collisions. It uses a dual-facing camera and external sensors to detect distracted and drowsy driving, as well as in-cabin and external risks. Nauto's predictive AI algorithms can assess and predict imminent risks and alert drivers to them in time to avoid collisions. It also provides real-time alerts to end distracted and drowsy driving, and self-guided coaching videos to help drivers improve their behavior. Nauto's claims management feature can quickly and reliably process and resolve claims, resulting in millions of dollars saved. Overall, Nauto is a comprehensive driver and vehicle safety platform that can help businesses reduce risk, improve safety, and save money.

Abnormal Security

Abnormal Security is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email threats such as phishing, social engineering, and account takeovers. The platform is trusted by over 3,000 customers, including 25% of the Fortune 500 companies. Abnormal Security offers a comprehensive cloud email security solution, behavioral anomaly detection, SaaS security, and autonomous AI security agents to provide multi-layered protection against advanced email attacks. The platform is recognized as a leader in email security and AI-native security, delivering unmatched protection and reducing the risk of phishing attacks by 90%.

0 - Open Source AI Tools

20 - OpenAI GPTs

Online Doc

A virtual general practitioner that makes a basic diagnosis based on the user's description of their symptoms and gives advice on treatment and on how to prevent such diseases.

Plagiarism Checker

Plagiarism Checker GPT is powered by Winston AI and created to help identify plagiarized content. It is designed to help you detect instances of plagiarism and maintain integrity in academia and publishing. Winston AI is the most trusted AI and Plagiarism Checker.

Punaises de Lit

Bed bug expert offering identification advice and steps to take in case of an infestation.

Data Guardian

Expert in privacy news, data breach advice, and multilingual data export assistance.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

STOP HPV End Cervical Cancer

Eradicate Cervical Cancer by Providing Trustworthy Information on HPV

Knee and Leg Care Assistant

Helps users with knee and leg care, offering exercises and wellness tips.

Physiotherapist

A virtual physiotherapist providing tailored exercises and stretches for pain relief.