Best AI tools for Practice Responsible Gambling

20 - AI tool Sites

75 Wbet Com Daftar : OLX500

75 Wbet Com Daftar : OLX500 is an online platform offering a variety of casino games, including slots, live casino, poker, and more. Users can access popular games like Sweet Bonanza, Mahjong Ways, and Gates of Olympus. The platform also provides guidance on how to install the app on Android devices. With a focus on responsible gambling, 75 Wbet Com Daftar : OLX500 aims to enhance the gaming experience for its users.

Filechatai

The website is a platform that provides information about reliable betting sites in 2024. It offers access to current login addresses for various betting companies. Users can find details about bonuses, payment methods, sports betting options, casino games, live betting, and mobile compatibility. The site emphasizes the importance of choosing reliable and licensed casino sites. It also covers topics such as welcome bonuses, game fairness, payment security, and bonus terms and conditions. Additionally, it discusses strategies like the Reverse Martingale and card counting in blackjack. The platform aims to guide users in making informed decisions while engaging in online betting and casino activities.

AIGA AI Governance Framework

The AIGA AI Governance Framework is a practice-oriented framework for implementing responsible AI. It provides organizations with a systematic approach to AI governance, covering the entire process of AI system development and operations. The framework supports compliance with the upcoming European AI regulation and serves as a practical guide for organizations aiming for more responsible AI practices. It is designed to facilitate the development and deployment of transparent, accountable, fair, and non-maleficent AI systems.

Microsoft Responsible AI Toolbox

Microsoft Responsible AI Toolbox is a suite of tools designed to assess, develop, and deploy AI systems in a safe, trustworthy, and ethical manner. It offers integrated tools and functionalities to help operationalize Responsible AI in practice, enabling users to make user-facing decisions faster and more easily. The Responsible AI Dashboard provides a customizable experience for model debugging, decision-making, and business actions. With a focus on responsible assessment, the toolbox aims to promote ethical AI practices and transparency in AI development.

Gavel

Gavel is a legal document automation and intake software designed for legal professionals. It offers a range of features to help lawyers and law firms automate tasks, streamline workflows, and improve efficiency. Gavel's AI-enabled onboarding process, Blueprint, works without accessing any client data. The software also includes features such as secure client collaboration, integrated payments, and custom workflow creation. Gavel is suitable for legal practices of all sizes and practice areas, from solo practitioners to large firms.

Simbo AI

Simbo AI is a Gen AI platform designed for healthcare enterprises, offering autonomous applications for medical practice automation. It combines LLMs with symbolic knowledge bases to provide hallucination-free responses. The platform is fully controllable, consistent, secure, and responsible, ensuring accurate and reliable AI interactions. Simbo AI utilizes Symbolic RAG technology with Lossless NLU for exact search and fact-checking capabilities. It aims to automate medical practices, reduce costs, and improve patient care, ultimately enhancing the lives of doctors and patients.

Responsible AI Institute

The Responsible AI Institute is a global non-profit organization dedicated to equipping organizations and AI professionals with tools and knowledge to create, procure, and deploy AI systems that are safe and trustworthy. They offer independent assessments, conformity assessments, and certification programs to ensure that AI systems align with internal policies, regulations, laws, and best practices for responsible technology use. The institute also provides resources, news, and a community platform for members to collaborate and stay informed about responsible AI practices and regulations.

Google Public Policy

Google Public Policy is a website dedicated to showcasing Google's public policy initiatives and priorities. It provides information on various topics such as consumer choice, economic opportunity, privacy, responsible AI, security, sustainability, and trustworthy information & content. The site highlights Google's efforts in advancing bold and responsible AI, strengthening security, and promoting a more sustainable future. It also features news updates, research briefs, and collaborations with organizations to address societal challenges through technology and innovation.

Lumenova AI

Lumenova AI is an AI platform that focuses on making AI ethical, transparent, and compliant. It provides solutions for AI governance, assessment, risk management, and compliance. The platform offers comprehensive evaluation and assessment of AI models, proactive risk management solutions, and simplified compliance management. Lumenova AI aims to help enterprises navigate the future confidently by ensuring responsible AI practices and compliance with regulations.

Responsible AI Licenses (RAIL)

Responsible AI Licenses (RAIL) is an initiative that empowers developers to restrict the use of their AI technology to prevent irresponsible and harmful applications. They provide licenses with behavioral-use clauses to control specific use-cases and prevent misuse of AI artifacts. The organization aims to standardize RAIL Licenses, develop collaboration tools, and educate developers on responsible AI practices.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural language compliance and security guardrails, an intelligence service, and an enterprise data-powered, end-to-end retrieval augmented generation (RAG) service. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect with top GenAI model providers. Motific.ai enables users to create custom knowledge bases, connect to various data sources, and ensure responsible AI practices. It supports English only and offers insights on usage, time savings, and model optimization.

Arya.ai

Arya.ai is an AI tool designed for Banks, Insurers, and Financial Services to deploy safe, responsible, and auditable AI applications. It offers a range of AI Apps, ML Observability Tools, and a Decisioning Platform. Arya.ai provides curated APIs, ML explainability, monitoring, and audit capabilities. The platform includes task-specific AI models for autonomous underwriting, claims processing, fraud monitoring, and more. Arya.ai aims to facilitate the rapid deployment and scaling of AI applications while ensuring institution-wide adoption of responsible AI practices.

MobiHealthNews

MobiHealthNews is a digital health publication that covers breaking news and trends in healthcare. The website provides insights on AI models in radiology, responsible AI implementation, and digital tools for mental health. It also features news on partnerships in healthcare technology and advancements in AI adoption. MobiHealthNews aims to deliver daily updates on the latest developments in digital health and AI applications.

AI Health World Summit

The AI Health World Summit is a biennial event organized by SingHealth that brings together global leaders in healthcare, academia, government, legal, and industry sectors to explore the latest advancements and applications of artificial intelligence in healthcare. The summit focuses on crucial topics such as AI technologies adoption, clinical applications, data governance, privacy safeguards, advancements in vision and language models for health contexts, responsible AI practices, and AI education. The event aims to foster collaboration and innovation in AI-driven healthcare solutions.

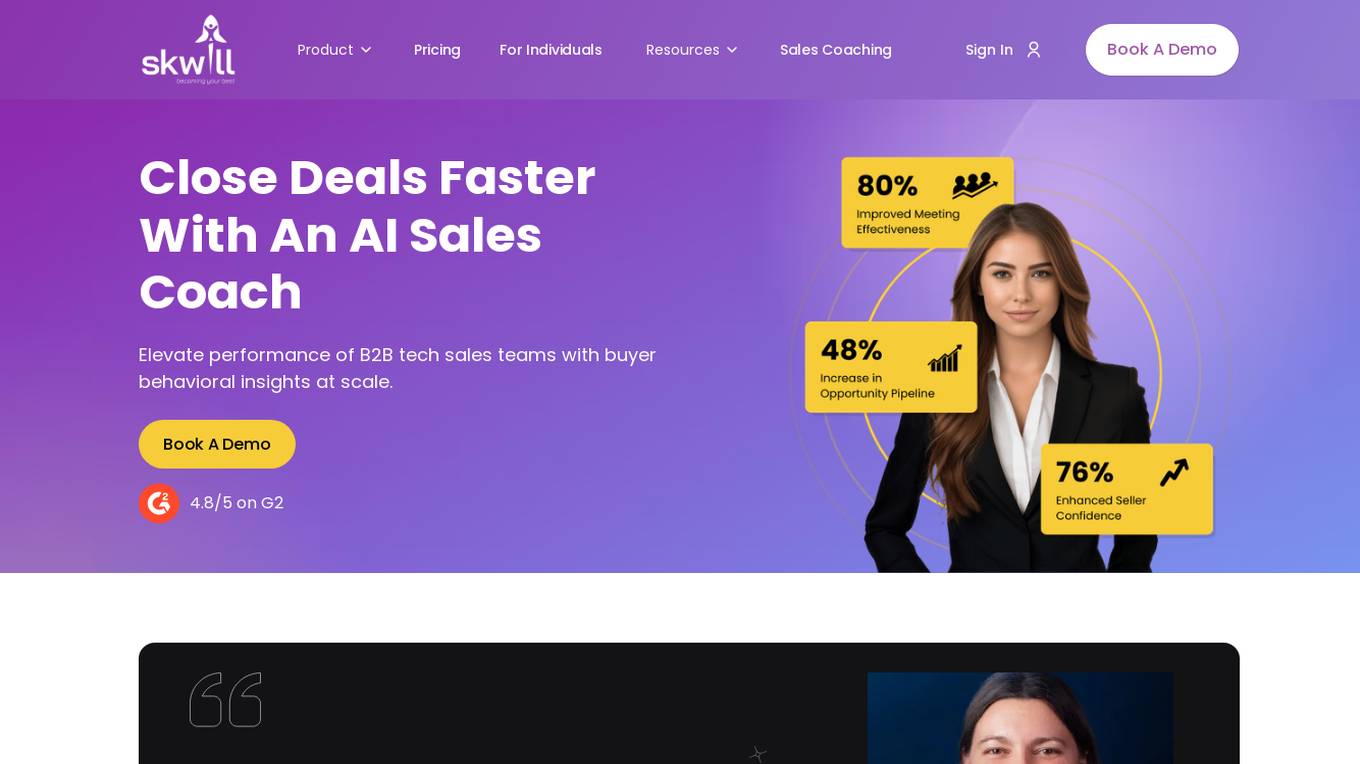

Skwill

Skwill is an AI-powered sales coaching platform designed for high-performing teams. It offers personalized coaching nudges for meetings, uses behavioral and language data for impactful interactions, and ensures data security and responsible AI practices. Skwill empowers users with insights to enhance sales performance, boost engagement, and improve productivity. The platform caters to enterprise sales and customer-facing roles, providing tools for meeting preparation, pipeline building, negotiation, deal closure, sales pitch customization, key account management, and more.

Microsoft AI

Microsoft AI is an advanced artificial intelligence solution that offers a wide range of AI-powered tools and services for businesses and individuals. It provides innovative AI solutions to enhance productivity, creativity, and connectivity across various industries. With a focus on responsible AI practices, Microsoft AI aims to empower organizations to leverage AI technology effectively and securely.

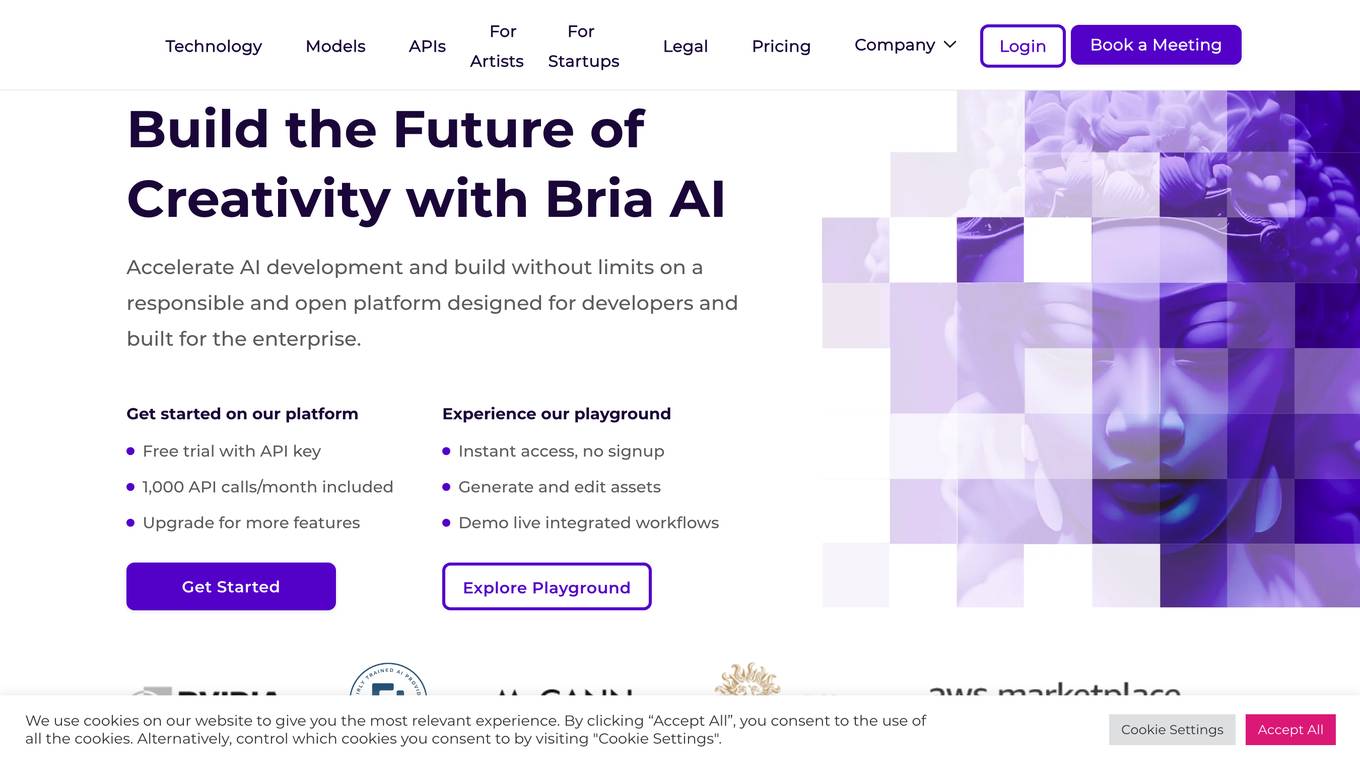

BRIA.ai

BRIA.ai is a visual generative AI platform that provides developers and businesses with the tools they need to build and deploy AI-powered applications. The platform includes a suite of pre-trained foundation models, APIs, and tools that can be used to generate and modify images, videos, and other visual content. BRIA.ai is committed to responsible AI practices and ensures that all of its models are trained on licensed and safe-to-use data.

Typeface

Typeface is an Enterprise Generative AI Platform for Marketing & Content Creation. It offers a suite of AI tools for marketing and content creation, empowering users to create personalized, on-brand content at scale. The platform integrates with various apps and systems to streamline workflows and enhance creativity. Typeface has been recognized for its innovative AI solutions and responsible AI practices, making it a trusted choice for industry leaders.

Letter AI

Letter AI is a revenue acceleration platform powered by Generative AI, designed to empower sales teams to find information faster and support revenue growth. The platform offers AI-powered training and coaching, content creation and management, real-time assistance from an AI co-pilot, and tools for deal pursuit automation. Letter AI combines generative AI technology with company-specific data to deliver a unified revenue enablement platform, enhancing user experience and driving cost savings. The platform ensures security and privacy by adhering to industry-leading standards and responsible data practices.

AI Image Generator

The AI Image Generator by Shutterstock is an innovative tool that allows users to instantly create stunning images from text prompts. Users can apply various visual styles such as cartoon, oil painting, photorealistic, and 3D to their images, customize them, and download the AI-generated images for use in creative projects or social media sharing. The tool supports over 20 languages and is designed to avoid generating inappropriate or offensive content. It also compensates contributors for their roles in the generative AI process, promoting responsible AI practices.

0 - Open Source AI Tools

20 - OpenAI GPTs

AI DEI

Insights on Diversity, Equality, and Inclusion - This AI chat provides info on DEI topics, but opinions may not align with all views. Use responsibly, consult experts, and promote respectful discussions.

👑 Data Privacy for Social Media Companies 👑

Social media companies and platforms collect detailed personal information, preferences, and interactions of users, making it essential to have strong data privacy policies and practices in place.

CSS and React Wizard

CSS Expert in all frameworks with a focus on clarity and best practices

CS Educator Assistant

Middle School CS TA Specializing in UDL and Culturally Responsive Teaching

TailwindCSS GPT

Converts wireframes into Tailwind CSS HTML code, focusing on frontend design to reach a v0 quickly.

GMS Practice Planner

This GPT will create a practice plan for a given time based on the Gold Medal Squared methodology.

CaseCracker™: Consultant Case Interview Practice

Crack open the door to your future. (Partner tip: use the iPhone app for voice chat)

Interview practice

Interview with Ami, an AI-moderated practice interviewer created by @SprinterviewAi

Mock Interview Practice

4.5 ★ A mock interview is a practice interview that can be useful while you're looking for a job. This GPT works for any job and any language.

FORECASTING: PRINCIPLES AND PRACTICE

Forecasting: Principles and Practice (3rd edition), by Rob J Hyndman and George Athanasopoulos, Monash University, Australia

Hairy Otter and the Order of Adjectives

Practice your Wizarding Words to Make Magical Creatures

FAANG.AI

Get into FAANG. Practice with an AI expert in algorithms, data structures, and system design. Do a mock interview and improve.