Best AI tools for: Perform Reinforcement Learning

20 - AI tool Sites

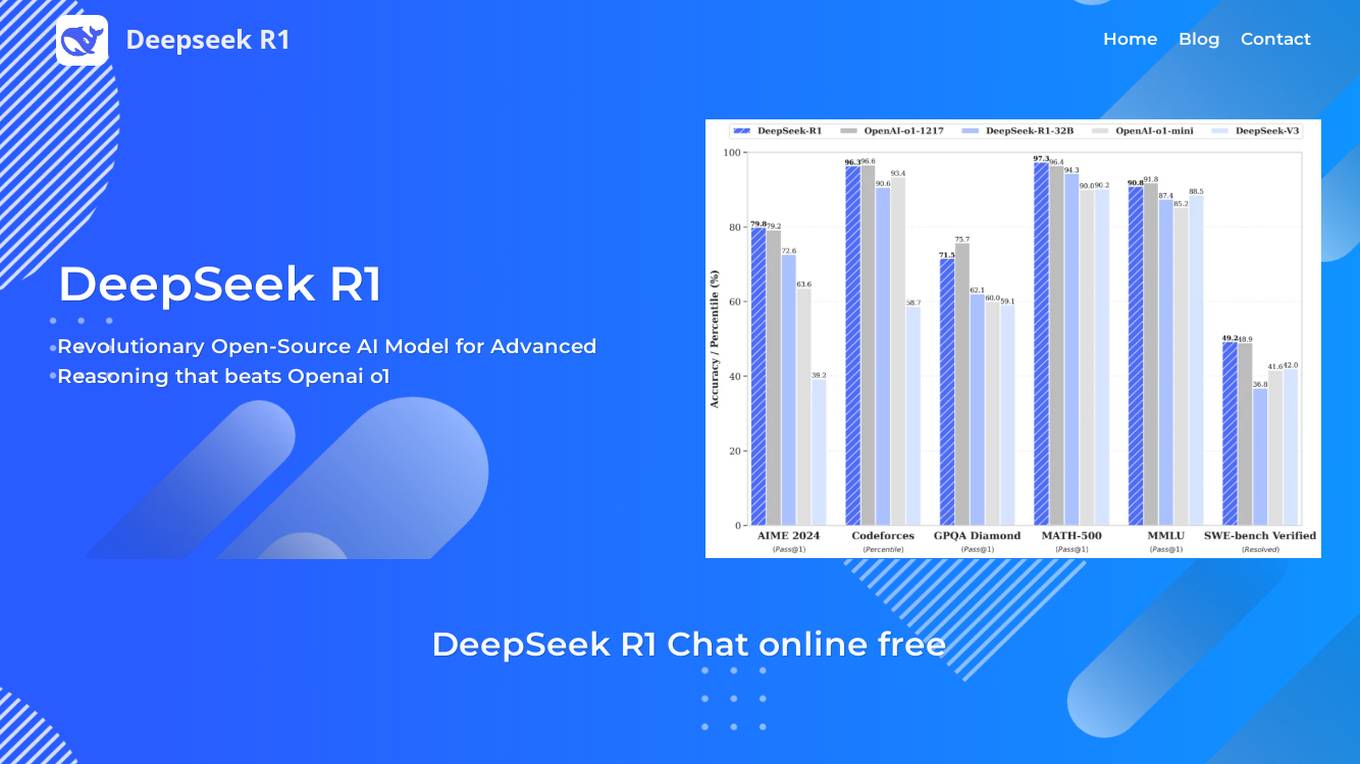

DeepSeek R1

DeepSeek R1 is an open-source AI model for advanced reasoning that outperforms leading AI models in mathematics, coding, and general reasoning tasks. It uses a mixture-of-experts (MoE) architecture with 671B total parameters, of which 37B are active at a time, and a 128K context length, and it incorporates advanced reinforcement learning techniques. DeepSeek R1 is available in multiple variants, including distilled models, optimized for complex problem-solving, multilingual understanding, and production-grade code generation. Its pricing is cost-effective compared to competitors such as OpenAI o1, making it an attractive choice for developers and enterprises.
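The gap between active and total parameters comes from MoE routing: each input is sent to only a few experts, so most weights sit idle on any given step. The sketch below illustrates generic top-k softmax gating in plain Python. It is not DeepSeek R1's actual router; the expert count, the gate scores, and the function names `route_top_k` and `moe_forward` are assumptions made for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_logits, k):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))

# Toy setup (hypothetical sizes): 8 scalar "experts", 2 active per input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_top_k(gate_logits, k=2)
output = moe_forward(1.0, experts, gate_logits, k=2)

# Share of parameters active at a time, using the figures quoted above.
active_fraction = 37 / 671
print(selected, output, round(active_fraction, 3))
```

With the quoted figures, roughly 5.5% of the parameters are used at a time, which is where the compute savings of an MoE design come from.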

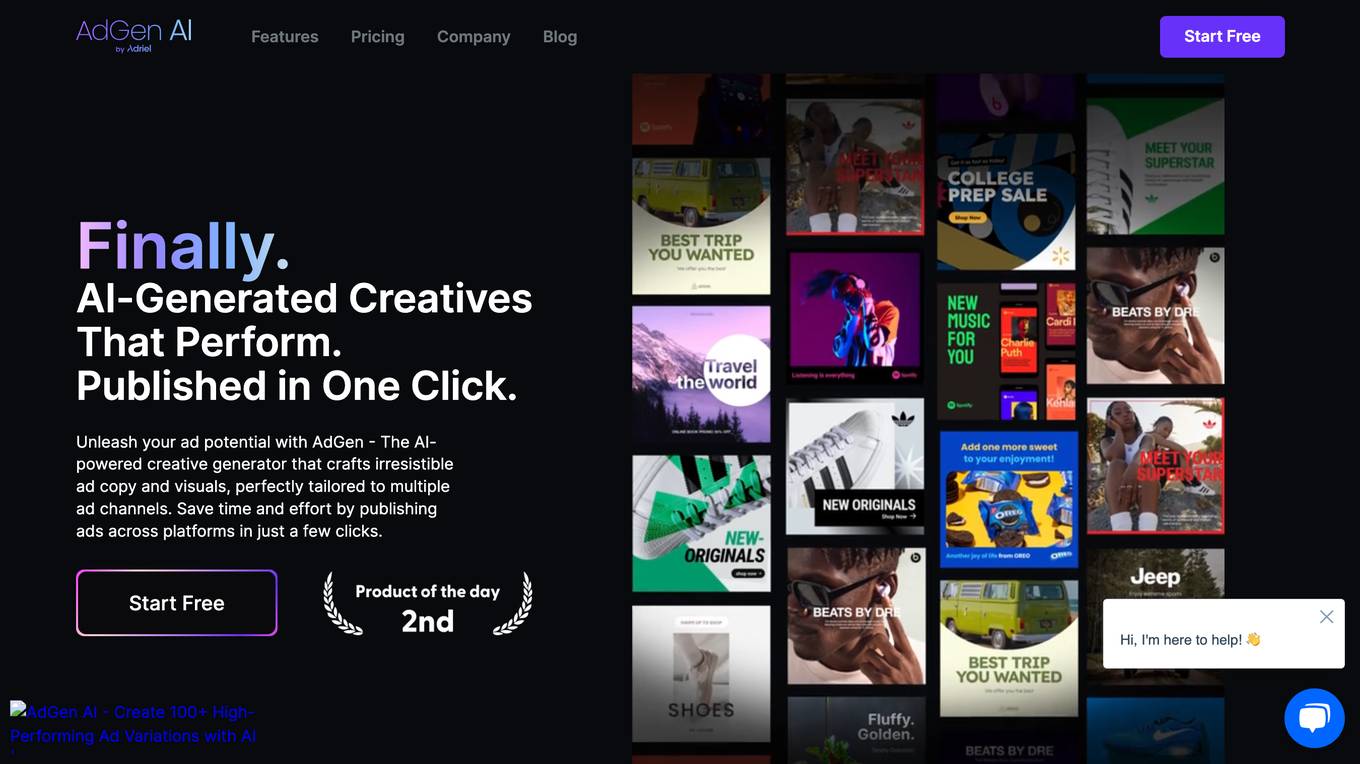

AdGen AI

AdGen AI is an AI-powered creative generator that helps businesses create high-performing ad copy and visuals for multiple ad channels. It uses machine learning models to analyze product data and generate a variety of ad creatives that are tailored to the target audience. AdGen AI also allows users to publish ads directly from the platform, making it easy to launch and manage ad campaigns.

JobInterview.guru

JobInterview.guru is an AI-powered platform designed to provide personalized interview training for job seekers. Leveraging advanced AI technology, the platform offers realistic job interview simulations, detailed insights into interview questions, and personalized feedback to help users prepare effectively. With a focus on efficiency and cost-effectiveness, JobInterview.guru aims to empower users to confidently navigate their job interviews and land their dream jobs.

LambdaTest

LambdaTest is a next-generation cloud platform for mobile app and cross-browser testing that offers a wide range of testing services. It allows users to perform manual, live-interactive cross-browser testing, run Selenium, Cypress, and Playwright scripts on cloud-based infrastructure, and execute AI-powered automation testing. The platform also provides accessibility testing, a real device cloud, a visual regression cloud, and AI-powered test analytics. LambdaTest is trusted by over 2 million users globally and offers a unified digital experience testing cloud to accelerate go-to-market strategies.

Laxis

Laxis is a revolutionary AI Meeting Assistant designed to capture and distill key insights from every customer interaction effortlessly. It integrates seamlessly across platforms, from online meetings to CRM updates, all with a user-friendly interface. Laxis empowers revenue teams to maximize every customer conversation, ensuring no valuable detail is missed. Sales teams can close more deals with AI note-taking and insights from client conversations. Business development teams can engage prospects more effectively and grow their business faster. Marketing teams can repurpose podcasts, webinars, and meetings into engaging content with a single click. Product and market researchers can conduct better research interviews that get to the "aha!" moment faster. Project managers can capture key takeaways and status updates for progress reports. Product and UX designers can capture and organize insights from their interviews and user research.

CampaignBuilder.AI

CampaignBuilder.AI is an AI-powered platform that enables users to quickly generate and launch AI-optimized advertising campaigns across major ad platforms. The tool offers a range of features to streamline campaign creation, including AI-generated copywriting, audience targeting, and creative building. With full-funnel capabilities, CampaignBuilder.AI aims to help businesses of all sizes improve campaign performance and efficiency. The platform provides users with creative freedom and automation to save time and enhance campaign effectiveness.

Laxis

Laxis is an AI Meeting Assistant designed to empower revenue teams by capturing and distilling key insights from customer interactions effortlessly. It offers seamless integration across platforms, from online meetings to CRM updates, with a user-friendly interface. Laxis helps users stay focused during meetings, auto-generate meeting summaries, identify customer requirements, and extract valuable insights. It supports multilingual interactions and real-time transcription, and it answers questions based on past conversations. Trusted by over 35,000 business professionals from 3,000 organizations, Laxis saves time, improves note-taking, and enhances communication with clients and prospects.

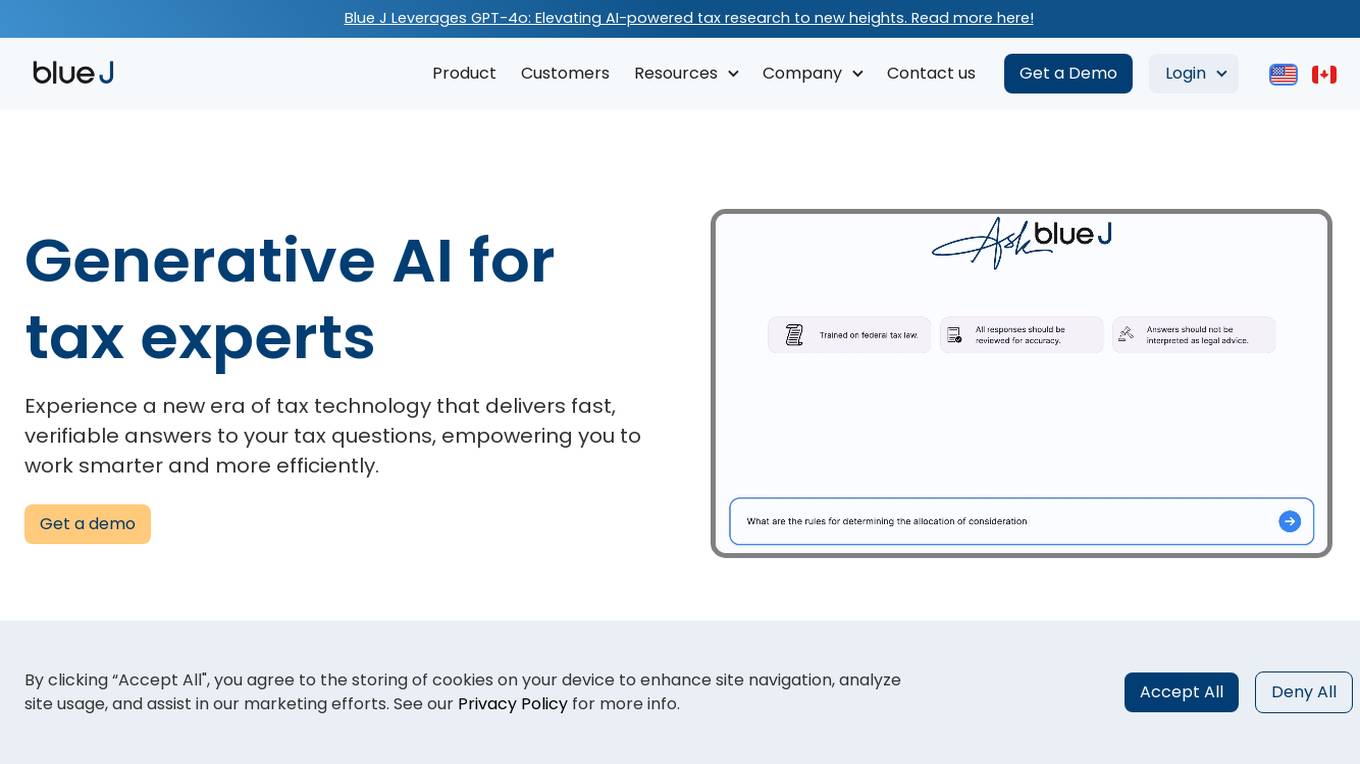

Ask Blue J

Ask Blue J is a generative AI tool designed specifically for tax experts. It provides fast, verifiable answers to complex tax questions, helping professionals work smarter and more efficiently. With its extensive database of curated tax content and industry-leading AI technology, Ask Blue J enables users to conduct efficient research, expedite drafting, and enhance their overall productivity.

Blue J

Blue J is a legal technology company founded in 2015, dedicated to enhancing tax research with the power of AI. Their AI-powered tool, Ask Blue J, provides fast and verifiable answers to tax questions, enabling tax professionals to work more efficiently. Blue J's generative AI technology helps users find authoritative sources quickly, expedite drafting processes, and cater to junior staff's research needs. The tool is trusted by hundreds of leading firms and offers a comprehensive database of curated tax content.

Sales Closer AI

Sales Closer AI is an AI-powered sales tool designed to help businesses scale their sales operations by creating AI agents capable of handling various tasks such as phone calls, scheduling, and conducting personalized discovery calls. The tool integrates seamlessly with existing CRM and marketing tools, enabling users to uncover customer pain points, build rapport, and deliver interactive demos in multiple languages. Sales Closer AI continuously learns and optimizes its approach, providing detailed notes for future reference and boosting conversion rates across different industries.

GPTConsole

GPTConsole is an AI-powered platform that helps developers build production-ready applications faster and more efficiently. Its AI agents can generate code for a variety of applications, including web applications, AI applications, and landing pages. GPTConsole also offers a range of features to help developers build and maintain their applications, including an AI agent that can learn your entire codebase and answer your questions, and a CLI tool for accessing agents directly from the command line.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which typically indicates that the user is not authorized to access the requested page. This error is often caused by issues related to permissions or server configuration. The message 'openresty' suggests that the website might be using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It provides a high-performance web server and a flexible programming environment for web development.

Validator by Yazero

Validator by Yazero is a platform that helps users validate their startup ideas using AI. It provides a community where users can share their ideas, get feedback, and find collaborators. Validator also offers a variety of features to help users improve their ideas, such as idea validation, market research, and financial planning.

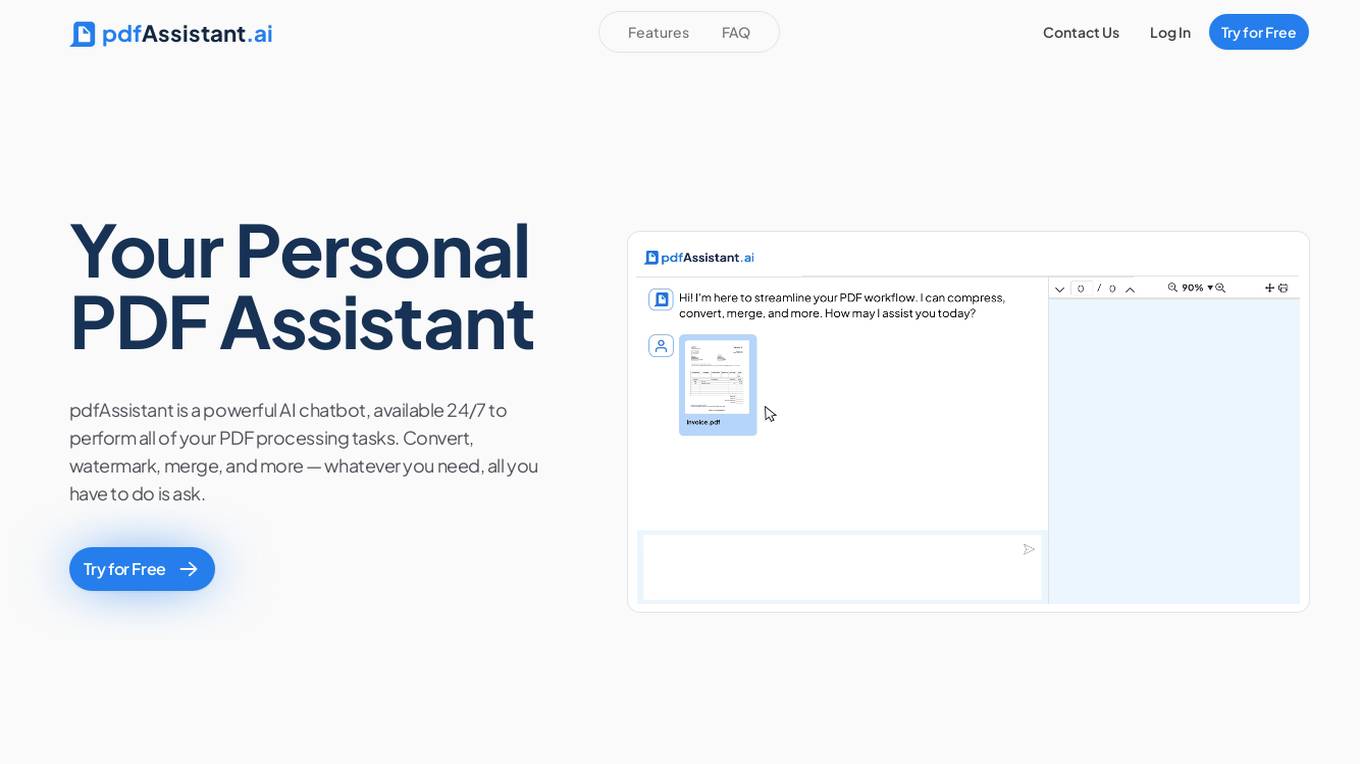

pdfAssistant

pdfAssistant is a powerful AI chatbot designed to assist users with various PDF processing tasks. It offers a user-friendly chat-based interface that allows users to convert, watermark, merge, split, and perform other PDF-related operations using natural language commands. The application is powered by industry-leading PDF and AI technology, providing fast and accurate results. With pdfAssistant, users can work smarter and more efficiently by simplifying complex PDF software processes.

KYP.ai

KYP.ai is a productivity intelligence platform that offers a 360° view of organizations across people, process, and technology dimensions. It provides instant productivity intelligence, end-to-end process optimization, holistic productivity insights, ROI-driven automation, and scalability. The platform supports live visibility, immediate impact, hybrid workplace management, technology landscape rationalization, and AI-powered aggregation and analysis. KYP.ai emphasizes workforce enablement, deployment without integration work, no-code configuration, and secure, privacy-compliant data processing.

Keywordly

Keywordly is an AI-powered SEO Content Workflow Platform designed to help users optimize, write, research, and monitor content for better rankings. It offers features such as AI-powered content creation, content research and planning, content optimization, and more. With Keywordly, users can generate high-quality content aligned with keyword clusters, identify high-value keywords, repurpose and optimize existing content, and boost brand visibility on search engines and platforms like Google and ChatGPT. The platform streamlines the content creation process by providing tools for keyword discovery, clustering, and content strategy planning, helping users drive organic traffic and improve their SEO efforts.

Solidroad

Solidroad is an AI-first training and feedback platform that turns a company's knowledge base into immersive training programs. It offers personalized coaching, realistic simulations, and real-time feedback to improve team performance. The platform aims to make training programs easier to manage and more engaging for employees.

Vizly

Vizly is an AI-powered data analysis tool that empowers users to make the most of their data. It allows users to chat with their data, visualize insights, and perform complex analysis. Vizly supports various file formats like CSV, Excel, and JSON, making it versatile for different data sources. The tool is free to use for up to 10 messages per month and offers a student discount of 50%. Vizly is suitable for individuals, students, academics, and organizations looking to gain actionable insights from their data.

Yogger

Yogger is an AI-powered video analysis and movement assessment tool designed for coaches, trainers, physical therapists, and athletes. It allows users to track form, gather data, and analyze movement for any sport or activity in seconds. With AI-powered screenings, users can get instant scores and insights whether training in person or online. Yogger helps in recovery, training enhancement, and injury prevention, all accessible from a mobile device.

GeekSight Inc.

GeekSight Inc. offers a range of Trello Power-Ups designed to enhance team collaboration and productivity. One of its key products is Notes & Docs, an AI-powered note-taking tool integrated with Trello. The tool aims to streamline task management by organizing task context information and supporting content creation workflows. GeekSight also provides PersonaTool for building customer personas and Card Annotations for enhancing communication on Trello boards.

1 - Open Source AI Tools

R1-Searcher

R1-Searcher is a tool designed to incentivize search capability in large reasoning models (LRMs) via reinforcement learning. It enables LRMs to invoke web search and obtain external information during the reasoning process, using a two-stage, outcome-supervised reinforcement learning approach. It does not require instruction fine-tuning for a cold start and is compatible with existing base LLMs or chat LLMs. The project includes training code, inference code, model checkpoints, and a detailed technical report.
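The idea of searching during reasoning and rewarding only the outcome can be sketched as a small loop. This is a minimal illustration under stated assumptions, not R1-Searcher's actual code: the `<search>`, `<documents>`, and `<answer>` tag formats, the exact-match reward, and the stub `toy_generate` and `toy_search` functions are all hypothetical.

```python
import re

SEARCH_TAG = re.compile(r"<search>(.*?)</search>", re.S)  # hypothetical tag format
ANSWER_TAG = re.compile(r"<answer>(.*?)</answer>", re.S)  # hypothetical tag format

def run_episode(generate, search, question, max_turns=4):
    """Alternate between model generation and retrieval until an answer appears."""
    context = question
    searches = 0
    for _ in range(max_turns):
        step = generate(context)
        context += "\n" + step
        answer = ANSWER_TAG.search(step)
        if answer:
            return answer.group(1).strip(), searches
        query = SEARCH_TAG.search(step)
        if query:
            searches += 1
            docs = search(query.group(1).strip())
            context += "\n<documents>" + docs + "</documents>"
    return None, searches

def outcome_reward(predicted, gold):
    """Outcome supervision: score the final answer only, not intermediate steps."""
    if predicted is None:
        return 0.0
    return 1.0 if predicted.lower() == gold.lower() else 0.0

# Stub policy and search backend, for illustration only.
def toy_generate(context):
    if "<documents>" in context:
        return "<answer>Paris</answer>"
    return "I should look this up. <search>capital of France</search>"

def toy_search(query):
    return "Paris is the capital of France."

answer, num_searches = run_episode(
    toy_generate, toy_search, "What is the capital of France?"
)
reward = outcome_reward(answer, "paris")
print(answer, num_searches, reward)
```

In a real training setup the stub policy would be the LLM being trained and the scalar reward would feed a policy-gradient update; both are outside the scope of this sketch.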

20 - OpenAI GPTs

Athlete's Breathing Coach

Breathing coach for athletes, focusing on performance and recovery

CardioRescue Expert

Assistant specialized in the management of cardiorespiratory arrest according to the recommendations of the ERC (2021) and ILCOR (2023).

The Verbally Mental Magician

Mysterious magician creating baffling verbal and numerical tricks of the mind.

Deus Ex Machina

A guide in esoteric and occult knowledge, utilizing innovative chaos magick techniques.

GMC Repair Manual

Expert in GMC vehicle maintenance and repair, with internet browsing for extra info.

Project Quality Assurance Advisor

Ensures project deliverables meet predetermined quality standards.