Best AI tools for "Optimize Usage"

20 - AI Tool Sites

femtoAI

femtoAI is an embedded AI platform that brings intelligence to everyday devices. It offers AI models, software, tools, and AI accelerator technology that deliver high performance with lower latency and energy consumption. The platform is built on a unique accelerator and on models that fit large AI workloads into tiny silicon chips, and it provides ready-to-deploy models, software development kits, and a sparse processing unit for custom applications. femtoAI brings AI to wearables, household appliances, robotics, and autonomous vehicles, enhancing user experiences across these domains.

Unsloth

Unsloth is an AI tool for fine-tuning large language models such as Llama-3, Mistral, Phi-3, and Gemma. It makes fine-tuning 2x faster and uses 70% less memory, with no degradation in accuracy. Its documentation guides users through training their custom models, covering essentials such as installing and updating Unsloth, creating datasets, and running and deploying models. Users can also integrate third-party tools and use platforms like Google Colab.

WriteText.ai

WriteText.ai is an AI-powered product description generator designed to help e-commerce businesses create high-quality, SEO-optimized product descriptions quickly and efficiently. It offers a range of features to enhance content relevance, optimize keyword usage, and streamline the content creation workflow. With WriteText.ai, users can generate product descriptions in multiple languages, analyze product images for contextual text, and seamlessly publish content directly to their e-commerce platform.

Chatty

Chatty is an AI-powered chat application that uses state-of-the-art models to provide efficient, personalized responses to user queries. It reduces VRAM usage by employing model variants identified by specific suffixes, which lowers memory requirements. The first response may take slightly longer because the model must be downloaded. Chatty aims to enhance the user experience through these AI capabilities.

Valyr

Valyr is a tool that helps you track usage, costs, and latency metrics for your GPT-3 logs with just one line of code. It's easy to get started and can be up and running in less than 3 minutes.

NeuProScan

NeuProScan is an AI platform designed for the early detection of pre-clinical Alzheimer's from MRI scans. It utilizes AI technology to predict the likelihood of developing Alzheimer's years in advance, helping doctors improve diagnosis accuracy and optimize the use of costly PET scans. The platform is fully customizable, user-friendly, and can be run on devices or in the cloud. NeuProScan aims to provide patients and healthcare systems with valuable insights for better planning and decision-making.

HireFlow.net

HireFlow.net is an AI-powered platform designed to optimize resumes and improve job prospects. The website offers a free resume checker that uses advanced artificial intelligence to provide personalized feedback and suggestions for improving resumes. Users can also access features such as CV analysis, cover letter and resignation letter generators, and expert insights to stand out in a competitive job market.

Reclaim.ai

Reclaim.ai is an AI-powered scheduling application designed to optimize users' schedules for better productivity, collaboration, and work-life balance. The app offers features such as Smart Meetings, Scheduling Links, Calendar Sync, Buffer Time, and Time Tracking. It helps users analyze their time across meetings, tasks, and work-life balance metrics. Reclaim.ai is trusted by over 300,000 people across 40,000 companies, with a 4.8/5 rating on G2. The application is known for its ability to defend focus time, automate daily plans, and manage smart events efficiently.

GrowthBar

GrowthBar is an AI-powered writing tool that helps content teams and bloggers research, write, optimize, and distribute SEO-friendly content. It offers a range of features including an AI writing assistant, SEO writing services, keyword research, competitor research, and more. GrowthBar is designed to help users create high-quality content that ranks well in search engines and drives organic traffic.

Priceflow

Priceflow is an AI tool designed to help users create pricing pages that convert. It allows users to learn from the pricing pages of top AI & SaaS products to enhance their pricing strategy, model, and design. The platform offers various resources and subscription options tailored to different needs, such as tiered pricing, usage-based pricing, and more. Priceflow aims to empower businesses to optimize their pricing strategies through AI-driven insights and best practices.

cloudNito

cloudNito is an AI-driven platform that specializes in cloud cost optimization and management for businesses using AWS services. The platform offers automated cost optimization, comprehensive insights and analytics, unified cloud management, anomaly detection, cost and usage explorer, recommendations for waste reduction, and resource optimization. By leveraging advanced AI solutions, cloudNito aims to help businesses efficiently manage their AWS cloud resources, reduce costs, and enhance performance.

RAGNA Desktop

RAGNA Desktop is a private AI multitool that runs locally on your desktop PC or laptop without the need for an internet connection. It is designed to automate repetitive tasks, increase efficiency, and free up capacity for more important matters. The application ensures data privacy and security by processing all AI, calculations, and analyses on your device, keeping sensitive information protected. RAGNA Desktop offers tools for AI automation, flexibility, and security, helping users enhance productivity and optimize work processes while adhering to the latest data protection regulations.

AI Humanizer

AI Humanizer is a free online tool that utilizes advanced algorithms to imitate human writing. It helps users convert AI-generated text into content that appears to be written by a human. The tool offers features like natural language processing, contextual understanding, SEO optimization, and plagiarism detection avoidance. It is beneficial for content creators, marketers, students, and businesses looking to enhance their writing and SEO performance.

Notion Consulting

The website is dedicated to Notion consulting and Notion templates. It offers services to help agencies, law firms, online shops, startups, and real estate developers build clear, efficient systems using Notion. The services aim to create focus, simplify processes, and structure team communication. The website also provides resources for online training and digital marketing strategies, and offers personalized consultations.

Watergate

Watergate is a smart water leak detection application that helps users monitor and manage their water usage efficiently. It offers advanced features powered by AI technology to provide deep insights, proactive leak monitoring, and autopilot functionality. The application aims to optimize water use, maximize savings, and ensure water system safety. Watergate is designed to safeguard properties and conserve water resources by offering intelligent alerts and communication capabilities.

CloudKeeper

CloudKeeper is a comprehensive cloud cost optimization partner that offers solutions for AWS, Azure, and GCP. The platform provides services such as rate optimization, usage optimization, cloud consulting & support, and cloud cost visibility. CloudKeeper combines group buying, commitments management, expert consulting, and analytics to reduce cloud costs and maximize value. With a focus on savings, visibility, and services bundled together, CloudKeeper aims to simplify the cloud cost optimization journey for businesses of all sizes.

Hamming

Hamming is an AI tool designed to help automate voice agent testing and optimization. It offers features such as prompt optimization, automated voice testing, monitoring, and more. The platform allows users to test AI voice agents against simulated users, create optimized prompts, actively monitor AI app usage, and simulate customer calls to identify system gaps. Hamming is trusted by AI-forward enterprises and is built for inbound and outbound agents, including AI appointment scheduling, AI drive-through, AI customer support, AI phone follow-ups, AI personal assistant, and AI coaching and tutoring.

TrainMyAI

TrainMyAI is a comprehensive solution for creating AI chatbots using retrieval augmented generation (RAG) technology. It allows users to build custom AI chatbots on their servers, enabling interactions over WhatsApp, web, and private APIs. The platform offers deep customization options, fine-grained user management, usage history tracking, content optimization, and linked citations. With TrainMyAI, users can maintain full control over their AI models and data, either on-premise or in the cloud.

Gamelight

Gamelight is an AI platform for mobile game marketing. It uses advanced algorithms to analyze app usage data and user behavior, creating detailed user profiles and delivering personalized game recommendations. The platform also features a loyalty program that rewards users with points based on gameplay duration, fostering engagement and retention. Gamelight's ROAS Algorithm identifies the users most likely to make a purchase in your game, providing exclusive access to valuable data points for effective user acquisition.

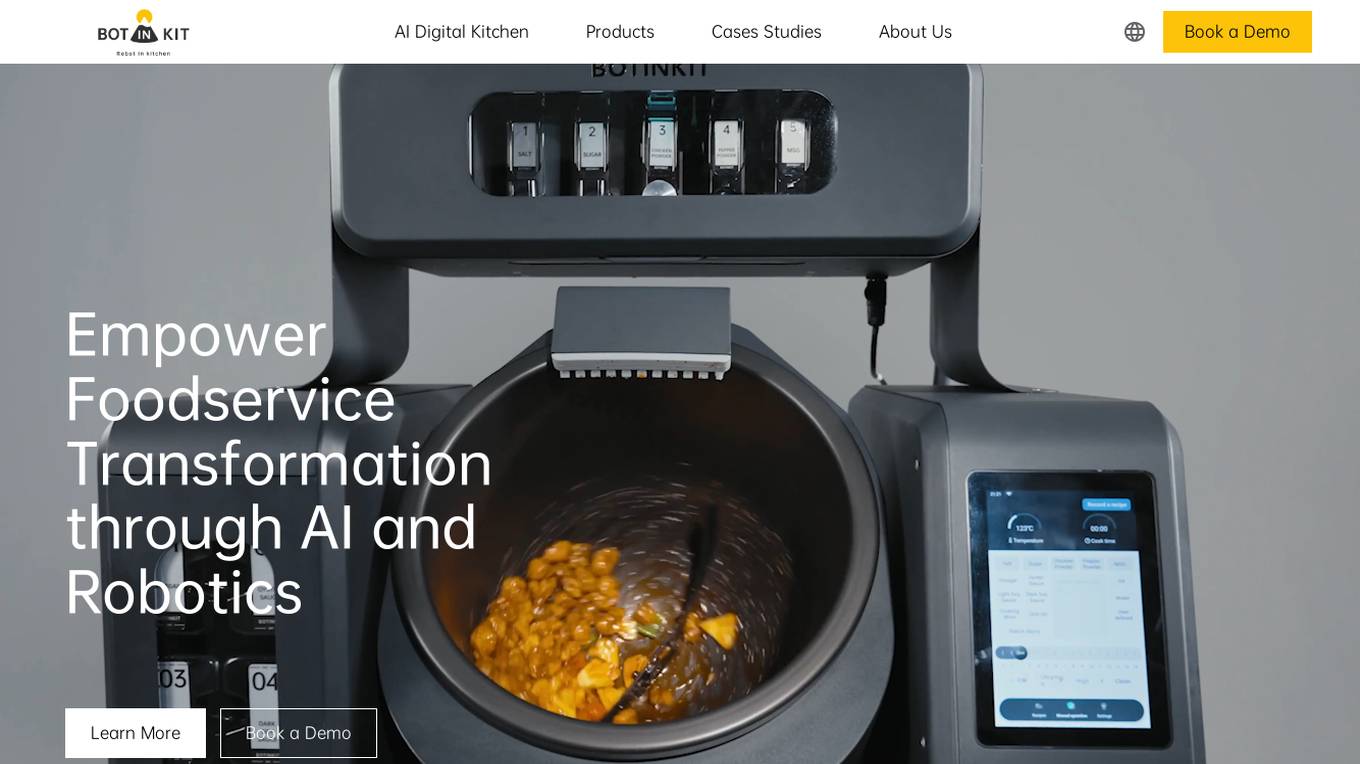

BOTINKIT

BOTINKIT is an AI-driven digital kitchen solution designed to empower foodservice transformation through AI and robotics. It helps chain restaurants globally by eliminating the reliance on skilled labor, ensuring consistent food quality, reducing kitchen labor costs, and optimizing ingredient usage. The innovative solutions offered by BOTINKIT are tailored to overcome obstacles faced by restaurants and facilitate seamless global expansion.

0 - Open Source AI Tools

20 - OpenAI GPTs

Scraping GPT Proxy and Web Scraping Tips

This GPT helps you with web scraping and proxy management. It provides advanced tips and strategies for efficiently handling CAPTCHAs and managing IP rotation. Its expertise extends to ethical scraping practices and optimizing proxy usage for seamless data retrieval.

Solidity Sage

Your personal Ethereum magician: simply ask a question or provide a code sample for insights into vulnerabilities, gas optimizations, and best practices. Feel free to ask about tooling and legendary attacks.

Octorate Code Companion

I help developers understand and use APIs, referencing a YAML model.

Jimmy madman

This AI is built specifically for computer vision, in particular PCB component identification.

Gas Intellect Pro

Leading-Edge Gas Analysis and Optimization: Adaptable, Accurate, Advanced, developed on OpenAI.

OptiCode

OptiCode is designed to streamline and enhance your experience with ChatGPT software, tools, and extensions, ensuring efficient problem resolution and optimization of ChatGPT-related workflows.

Cloud Services Management Advisor

Manages and optimizes an organization's cloud resources and services.

Homescreen Analyzer

Get recommendations based on your phone's Homescreen screenshot! Just add the screenshot in here for analysis 📱🧐

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.