Best AI tools for: Optimize Server Performance

20 - AI tool Sites

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide a scalable and efficient web server solution.

403 Forbidden

The website is returning a 403 Forbidden error, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or misconfigured security settings. The message 'openresty' suggests that the server may be running on the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, known for its high performance and scalability. Users encountering a 403 Forbidden error on a website may need to contact the website administrator or webmaster for assistance in resolving the issue.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a scalable web platform that integrates the NGINX web server with various Lua-based modules, providing powerful features for web development and server-side scripting.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which typically indicates that the user is not authorized to access the requested page. This error is often caused by issues related to permissions or server configuration. The message 'openresty' suggests that the website might be using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It provides a high-performance web server and a flexible programming environment for web development.

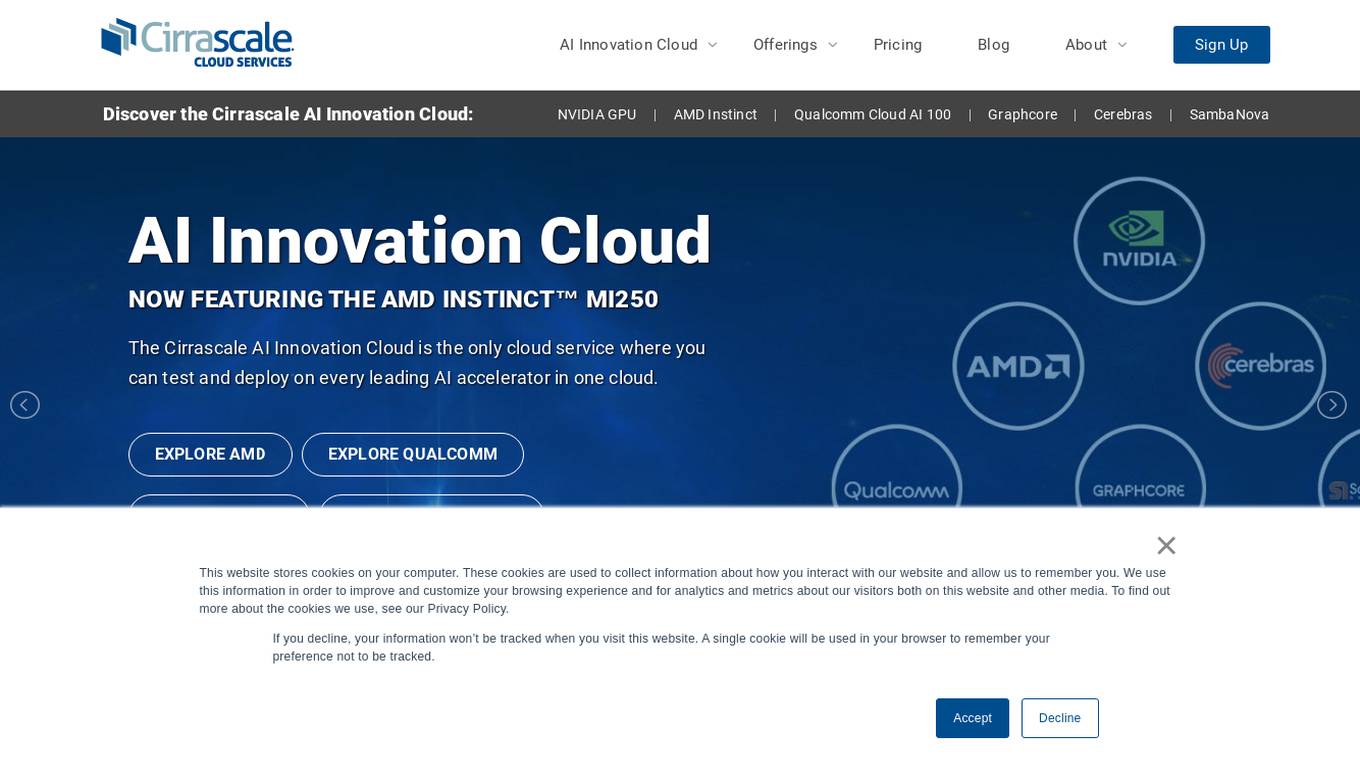

Cirrascale Cloud Services

Cirrascale Cloud Services is an AI tool that offers cloud solutions for Artificial Intelligence applications. The platform provides a range of cloud services and products tailored for AI innovation, including NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud enables users to test and deploy on leading AI accelerators in one cloud, democratizing AI by delivering high-performance AI compute and scalable deep learning solutions. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking solutions to accelerate development, training, and inference workloads.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is often caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the error message is a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and provide advanced features for web development.

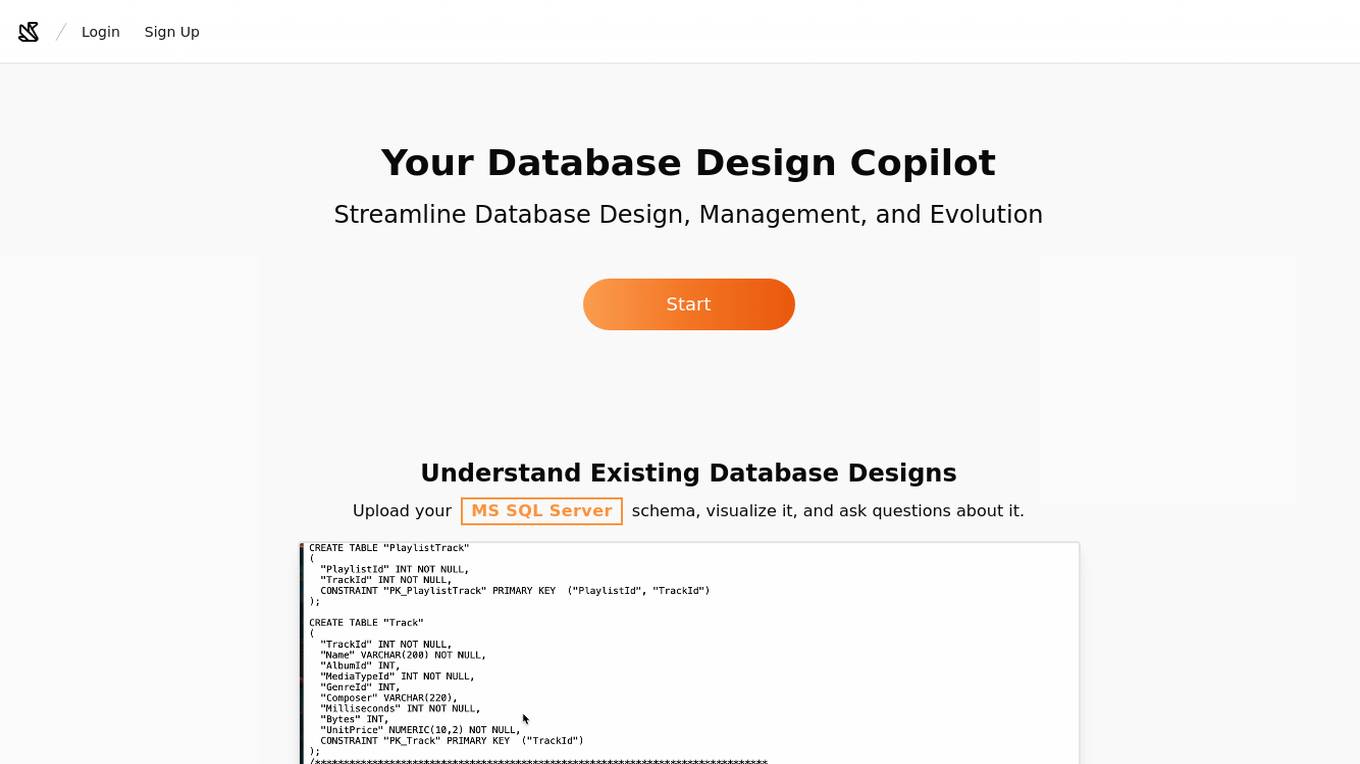

Nabubit

Nabubit is an AI-powered tool designed to assist users in database design. It serves as a virtual copilot, providing guidance and suggestions throughout the database design process. With Nabubit, users can streamline their database creation, optimize performance, and ensure data integrity. The tool leverages artificial intelligence to analyze data requirements, suggest schema designs, and enhance overall database efficiency. Nabubit is a valuable resource for developers, data analysts, and businesses looking to improve their database management practices.

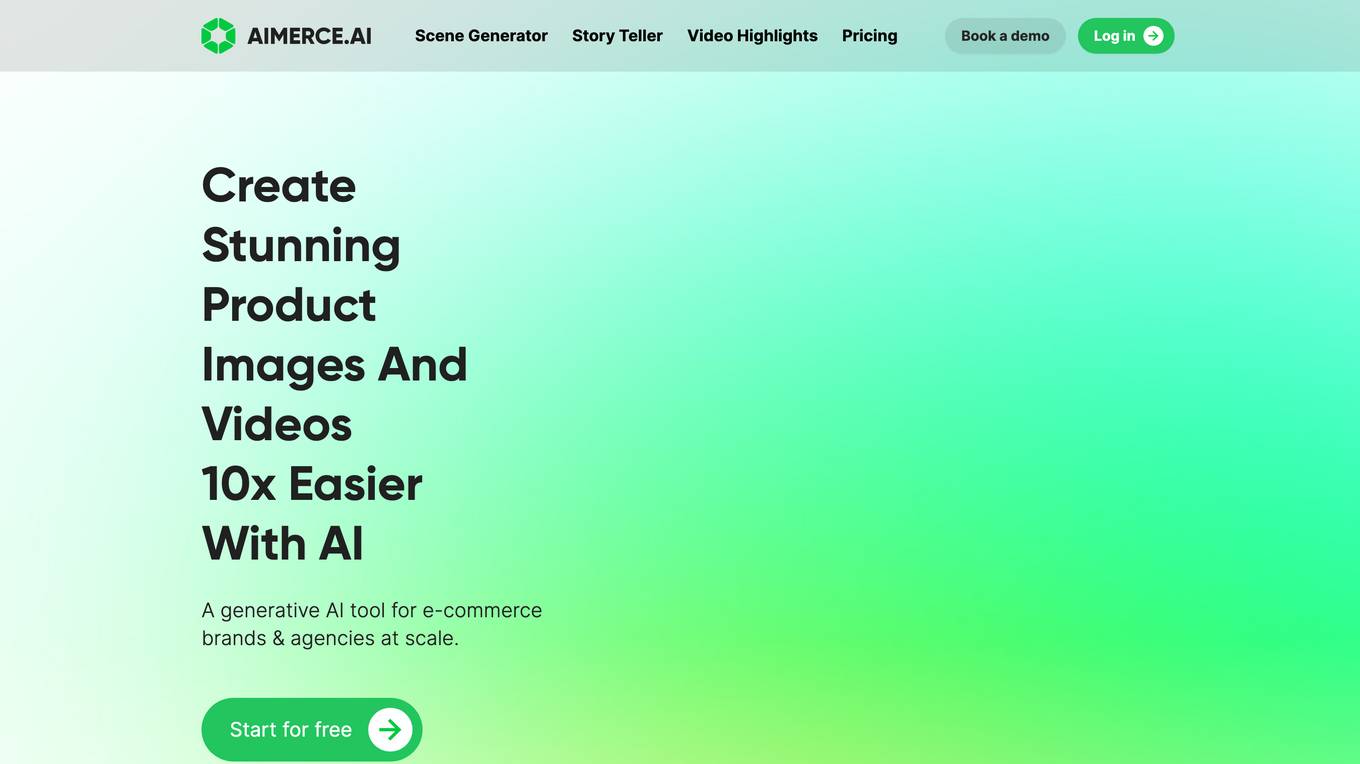

Aimerce

Aimerce is an AI application designed to help Shopify brands unlock additional revenue in the cookieless world. It provides a smart AI email marketer tool that captures, uses, and monetizes high-quality first-party data to enhance marketing performance. Aimerce offers solutions to overcome challenges posed by evolving data privacy regulations, such as Safari's 7-day cookie limitation, by extending tracking capabilities and improving retargeting strategies. The application aims to improve marketing targeting performance, increase revenue, and engage more effectively with customers.

OpenResty

The website appears to be displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often caused by incorrect permissions on the server or a misconfiguration in the server settings. The message 'openresty' suggests that the server may be running the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT that is commonly used to build high-performance web applications. It provides a powerful and flexible way to extend NGINX with Lua scripts, allowing for advanced web server functionality.

N/A

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is currently inaccessible due to server-side issues.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' message suggests that the server is using the OpenResty web platform. OpenResty is a powerful web platform based on NGINX and LuaJIT, providing high performance and flexibility for web applications.

403 Forbidden OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without proper permissions. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, commonly used for building high-performance web applications. The website may be experiencing technical issues or undergoing maintenance.

Cloudflare

Cloudflare is a web infrastructure and website security company that provides content delivery network services, DDoS mitigation, Internet security, and distributed domain name server services. It offers a range of developer products and AI products to enhance web performance and security. Cloudflare's platform allows users to build, secure, and deliver applications globally, with features like Workers, Pages, Images, Stream, AutoRAG, AI Vectorize, AI Gateway, and AI Playground.

Magimaker

Magimaker.com is a website that currently shows a connection timed out error (Error code 522) served by Cloudflare. The site seems to be a platform that may offer services related to web hosting or server management. The error indicates a timeout between Cloudflare's network and the origin web server, which prevents the web page from being displayed. Visitors are advised to wait a few minutes and try again, while website owners are advised to contact their hosting provider for assistance.

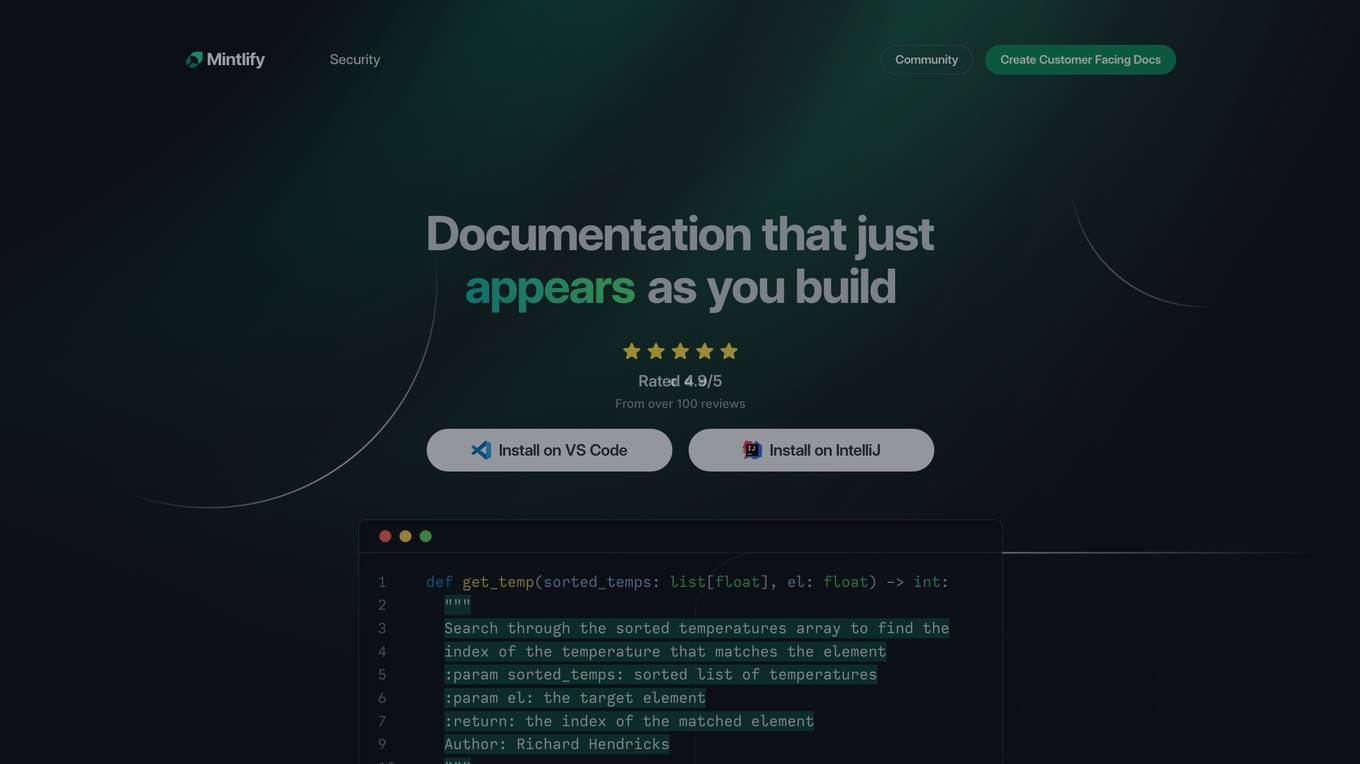

Mintlify

Mintlify.com is a website experiencing an SSL handshake failed error (Error code 525) because Cloudflare is unable to establish an SSL connection to the origin server. The issue may be related to SSL configuration compatibility with Cloudflare, potentially caused by no shared cipher suites. Visitors are advised to try again in a few minutes, while website owners are recommended to check the SSL configuration for compatibility. The website is hosted on writer.mintlify.com and the error occurred in Singapore.

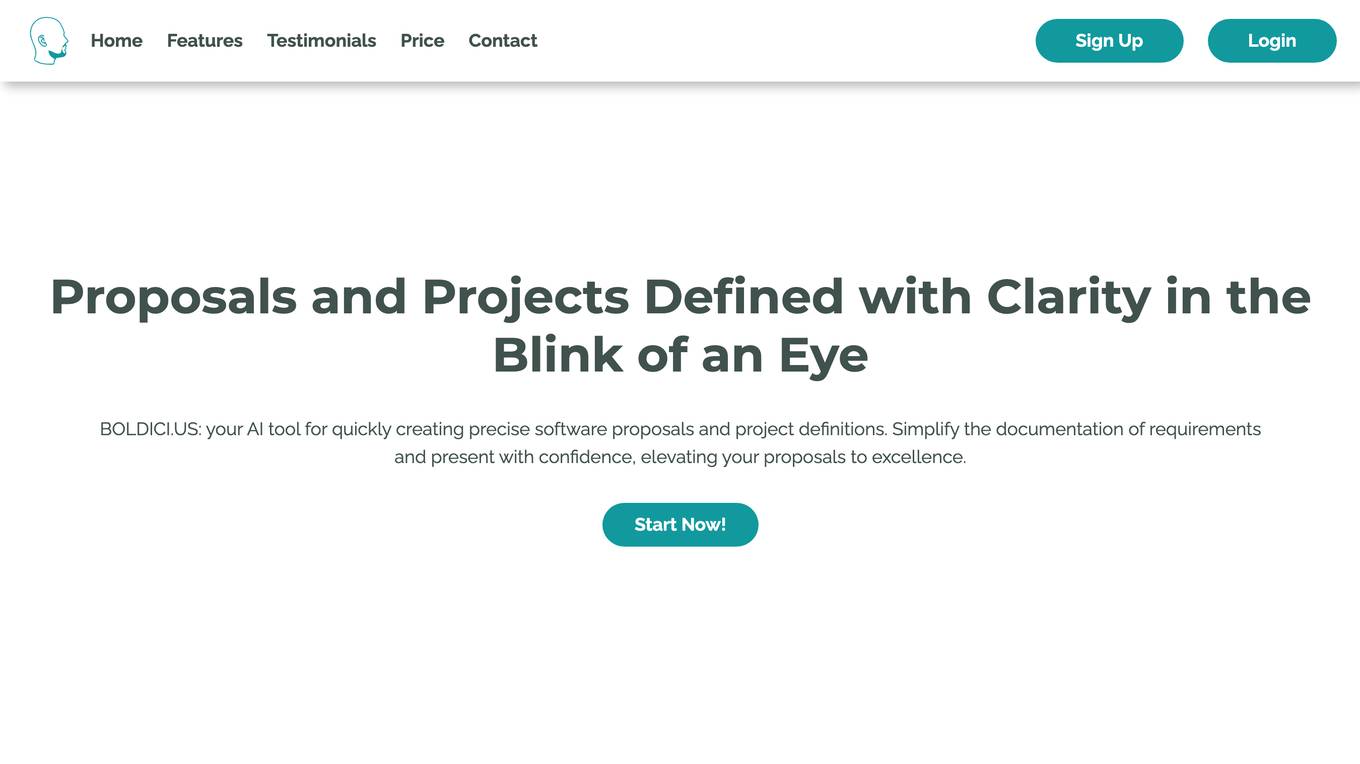

Boldici.us

Boldici.us is a website that currently shows error code 521 on the page. The error message indicates that the web server is down, so a connection cannot be established and the page content cannot be displayed. Visitors are advised to wait a few minutes and try again, while website owners are encouraged to contact their hosting provider for assistance in resolving the issue. The website seems to be using Cloudflare services for performance and security.

GrapixAI

GrapixAI is a provider of low-cost cloud GPU rental services and AI server solutions. The company's focus on flexibility, scalability, and cutting-edge technology enables a variety of AI applications in both local and cloud environments. GrapixAI advertises the lowest prices for on-demand GPUs such as the RTX 4090, RTX 3090, RTX A6000, RTX A5000, and A40. The platform provides a Docker-based container ecosystem for quick software setup, a powerful GPU search console, customizable pricing options, various security levels, GUI and CLI interfaces, a real-time bidding system, and personalized customer support.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' mentioned in the message refers to a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. It seems that the website is currently inaccessible due to server-side issues.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, commonly used for building dynamic web applications. It provides a powerful and flexible environment for web development.

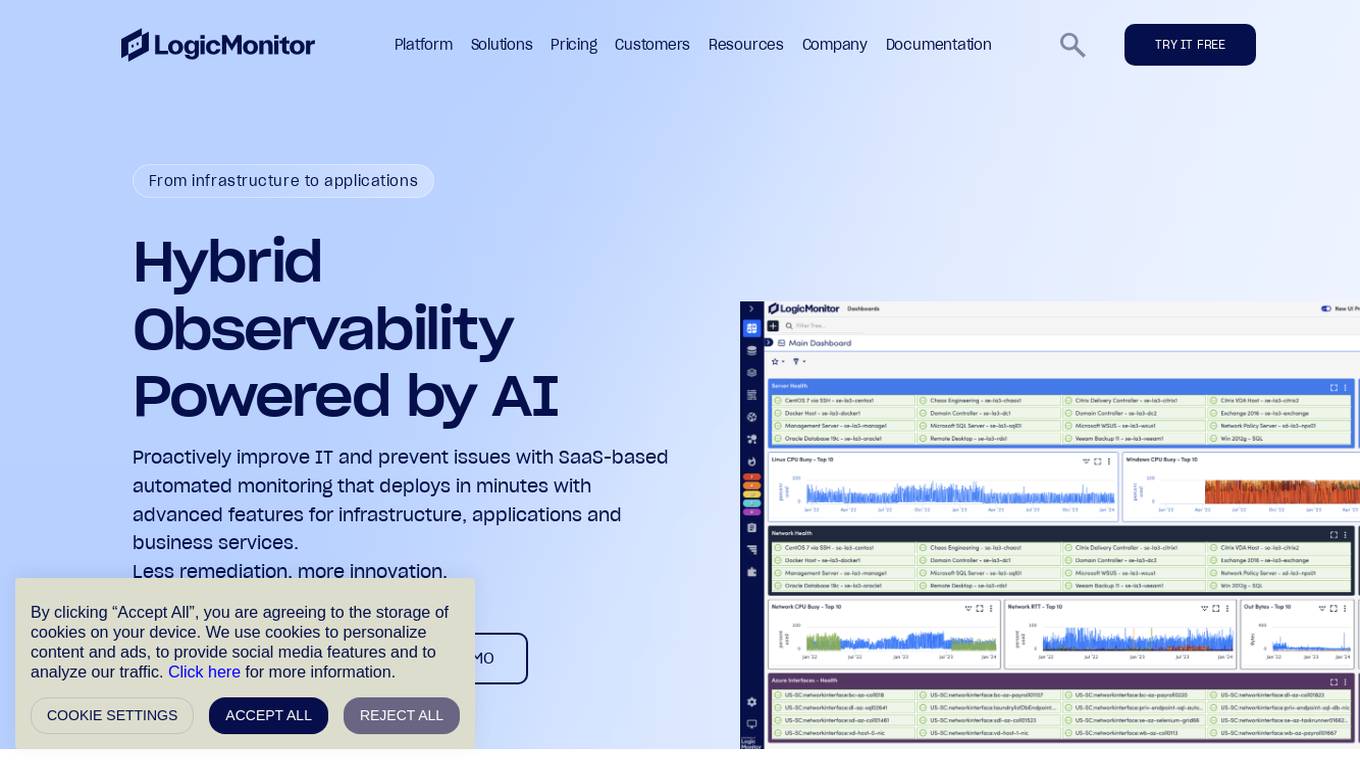

LogicMonitor

LogicMonitor is a cloud-based infrastructure monitoring platform that provides real-time insights and automation for comprehensive monitoring with an agentless architecture. It offers a wide range of features including infrastructure monitoring, network monitoring, server monitoring, remote monitoring, virtual machine monitoring, SD-WAN monitoring, database monitoring, storage monitoring, configuration monitoring, cloud monitoring, container monitoring, AWS monitoring, GCP monitoring, Azure monitoring, digital experience SaaS monitoring, website monitoring, APM, AIOps, Dexda integrations, security dashboards, and platform demo logs. LogicMonitor's AI-driven hybrid observability helps organizations simplify complex IT ecosystems and accelerate incident response.
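
Several entries above describe an HTTP error page (403, 521, 522, 525) rather than the tool itself. As a minimal sketch of how those codes could be labeled when checking a listing, the Python below maps each code to the meaning given in the entries. The function name `describe_status` and the dictionary `CLOUDFLARE_ERRORS` are hypothetical names chosen for illustration, and the 52x labels are copied from the error text quoted above, not from any official registry.

```python
from http import HTTPStatus

# Cloudflare-specific 52x codes are not part of Python's HTTPStatus enum,
# so they are listed by hand using the wording from the entries above.
CLOUDFLARE_ERRORS = {
    521: "Web server is down",
    522: "Connection timed out",
    525: "SSL handshake failed",
}


def describe_status(code: int) -> str:
    """Return a short human-readable label for an HTTP status code."""
    if code in CLOUDFLARE_ERRORS:
        # Errors between Cloudflare's network and the origin server.
        return f"{code} {CLOUDFLARE_ERRORS[code]} (Cloudflare-to-origin error)"
    try:
        # Standard codes such as 403 are covered by the standard library.
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        return f"{code} Unknown status"


if __name__ == "__main__":
    for status in (403, 521, 522, 525):
        print(describe_status(status))
```

A checker built along these lines could flag entries whose description is only an error page, so that they can be re-crawled later.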

0 - Open Source AI Tools

20 - OpenAI GPTs

Software expert

Server admin expert in cPanel, Softaculous, WHM, WordPress, and Elementor Pro.

SQL Server assistant

Expert in SQL Server for database management, optimization, and troubleshooting.

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.

Thermodynamics Advisor

Advises on thermodynamics processes to optimize system efficiency.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

International Tax Advisor

Advises on international tax matters to optimize a company's global tax position.

Investment Management Advisor

Provides strategic financial guidance on investment decisions to optimize an organization's wealth.

ESG Strategy Navigator 🌱🧭

Optimize your business with sustainable practices! ESG Strategy Navigator helps integrate Environmental, Social, Governance (ESG) factors into corporate strategy, ensuring compliance, ethical impact, and value creation. 🌟