Best AI Tools to Optimize Data Workflows

20 - AI tool Sites

DataBahn

DataBahn is an AI-powered data pipeline management platform that empowers global enterprises with intelligent tools to gather, manage, and move data reliably and quickly. It offers a comprehensive solution for data integration, management, and optimization, helping users save costs and time. The platform ensures real-time insights, agility, and value by automating data processes and providing complete data ownership and governance. DataBahn is trusted by ambitious companies and partners worldwide for its efficiency and effectiveness in handling data workflows.

Global Nodes

Global Nodes is a global leader in innovative solutions, specializing in Artificial Intelligence, Data Engineering, Cloud Services, Software Development, and Mobile App Development. They integrate advanced AI to accelerate product development and provide custom, secure, and scalable solutions. With a focus on cutting-edge technology and visionary thinking, Global Nodes offers services ranging from ideation and design to precision execution, transforming concepts into market-ready products. Their team has extensive experience in delivering top-notch AI, cloud, and data engineering services, making them a trusted partner for businesses worldwide.

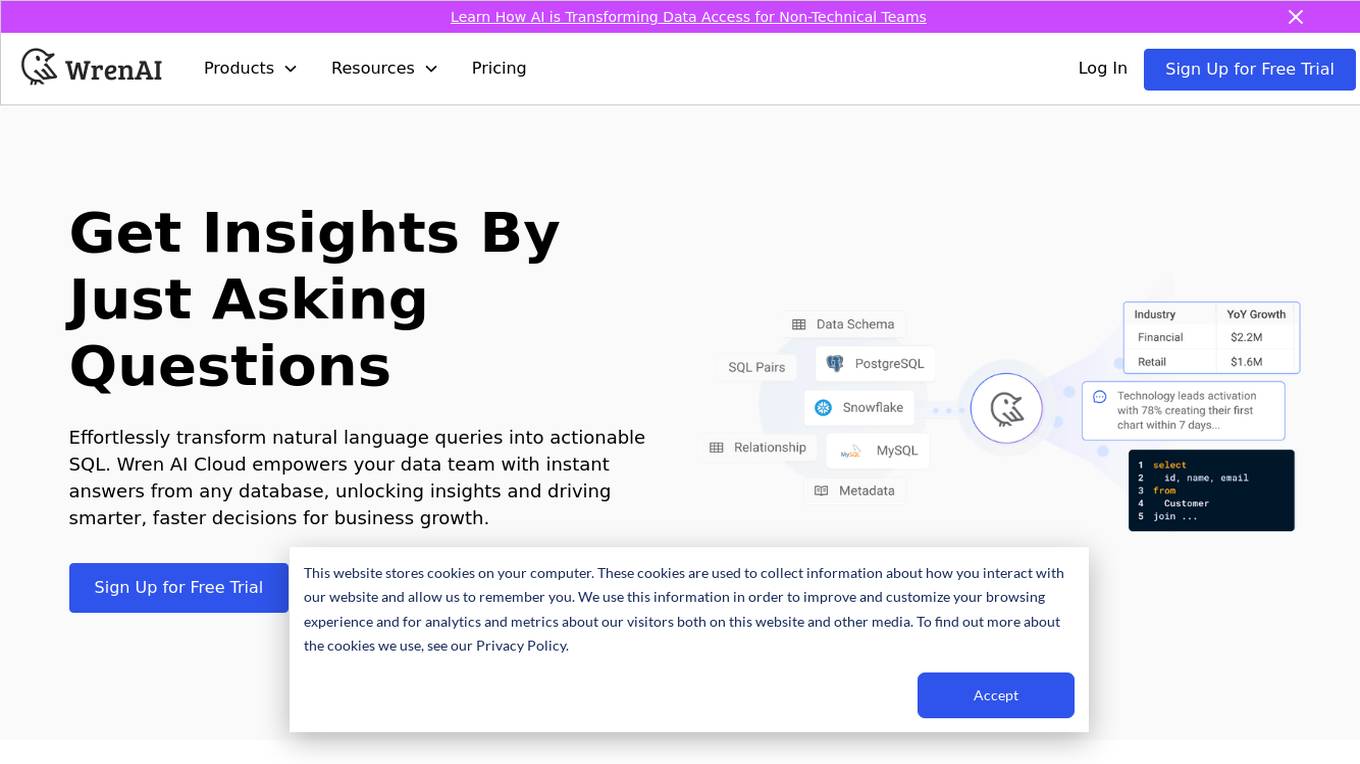

Wren AI Cloud

Wren AI Cloud is an AI-powered SQL agent that gives non-technical teams access to data and insights. It lets users turn natural language questions into SQL queries, enabling faster decision-making. The platform offers self-serve data exploration, AI-generated insights and visuals, and a multi-agent workflow intended to keep results reliable. Wren AI Cloud is scalable and integrates with popular data tools, making it a useful option for businesses that want to apply AI to data-driven decisions.

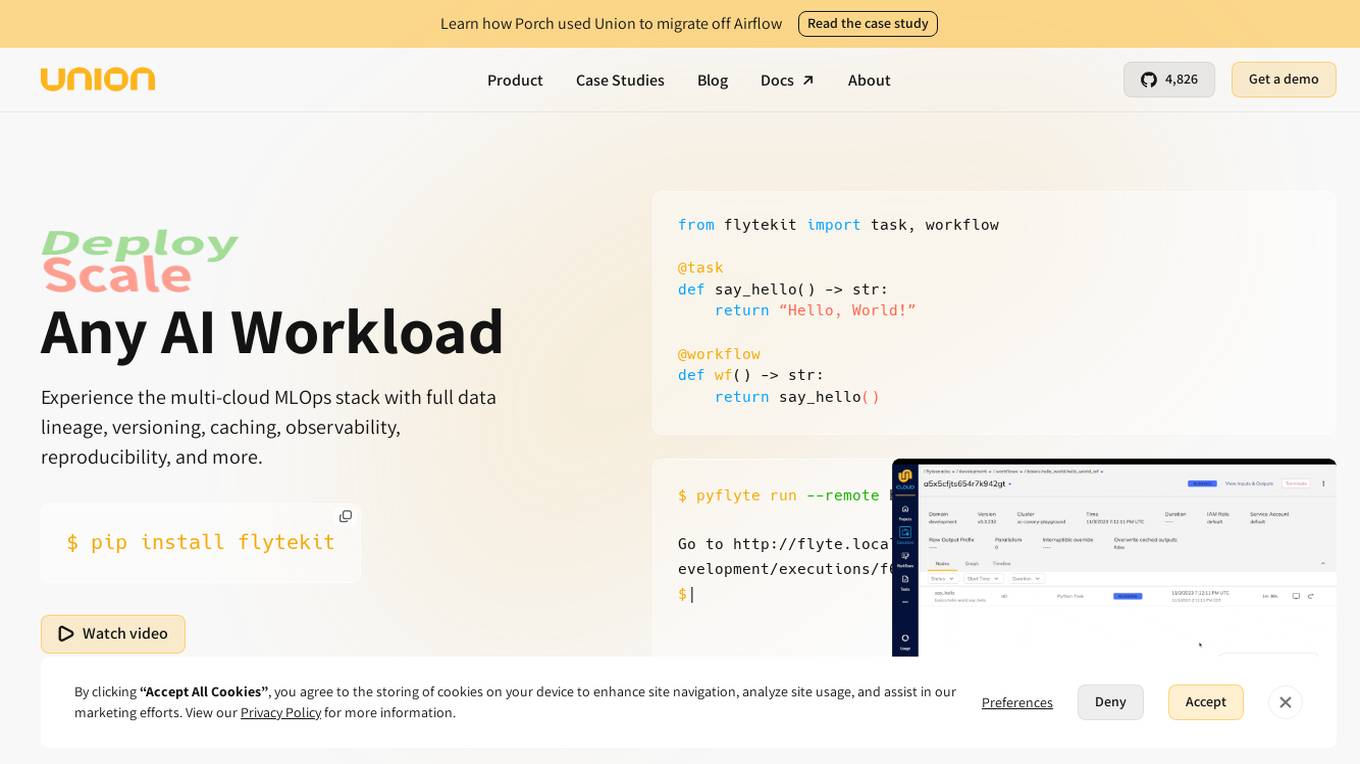

Union.ai

Union.ai is an infrastructure platform designed for AI, ML, and data workloads. It offers a scalable MLOps platform that optimizes resources, reduces costs, and fosters collaboration among team members. Union.ai provides features such as declarative infrastructure, data lineage tracking, accelerated datasets, and more to streamline AI orchestration on Kubernetes. It aims to simplify the management of AI, ML, and data workflows in production environments by addressing complexities and offering cost-effective strategies.

Vilosia

Vilosia is an AI-powered platform that helps medium and large enterprises with internal development teams visualize their software architecture, simplify migration, and improve system modularity. The platform uses Gen AI to automatically add event triggers to the codebase, enabling users to understand data flow, system dependencies, domain boundaries, and external APIs. Vilosia also offers AI workflow analysis that extracts workflows from function call chains and identifies database usage. Users can scan their codebase using a CLI client and CI/CD integration, and stay updated with new features through the newsletter.

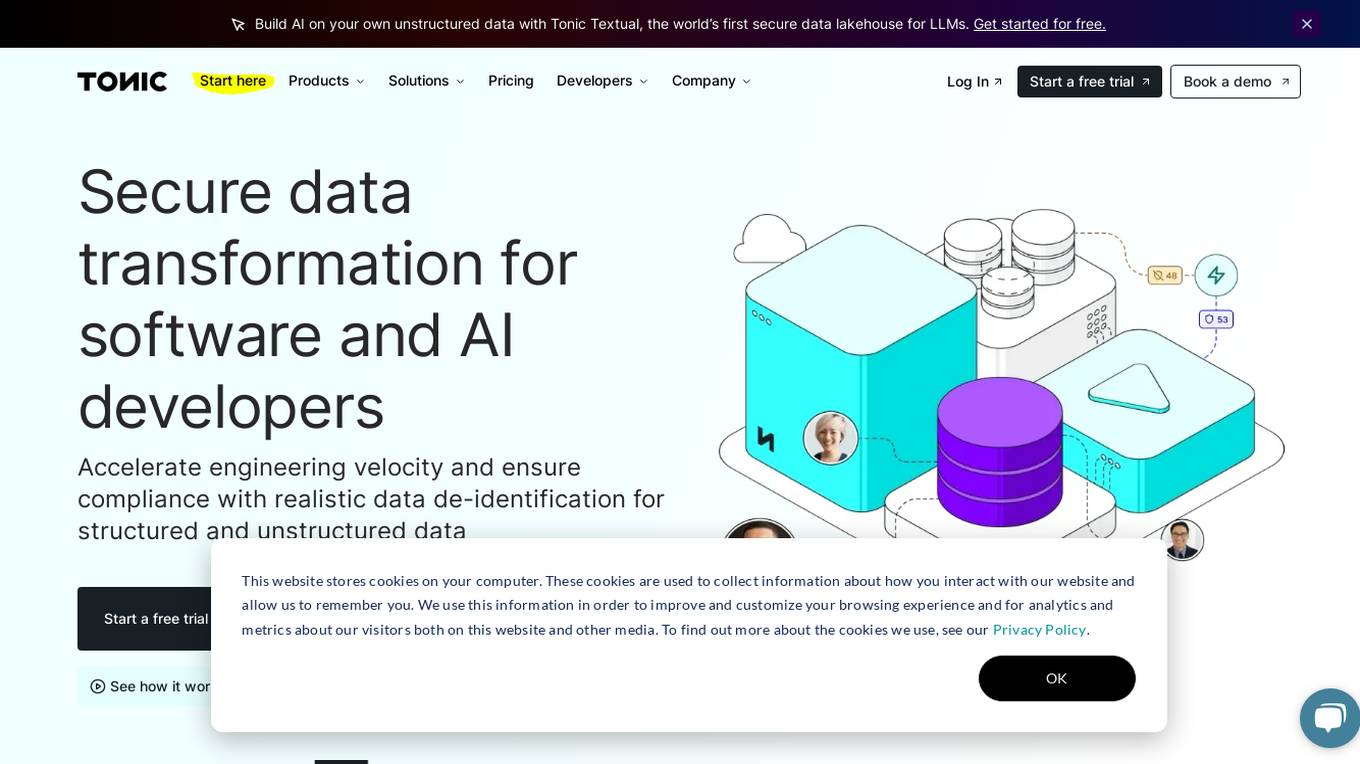

Tonic.ai

Tonic.ai is a platform that allows users to build AI models on their unstructured data. It offers various products for software development and LLM development, including tools for de-identifying and subsetting structured data, scaling down data, handling semi-structured data, and managing ephemeral data environments. Tonic.ai focuses on standardizing, enriching, and protecting unstructured data, as well as validating RAG systems. The platform also provides integrations with relational databases, data lakes, NoSQL databases, flat files, and SaaS applications, ensuring secure data transformation for software and AI developers.

Juno

Juno is an AI tool designed to enhance data science workflows by providing code suggestions, automatic debugging, and code editing capabilities. It aims to make data science tasks more efficient and productive by assisting users in writing and optimizing code. Juno prioritizes privacy and offers the option to run on private servers for sensitive datasets.

Manaflow

Manaflow is an AI tool designed to help businesses automate repetitive internal workflows involving data, APIs, and actions. It allows users to program AI agents to operate internal tools using natural language. Manaflow aims to transform tedious manual spreadsheet and software tasks into automated workflows, enabling businesses to scale with AI technology. The platform offers a variety of templates for operations, sales, and research, making it easier for users to start automating tasks. With Manaflow, users can oversee AI agents managing technical workflows, update them as needed, and focus on higher-level automations.

AmyGB Platform Services

AmyGB Platform Services offers Gen AI-powered document processing and API services aimed at improving business productivity. Its digital products are designed to help organizations handle data and streamline workflows, so that businesses can optimize operations around the clock, enhance data accuracy, and improve customer satisfaction. The company claims 8x productivity, 70% cost efficiency, 80% higher accuracy, and 95% automation. AmyGB's AI-powered document processing solutions help convert documents into digital assets, extract data, and enhance customer fulfillment through automated software solutions.

HyperStart

HyperStart is an AI-powered Contract Management Software that offers end-to-end contract management solutions. It enables users to create contracts from templates within minutes, automate contracting processes with workflows, close contracts faster with version tracking and AI, execute contracts with legally binding e-signatures, find any contract or data instantly, track renewals with auto reminders, and optimize processes with data-driven analytics. The software provides features like AI-powered contract reviews, real-time collaboration, contract risk analysis visualization, and solutions tailored for various industries such as legal, HR, construction, government, finance, non-profit, procurement, healthcare, telecom, sales, real estate, oil & gas, and more.

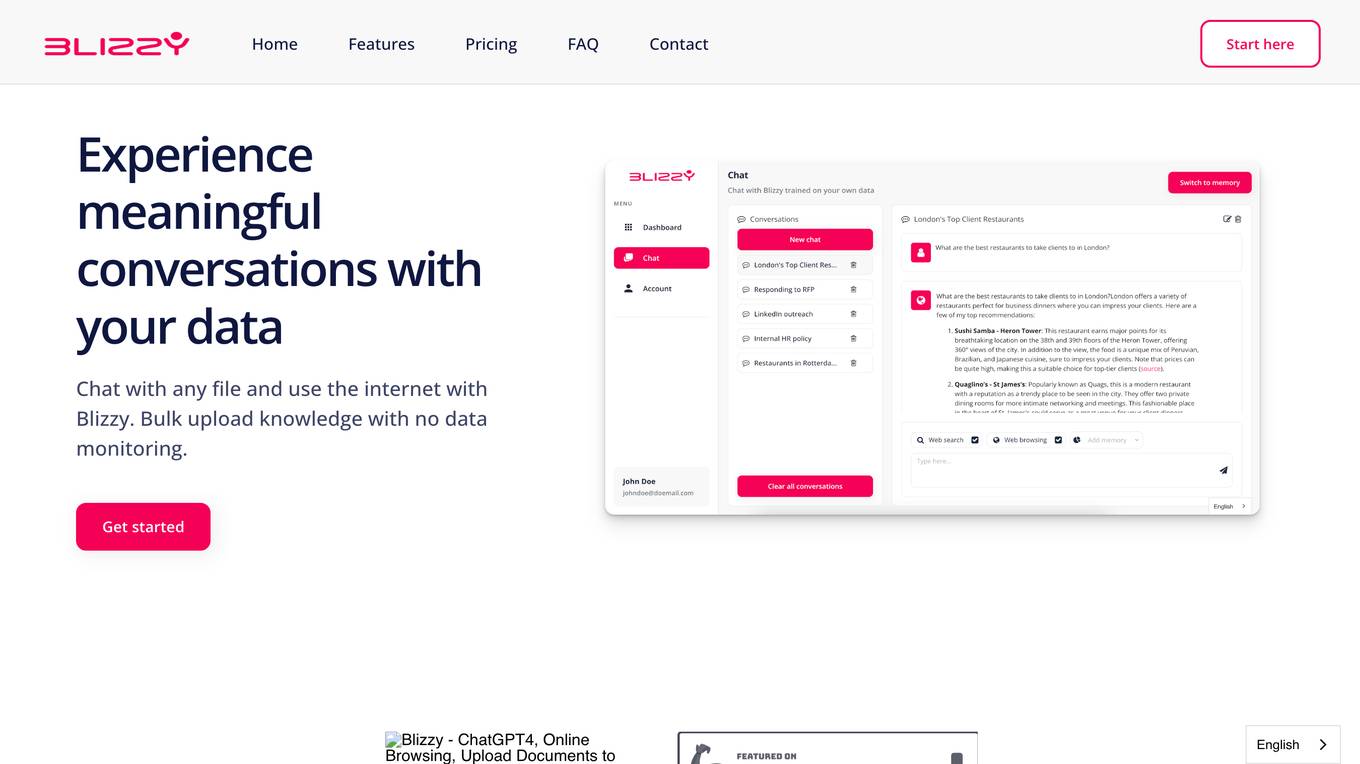

Blizzy AI

Blizzy AI is a tool that lets users have conversations with their data. Users can chat with any file and access the internet securely. With features like bulk knowledge upload, personalized knowledge vault creation, and ready-made prompts, Blizzy AI supports marketing strategy, content creation, and online browsing. The tool prioritizes privacy and security by not using user data for training purposes and by ensuring that data is accessible only to the user.

Harmony

Harmony is an AI-powered platform that enables users to automate workflows with AI agents. It helps in automating repetitive tasks end-to-end, saving time, and delivering results without the need to increase headcount. Harmony's AI agents are designed to think, act, and deliver at scale by unifying and analyzing all data, automating high-frequency workflows, and accelerating execution with reliable autonomy. The platform is built to empower growth with AI-driven solutions across various industries, offering benefits such as increased efficiency, lower operational costs, smarter decision-making, 24/7 workflow continuity, seamless integration, and enterprise-grade security.

Macgence AI Training Data Services

Macgence is an AI training data services platform that offers high-quality off-the-shelf structured training data for organizations to build effective AI systems at scale. They provide services such as custom data sourcing, data annotation, data validation, content moderation, and localization. Macgence combines global linguistic, cultural, and technological expertise to create high-quality datasets for AI models, enabling faster time-to-market across the entire model value chain. With more than 5 years of experience, they support and scale AI initiatives of leading global innovators by designing custom data collection programs. Macgence specializes in handling AI training data for text, speech, image, and video data, offering cognitive annotation services to unlock the potential of unstructured textual data.

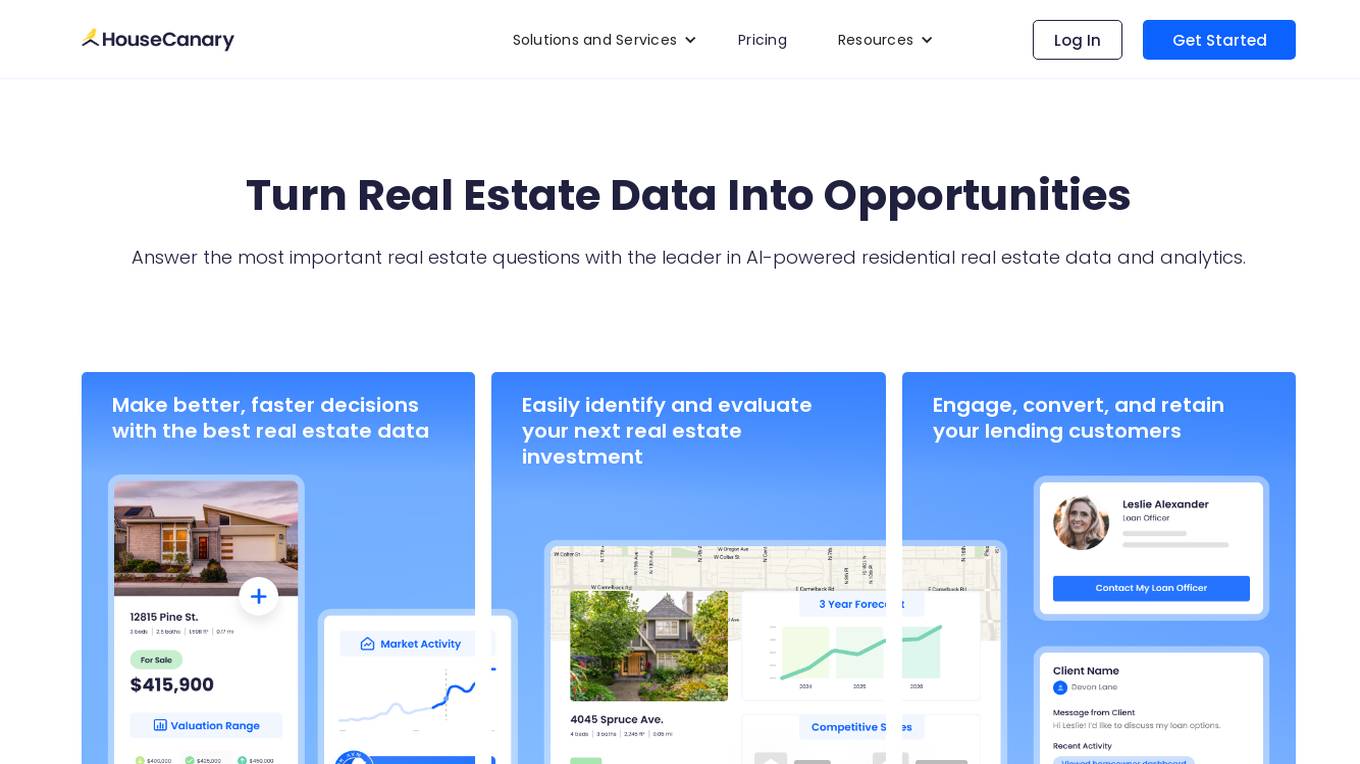

HouseCanary

HouseCanary is a leading AI-powered data and analytics platform for residential real estate. With a full suite of industry-leading products and tools, HouseCanary provides real estate investors, mortgage lenders, investment banks, whole loan buyers, and prop techs with the most comprehensive and accurate residential real estate data and analytics in the industry. HouseCanary's AI algorithms analyze a vast array of real estate data to generate meaningful insights to help teams be more efficient, ultimately saving time and money.

Promptech

Promptech is an AI teamspace designed to streamline workflows and enhance productivity. It offers a range of features including AI assistants, a collaborative teamspace, and access to large language models (LLMs). Promptech is suitable for businesses of all sizes and can be used to streamline tasks, enhance collaboration, and safeguard IP. It is aimed at technology leaders and positioned as a cost-effective AI solution for smaller teams and startups.

ZBrain

ZBrain is an enterprise generative AI platform that offers a suite of AI-based tools and solutions for various industries. It provides real-time insights, executive summaries, due diligence enhancements, and customer support automation. ZBrain empowers businesses to streamline workflows, optimize processes, and make data-driven decisions. With features like seamless integration, no-code business logic, continuous improvement, multiple data integrations, extended DB, advanced knowledge base, and secure data handling, ZBrain aims to enhance operational efficiency and productivity across organizations.

HerculesAI

HerculesAI is an AI-powered platform that offers AI workers for complex enterprise workflows, enabling quick, accurate, and scalable handling of processes. The platform integrates with existing systems and is described as deployable quickly and securely, within weeks. HerculesAI helps organizations automate operations, increase revenue, and achieve greater efficiency through AI-powered solutions.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

Nurix AI

Nurix AI is a custom AI agent partner that offers solutions to automate and transform enterprise workflows and to build AI products. Their AI agents enable proactive actions, seamless integration, and advanced voice capabilities. Nurix provides end-to-end AI solutions tailored for startups, with a team of experts from leading tech companies. Clients benefit from improved sales conversions, increased sales reach, and cost efficiency. Nurix is trusted by leading brands to deliver cutting-edge AI solutions.

AlphaWatch

AlphaWatch offers a precision workflow tool for enterprises in the finance industry, combining AI technology with human oversight to support financial decisions. It provides features such as accurate search citations, multilingual models, and complex human-in-the-loop automation. The tool integrates with existing platforms, saves time, and uses self-improving models. It is built on generative AI and neural search capabilities, with a focus on transforming data processes and decision-making in finance.

0 - Open Source AI Tools

20 - OpenAI GPTs

OptiCode

OptiCode is designed to streamline and enhance your experience with ChatGPT software, tools, and extensions, ensuring efficient problem resolution and optimization of ChatGPT-related workflows.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Your Business Data Optimizer Pro

A chatbot expert in business data analysis and optimization.

Calorie Count & Cut Cost: Food Data

Apples vs. Oranges? Optimize your low-calorie diet. Compare food items. Get tailored advice on satiating, nutritious, cost-effective food choices based on 240 items.

AI Business Transformer

Top AI for business automation, data analytics, content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

Ecommerce Pricing Advisor

Optimize your pricing for peak market performance and profitability. Seamlessly navigate ecommerce challenges with expert, data-driven pricing strategies. 📈💹

DataTrend Analyst

I transform complex social media data into actionable, strategic insights to optimize your campaigns and drive engagement.

Operations Department Assistant

An Operations Department Assistant aids the operations team by handling administrative tasks, process documentation, and data analysis, helping to streamline and optimize various operational processes within an organization.

Algorithm Expert

I develop and optimize algorithms with a technical and analytical approach.

Data Architect

Database Developer assisting with SQL/NoSQL, architecture, and optimization.