Best AI tools for Multi-GPU Training

20 - AI Tool Sites

Cirrascale Cloud Services

Cirrascale Cloud Services is a cloud provider focused on artificial intelligence workloads. Its services and products include NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud lets users test and deploy on several leading AI accelerators within a single cloud, with the stated aim of making high-performance AI compute and scalable deep learning broadly accessible. The platform also offers professional and managed services, tailored multi-GPU server configurations, and high-throughput storage and networking to accelerate development, training, and inference workloads.

Lambda

Lambda describes itself as a superintelligence cloud platform. It offers on-demand GPU clusters for multi-node training and fine-tuning, private large-scale GPU clusters, management and scaling of AI workloads, inference endpoints and an API, and a privacy-first chat app built on open-source models. It also provides NVIDIA's latest-generation infrastructure for enterprise AI. With Lambda, AI teams can access gigawatt-scale AI factories for training and inference, deploy GPU instances, and use the latest NVIDIA GPUs for high-performance computing.

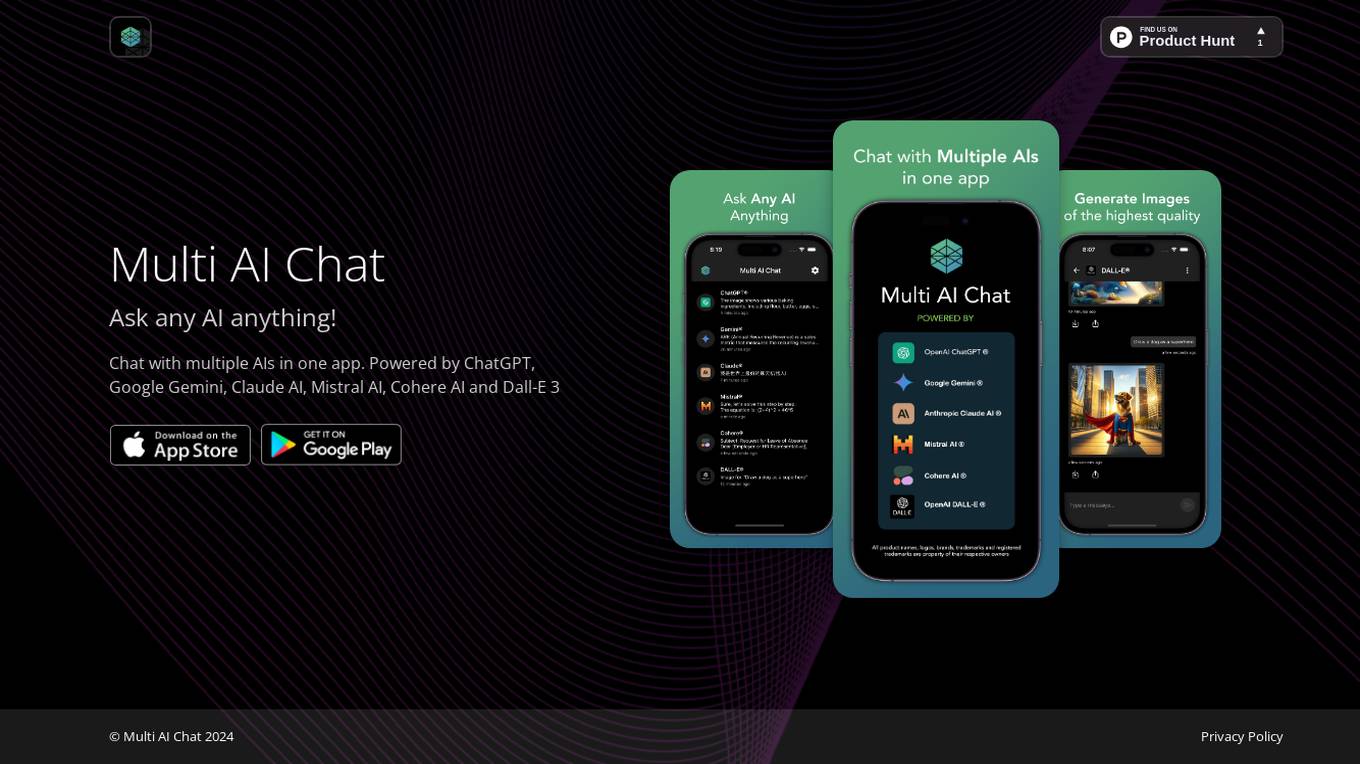

Multi AI Chat

Multi AI Chat is an AI-powered application that allows users to interact with various AI models by asking questions. The platform enables users to seek information, get answers, and engage in conversations with AI on a wide range of topics. With Multi AI Chat, users can explore the capabilities of different AI technologies and enhance their understanding of artificial intelligence.

DataSpark AI Multi-Agent Platform

DataSpark AI Multi-Agent Platform is an artificial intelligence tool developed by DataSpark, Inc. for building and running multi-agent systems. It gives users access to what DataSpark calls the first GenAI Super Agent, which is intended to help them discover new insights and make informed decisions. The platform is designed to streamline complex processes and improve productivity across various industries.

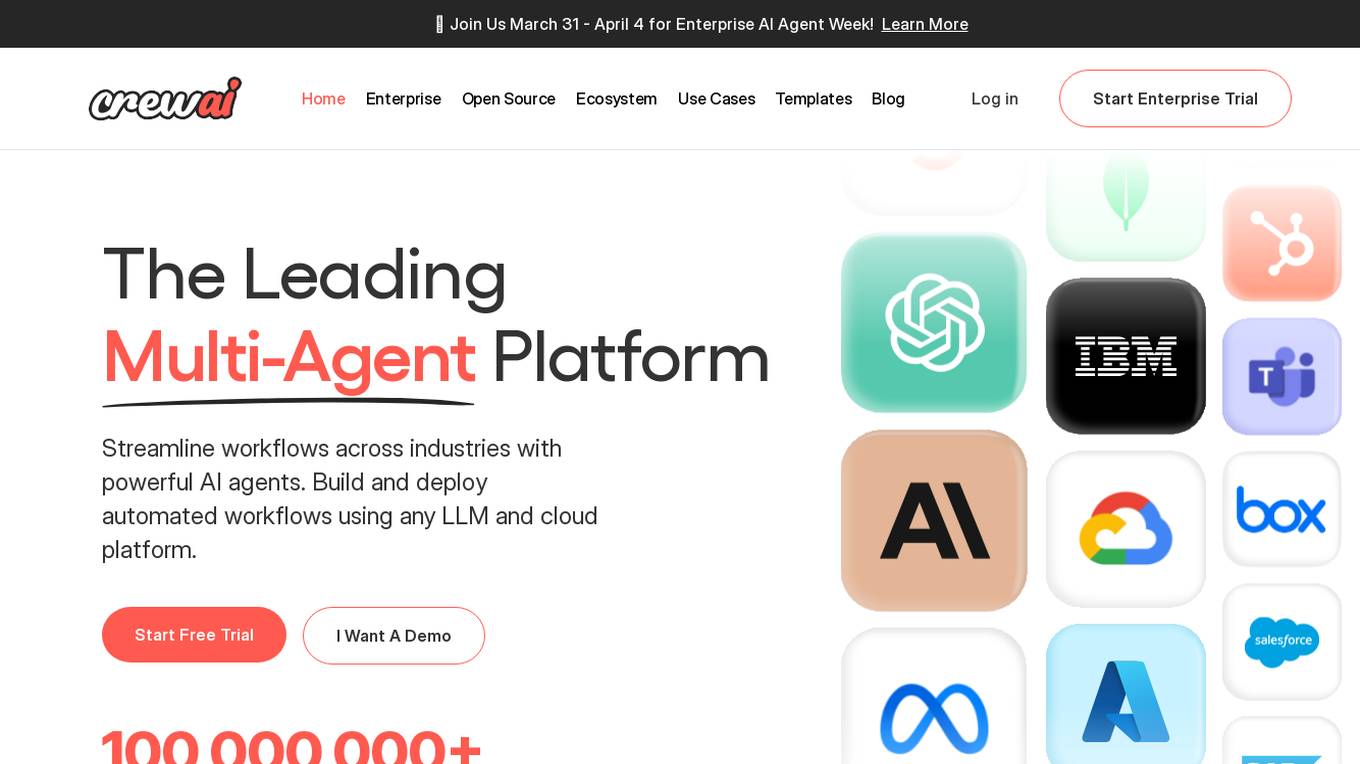

CrewAI

CrewAI is a leading multi-agent platform that enables users to streamline workflows across industries with powerful AI agents. Users can build and deploy automated workflows using any LLM and cloud platform. The platform offers tools for building, deploying, monitoring, and improving AI agents, providing complete visibility and control over automation processes. CrewAI is trusted by industry leaders and used in over 60 countries, offering a comprehensive solution for multi-agent automation.

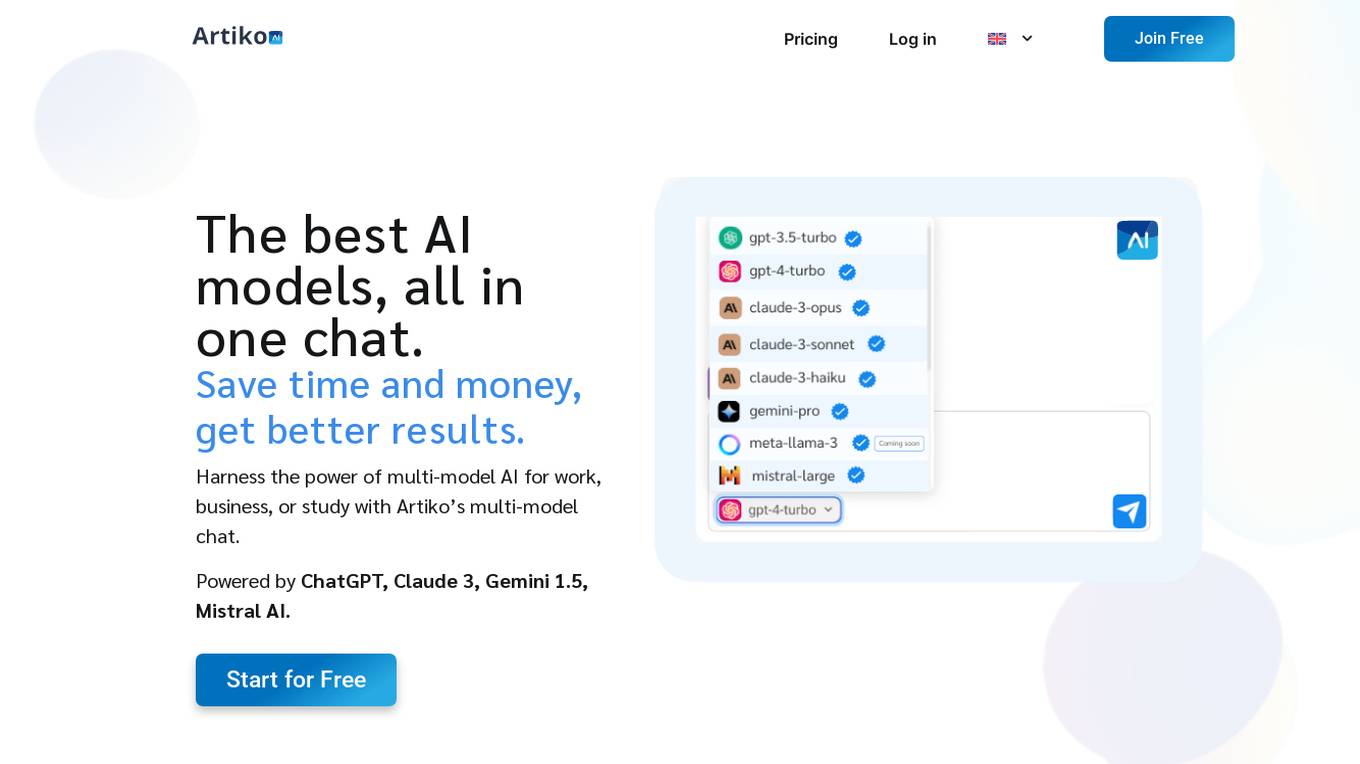

Artiko.ai

Artiko.ai is a multi-model AI chat platform that integrates models such as ChatGPT, Claude 3, Gemini 1.5, and Mistral AI behind a single chat interface. It is positioned as a convenient, cost-effective option for work, business, or study. Its features include text rewriting, data conversation, AI assistants, a website chatbot, PDF and document chat, translation, brainstorming, and integrations with tools such as WooCommerce, Amazon, Salesforce, and more.

Salieri

Salieri, which bills itself as a "multi-agent LLM home multiverse," is a platform for efficient, trustworthy, and automated AI workflows. Its Multiverse Factory lets developers generate personalized AI applications through an intuitive interface. The platform aims to optimize user queries made through LLM API calls, reduce costs, and enhance the cognitive functions of AI agents. Salieri's team includes experts from leading AI organizations such as MIT and Google, and focuses on generative AI, neural knowledge graphs, and composite AI models.

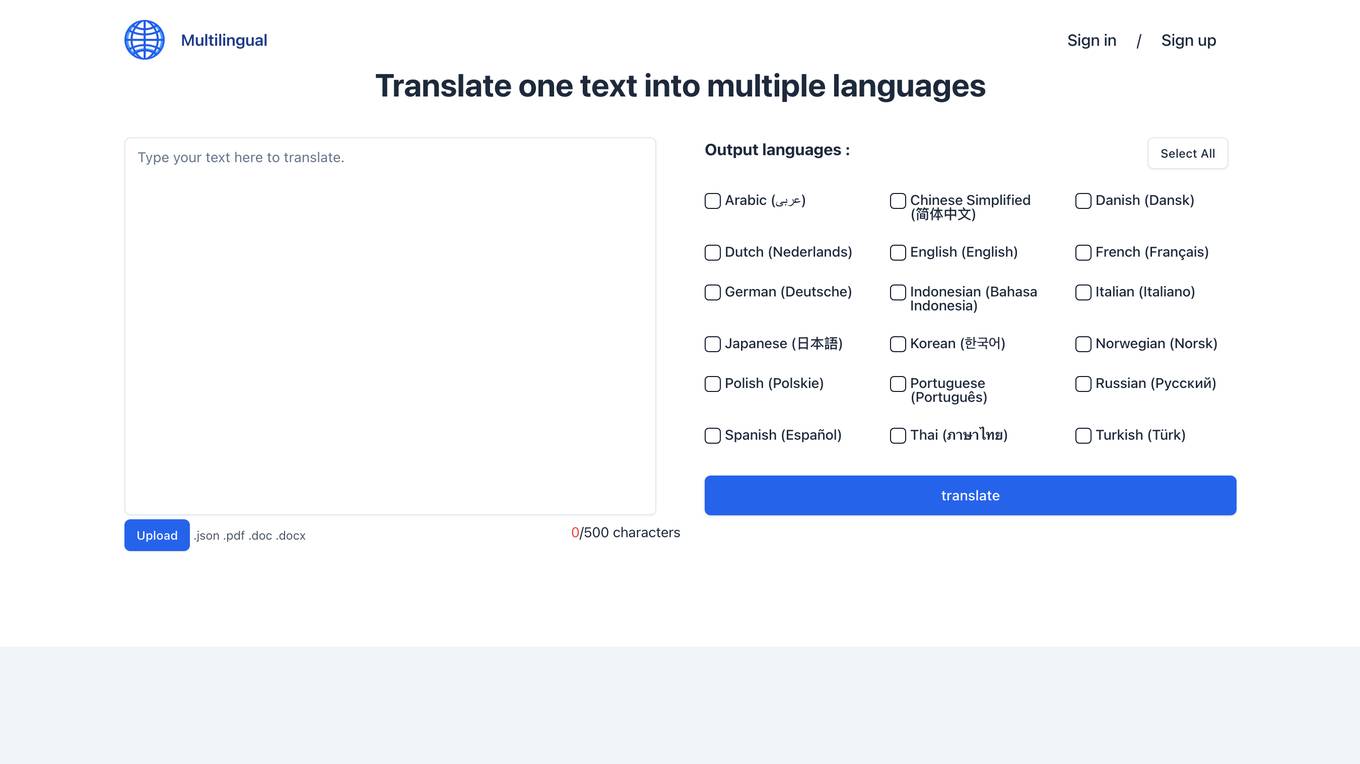

Multilingual.top

Multilingual.top is a translation platform that translates text into multiple languages at once. It uses artificial intelligence, specifically OpenAI's technology, to produce accurate and natural translations. Instead of translating into one language at a time, users get results in several languages in a single pass, saving time and effort. Supported languages include Arabic, Chinese, Danish, Dutch, English, French, German, Indonesian, Italian, Japanese, Korean, Norwegian, Polish, Portuguese, Russian, Spanish, Thai, Turkish, and more. The free translation service has usage limits to prevent misuse and ensure fair access for everyone. Users can also upload documents in JSON, PDF, DOCX, and DOC formats for translation, which makes the platform especially useful for office workers and professionals who handle documentation. The platform is continuously updated to improve translation accuracy and expand the range of target languages.

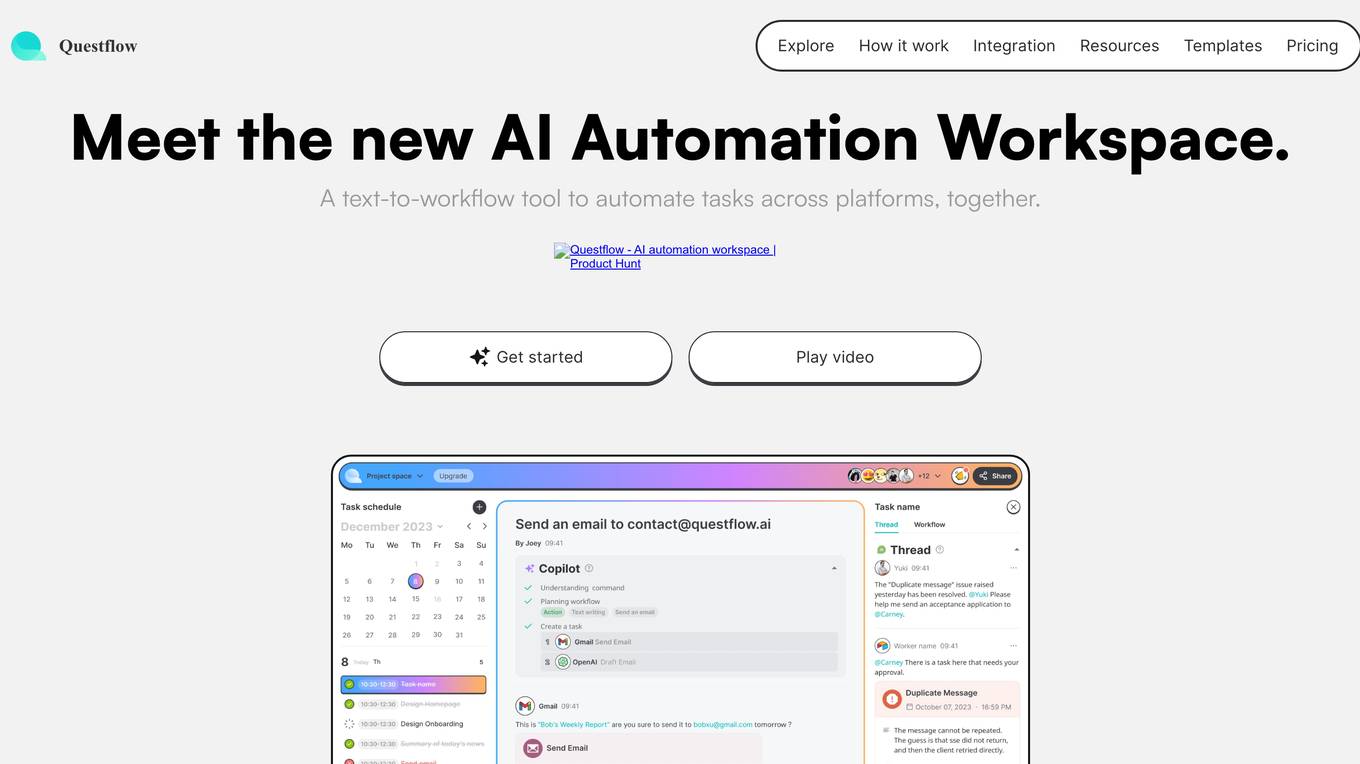

Questflow

Questflow is a decentralized AI agent economy platform that lets users orchestrate multiple AI agents to gather insights, take action, and earn rewards autonomously. It acts as a co-pilot for work, helping knowledge workers automate repetitive tasks with a private, safety-first approach. Its features include multi-agent orchestration, a user-friendly dashboard, visual reports, a smart keyword generator, content evaluation, regular SEO goal setting, automated alerts, actionable SEO tips, and a link optimization wizard.

Floneum

Floneum is an AI-powered tool for language tasks. It offers a user-friendly interface for building workflows with large language models. Users can securely extend its functionality by writing plugins in various languages that compile to WebAssembly; plugins run in a sandbox that restricts their access to system resources. With 41 built-in plugins, Floneum simplifies tasks such as text generation, search engine operations, file handling, Python execution, browser automation, and data manipulation.

Claude

Claude is a family of large multimodal language models developed by Anthropic. Claude can generate human-like text, translate languages, answer questions, and write different kinds of creative content.

AirDroid

AirDroid is an AI-powered device management solution that offers both business and personal services. It provides features such as remote support, file transfer, application management, and AI-powered insights. The application aims to streamline IT resources, reduce costs, and increase efficiency for businesses, while also offering personal management solutions for private mobile devices. AirDroid is designed to empower businesses with intelligent AI assistance and enhance user experience through seamless multi-screen interactions.

ReadWeb.ai

ReadWeb.ai is a free web-based tool that provides instant multi-language translation of web pages. It allows users to translate any webpage into up to 10 different languages with just one click. ReadWeb.ai also offers a unique bilingual reading experience, allowing users to view translations in an easy-to-understand, top-and-bottom format. This makes it an ideal tool for language learners, researchers, and anyone who needs to access information from websites in different languages.

Flamel.ai

Flamel.ai is a social media management platform designed specifically for multi-location brands and franchises. It enables businesses to manage social media content and engagement across all of their locations from a centralized platform. Flamel.ai uses AI to help businesses create personalized and engaging content that resonates with local audiences. The platform also provides analytics and insights to help businesses track their social media performance and identify areas for improvement.

CX Genie

CX Genie is an AI-powered customer support platform that offers a comprehensive solution for businesses seeking to enhance their customer support operations. It leverages advanced AI technology to deliver smarter, faster, and more accurate customer support, including AI chatbots, help desk management, workflow automation, product data synchronization, and ticket management. With features like seamless integrations, customizable plans, and a user-friendly interface, CX Genie aims to provide outstanding customer support and drive business growth.

Wave.video

Wave.video is an online video editor and hosting platform that allows users to create, edit, and host videos. It offers a wide range of features, including a live streaming studio, video recorder, stock library, and video hosting. Wave.video is easy to use and affordable, making it a great option for businesses and individuals who need to create high-quality videos.

Qwen

Qwen is a family of language models developed by Alibaba Cloud, including dense models, coding models, mathematical models, and vision-language models. The Qwen family includes open-source models in a range of parameter sizes to suit different needs, such as production use, mobile applications, coding assistance, mathematical problem-solving, and visual understanding of images and videos. Qwen aims to provide increasingly capable and knowledgeable models for developers and users.

Galleri

Galleri is a multi-cancer early detection test that uses a single blood draw to screen for over 50 types of cancer. It is recommended for adults aged 50 or older who are at an elevated risk for cancer. Galleri is not a diagnostic test and does not detect all cancers. A positive result requires confirmatory diagnostic evaluation by medically established procedures (e.g., imaging) to confirm cancer.

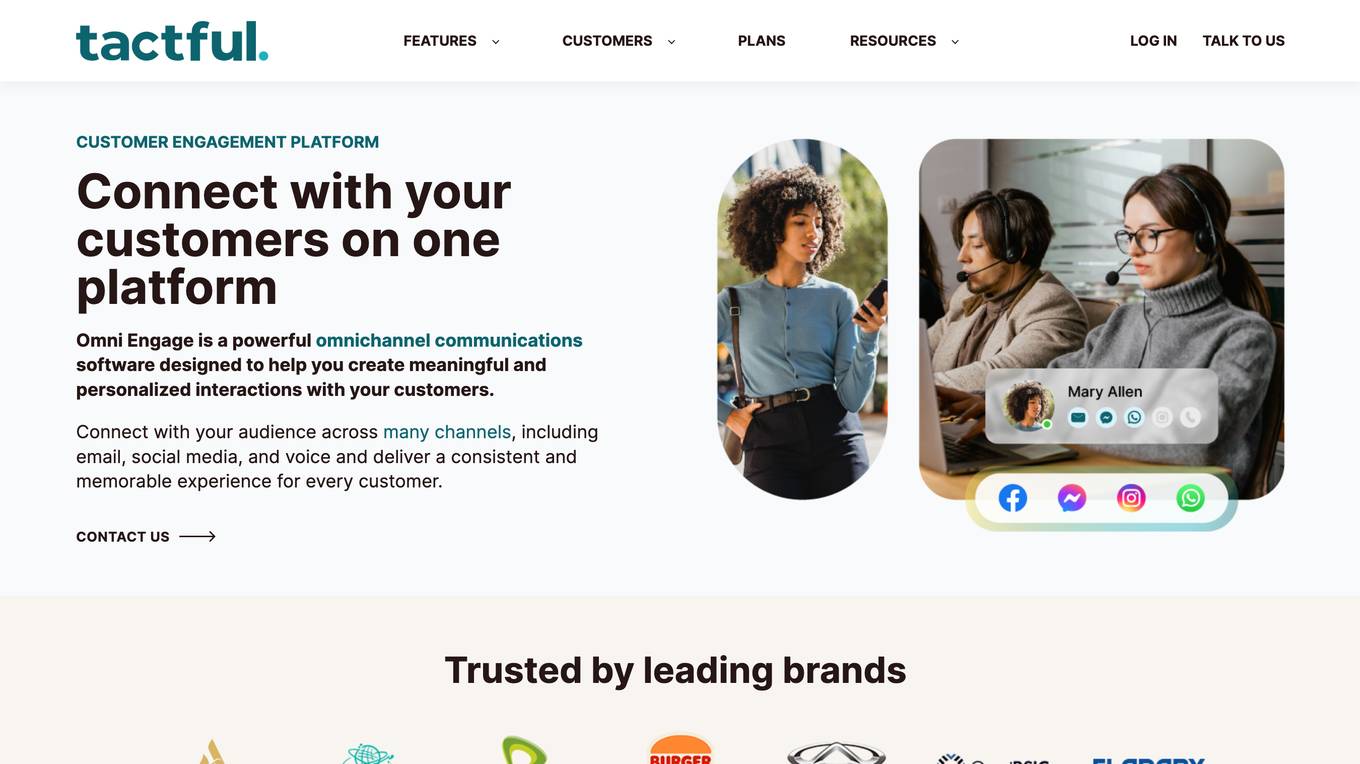

Omni Engage

Omni Engage is a powerful omnichannel communications software designed to help businesses create meaningful and personalized interactions with their customers. It allows businesses to connect with their audience across multiple channels, including email, social media, and voice, and deliver a consistent and memorable experience for every customer. Omni Engage simplifies customer engagement with its Unified Inbox, which enables agents to handle requests from all channels seamlessly and efficiently. It also offers AI automation with Omni Automate, which streamlines customer interactions by automating routine inquiries and providing rapid response times. With its robust reporting and analytics capabilities, Omni Engage empowers supervisors to measure engagement and performance across all channels, identify areas for improvement, and drive success.

Seedream 4.0

Seedream 4.0 is a next-generation multi-modal AI image generator designed for creators to produce photorealistic images with pro-grade controls and fast rendering capabilities. It offers features such as deep scene understanding, reference-based consistency, artistic style transfer, ultra-fast rendering, sequential story generation, and commercial-grade design. Users can create stunning visuals with AI in four simple steps: adding references, describing their vision, generating and refining, and exporting in high resolution. Seedream 4.0 is ideal for various applications including narrative visuals, product sets, comics, ads, social carousels, posters, key visuals, and marketing graphics.

1 - Open Source AI Tools

x-lstm

This repository contains an unofficial implementation of the xLSTM model introduced in Beck et al. (2024). It serves as a didactic tool for explaining the details of a modern Long Short-Term Memory (LSTM) model whose performance is competitive with Transformers and state-space models. It provides modules for the scalar-LSTM (sLSTM) and matrix-LSTM (mLSTM), along with a basic xLSTM LLM built with PyTorch Lightning for easy training on multiple GPUs.
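PyTorch Lightning commonly handles multi-GPU training with distributed data parallelism (DDP): each GPU holds a copy of the model, computes gradients on its own shard of the batch, and the gradients are averaged with an all-reduce before the optimizer step. The following is a minimal, dependency-free sketch of that idea, not code from the x-lstm repository. It assumes a toy 1-D linear model with a mean-squared-error loss and simulates the replicas in one Python process; the names `mse_grad`, `shard`, `all_reduce_mean`, and `data_parallel_step` are hypothetical.

```python
# Simulates data-parallel training in a single process: each "replica"
# computes gradients on its own shard, then the gradients are averaged,
# which is the role an all-reduce plays in DDP. Hypothetical helper names.
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy model y ~ w*x + b


def mse_grad(w: float, b: float, batch: Sequence[Sample]) -> Tuple[float, float]:
    """Gradient of mean((w*x + b - y)**2) with respect to (w, b)."""
    n = len(batch)
    gw = sum(2.0 * (w * x + b - y) * x for x, y in batch) / n
    gb = sum(2.0 * (w * x + b - y) for x, y in batch) / n
    return gw, gb


def shard(batch: Sequence[Sample], world_size: int) -> List[List[Sample]]:
    """Split a batch into equal contiguous shards, one per replica."""
    if len(batch) % world_size != 0:
        raise ValueError("batch size must be divisible by world_size")
    size = len(batch) // world_size
    return [list(batch[r * size:(r + 1) * size]) for r in range(world_size)]


def all_reduce_mean(grads: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Average per-replica gradients (stand-in for a collective all-reduce)."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def data_parallel_step(w: float, b: float, batch: Sequence[Sample],
                       world_size: int, lr: float) -> Tuple[float, float]:
    """One SGD step: local gradients per shard, averaged, then applied."""
    local = [mse_grad(w, b, s) for s in shard(batch, world_size)]
    gw, gb = all_reduce_mean(local)
    return w - lr * gw, b - lr * gb


if __name__ == "__main__":
    # Toy data on the line y = 3x + 1, split across 4 simulated GPUs.
    data = [(i / 10.0, 3.0 * (i / 10.0) + 1.0) for i in range(8)]
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b = data_parallel_step(w, b, data, world_size=4, lr=0.5)
    print(f"w={w:.4f}, b={b:.4f}")
```

Because every shard has the same size, the mean of the per-shard gradients equals the full-batch gradient, so each update matches single-device training on the whole batch. With unequal shards, a plain average would weight samples unequally, which is one reason distributed samplers typically keep per-replica batch sizes equal.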

20 - OpenAI GPTs

Tango Multi-Agent Wizard

I'm Tango, your go-to for simulating dialogues with any persona, entity, style, or expertise.

OE Buddy

Assistant for multi-job remote workers, aiding in task management and communication.

Duesentrieb x100

Multi-algorithmic mastermind who innovates technology solutions and optimizes product design. And it is a duck. // Carefully test any generated solutions.

Multiple Personas v2.0.1

A Multi-Agent Multi-Tasking Assistant. Seamlessly switches personas with different skills and backgrounds to tackle complex tasks. Powered by Mr Persona.

MULTITASKER GPT-4 (Turbo)

Advanced multi-tasking GPT with real-time data management, image generation, and document editing.

Dr. Watt's Energy Insight Lab

Energy Insights Lab is a multi-disciplinary team of dedicated professionals advising on energy markets, technologies, and decarbonization.

Art Authenticator Guide

Advanced artwork authenticator with unrestricted, multi-functional abilities.

Video Insights: Summaries/Transcription/Vision

Chat with any video or audio. High-quality search, summarization, insights, multi-language transcriptions, and more. We currently support YouTube and files uploaded on our website.

Website Builder [Multipage & High Quality]

👋 I'm Wegic, the AI web designer & developer by your side! I can help you quickly create and launch a multi-page website!