Best AI tools for < Monitor Vendor Performance >

20 - AI tool Sites

LangChain

LangChain is an AI tool that offers a suite of products supporting developers in the LLM application lifecycle. It provides a framework to construct LLM-powered apps easily, visibility into app performance, and a turnkey solution for serving APIs. LangChain enables developers to build context-aware, reasoning applications and future-proof their applications by incorporating vendor optionality. LangSmith, a part of LangChain, helps teams improve accuracy and performance, iterate faster, and ship new AI features efficiently. The tool is designed to drive operational efficiency, increase discovery & personalization, and deliver premium products that generate revenue.

ModelOp

ModelOp is AI governance software for enterprises, providing a single source of truth for all AI systems, automated process workflows, real-time insights, and integrations that extend the value of existing technology investments. It helps organizations safeguard AI initiatives without stifling innovation, while ensuring compliance and improving key performance indicators. ModelOp supports generative AI and Large Language Models (LLMs), whether in-house, from third-party vendors, or embedded in other systems. The software enables visibility, accountability, risk tiering, systemic tracking, enforceable controls, workflow automation, reporting, and rapid establishment of AI governance.

Netify

Netify provides network intelligence and visibility. Its solution stack starts with a Deep Packet Inspection (DPI) engine that passively collects data on the local network. This lightweight engine identifies applications, protocols, hostnames, encryption ciphers, and other network attributes. The software can be integrated into network devices for traffic identification, firewalling, QoS, and cybersecurity. Netify's Informatics engine collects data from local DPI engines and uses the power of a public or private cloud to transform it into network intelligence. From device identification to cybersecurity risk detection, Informatics provides a way to take a proactive approach to manage network threats, bottlenecks, and usage. Lastly, Netify's Data Feeds provide data to help vendors understand how applications behave on the Internet.

Slicker

Slicker is an AI-powered tool designed to recover failed subscription payments and maximize subscription revenue for businesses. It uses a proprietary AI engine to process each failing payment individually, converting past-due invoices into revenue. With features such as payment recovery on autopilot, a state-of-the-art machine learning model, fast setup, in-depth payment analytics, and enterprise-grade security, Slicker aims to reduce churn and boost revenue. The tool is fully transparent, allowing users to inspect and review every action taken by the AI engine. Slicker integrates with popular billing and payment platforms, making it easy to implement and start seeing results quickly.

Relyance AI

Relyance AI is a platform that offers 360 Data Governance and Trust solutions. It helps businesses safeguard against fines and reputation damage while enhancing customer trust to drive business growth. The platform provides visibility into enterprise-wide data processing, ensuring compliance with regulatory and customer obligations. Relyance AI uses AI-powered risk insights to proactively identify and address risks, offering a unified trust and governance infrastructure. It offers features such as data inventory and mapping, automated assessments, security posture management, and vendor risk management. The platform is designed to streamline data governance processes, reduce costs, and improve operational efficiency.

Wing Security

Wing Security is a SaaS Security Posture Management (SSPM) solution that helps businesses protect their data by providing full visibility and control over applications, users, and data. The platform offers features such as automated remediation, AI discovery, real-time SaaS visibility, vendor risk management, insider risk management, and more. Wing Security enables organizations to eliminate risky applications, manage user behavior, and protect sensitive data from unauthorized access. With a focus on security first, Wing Security helps businesses leverage the benefits of SaaS while staying protected.

Sprinto

Sprinto is a Continuous Security & Compliance Platform that helps organizations manage and maintain compliance with various frameworks such as SOC 2, ISO 27001, NIST, GDPR, HIPAA, and more. It offers features like Vendor Risk Management, Vulnerability Assessment, Access Control Policies, Security Questionnaire, and Change Management. Sprinto automates evidence collection, streamlines workflows, and provides expert support to ensure organizations stay audit-ready and compliant. The platform is AI-powered, scalable, and supports over 40 compliance frameworks, making it a comprehensive solution for security and compliance needs.

Move AI

Move AI is an AI-powered moving assistant that simplifies the relocation process by providing personalized moving blueprints, matching users with top-tier vendors, and overseeing every aspect of the move in real time. The platform streamlines tasks, offers fixed quotes, and anticipates needs to ensure a smooth and efficient moving experience. Although the service is AI-driven, Move AI also offers human support for personalized care and attention.

LLMMM Marketing Monitor

LLMMM is an AI tool designed to monitor how AI models perceive and present brands. It offers real-time monitoring and cross-model insights to help brands understand their digital presence across various leading AI platforms. With automated analysis and lightning-fast results, LLMMM provides immediate visibility into how AI chatbots interpret brands. The tool focuses on brand intelligence, brand safety monitoring, misalignment detection, and cross-model brand intelligence. Users can create an account in minutes and access a range of features to track and analyze their brand's performance in the AI landscape.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

Devi

Devi is an AI-powered social media lead generation and outreach tool that helps businesses find and engage with potential customers on Facebook, LinkedIn, Twitter, Reddit, and other platforms. It uses artificial intelligence to monitor keywords and identify high-intent leads, and then provides users with tools to reach out to those leads and build relationships. Devi also offers a variety of other features, such as AI-generated content, scheduling, and analytics.

Hexowatch

Hexowatch is an AI-powered website monitoring and archiving tool that helps businesses track changes to any website, including visual, content, source code, technology, availability, or price changes. It provides detailed change reports, archives snapshots of pages, and offers side-by-side comparisons and diff reports to highlight changes. Hexowatch also allows users to access monitored data fields as a downloadable CSV file, Google Sheet, RSS feed, or sync any update via Zapier to over 2000 different applications.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

KWatch.io

KWatch.io is a social listening tool that helps businesses monitor keywords on social media platforms like LinkedIn, Twitter, Reddit, and Hacker News. It uses AI to analyze the sentiment around keywords and provides real-time alerts when specific keywords are mentioned. KWatch.io can be used for a variety of purposes, including attracting customers, getting feedback, watching competitors, conducting market intelligence, and providing customer support. It offers various plans, including a free plan, an essential plan for $19/month, a business plan for $79/month, and an enterprise plan for $199/month.

AI Spend

AI Spend is an AI application designed to help users monitor their AI costs and prevent surprises. It allows users to keep track of their OpenAI usage and costs, providing fast insights, a beautiful dashboard, cost insights, notifications, usage analytics, and details on models and tokens. The application ensures simple pricing with no additional costs and securely stores API keys. Users can easily remove their data if needed, emphasizing privacy and security.

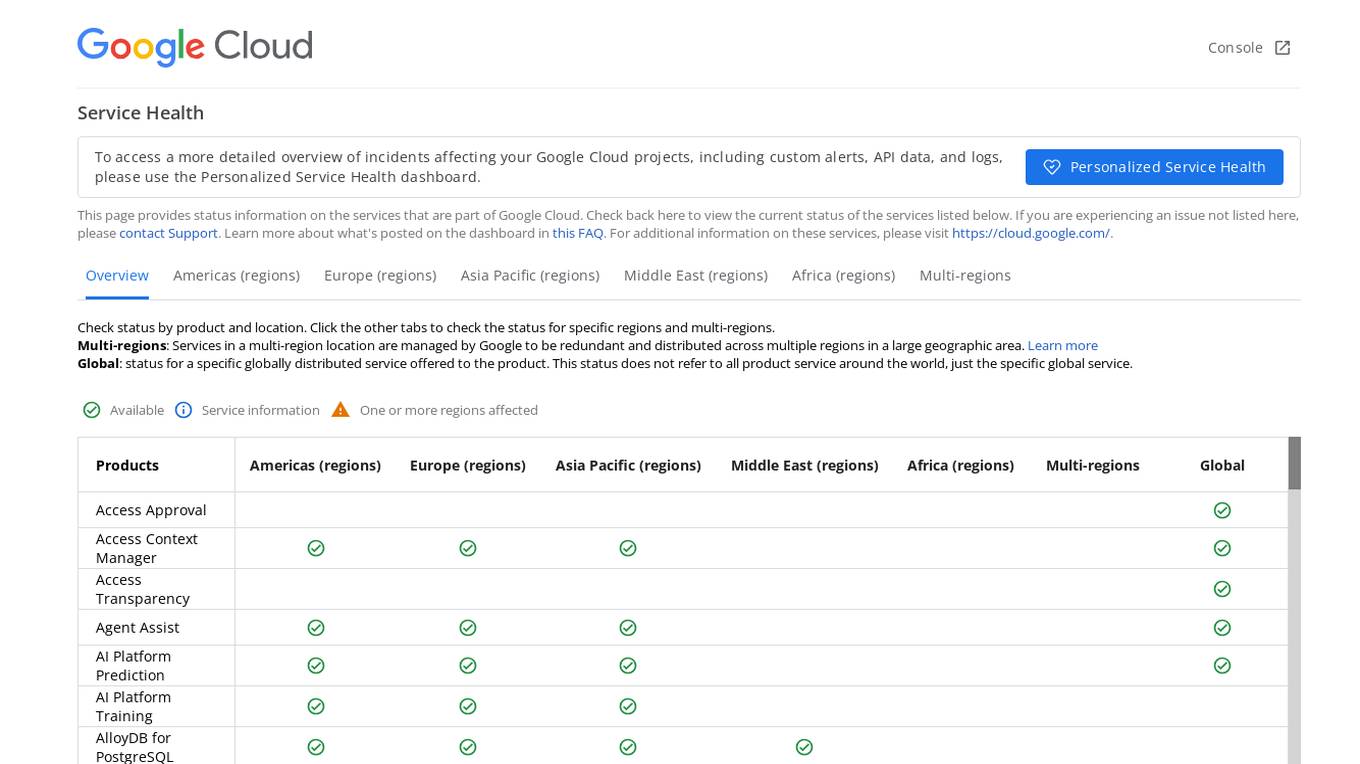

Google Cloud Service Health Console

Google Cloud Service Health Console provides status information on the services that are part of Google Cloud. It allows users to check the current status of services, view detailed overviews of incidents affecting their Google Cloud projects, and access custom alerts, API data, and logs through the Personalized Service Health dashboard. The console also offers a global view of the status of specific globally distributed services and allows users to check the status by product and location.

Pulse

Pulse is an expert support tool for big data stacks, focused on the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse uses AI for issue detection and root-cause analysis, complemented by human expertise, to support search cluster management.

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

Browse AI

Browse AI is an AI-powered data extraction platform that allows users to scrape and monitor data from any website without coding. With Browse AI, users can extract data, monitor websites for changes, turn websites into APIs, and integrate data with over 7,000 apps. The platform offers prebuilt robots for use cases such as e-commerce, real estate, and recruitment. Browse AI is trusted by over 740,000 users worldwide for its reliability, scalability, and ease of use.

0 - Open Source AI Tools

20 - OpenAI GPTs

Strategy Guide

An expert in AI strategy, offering insights on AI implementation and industry trends.

Outsourcing-assistenten (finans)

Danish guidance on outsourcing rules for credit institutions and data centers.

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things financial cybersecurity.

AML/CFT Expert

Specializes in Anti-Money Laundering/Counter-Financing of Terrorism compliance and analysis.

Quality Assurance Advisor

Ensures product quality through systematic process monitoring and evaluation.

SkyNet - Global Conflict Analyst

A global conflict analyst that provides a 'wartime update' on the worst global conflict at the moment.