Best AI Tools to Monitor Server Performance

20 - AI Tool Sites

502 Bad Gateway Error

The website is returning a 502 Bad Gateway error: a server acting as a gateway or proxy received an invalid response from the upstream server behind it. 502 is a standard HTTP status code for a server-side problem. It has nothing to do with the visitor's device or internet connection, and it is often temporary. The practical advice is to wait and try again later, since the site's administrators may already be fixing the issue.
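
The "wait and try again" advice can be automated on the client side. Below is a minimal sketch, assuming a hypothetical `fetch` callable that performs one request and returns its HTTP status code. It retries only on the usually transient gateway statuses 502, 503 and 504. Each retry doubles the delay and adds random jitter, so that many clients do not retry in lockstep.

```python
import random
import time
from typing import Callable

# Gateway/proxy statuses that are usually transient and worth retrying.
TRANSIENT_STATUSES = {502, 503, 504}


def fetch_with_backoff(
    fetch: Callable[[], int],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `fetch` until it returns a non-transient status or attempts run out.

    `fetch` is a hypothetical callable that performs one request and returns
    the HTTP status code. The n-th retry waits base_delay * 2**(n - 1) seconds
    plus up to base_delay seconds of random jitter.
    """
    status = fetch()
    for retry in range(1, max_attempts):
        if status not in TRANSIENT_STATUSES:
            break
        delay = base_delay * 2 ** (retry - 1) + random.uniform(0, base_delay)
        sleep(delay)
        status = fetch()
    return status


if __name__ == "__main__":
    # Simulated server: two 502 responses, then a success.
    responses = iter([502, 502, 200])
    waits: list[float] = []
    print(fetch_with_backoff(lambda: next(responses), sleep=waits.append), len(waits))
```

The `sleep` parameter is injectable, so the function can be exercised without real delays. In practice the default `time.sleep` applies. Automatic retries are only appropriate for requests that are safe to repeat, such as GET.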

VoteMinecraftServers

VoteMinecraftServers is a modern Minecraft server voting website that provides real-time analytics to help users stay ahead in the game. According to the site, it uses AI and ML to keep this information accurate and up to date. The site is free to use and offers premium commands for extra functionality. It also states a commitment to data security and user privacy.

WebServerPro

WebServerPro is a web server hosting platform that helps users set up and manage their web servers. Users can deploy websites and applications to the server through a user-friendly interface. Its hosting options are aimed at a range of needs.

Boldici.us

Boldici.us currently appears to be down. The page shows Cloudflare error 521 (Web Server Is Down), which means Cloudflare could not establish a connection to the site's origin web server, so no page content can be displayed. Visitors are advised to wait a few minutes and try again. Site owners are advised to contact their hosting provider. The error page also shows that the site runs behind Cloudflare for performance and security.
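
A 521 means Cloudflare could not open a connection to the origin. A site owner can therefore check whether the origin accepts TCP connections at all when contacted directly, bypassing Cloudflare. Below is a minimal standard-library sketch; the host and port you would pass are placeholders for your own origin server. Treat the result only as a hint. A firewall that handles Cloudflare's IP ranges differently from yours will give different answers to different clients, and blocking Cloudflare's IPs is a common cause of 521.

```python
import socket


def origin_accepts_connections(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures are all OSError subclasses.
        return False


if __name__ == "__main__":
    # Local stand-in for an origin server: a socket listening on a free port.
    listener = socket.socket()
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    port = listener.getsockname()[1]
    print(origin_accepts_connections("127.0.0.1", port))  # port is listening
    listener.close()
    print(origin_accepts_connections("127.0.0.1", port))  # port is now closed
```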

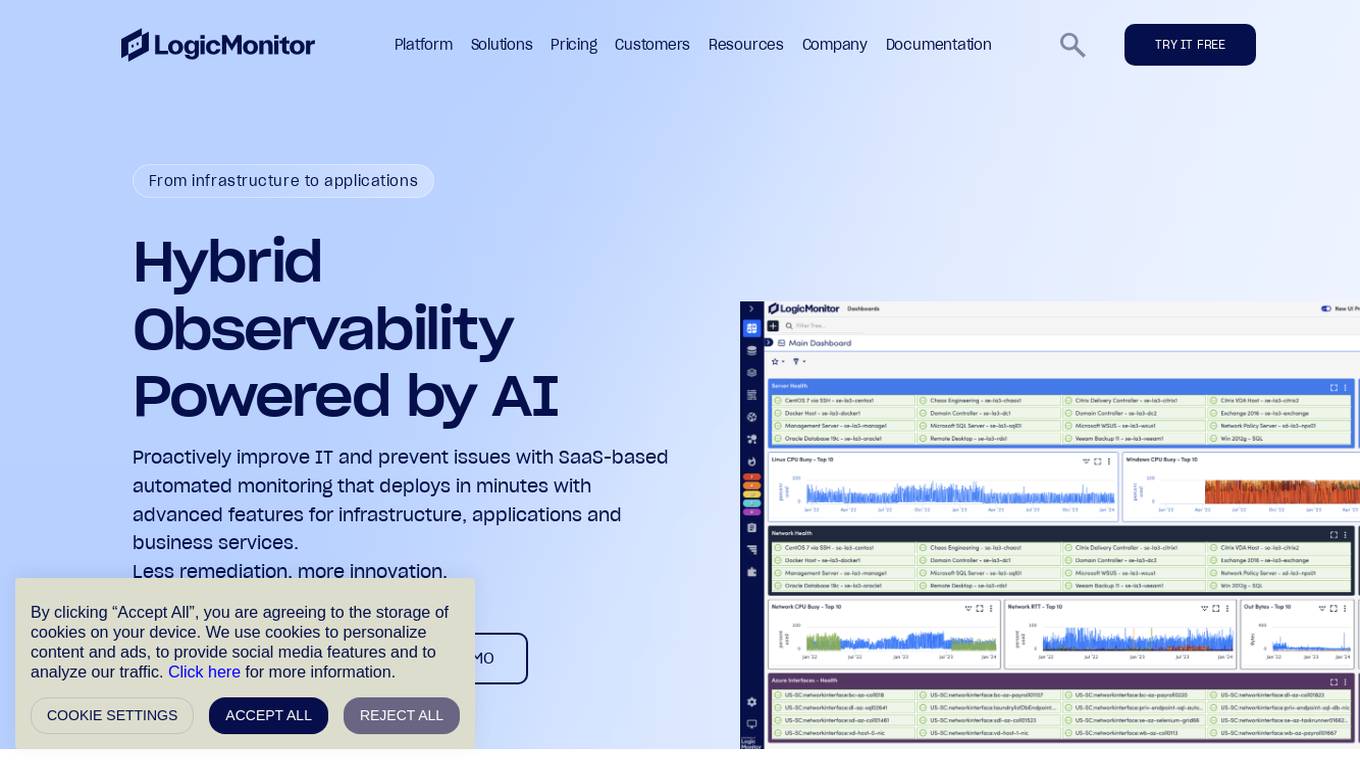

LogicMonitor

LogicMonitor is a cloud-based infrastructure monitoring platform that uses an agentless architecture to provide real-time insights and automation. Its coverage includes:

- infrastructure, network, server, remote, virtual machine, SD-WAN, database, storage, configuration, cloud and container monitoring;
- AWS, GCP and Azure monitoring;
- digital experience, SaaS and website monitoring;
- APM, AIOps and Dexda integrations;
- security dashboards and logs.

LogicMonitor's AI-driven hybrid observability aims to help organizations simplify complex IT ecosystems and accelerate incident response.

Application Error

The website is showing an application error: a technical fault is preventing the application from working properly. Application errors can stem from bugs in the code, server problems, or unexpected user input. They should be diagnosed and fixed promptly to keep the site running smoothly.
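
Prompt troubleshooting starts with a record of what failed. As an illustration, assume a Python WSGI application; the page does not say what stack the site actually uses. A small middleware can log every unhandled exception with its traceback and answer with a plain 500 response instead of crashing. The middleware name and the "Application Error" body are hypothetical.

```python
import logging
import sys
from typing import Callable, Iterable

logger = logging.getLogger("app-errors")


def error_logging_middleware(app: Callable) -> Callable:
    """Wrap a WSGI app so unhandled exceptions are logged and answered with a 500."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        try:
            return app(environ, start_response)
        except Exception:
            # Record the traceback and request path so the fault can be traced later.
            logger.exception("Unhandled error on %s", environ.get("PATH_INFO", "?"))
            body = b"Application Error"
            # Passing exc_info marks this as an error response; per PEP 3333 the
            # server re-raises it if headers have already been sent.
            start_response(
                "500 Internal Server Error",
                [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))],
                sys.exc_info(),
            )
            return [body]

    return wrapped


if __name__ == "__main__":
    def broken_app(environ, start_response):
        raise RuntimeError("database connection lost")

    statuses = []
    app = error_logging_middleware(broken_app)
    body = app({"PATH_INFO": "/orders"}, lambda status, headers, exc_info=None: statuses.append(status))
    print(statuses, b"".join(body))
```

This only catches errors raised while the wrapped app is being called. Exceptions raised later, while the server iterates a lazily generated response body, need separate handling.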

Application Error

This website appears to be showing an application error, a technical fault in the application itself. It may be temporary and need fixing by the site's developers. Common causes include bugs in the code, server issues, and database problems. Users who hit this error can try refreshing the page or clearing their cache, or they can contact the site's support team.

HostAI

HostAI is a platform for hosting artificial intelligence models and applications. It provides a user-friendly interface for managing and deploying AI projects without complex server setups. Projects run in a secure and efficient environment, and the platform supports a range of AI frameworks and libraries. By simplifying deployment, HostAI lets users focus on developing and improving their models.

Autobackend.dev

Autobackend.dev is a web development platform that provides tools and resources for building and managing backend systems. It offers a range of features such as database management, API integration, and server configuration. With Autobackend.dev, users can streamline the process of backend development and focus on creating innovative web applications.

Modal

Modal is a high-performance cloud platform for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. Users bring their own code, and the platform's optimized container file system provides fast cold boots and seamless autoscaling. Modal is engineered for large-scale workloads: users can scale to hundreds of GPUs and pay only for what they use. Functions deploy to the cloud in seconds without YAML or Dockerfiles. Modal also provides job scheduling, web endpoints, observability, and security compliance features.

LangChain

LangChain offers a suite of products that support developers across the LLM application lifecycle. These include a framework for building LLM-powered apps, visibility into app performance, and a turnkey solution for serving APIs. LangChain helps developers build context-aware, reasoning applications and keep them future-proof by making it easy to switch between model vendors. LangSmith, part of the LangChain suite, helps teams improve accuracy and performance, iterate faster, and ship new AI features. LangChain pitches these tools as a way to improve operational efficiency, strengthen discovery and personalization, and deliver premium, revenue-generating products.

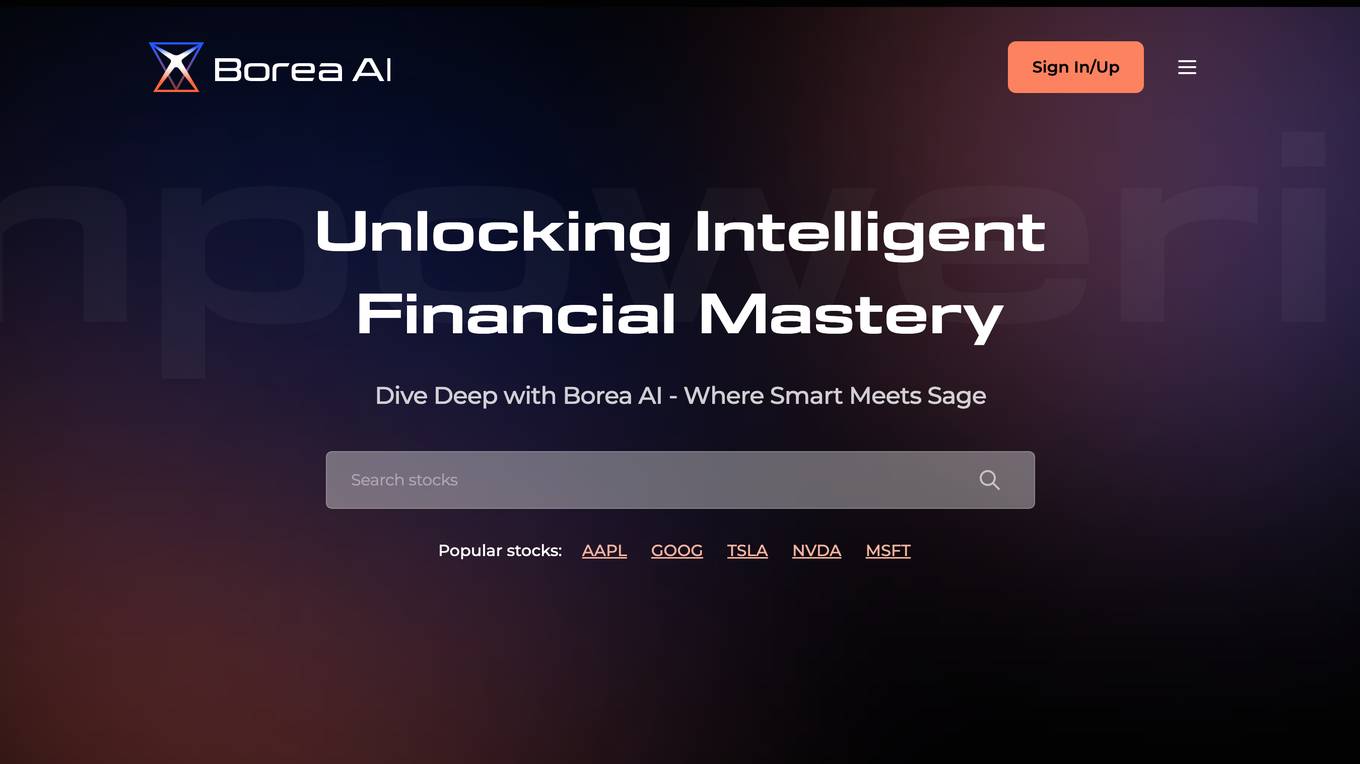

Borea AI

Borea AI is an AI application that predicts stock prices and rates stocks based on past market behavior and historical stock performance. It acts as a personal financial assistant, offering insights on popular stocks, market leaders, index ETFs, top movers, most-tweeted stocks, and its best-performing predictions. As Borea AI itself notes, past performance does not indicate future results, and its output is not a substitute for professional investment advice.

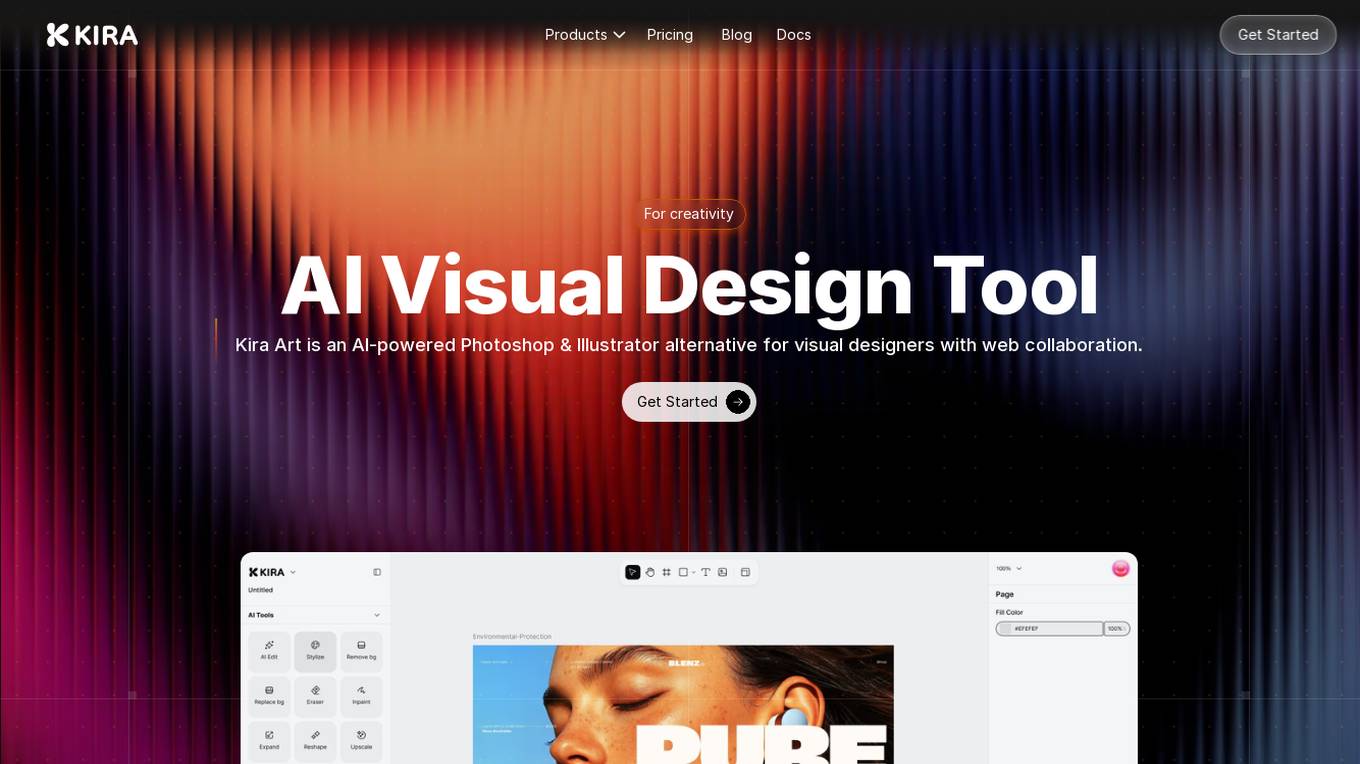

Kira Art

Kira Art is an AI-powered visual design tool positioned as an alternative to Photoshop and Illustrator. It offers fully featured vector and raster workspaces, AI image generation and editing built on advanced AI models, real-time analytics, and advanced typography options. It runs on any platform or device with a distraction-free interface. Kira Art aims to make the visual design process faster and smoother for designers.

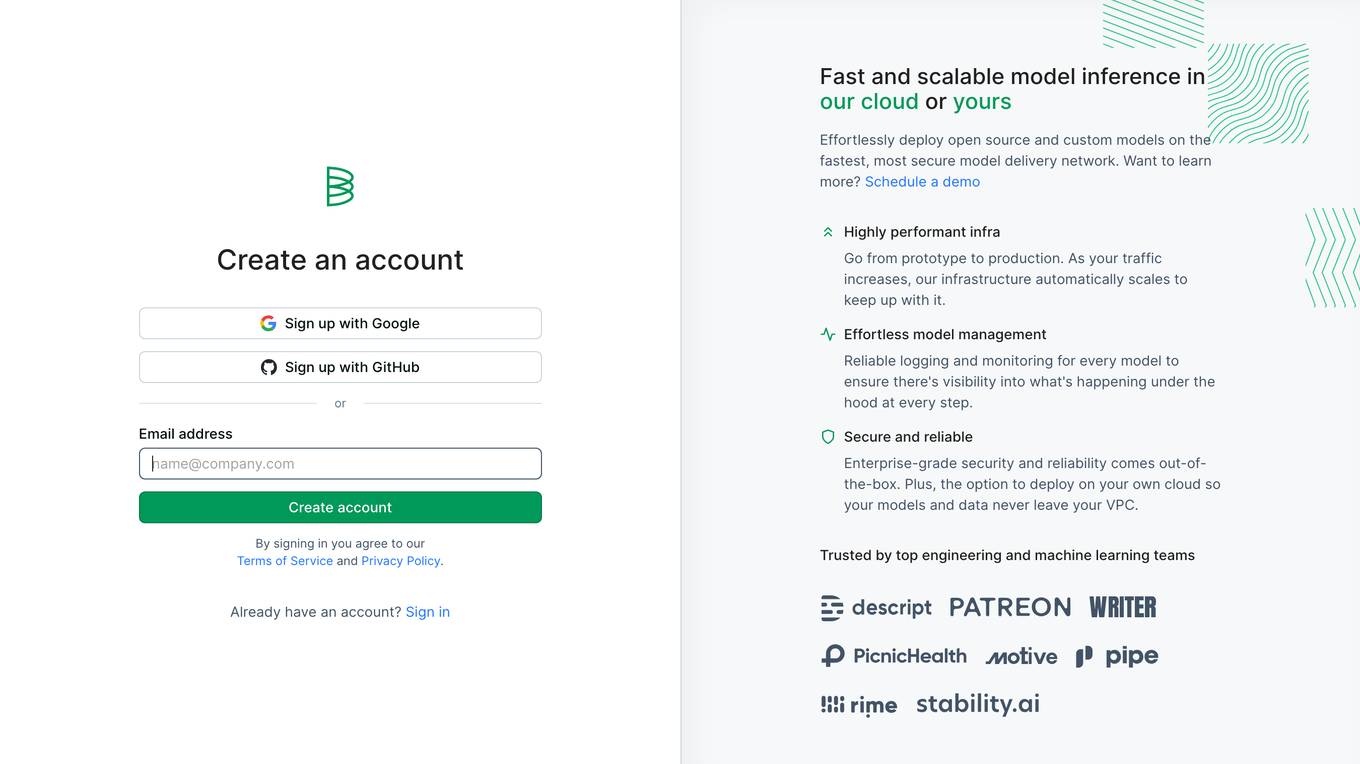

Baseten

Baseten is a machine learning infrastructure platform that gives data scientists and engineers one place to build, train, and deploy machine learning models. It covers the ML lifecycle from data preparation through model training to deployment. It also offers a marketplace of pre-built models and components to speed up the development of ML applications.

Web Server Error Resolver

The website is returning a '403 Forbidden' error: the server understood the client's request but refuses to fulfill it. The 'openresty' text on the error page most likely identifies the web server software (OpenResty, a web platform built on NGINX). The cause of the 403 must be diagnosed and fixed before the site's content can be accessed again.
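
When a 403 page shows 'openresty', one quick check is to request the page yourself and read both the status code and the `Server` response header. Below is a minimal standard-library sketch. The `probe` helper and the local demo server, which imitates a site answering 403 with `Server: openresty`, are illustrative. Real servers may omit or alter the `Server` header.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional, Tuple


def probe(url: str, timeout: float = 5.0,
          opener: Optional[urllib.request.OpenerDirector] = None) -> Tuple[int, Optional[str]]:
    """Return (status code, Server header) for a GET request, error responses included."""
    opener = opener or urllib.request.build_opener()
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status, resp.headers.get("Server")
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError, but still carry the status and headers.
        return err.code, err.headers.get("Server")


class ForbiddenHandler(BaseHTTPRequestHandler):
    """Local stand-in for the site: answers every GET with 403 and Server: openresty."""

    def version_string(self) -> str:
        return "openresty"

    def do_GET(self) -> None:
        self.send_response(403)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format: str, *args) -> None:
        pass  # keep the demo quiet


def run_local_demo() -> Tuple[int, Optional[str]]:
    server = HTTPServer(("127.0.0.1", 0), ForbiddenHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # Bypass any proxy configured in the environment for this local request.
    no_proxy = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        return probe(f"http://127.0.0.1:{server.server_port}/", opener=no_proxy)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_local_demo())
```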

Server Error Analyzer

The website hit a 500 Server Error, a server-side problem that kept it from completing the request. This error usually points to a server misconfiguration or a failure while processing the request. The error page advises users to wait 30 seconds and try again.
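
A client that retries automatically can follow the page's 30-second advice while still respecting a `Retry-After` header if the server sends one. This is an assumption: the error page does not say that it does. `Retry-After` may hold either a number of seconds or an HTTP date. Below is a minimal sketch covering both forms; the function name is hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional

# Fallback delay taken from the error page's advice.
DEFAULT_WAIT_SECONDS = 30.0


def seconds_to_wait(retry_after: Optional[str], now: Optional[datetime] = None) -> float:
    """Turn a Retry-After header value into a delay, falling back to 30 seconds.

    Accepts delta-seconds ("120") or an HTTP-date ("Wed, 21 Oct 2015 07:28:00 GMT").
    """
    if retry_after is None:
        return DEFAULT_WAIT_SECONDS
    value = retry_after.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        # Unparseable value: ignore it and use the default.
        return DEFAULT_WAIT_SECONDS
    if when.tzinfo is None:
        # HTTP-dates are always GMT, so treat an offset-less result as UTC.
        when = when.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    # A date in the past means "retry now".
    return max(0.0, (when - now).total_seconds())


if __name__ == "__main__":
    print(seconds_to_wait(None), seconds_to_wait("120"))
```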

ForbiddenGuard

The website is returning a '403 Forbidden' error: the server understood the client's request but refuses to fulfill it. The 'openresty' text on the page likely names the web server software in use. The desired content can only be reached again once the cause of the 403 is found and fixed.

403 Forbidden

The website appears to display a '403 Forbidden' error, which typically means the visitor is not authorized to access the requested page. This error often appears when a page is requested without the necessary permissions, or when the server is configured to deny access. The 'openresty' message suggests the server runs the OpenResty web platform. Site owners should check that the correct permissions are in place and that the requested page exists on the server.

OpenResty Server Manager

The website seems to be experiencing a 403 Forbidden error, which typically indicates that the server is denying access to the requested resource. This error is often caused by incorrect permissions or misconfigurations on the server side. The message 'openresty' suggests that the server may be using the OpenResty web platform. Users encountering this error may need to contact the website administrator for assistance in resolving the issue.

Belva

Belva is an AI-powered phone assistant that makes calls on your behalf. You state your objective, the AI places the call, and you get a live transcription of the conversation. Belva's envoys can handle tasks ranging from booking appointments and reservations to resolving customer issues. You can monitor active calls in real time or review saved transcripts to pull information from past calls. Letting the AI do the talking saves you time and hassle.

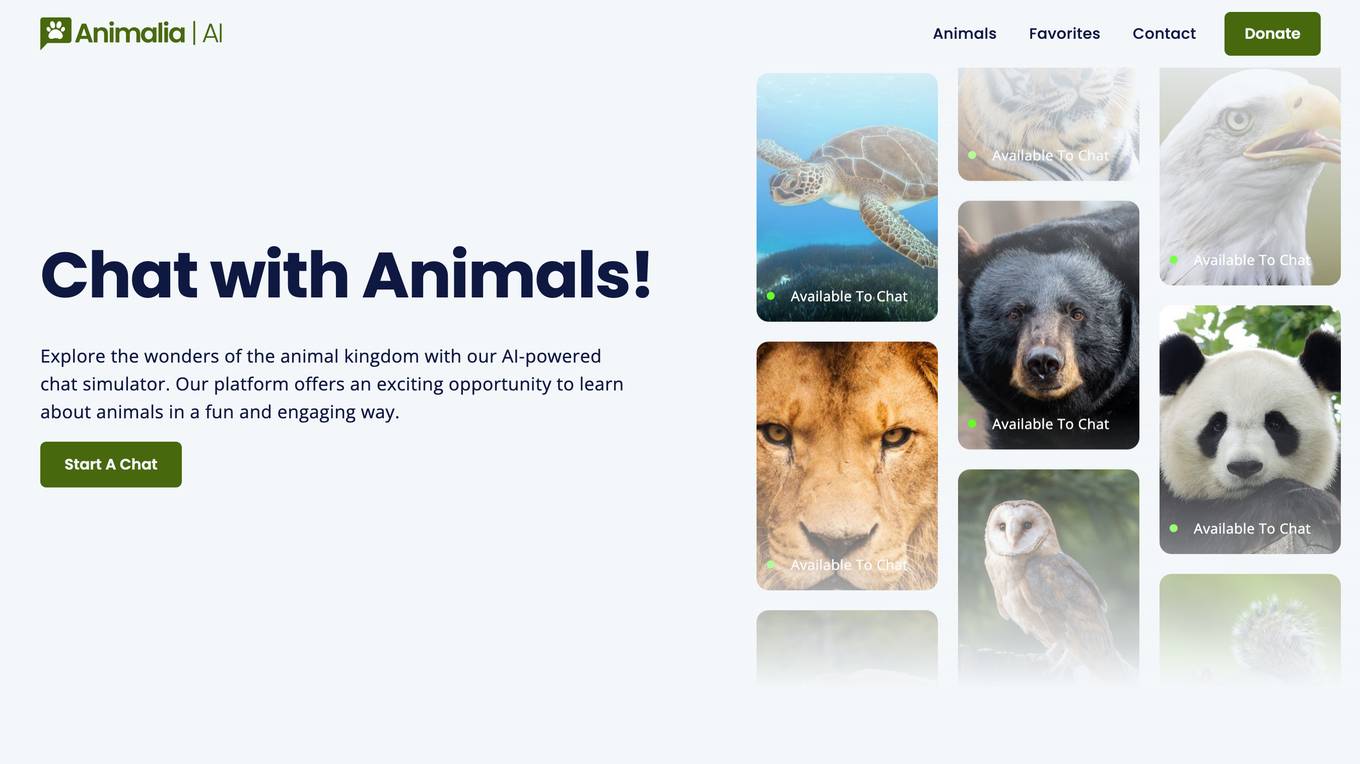

1 - Open Source AI Tools

mcp-server-motherduck

The mcp-server-motherduck repository is a Model Context Protocol (MCP) server that connects AI assistants and IDEs to DuckDB and MotherDuck databases. Through it, they can run SQL queries for data analysis.

20 - OpenAI GPTs

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Qtech | FPS

Frost Protection System is an AI bot for optimizing open-field farming of fruits, vegetables, and flowers. It combines real-time data with AI to boost yields, cut costs, and support sustainable practices through a user-friendly interface.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things financial cybersecurity.

AML/CFT Expert

Specializes in Anti-Money Laundering/Counter-Financing of Terrorism compliance and analysis.