Best AI tools for Monitor Performance Metrics

20 - AI tool Sites

Crayon

Crayon is competitive intelligence software that helps businesses track competitors, win more deals, and stay ahead in the market. Powered by AI, Crayon helps users analyze their competitive landscape, enable sales teams, compete in deals, and measure results. The platform offers features such as competitor monitoring, AI news summarization, importance scoring, content creation, sales enablement, and performance metrics. With Crayon, users can receive high-priority insights, distill articles about competitors, create battlecards, find intel to win deals, and track performance metrics. The application aims to make competitive intelligence seamless and impactful for sales teams.

Kolank

Kolank is an AI platform offering a unified API with agent interoperability, automatic model selection, and cost optimization. It enables AI agents to communicate and collaborate efficiently, providing access to a wide range of AI models for text, image, and video processing. With features like load balancing, fallbacks, and performance metrics, Kolank simplifies AI model integration and usage, making it a comprehensive solution for various AI tasks.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Jyotax.ai

Jyotax.ai is an AI-powered tax solution that simplifies tax compliance. It offers bookkeeping, payroll processing, worldwide tax return preparation and filing automation, profit recovery, contract compliance, and financial modeling and budgeting services. The platform provides accurate reporting, real-time compliance monitoring, customizable tax tools, and seamless data integration for global tax operations.

OtterTune

OtterTune was a database tuning service start-up founded by researchers from Carnegie Mellon University. The company is no longer operational. According to the company's site, its founder, DJ OT, is currently in prison for a parole violation. Despite its closure, OtterTune was known for its innovative approach to database tuning. The company received support from a dedicated team and investors who believed in its mission. The legacy of OtterTune lives on through its research, music archive, and GitHub repository.

Samespace

Samespace is an AI-powered platform designed to enhance collaboration, interactions, and team productivity in the workplace. It offers a suite of tools that leverage artificial intelligence to streamline communication, optimize workflow, and improve overall efficiency. With a focus on building dream teams effortlessly, Samespace provides a beautiful and privacy-focused team collaboration experience. The platform also includes productivity tracking features to help users monitor and enhance their performance. Additionally, Samespace offers a cutting-edge contact center platform tailored for the AI age, enabling businesses to deliver exceptional customer service.

Quantum Rise

Quantum Rise is an AI consultancy firm that believes in the power of combining human and machine intelligence to drive growth with AI and automation. They offer AI transformation services, AI for key metrics, and AI due diligence to help businesses adapt to the changing landscape. Quantum Rise focuses on delivering continuous improvement, agile development, and long-lasting results through sustainable and ethical practices. Their team of experts is dedicated to supporting businesses with data, automation, and AI solutions to achieve success in the modern era.

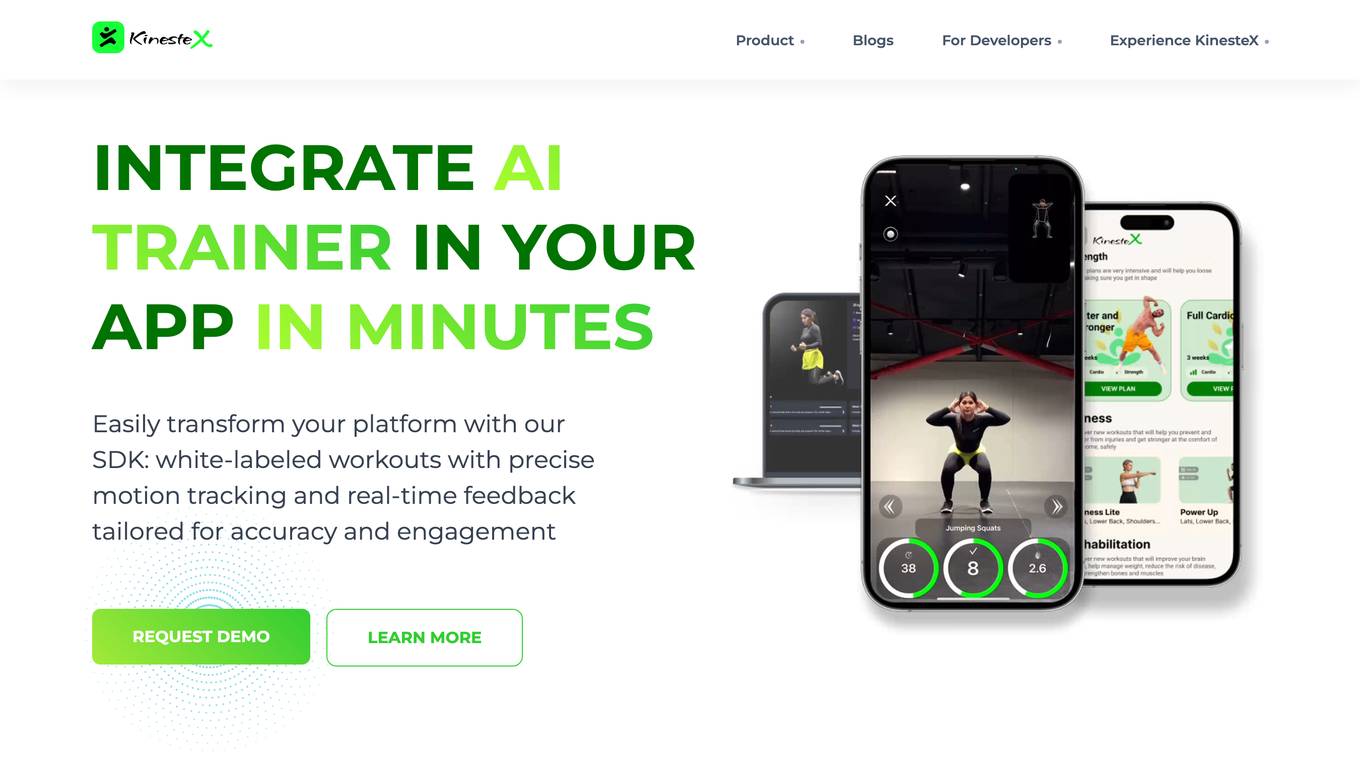

KinesteX AI

KinesteX AI is a personal fitness trainer and motion analysis SDK that offers a white-labeled motion tracking solution with ready-made or custom AI experiences. It seamlessly integrates into platforms to provide valuable insights such as movement tracking, exercise rep-count, calorie-burn rate, workout performance metrics, and more. The AI-powered motion tracking boosts user engagement, retention, and revenue by creating personalized fitness experiences. KinesteX SDK supports any camera-enabled device across Android, iOS, web, and gaming platforms for quick integration.

Fieldproxy

Fieldproxy is an AI-powered Field Service Management (FSM) software that offers solutions for various industries such as HVAC, Solar Energy, Telecommunications, Electrical & Powergrid Systems, Pest Control Services, FMCG, Banking & Financial Services, Construction, and Facilities Management. The platform provides real-time oversight, automated ticket creation, predictive service scheduling, and asset lifecycle management. Fieldproxy aims to empower on-ground teams, increase productivity, automate workflows, and enhance customer service across different sectors.

Rio Sustainability Platform

Rio Sustainability Platform is an intelligent and transparent sustainability accounting application that provides powerful, real-time data for actionable sustainability performance. The platform offers high-quality data tracked to the source to drive smarter decisions, uncover efficiencies, and reduce costs. Rio is trusted by governments, investors, and enterprise leaders for reliable ESG intelligence, translating sustainability ambitions into real-time, verifiable results using operational data and AI.

Out Of The Blue

Out Of The Blue is an AI-powered revenue optimization platform designed to help eCommerce businesses identify and address revenue-impacting issues in real-time. The platform monitors key metrics and data sources across marketing, sales, and analytics systems to detect anomalies and outages that can lead to lost revenue. By leveraging AI and machine learning algorithms, Out Of The Blue automates the process of detecting and diagnosing revenue-related problems, enabling businesses to respond quickly and effectively. The platform provides actionable insights and recommendations to help businesses optimize their revenue streams and improve overall performance.

AdminIQ

AdminIQ is an AI-powered site reliability platform that helps businesses improve the reliability and performance of their websites and applications. It uses machine learning to analyze data from various sources, including application logs, metrics, and user behavior, to identify and resolve issues before they impact users. AdminIQ also provides a suite of tools to help businesses automate their site reliability processes, such as incident management, change management, and performance monitoring.

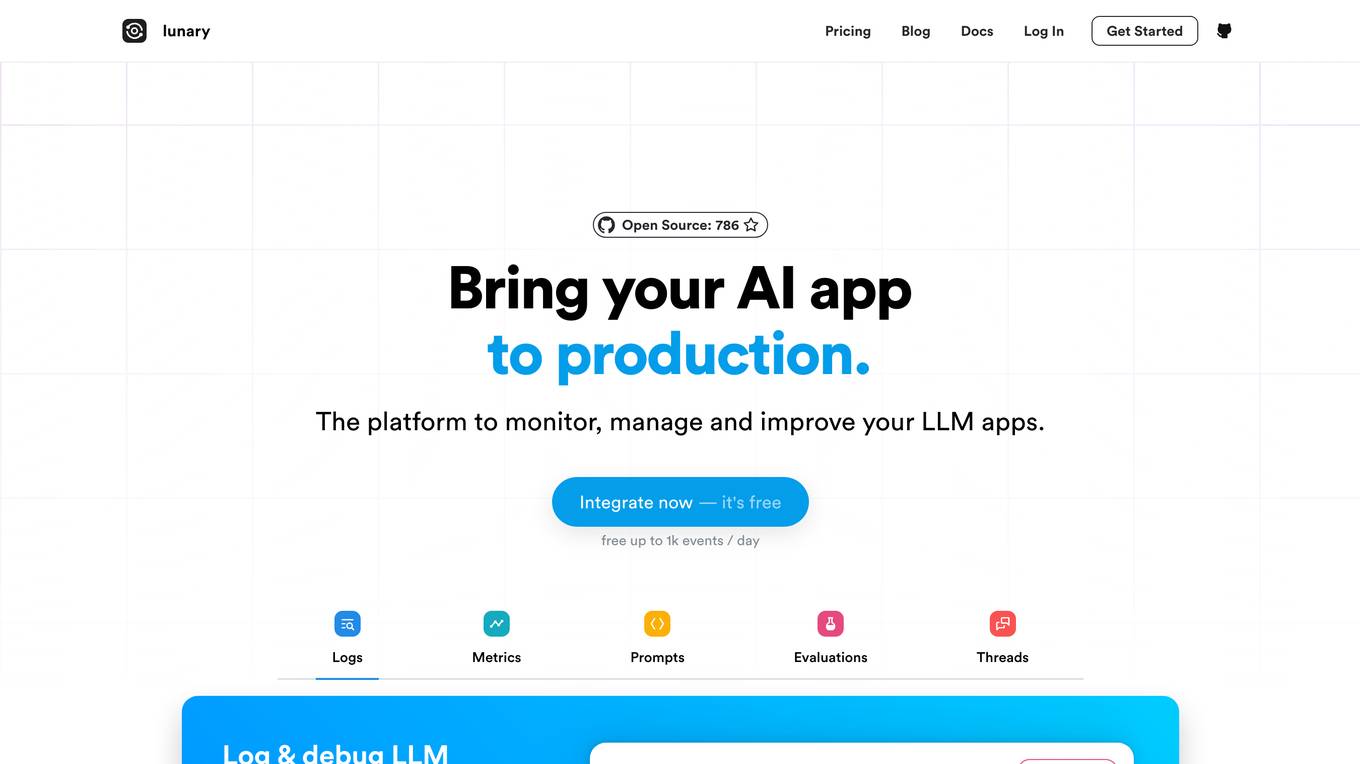

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

Kodus

Kodus is an AI-powered tool designed for agile project management. It offers automated assistance to team leaders in analyzing team performance, identifying productivity opportunities, and providing improvement suggestions. The tool keeps teams updated on progress, automates management processes, and ensures the integrity of engineering and agility metrics. Kodus aims to help teams unlock their full potential by streamlining processes and enhancing productivity within 90 days.

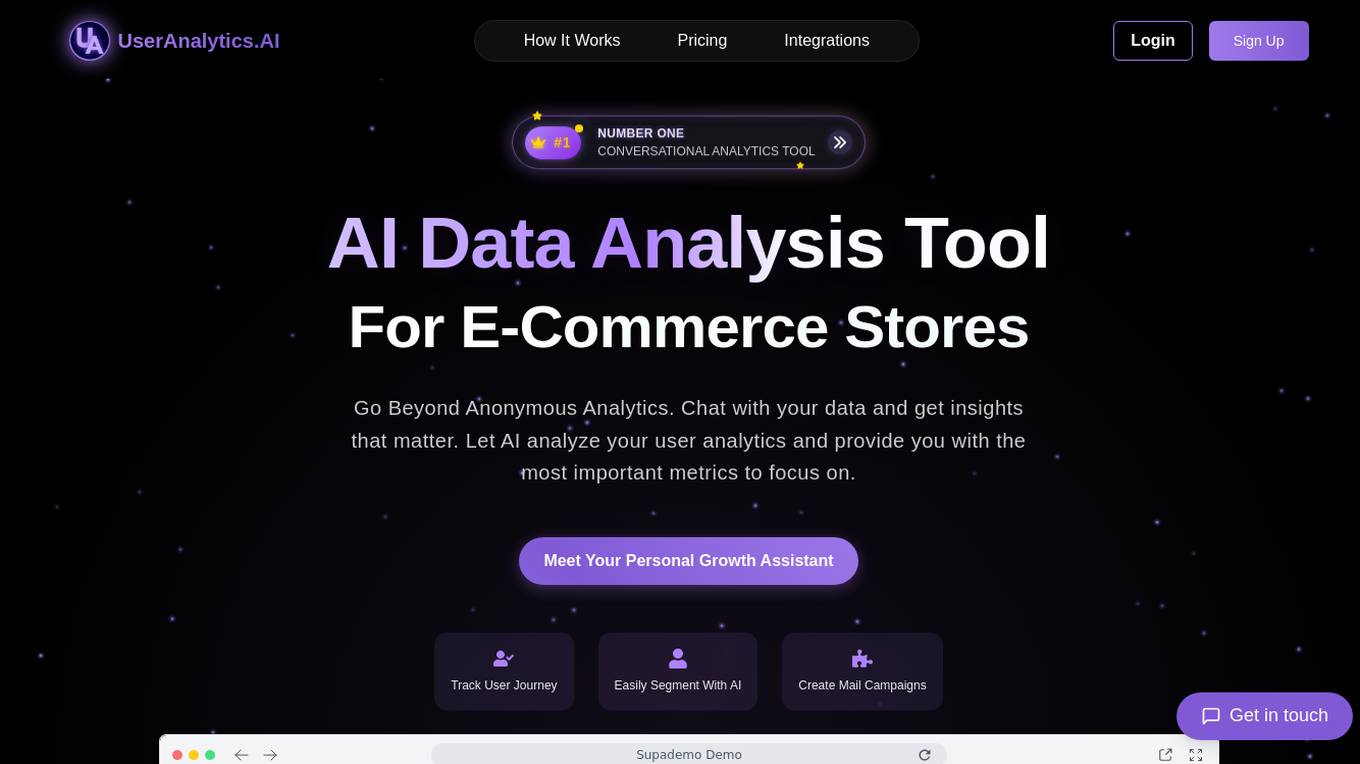

UserAnalytics.AI

UserAnalytics.AI is an AI data analysis tool designed for e-commerce platforms such as Shopify and WooCommerce, as well as SaaS businesses. It provides in-depth analytics and insights to help businesses optimize their online performance and make data-driven decisions. UserAnalytics.AI offers a user-friendly interface for tracking and analyzing metrics related to online stores, customer behavior, and sales performance.

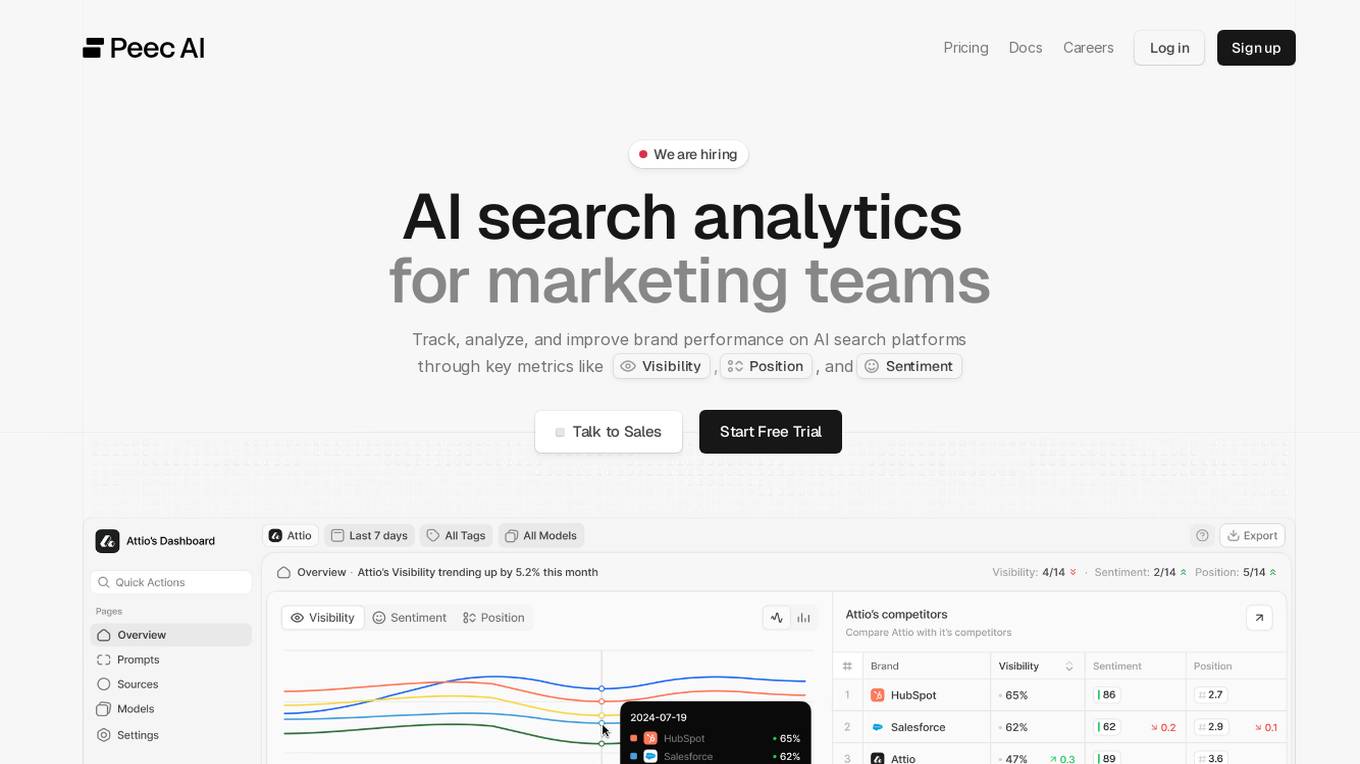

Peec AI

Peec AI is an AI search analytics tool designed for marketing teams to track, analyze, and improve brand performance on AI search platforms. It provides key metrics such as Visibility, Position, and Sentiment to help businesses understand how AI perceives their brand. The platform offers insights on AI visibility, prompts analysis, and competitor tracking to enhance marketing strategies in the era of AI and generative search.

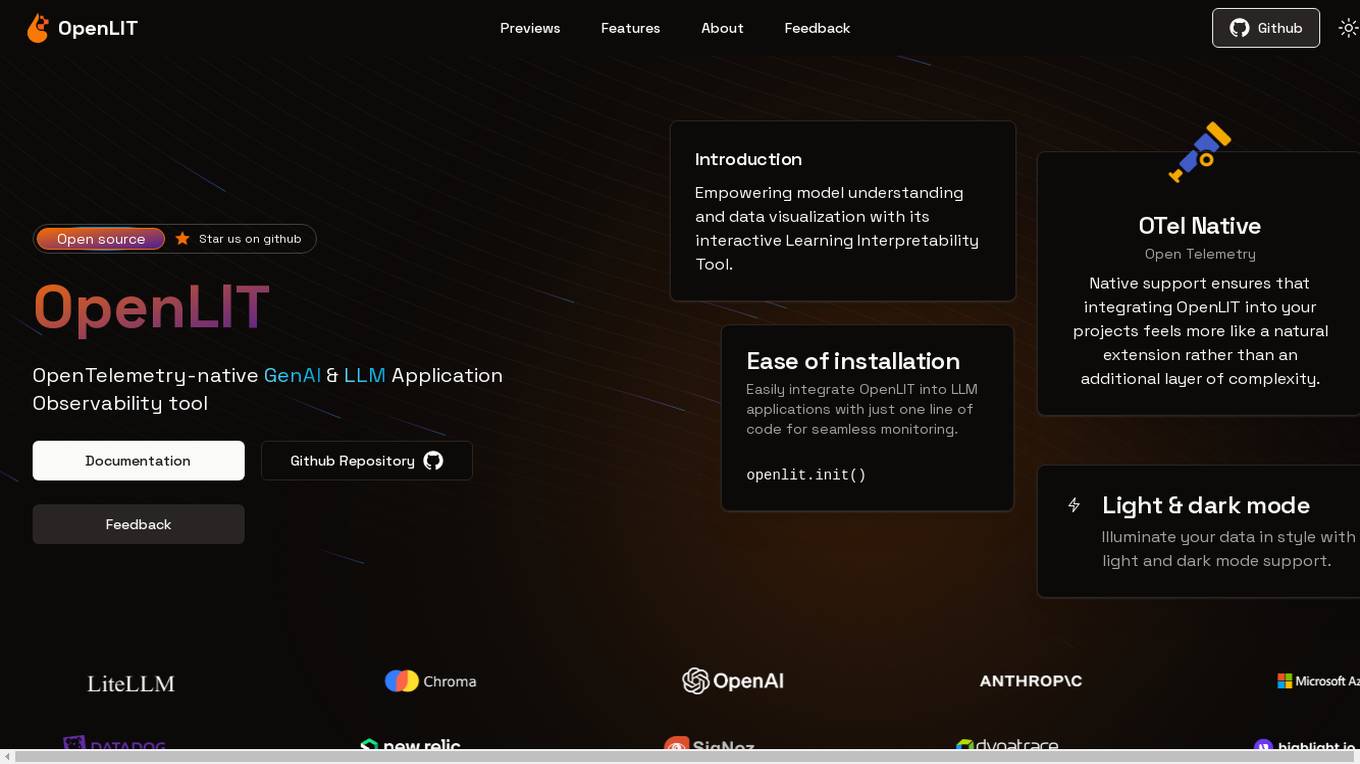

OpenLIT

OpenLIT is an observability tool for GenAI and LLM applications. It supports model understanding and data visualization through an interactive Learning Interpretability Tool. With OpenTelemetry-native support, it integrates into existing projects and offers features such as fine-tuning performance, real-time data streaming, low-latency processing, and visualization of data insights. The tool is easy to install, has light and dark modes, and connects to popular observability platforms for data export. OpenLIT follows OpenTelemetry community standards and provides insights for improving application performance and reliability.

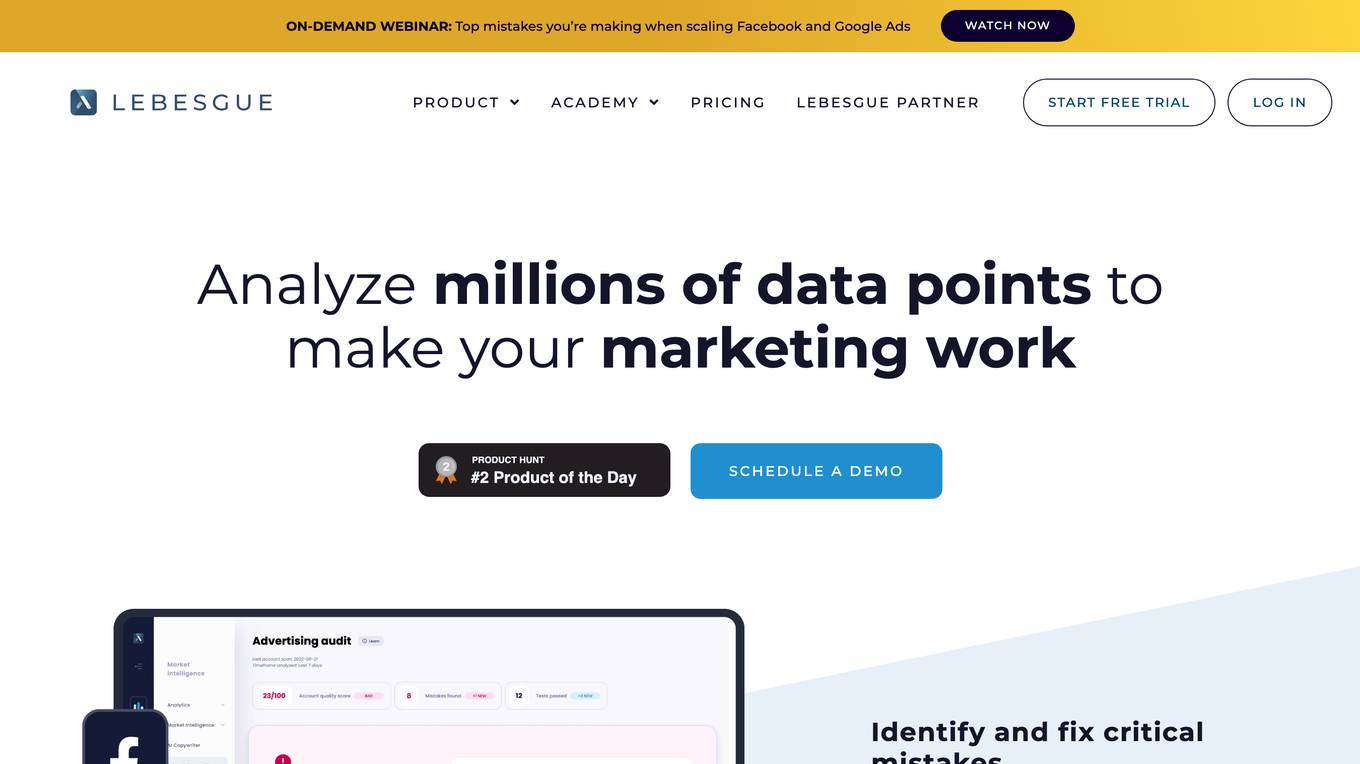

Lebesgue: AI CMO

Lebesgue: AI CMO is an AI-powered marketing tool designed to provide advanced insights and analytics for e-commerce businesses. It offers features such as competitor tracking, product intelligence, stock inventory strategy, and creative strategist. The tool helps businesses make data-driven decisions by analyzing marketing trends and competitor activities. Lebesgue aims to enhance marketing strategies and improve overall performance by leveraging AI technology and first-party data analytics.

illumex

illumex is a generative semantic fabric platform that streamlines the interpretation and rationalization of data and analytics for complex enterprises. It offers augmented analytics creation, suggestive data and analytics utilization monitoring, and automated knowledge documentation to enhance agentic performance for analytics. The platform aims to solve three problems: tedious traditional data analysis, incongruent data and metrics, and knowledge that exists only as tribal knowledge within data teams.

Fetcher

Fetcher is an AI-powered recruitment platform that simplifies the hiring process by providing curated batches of talented candidates tailored to specific needs. It eliminates manual sourcing by leveraging advanced AI technology and human expertise to deliver high-quality candidate profiles directly to the user's inbox. With features like personalized email outreach, recruitment analytics, and diversity metrics monitoring, Fetcher optimizes the recruitment pipeline and enhances candidate engagement. Trusted by top recruiting teams worldwide, Fetcher offers unlimited candidate connections and email performance analytics to streamline the hiring strategy.

0 - Open Source AI Tools

20 - OpenAI GPTs

Performance Testing Advisor

Ensures software performance meets organizational standards and expectations.

SalesforceDevops.net

Guides users on Salesforce DevOps products and services in the voice of Vernon Keenan from SalesforceDevops.net.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills. Your DevOps gym.

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!

Project Performance Monitoring Advisor

Guides project success through comprehensive performance monitoring.