Best AI tools for "Monitor Cluster Resources"

20 - AI Tool Sites

Pulse

Pulse is an expert support tool for big data stacks, focused on the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse uses AI for issue detection and root-cause analysis, complemented by human expertise in search cluster management.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Meticulous

Meticulous is an AI tool for frontend testing that automatically generates and maintains test suites for web applications, removing the need to write and maintain tests by hand. It uses AI to monitor user interactions, generate test suites, and provide visual end-to-end testing. Tests run in parallel across a compute cluster and integrate with existing test suites. The tool is built to handle complex applications and give developers confidence in their code changes.

Mixpeek Solutions

Mixpeek Solutions offers a Multimodal Data Warehouse for Developers, providing a Developer-First API for AI-native Content Understanding. The platform allows users to search, monitor, classify, and cluster unstructured data like video, audio, images, and documents. Mixpeek Solutions offers a range of features including Unified Search, Automated Classification, Unsupervised Clustering, Feature Extractors for Every Data Type, and various specialized extraction models for different data types. The platform caters to a wide range of industries and provides seamless model upgrades, cross-model compatibility, A/B testing infrastructure, and simplified model management.

Mystic.ai

Mystic.ai is an AI tool for deploying and scaling machine learning models. It offers a fully managed Kubernetes platform that lets users deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, a simpler developer experience, and performance optimizations for high-performance AI model serving. With features like a pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

MailSynth

MailSynth is the first Gmail assistant designed specifically for individuals with ADHD. It helps users stay organized, informed, and stress-free by automatically labeling, highlighting, and archiving emails based on user instructions. The tool offers a daily briefing, custom auto-labeling, and archiving features to keep inboxes focused and clutter-free. MailSynth is praised for its ability to filter distractions, show key updates first, and help users save time and money by catching relevant discounts. It also allows users to enjoy newsletter content without the clutter and monitor keywords in emails. The tool prioritizes user privacy by ensuring emails stay within Google's ecosystem and running 100% on Google Infrastructure.

Keywordly

Keywordly is an AI-powered SEO Content Workflow Platform designed to help users optimize, write, research, and monitor content for better rankings. It offers features such as AI-powered content creation, content research and planning, content optimization, and more. With Keywordly, users can generate high-quality content aligned with keyword clusters, identify high-value keywords, repurpose and optimize existing content, and boost brand visibility on search engines and platforms like Google and ChatGPT. The platform streamlines the content creation process by providing tools for keyword discovery, clustering, and content strategy planning, helping users drive organic traffic and improve their SEO efforts.

LLMMM Marketing Monitor

LLMMM is an AI tool designed to monitor how AI models perceive and present brands. It offers real-time monitoring and cross-model insights to help brands understand their digital presence across various leading AI platforms. With automated analysis and lightning-fast results, LLMMM provides immediate visibility into how AI chatbots interpret brands. The tool focuses on brand intelligence, brand safety monitoring, misalignment detection, and cross-model brand intelligence. Users can create an account in minutes and access a range of features to track and analyze their brand's performance in the AI landscape.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

Devi

Devi is an AI-powered social media lead generation and outreach tool that helps businesses find and engage with potential customers on Facebook, LinkedIn, Twitter, Reddit, and other platforms. It uses artificial intelligence to monitor keywords and identify high-intent leads, and then provides users with tools to reach out to those leads and build relationships. Devi also offers a variety of other features, such as AI-generated content, scheduling, and analytics.

Hexowatch

Hexowatch is an AI-powered website monitoring and archiving tool that helps businesses track changes to any website, including visual, content, source code, technology, availability, or price changes. It provides detailed change reports, archives snapshots of pages, and offers side-by-side comparisons and diff reports to highlight changes. Hexowatch also allows users to access monitored data fields as a downloadable CSV file, Google Sheet, RSS feed, or sync any update via Zapier to over 2000 different applications.

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, and it is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability for building and deploying AI applications.

KWatch.io

KWatch.io is a social listening tool that helps businesses monitor keywords on social media platforms like LinkedIn, Twitter, Reddit, and Hacker News. It uses AI to analyze the sentiment around keywords and provides real-time alerts when specific keywords are mentioned. KWatch.io can be used for a variety of purposes, including attracting customers, getting feedback, watching competitors, conducting market intelligence, and providing customer support. It offers various plans, including a free plan, an essential plan for $19/month, a business plan for $79/month, and an enterprise plan for $199/month.

AI Spend

AI Spend is an application that helps users monitor their AI costs and avoid surprises. It tracks OpenAI usage and costs, providing a dashboard, cost insights, notifications, usage analytics, and details on models and tokens. The application offers simple pricing with no additional costs and stores API keys securely. Users can remove their data at any time.

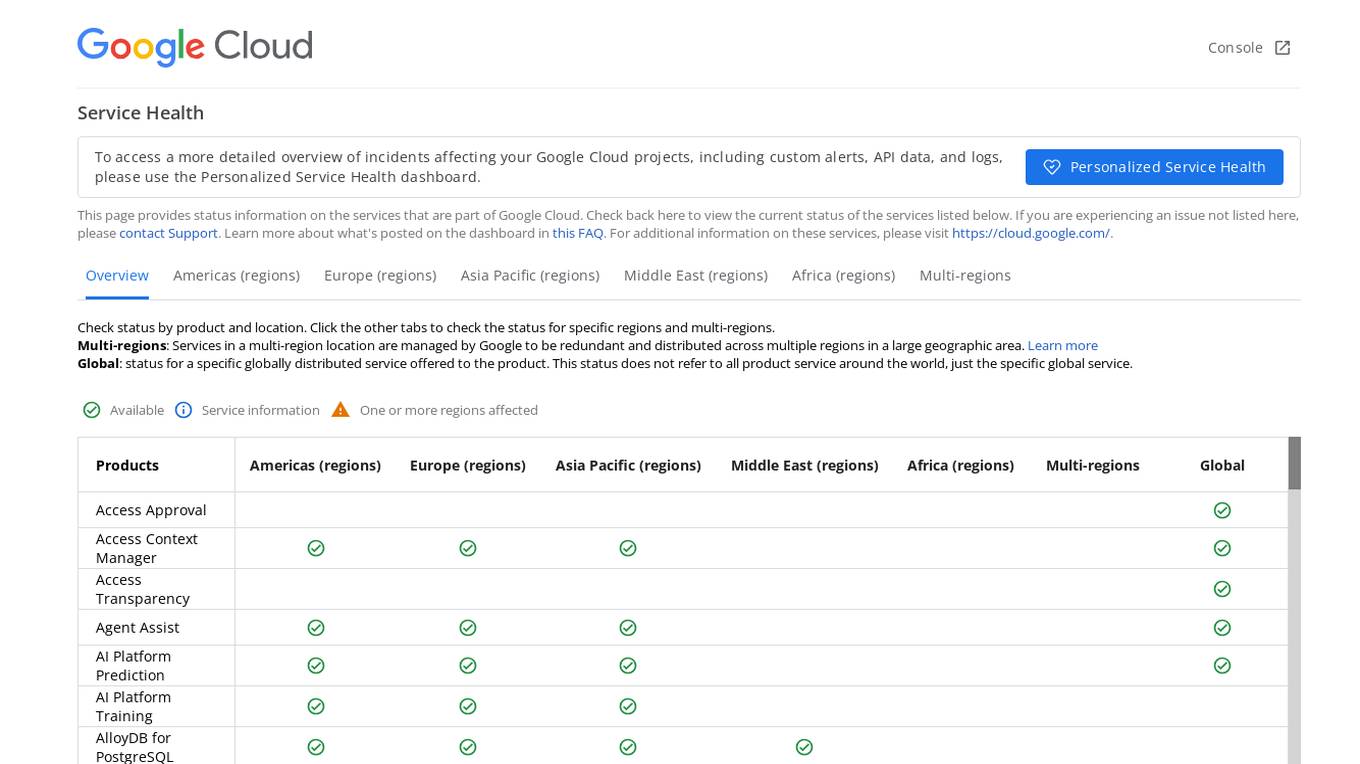

Google Cloud Service Health Console

Google Cloud Service Health Console provides status information on the services that are part of Google Cloud. It allows users to check the current status of services, view detailed overviews of incidents affecting their Google Cloud projects, and access custom alerts, API data, and logs through the Personalized Service Health dashboard. The console also offers a global view of the status of specific globally distributed services and allows users to check the status by product and location.

Vocera

Vocera is an AI voice agent testing tool that allows users to test and monitor voice AI agents efficiently. It enables users to launch voice agents in minutes, ensuring a seamless conversational experience. With features like testing against AI-generated datasets, simulating scenarios, and monitoring AI performance, Vocera helps in evaluating and improving voice agent interactions. The tool provides real-time insights, detailed logs, and trend analysis for optimal performance, along with instant notifications for errors and failures. Vocera is designed to work for everyone, offering an intuitive dashboard and data-driven decision-making for continuous improvement.

Browse AI

Browse AI is a powerful AI-powered data extraction platform that allows users to scrape and monitor data from any website without the need for coding. With Browse AI, users can easily extract data, monitor websites for changes, turn websites into APIs, and integrate data with over 7,000 apps. The platform offers prebuilt robots for various use cases like e-commerce, real estate, recruitment, and more. Browse AI is trusted by over 740,000 users worldwide for its reliability, scalability, and ease of use.

Fiddler AI

Fiddler AI is an AI Observability platform that provides tools for monitoring, explaining, and improving the performance of AI models. It offers a range of capabilities, including explainable AI, NLP and CV model monitoring, LLMOps, and security features. Fiddler AI helps businesses to build and deploy high-performing AI solutions at scale.

Veriti

Veriti is an AI-driven platform that proactively monitors and safely remediates exposures across the entire security stack, without disrupting the business. It helps organizations maximize their security posture while ensuring business uptime. Veriti offers solutions for safe remediation, MITRE ATT&CK®, healthcare, MSSPs, and manufacturing. The platform correlates exposures to misconfigurations, continuously assesses exposures, integrates with various security solutions, and prioritizes remediation based on business impact. Veriti is recognized for its role in exposure assessments and remediation, providing a consolidated security platform for businesses to neutralize threats before they happen.

1 - Open Source AI Tools

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

20 - OpenAI GPTs

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things financial cybersecurity.

AML/CFT Expert

Specializes in Anti-Money Laundering/Counter-Financing of Terrorism compliance and analysis.

Quality Assurance Advisor

Ensures product quality through systematic process monitoring and evaluation.

SkyNet - Global Conflict Analyst

Global conflict analyst that provides a "wartime update" on the worst global conflict at the moment.

Network Operations Advisor

Ensures efficient and effective network performance and security.