Best AI tools for: Monitor Cloud Performance

20 - AI tool Sites

Cloud Observability Middleware

The website provides Full-Stack Cloud Observability services with a focus on Middleware. It offers comprehensive monitoring and analysis tools to help businesses optimize their cloud infrastructure performance. The platform enables users to gain insights into their middleware applications, identify bottlenecks, and improve overall system efficiency.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Modal

Modal is a high-performance cloud platform designed for developers on AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Cloudhumans

The website en.cloudhumans.com currently returns a Cloudflare DNS error: its DNS records point to a prohibited IP address, which causes a conflict within Cloudflare's system. Users who encounter this error are advised to refer to the provided link for troubleshooting details. The site is hosted on the Cloudflare network, and the owner is instructed to log in and change the DNS A records to resolve the issue. The page also shows the Cloudflare Ray ID and the user's IP address, along with a note on the performance and security features provided by Cloudflare.

Jyotax.ai

Jyotax.ai is an AI-powered tax solution that revolutionizes tax compliance by simplifying the tax process with advanced AI solutions. It offers comprehensive bookkeeping, payroll processing, worldwide tax returns and filing automation, profit recovery, contract compliance, and financial modeling and budgeting services. The platform ensures accurate reporting, real-time compliance monitoring, global tax solutions, customizable tax tools, and seamless data integration. Jyotax.ai optimizes tax workflows, ensures compliance with precise AI tax calculations, and simplifies global tax operations through innovative AI solutions.

Arize AI

Arize AI is an AI observability tool designed to monitor and troubleshoot AI models in production. It provides configurable and sophisticated observability features to ensure the performance and reliability of next-gen AI stacks. With a focus on ML observability, Arize offers automated setup, a simple API, and a lightweight package for tracking model performance over time. The tool is trusted by top companies for its ability to surface insights, simplify root-cause analysis, and provide a dedicated customer success manager. Arize is battle-hardened for real-world scenarios, offering performance, scalability, security, and compliance with industry standards like SOC 2 Type II and HIPAA.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Pulse

Pulse is an expert support tool for big data stacks, specifically focusing on ensuring the stability and performance of Elasticsearch and OpenSearch clusters. It offers early issue detection, AI-generated insights, and expert support to optimize performance, reduce costs, and align with user needs. Pulse leverages AI for issue detection and root-cause analysis, complemented by real human expertise, making it a strategic ally in search cluster management.

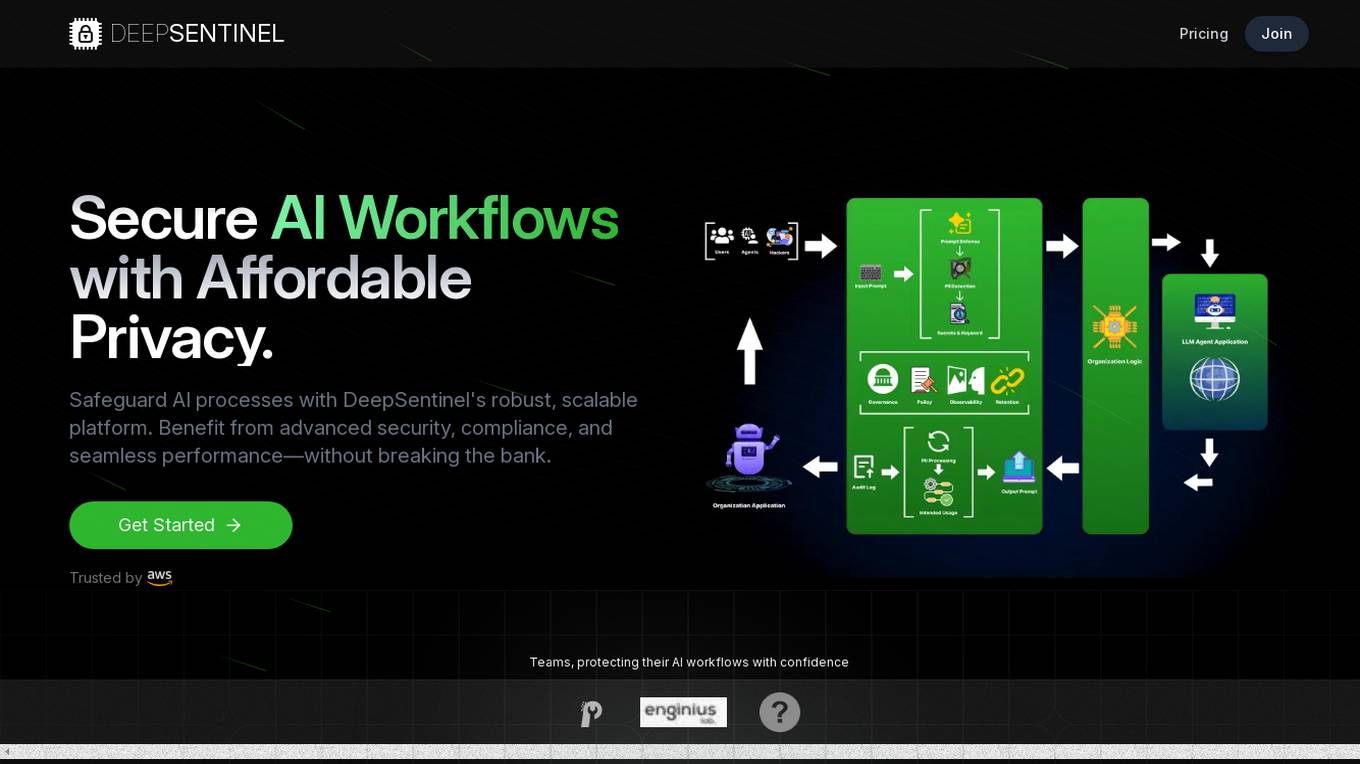

DeepSentinel

DeepSentinel is an AI application that provides secure AI workflows with affordable deep data privacy. It offers a robust, scalable platform for safeguarding AI processes with advanced security, compliance, and seamless performance. The platform allows users to track, protect, and control their AI workflows, ensuring secure and efficient operations. DeepSentinel also provides real-time threat monitoring, granular control, and global trust for securing sensitive data and ensuring compliance with international regulations.

DevSecCops

DevSecCops is an AI-driven automation platform designed to revolutionize DevSecOps processes. The platform offers solutions for cloud optimization, machine learning operations, data engineering, application modernization, infrastructure monitoring, security, compliance, and more. With features like one-click infrastructure security scan, AI engine security fixes, compliance readiness using AI engine, and observability, DevSecCops aims to enhance developer productivity, reduce cloud costs, and ensure secure and compliant infrastructure management. The platform leverages AI technology to identify and resolve security issues swiftly, optimize AI workflows, and provide cost-saving techniques for cloud architecture.

Picterra

Picterra is a geospatial AI platform that offers reliable solutions for sustainability, compliance, monitoring, and verification. It provides an all-in-one plot monitoring system, professional services, and interactive tours. Users can build custom AI models to detect objects, changes, or patterns using various geospatial imagery data. Picterra aims to revolutionize geospatial analysis with its category-leading AI technology, enabling users to solve challenges swiftly, collaborate more effectively, and scale further.

Netify

Netify provides network intelligence and visibility. Its solution stack starts with a Deep Packet Inspection (DPI) engine that passively collects data on the local network. This lightweight engine identifies applications, protocols, hostnames, encryption ciphers, and other network attributes. The software can be integrated into network devices for traffic identification, firewalling, QoS, and cybersecurity. Netify's Informatics engine collects data from local DPI engines and uses the power of a public or private cloud to transform it into network intelligence. From device identification to cybersecurity risk detection, Informatics provides a way to take a proactive approach to manage network threats, bottlenecks, and usage. Lastly, Netify's Data Feeds provide data to help vendors understand how applications behave on the Internet.

deepset

deepset is an AI platform that offers enterprise-level products and solutions for AI teams. It provides deepset Cloud, a platform built with Haystack, enabling fast and accurate prototyping, building, and launching of advanced AI applications. The platform streamlines the AI application development lifecycle, offering processes, tools, and expertise to move from prototype to production efficiently. With deepset Cloud, users can optimize solution accuracy, performance, and cost, and deploy AI applications at any scale with one click. The platform also allows users to explore new models and configurations without limits, extending their team with access to world-class AI engineers for guidance and support.

HostAI

HostAI is a platform that allows users to host their artificial intelligence models and applications with ease. It provides a user-friendly interface for managing and deploying AI projects, eliminating the need for complex server setups. With HostAI, users can seamlessly run their AI algorithms and applications in a secure and efficient environment. The platform supports various AI frameworks and libraries, making it versatile for different AI projects. HostAI simplifies the process of AI deployment, enabling users to focus on developing and improving their AI models.

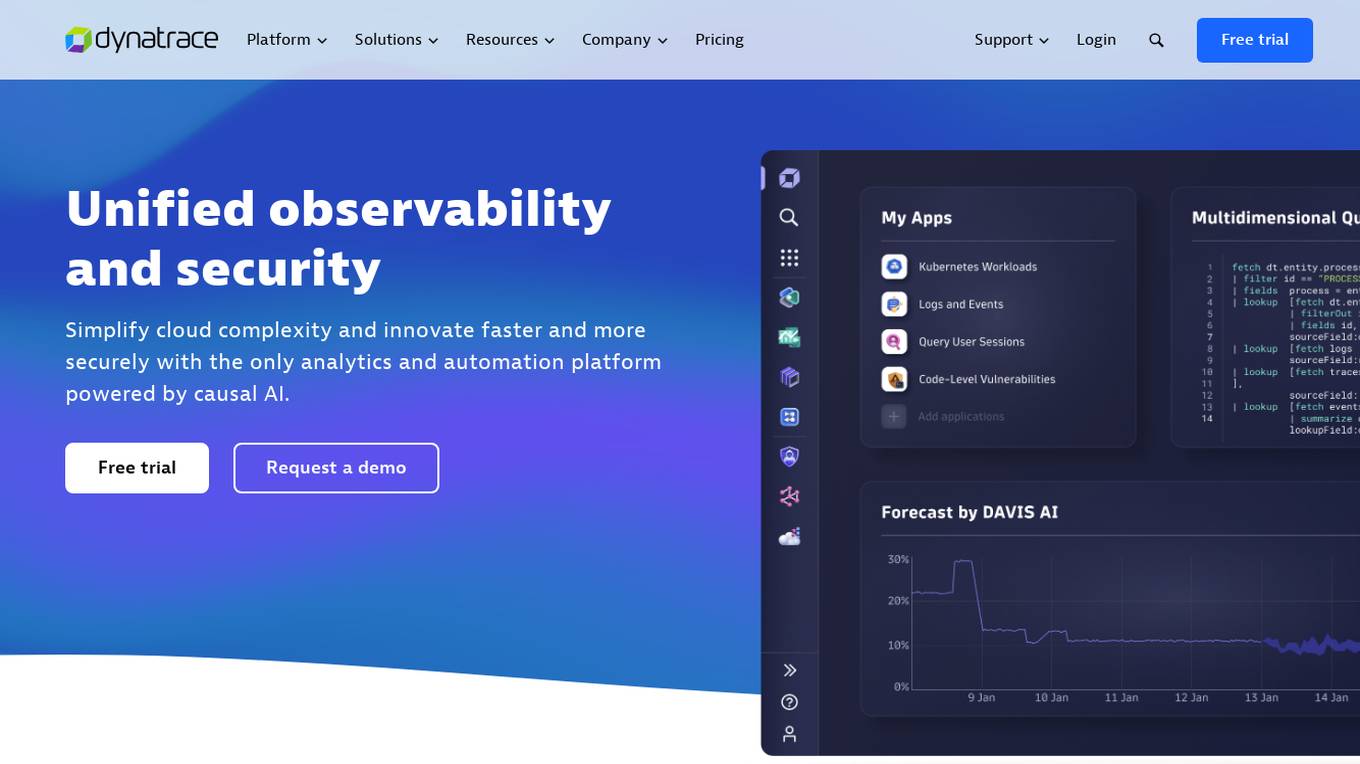

Dynatrace

Dynatrace is a modern cloud platform that offers unified observability and security solutions to simplify cloud complexity and drive innovation. Powered by causal AI, Dynatrace provides analytics and automation capabilities to help businesses monitor and secure their full stack, solve digital challenges, and make better business decisions in real-time. Trusted by thousands of global brands, Dynatrace empowers teams to deliver flawless digital experiences, drive intelligent cloud ecosystem automations, and solve any use-case with custom solutions.

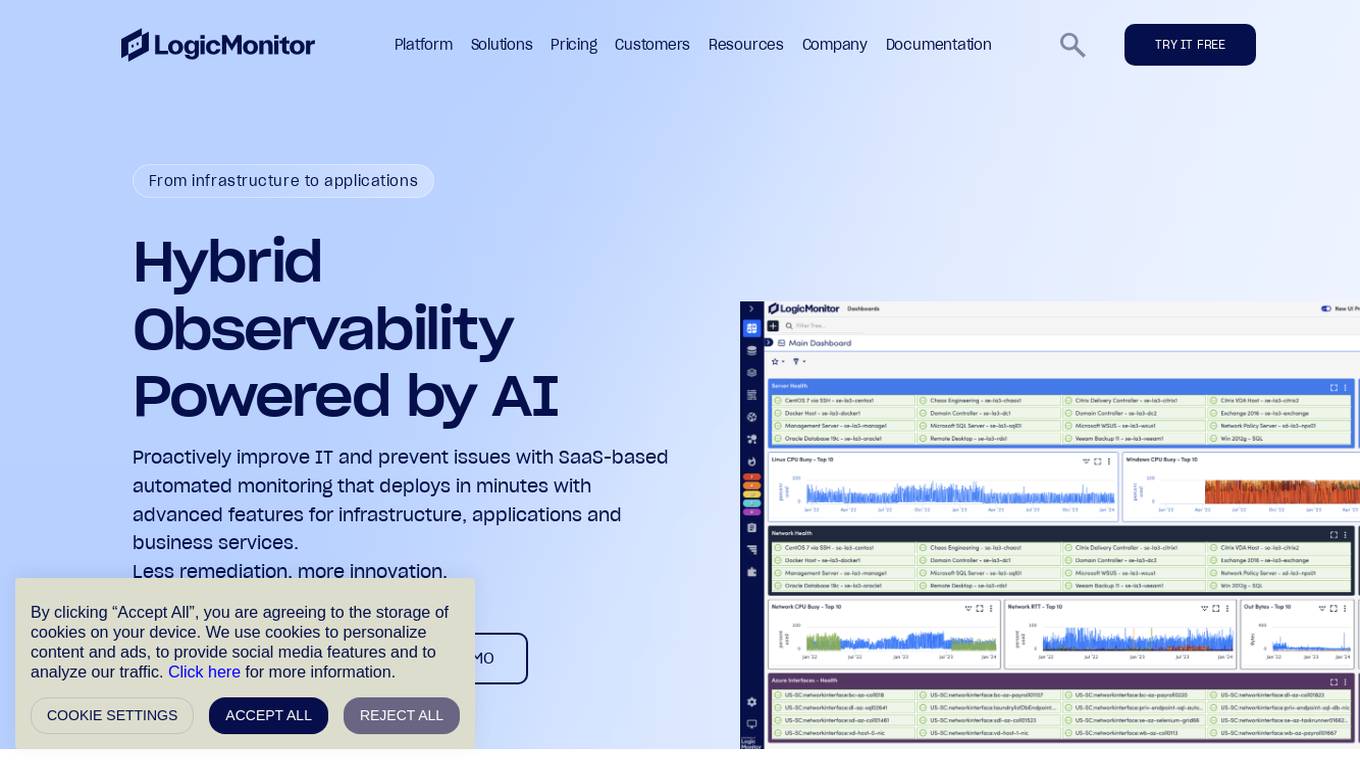

LogicMonitor

LogicMonitor is a cloud-based infrastructure monitoring platform that provides real-time insights and automation for comprehensive, seamless monitoring with an agentless architecture. It offers a wide range of features including infrastructure monitoring, network monitoring, server monitoring, remote monitoring, virtual machine monitoring, SD-WAN monitoring, database monitoring, storage monitoring, configuration monitoring, cloud monitoring, container monitoring, AWS monitoring, GCP monitoring, Azure monitoring, digital experience SaaS monitoring, website monitoring, APM, AIOps, Dexda integrations, security dashboards, and platform demo logs. LogicMonitor's AI-driven hybrid observability helps organizations simplify complex IT ecosystems, accelerate incident response, and thrive in the digital landscape.

Heroku

Heroku is a cloud platform that lets companies build, deliver, monitor, and scale apps. It simplifies the process of deploying applications by providing a seamless experience for developers. With Heroku, developers can focus on writing code without worrying about infrastructure management. The platform supports various programming languages and frameworks, making it versatile for different types of applications.

Heroku

Heroku is a cloud platform that enables developers to build, deliver, monitor, and scale applications quickly and easily. It supports multiple programming languages and provides a seamless deployment process. With Heroku, developers can focus on coding without worrying about infrastructure management.

Cerebrium

Cerebrium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly and efficiently. With a focus on speed, performance, and cost optimization, Cerebrium offers a range of features and tools to simplify the development and deployment of AI projects. The platform ensures high reliability, security, and compliance while providing real-time logging, cost tracking, and observability tools. Cerebrium also offers GPU variety and effortless autoscaling to meet the diverse needs of developers and businesses.

CareCloud

CareCloud is a cloud-based and AI-powered healthcare solution that offers a comprehensive suite of tools to enhance financial performance, patient care, and operational efficiency for healthcare providers. The platform includes features such as Revenue Cycle Management, Electronic Health Records, Practice Management, Patient Experience Management, and Telehealth. CareCloud leverages AI technology to automate documentation, streamline workflows, optimize collections, and provide intelligent analytics for smarter healthcare management.

0 - Open Source AI Tools

20 - OpenAI GPTs

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

DevOps Mentor

A formal, expert guide for DevOps pros advancing their skills. Your DevOps GYM

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!

Cloud Services Management Advisor

Manages and optimizes an organization's cloud resources and services.

AzurePilot | Steer & Streamline Your Cloud Costs🌐

Specialized advisor on Azure costs and optimizations.

Azure Mentor

Expert in Azure's latest services, including Application Insights, API Management, and more.