Best AI tools to manage concurrency

20 - AI Tool Sites

Kinaxis

Kinaxis is an AI-powered platform that offers end-to-end supply chain orchestration solutions for businesses. It helps companies respond with agility by coordinating all parts of the supply chain using artificial intelligence. With features like transparency, collaboration, adaptability, and intelligence, Kinaxis enables users to make faster, smarter, and more confident decisions. The platform offers solutions for supply chain control tower, S&OP, demand planning, inventory management, scheduling, order management, transportation, and returns management. Kinaxis is designed to streamline operations, improve efficiency, and drive sustainable supply chain practices.

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and gen AI. It offers fast hybrid search (vector plus full-text), fast-scaling integrations, and a free tier. According to SingleStore, it can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.

Concurrence.ai

Concurrence.ai is an AI-powered platform designed to help users manage their online communities effectively. It offers advanced features such as spam and ad filtering, custom filters, and multi-language support. With 24/7 uptime and unlimited message support, community owners can ensure a seamless experience for their users. The platform also provides affordable usage-based payment plans and regular updates to enhance user experience. Concurrence.ai aims to simplify community management tasks and elevate the overall engagement within online communities.

OpenResty

The website is currently displaying a '403 Forbidden' error message, which means the server understood the request but is refusing to fulfill it. This error is often caused by insufficient permissions or a server-side misconfiguration. The 'openresty' shown in the message refers to OpenResty, a web platform based on NGINX and LuaJIT that is commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and to provide a scalable, efficient web server.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means the server understood the request but is refusing to fulfill it. This error is often caused by insufficient permissions or a server-side misconfiguration. The 'openresty' shown in the error message refers to OpenResty, a web platform based on NGINX and LuaJIT that is commonly used for building high-performance web applications. It is designed to handle a large number of concurrent connections and to provide advanced features for web development.

Botonomous

Botonomous is an AI-powered platform that helps businesses automate their workflows. With Botonomous, you can create advanced automations for any domain, check your flows for potential errors before running them, run multiple nodes concurrently instead of waiting for each previous step to finish, build complex, non-linear flows without writing code, and design human interactions that take part in your automations. Botonomous also offers webhooks, scheduled triggers, secure secret management, and a developer community.

Retell AI

Retell AI is a voice agent platform that enables users to build, test, deploy, and monitor AI voice agents at scale. It offers features such as call transfer, appointment booking, IVR navigation, batch calling, and post-call analysis. Its advantages include verified phone numbers, branded caller ID, custom analysis, and published case studies. Disadvantages include the need for initial setup by an engineer, ongoing maintenance, and potential limits on concurrent calls. The application suits a range of industries and use cases, with multilingual support and compliance with industry standards.

SocialBee

SocialBee is an AI-powered social media management tool that helps businesses and individuals manage their social media accounts efficiently. It offers a range of features, including content creation, scheduling, analytics, and collaboration, to help users plan, create, and publish engaging social media content. SocialBee also provides insights into social media performance, allowing users to track their progress and make data-driven decisions.

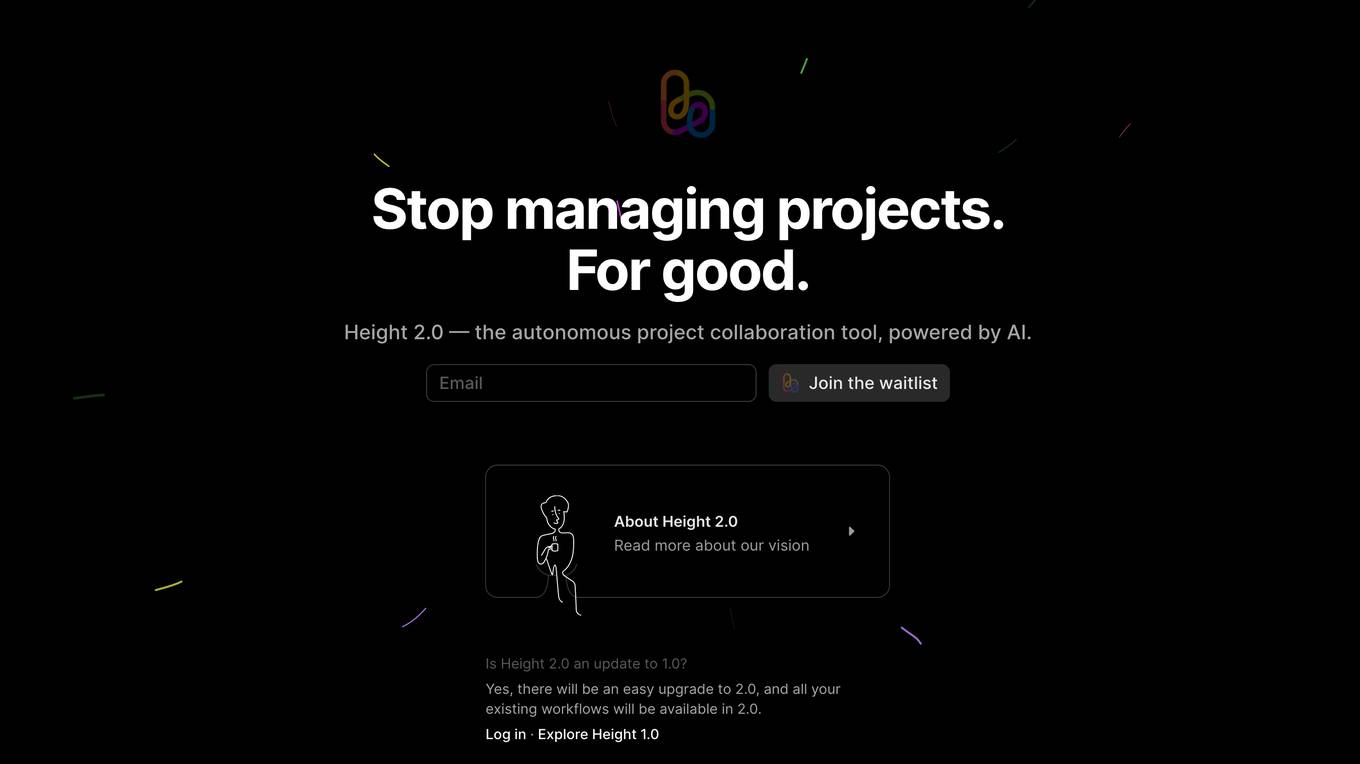

Height

Height is an autonomous project management tool for teams that design and build projects. It automates manual work such as backlog upkeep, spec updates, and bug triage, leaving more room for collaborative work. With project intelligence and collaboration features, Height offers a customizable workspace with autonomous capabilities that streamline project management. Users can discuss projects in context and use an AI assistant to write better stories. The tool aims to change project management by offloading routine tasks to an intelligent system.

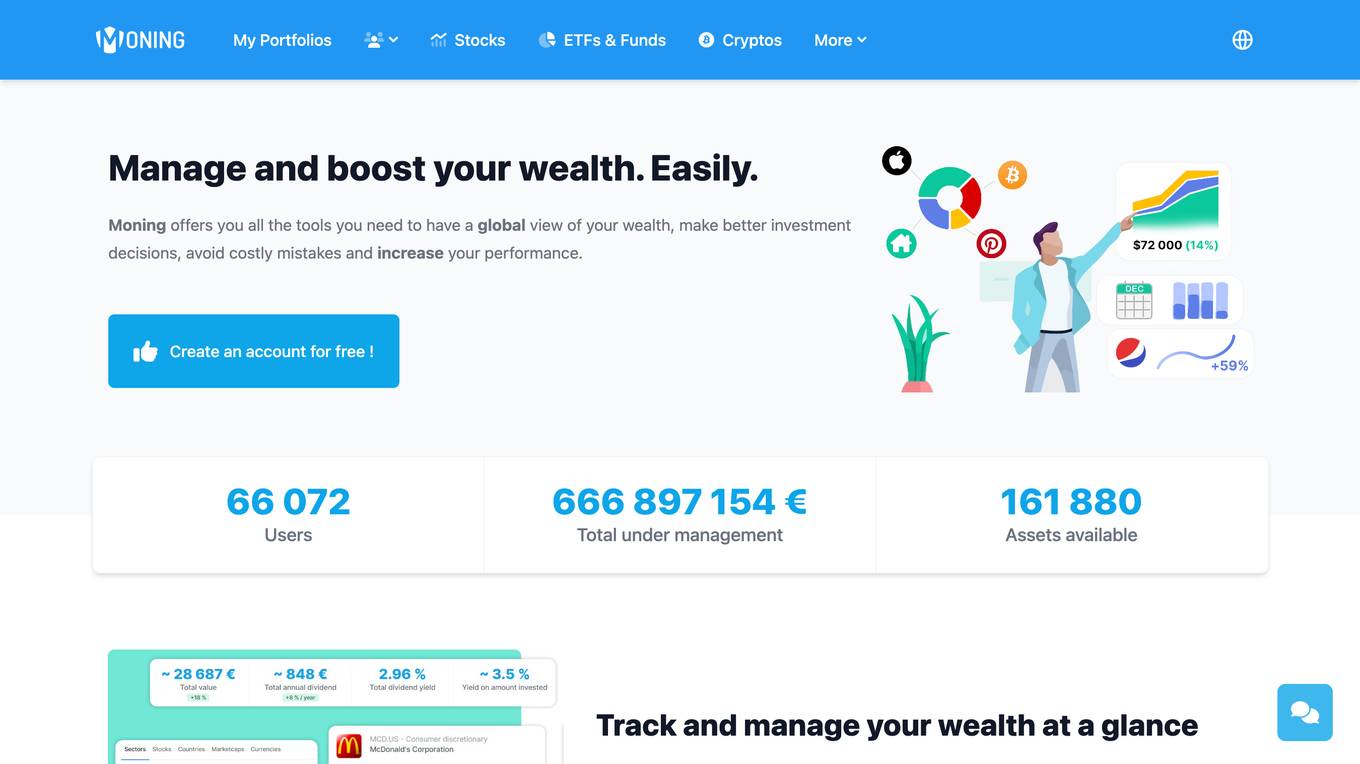

Moning

Moning is a platform designed to help users manage and grow their wealth easily. It provides tools for getting a global view of wealth, making better investment decisions, avoiding costly mistakes, and improving performance. With features like AI Analysis, a Dividends calendar, and Dividend and Growth Safety Scores, Moning combines human and artificial intelligence to improve investment knowledge and decision-making. Users can track and manage their wealth through a comprehensive dashboard, access detailed information on stocks, ETFs, and cryptocurrencies, and use quick screeners to find the best investment opportunities.

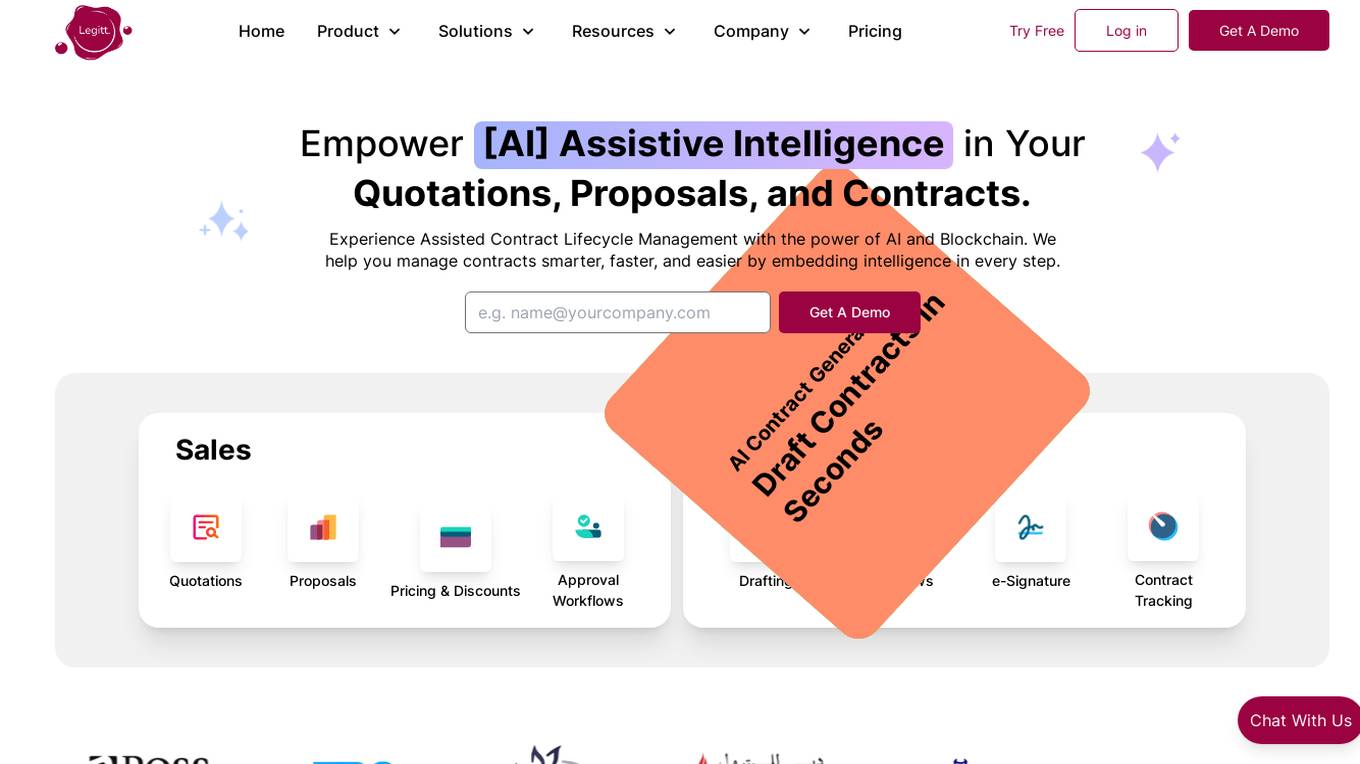

Legitt AI

Legitt AI is an AI-powered Contract Lifecycle Management platform that offers a comprehensive solution for managing contracts at scale. It combines automation and intelligence to revolutionize contract management, ensuring efficiency, accuracy, and compliance with legal standards. The platform streamlines contract creation, signing, tracking, and management processes by embedding intelligence in every step. Legitt AI enhances contract review processes, contract tracking, and contract intelligence at scale, providing users with insights, recommendations, and automated workflows. With robust security measures, scalable infrastructure, and integrations with popular business tools, Legitt AI empowers businesses to manage contracts with precision and efficiency.

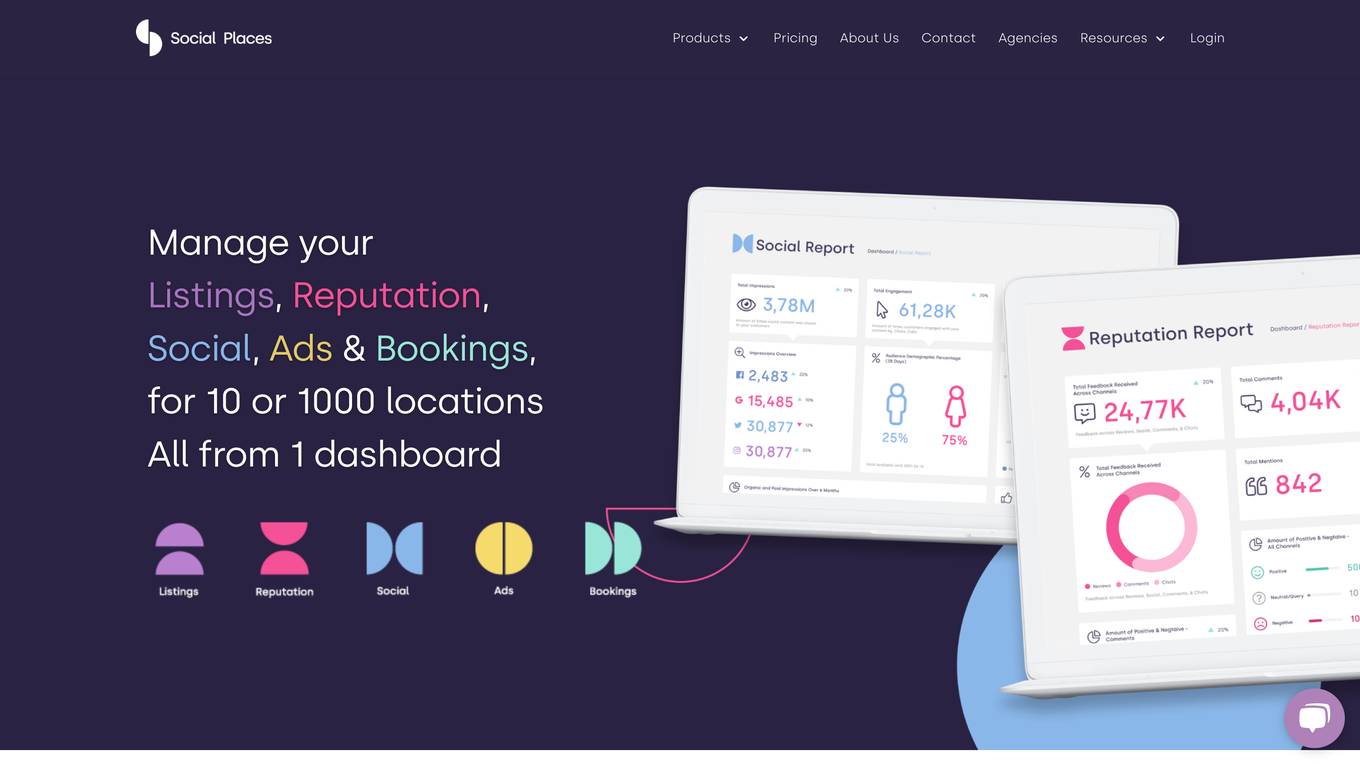

Social Places

Social Places is a leading franchise marketing agency that provides a suite of tools to help businesses with multiple locations manage their online presence. The platform includes tools for managing listings, reputation, social media, ads, and bookings. Social Places also offers a conversational AI chatbot and a custom feedback form builder.

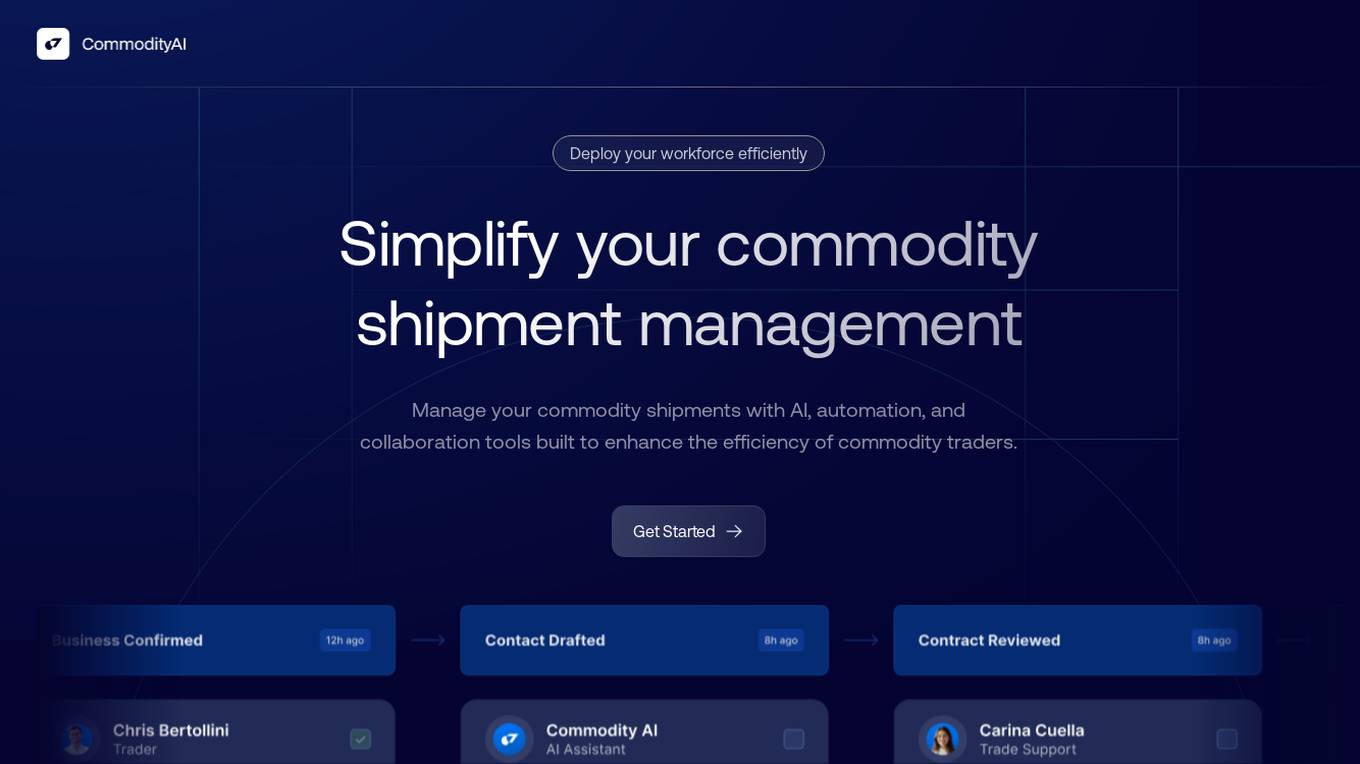

CommodityAI

CommodityAI is a web-based platform that uses AI, automation, and collaboration tools to help businesses manage their commodity shipments and supply chains more efficiently. The platform offers a range of features, including shipment management automation, intelligent document processing, stakeholder collaboration, and supply-chain automation. CommodityAI can help businesses improve data accuracy, eliminate manual processes, and streamline communication and collaboration. The platform is designed for the commodities industry and offers commodity-specific automations, ERP integration, and AI-powered insights.

Gideon Legal

Gideon Legal is an automated intake and document automation software designed to help law firms manage client journeys from contact to contract and intake to eSign. It uses bots, built-in integrations, and no-code technology to maximize revenue and streamline operations by automating client workflows.

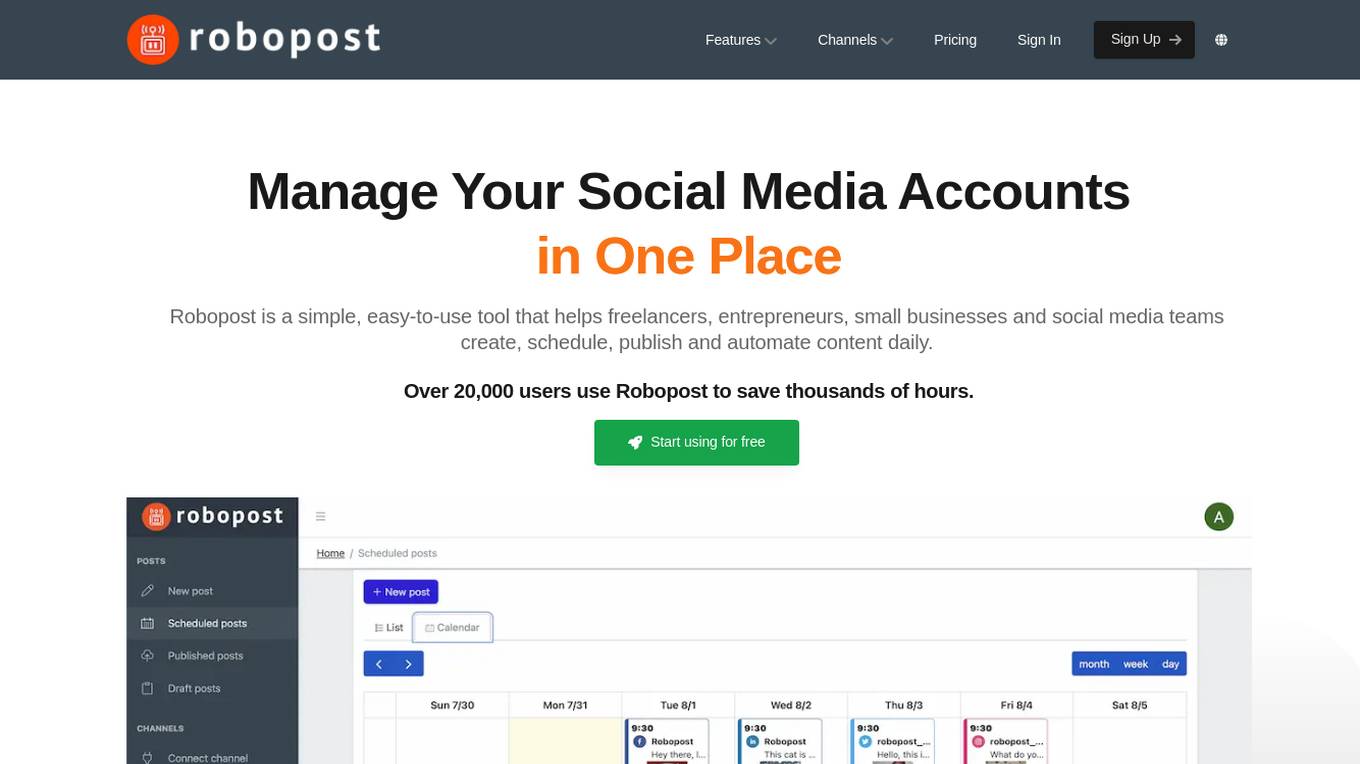

Robopost

Robopost is a social media scheduling and automation tool that helps freelancers, entrepreneurs, small businesses, and social media teams create, schedule, publish, and automate content daily. With over 20,000 users, Robopost offers social media post scheduling, AI-powered content creation, team management, scheduling for multi-image and video posts, AI-generated captions, automations, a calendar view, AI-generated post ideas, organized post collections, and support for numerous social media platforms.

ideas-generator.com

The ideas-generator.com domain is registered through Cloudflare's domain registrar service, and the site currently shows a Cloudflare registrar page rather than a tool. The page directs the domain owner to the Cloudflare Dashboard, where they can access their account and manage or renew the domain registration.

Waitlyst.co

Waitlyst.co is a website that is still under development and has not yet launched. The domain is registered at Dynadot.com and is expected to go live in the future. Its purpose is not stated, but the name suggests it will let users create and manage waitlists or reservations for events, services, or products.

Sequential

Sequential is a work management platform that uses AI to help teams deliver more work, faster. It is inspired by the best practices of history's most effective organizations and is powered by the latest AI models.

Servcy

Servcy is an all-in-one business management tool that helps you consolidate data from all your tools in one place. With Servcy, you can manage your communications, tasks, documents, payments, and time tracking all in one place. Servcy also uses AI to help you prioritize and respond to the most important messages, organize and manage your tasks, and get answers from your documents.

SocialBee

SocialBee is an AI-powered social media management tool that helps businesses and individuals manage their social media accounts efficiently. It offers a range of features, including content creation, scheduling, analytics, and collaboration, to help users plan, create, and track their social media campaigns. SocialBee also provides access to a team of social media experts who can help users with their social media strategy and execution.

1 - Open Source AI Tools

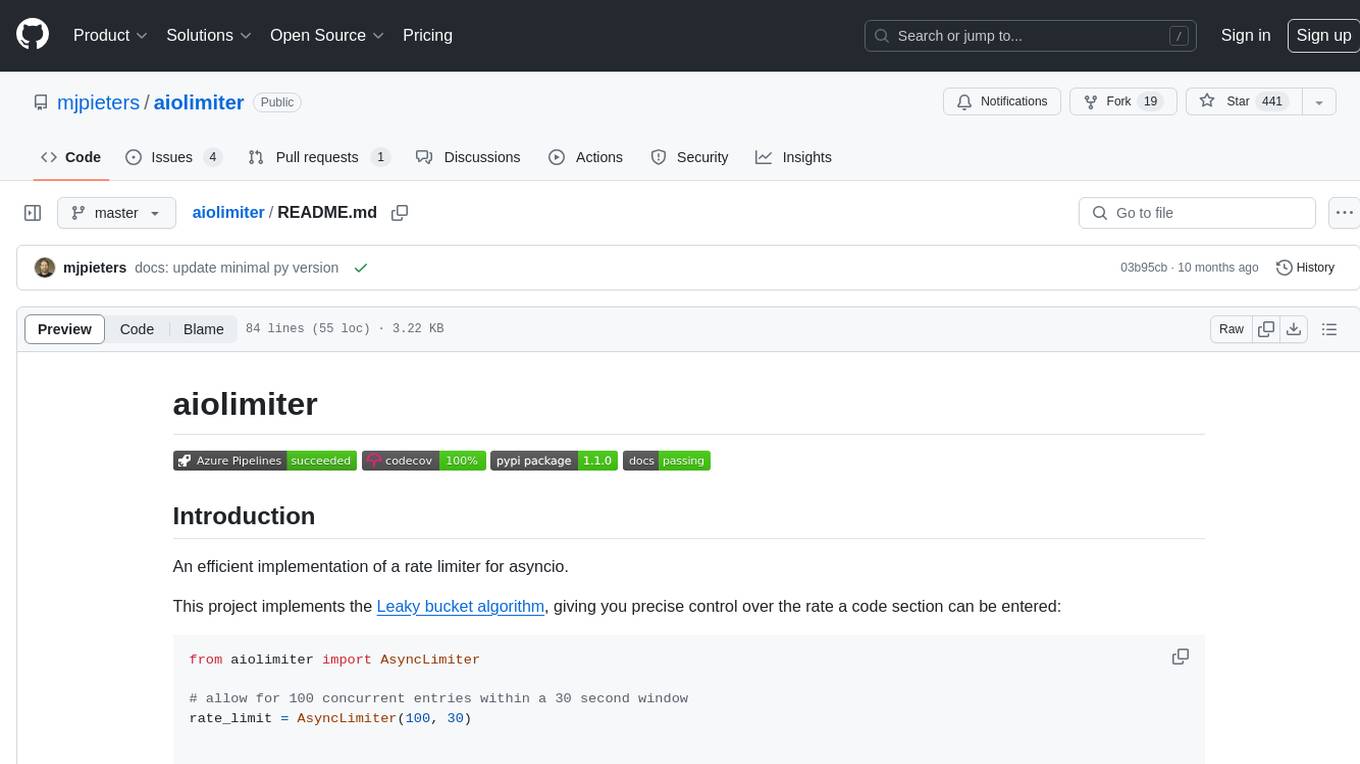

aiolimiter

An efficient implementation of a rate limiter for asyncio using the leaky bucket algorithm, providing precise control over the rate at which a section of code can be entered. It caps how many times that section can be entered within a specified time window, so the code runs at most a set number of times per period.
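The leaky-bucket idea behind aiolimiter can be sketched with the standard library alone. aiolimiter itself is used through its `AsyncLimiter(max_rate, time_period)` class as an async context manager (`async with limiter:`); the class below, `LeakyBucketLimiter`, is a hypothetical illustration with a similar interface shape, not aiolimiter's actual source. Each entry adds one unit to a bucket of capacity `max_rate`, and the bucket drains continuously at `max_rate / time_period` units per second, so a caller waits whenever the bucket is full.

```python
import asyncio
import time


class LeakyBucketLimiter:
    """Hypothetical leaky-bucket rate limiter (illustration, not aiolimiter's code).

    Allows at most `max_rate` entries per `time_period` seconds. Each entry adds
    one unit to the bucket; the bucket drains continuously at
    max_rate / time_period units per second.
    """

    def __init__(self, max_rate: float, time_period: float = 60.0) -> None:
        self.max_rate = max_rate
        self.time_period = time_period
        self._drain_per_sec = max_rate / time_period
        self._level = 0.0
        self._last_check = time.monotonic()

    def _leak(self) -> None:
        # Drain the bucket in proportion to the time elapsed since the last check.
        now = time.monotonic()
        drained = (now - self._last_check) * self._drain_per_sec
        self._level = max(0.0, self._level - drained)
        self._last_check = now

    async def acquire(self) -> None:
        # Block until the bucket has room for one more unit, then add it.
        while True:
            self._leak()
            if self._level + 1 <= self.max_rate:
                self._level += 1
                return
            # Sleep just long enough for the excess to drain away, then re-check.
            excess = self._level + 1 - self.max_rate
            await asyncio.sleep(excess / self._drain_per_sec)

    async def __aenter__(self) -> None:
        await self.acquire()

    async def __aexit__(self, *exc_info) -> None:
        # Nothing to release: capacity returns as the bucket drains over time.
        return None


async def main() -> list[float]:
    # Allow 5 entries per second; launch 10 tasks at once.
    limiter = LeakyBucketLimiter(max_rate=5, time_period=1.0)
    start = time.monotonic()
    stamps: list[float] = []

    async def task() -> None:
        async with limiter:
            # Record when this task was admitted, relative to the start.
            stamps.append(time.monotonic() - start)

    await asyncio.gather(*(task() for _ in range(10)))
    return stamps


stamps = asyncio.run(main())
print([round(s, 1) for s in stamps])
```

With `max_rate=5` and `time_period=1.0`, the first five tasks enter immediately and the remaining five are admitted roughly every 0.2 seconds, so the last entry lands about one second after the start. Because the bucket drains continuously rather than resetting at fixed window boundaries, bursts are capped at `max_rate` and the long-run rate settles at `max_rate / time_period`.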

20 - OpenAI GPTs

FODMAPs Dietician

Dietician that helps people with IBS manage their symptoms by managing their FODMAP intake. FODMAP stands for fermentable oligosaccharides, disaccharides, monosaccharides and polyols. These are the chemical names of 5 naturally occurring sugars that are not well absorbed by your small intestine.

Cognitive Behavioral Coach

Provides cognitive-behavioral and emotional therapy guidance, helping users understand and manage their thoughts, behaviors, and emotions.

1ACulma - Management Coach

Cross-cultural management. Useful for those who relocate to another country or manage cross-cultural teams.

Finance Butler (ファイナンス・バトラー)

I manage finances securely with encryption and user authentication.

GroceriesGPT

I manage your grocery lists to help you stay organized. *1/ Tell me what to add to a list. 2/ Ask me to add all ingredients for a recipe. 3/ Upload a receipt to remove items from your lists. 4/ Add an item by simply uploading a picture. 5/ Ask me which items I recommend adding to your lists.*

Family Legacy Assistant

Helps users manage and preserve family heirlooms with empathy and practical advice.

AI Home Doctor (Guided Care)

Give me your symptoms and I will provide instructions for how to manage your illness.

MixerBox ChatGSlide

Your AI Google Slides assistant! Effortlessly locate, manage, and summarize your presentations!

Herbal Healer: The Art of Botany

A simulation game where players learn to grow medicinal plants, craft remedies, and manage a herbal healing garden. Another AI Tiny Game by Dave Lalande.

ZenFin

💡 Tips and guidance to buy, sell, and manage Bitcoin, Ether, and more for transactions under $50.

DivineFeed

As the Divine Apple II, I defy Moore's Law in this darkly humorous game where you, as God, manage global prayers, navigate celestial politics, and accept that omnipotence can't please everyone.

Couples Financial Planner

Aids couples in managing joint finances, budgeting for future goals, and navigating financial challenges together.

God Simulator

A God Simulator GPT, facilitating world creation and managing random events.