Best AI tools for managing command history

20 - AI tool Sites

Fluid

Fluid is a private AI assistant for Mac users with Apple Silicon and macOS 14 or later. It works offline and is powered by Meta's Llama 3 model. Fluid keeps all chats and data on the user's Mac, so sensitive information is never sent to third parties. The application features voice control, one-click installation, easy access, security by design, auto-updates, history mode, web search capabilities, context awareness, and memory storage. Users can interact with Fluid by typing or by voice.

Ultra AI

Ultra AI is an all-in-one AI command center for products, offering features such as multi-provider AI gateway, prompts manager, semantic caching, logs & analytics, model fallbacks, and rate limiting. It is designed to help users efficiently manage and utilize AI capabilities in their products. The platform is privacy-focused, fast, and provides quick support, making it a valuable tool for businesses looking to leverage AI technology.

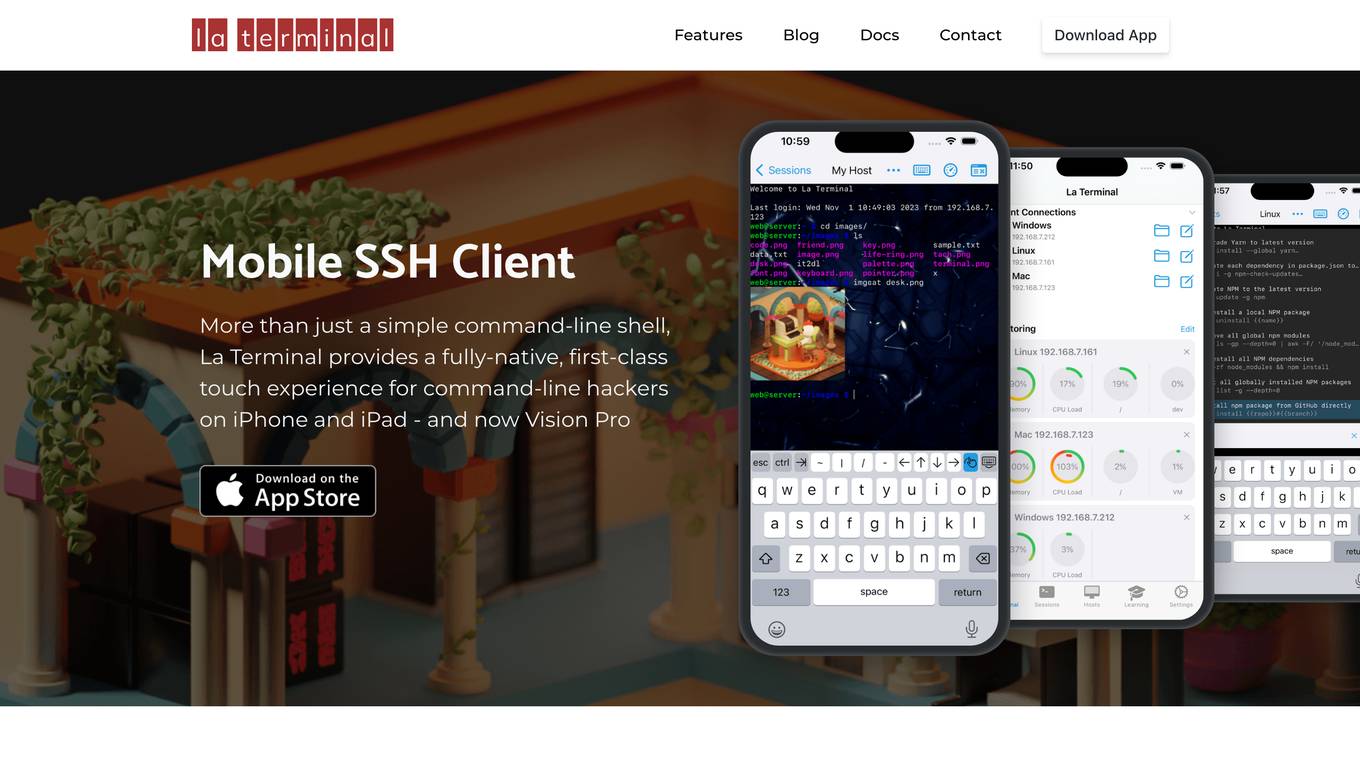

La Terminal

La Terminal is a fully-native, first-class touch experience for command-line hackers on iPhone and iPad. It provides a secure, open-source, and feature-rich environment for managing remote servers, automating tasks, and exploring the command line. With AI assistance from El Copiloto, La Terminal makes it easy to find and execute commands, even for beginners.

Swift

Swift is an AI-powered voice assistant that utilizes cutting-edge technologies such as Groq, Cartesia, VAD, and Vercel to provide users with a fast and efficient voice interaction experience. With Swift, users can perform various tasks using voice commands, making it a versatile tool for hands-free operation in different settings. The application aims to streamline daily tasks and enhance user productivity through seamless voice recognition capabilities.

CommandAI

CommandAI is a powerful command line utility tool that leverages the capabilities of artificial intelligence to enhance user experience and productivity. It allows users to interact with the command line interface using natural language commands, making it easier for both beginners and experienced users to perform complex tasks efficiently. With CommandAI, users can streamline their workflow, automate repetitive tasks, and access advanced features through simple text-based interactions. The tool is designed to simplify the command line experience and provide intelligent assistance to users in executing commands and managing their system effectively.

Aurora Terminal Agent

Aurora Terminal Agent is an AI-powered terminal assistant designed to enhance productivity and efficiency in terminal operations. The tool leverages artificial intelligence to provide real-time insights, automate tasks, and streamline workflows for terminal operators. With its intuitive interface and advanced algorithms, Aurora Terminal Agent revolutionizes the way terminals manage their operations, leading to improved performance and cost savings.

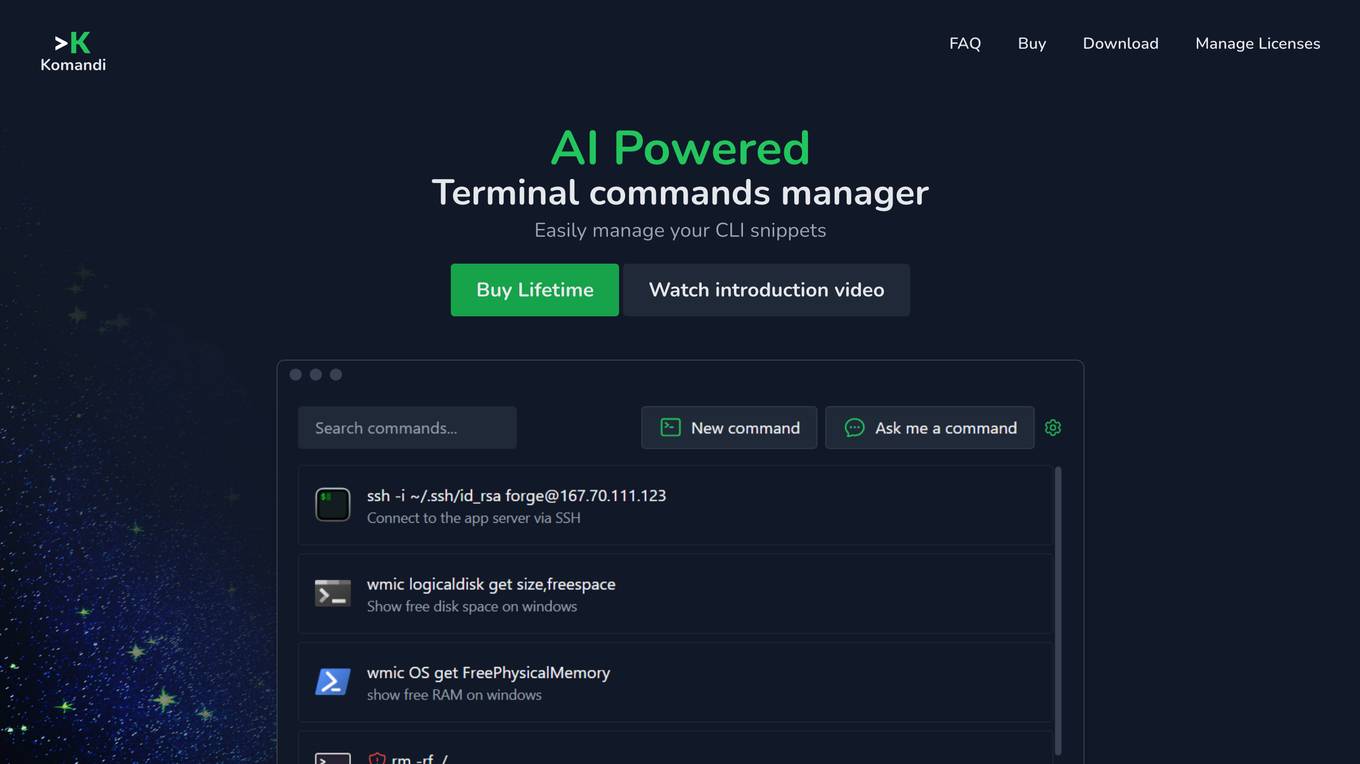

Komandi

Komandi is an AI-powered CLI/Terminal commands manager that allows users to easily manage their CLI snippets. It enables users to generate terminal commands from natural language prompts using AI, insert, favorite, copy, and execute commands, detect potentially dangerous commands, and more. Komandi is designed for developers and system administrators to streamline their command-line operations and enhance productivity.
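As a rough illustration of what detecting potentially dangerous commands can look like, here is a minimal Python sketch. It is not Komandi's actual implementation: the pattern list, the labels, and the `find_risks` helper are all hypothetical, and a real tool would likely need a far richer rule set than a handful of regular expressions.

```python
import re

# Hypothetical rules for illustration only; not taken from Komandi.
DANGEROUS_PATTERNS = [
    # rm with a recursive flag aimed directly at / or ~
    (re.compile(r"\brm\s+-\w*r\w*\s+(/|~)(\s|$)"), "recursive delete of root or home"),
    # dd writing straight to a device node
    (re.compile(r"\bdd\b.*\bof=/dev/"), "raw write to a device"),
    # creating a filesystem (destroys existing data on the target)
    (re.compile(r"\bmkfs(\.\w+)?\b"), "filesystem creation"),
    # downloading a script and piping it into a shell
    (re.compile(r"\b(curl|wget)\b.*\|\s*(sudo\s+)?(ba)?sh\b"), "piping a download into a shell"),
]


def find_risks(command: str) -> list[str]:
    """Return the labels of every hypothetical rule that matches the command."""
    return [label for pattern, label in DANGEROUS_PATTERNS if pattern.search(command)]


if __name__ == "__main__":
    for cmd in ["ls -la", "rm -rf /", "curl https://example.com/install.sh | sh"]:
        risks = find_risks(cmd)
        print(f"{cmd!r}: {', '.join(risks) if risks else 'no known risks'}")
```

A check like this can only flag commands for review before they run; it cannot prove that a command is safe.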

AiPlus

AiPlus is an AI tool designed to serve as a cost-efficient model gateway. It offers users a platform to access and utilize various AI models for their projects and tasks. With AiPlus, users can easily integrate AI capabilities into their applications without the need for extensive development or resources. The tool aims to streamline the process of leveraging AI technology, making it accessible to a wider audience.

Spoke.ai

Spoke.ai is an AI tool designed to enhance communication and streamline workflows. The platform aims to help users communicate more effectively and build projects faster. Spoke.ai has joined Salesforce to support Slack AI. The tool offers features to improve collaboration and productivity for teams and individuals.

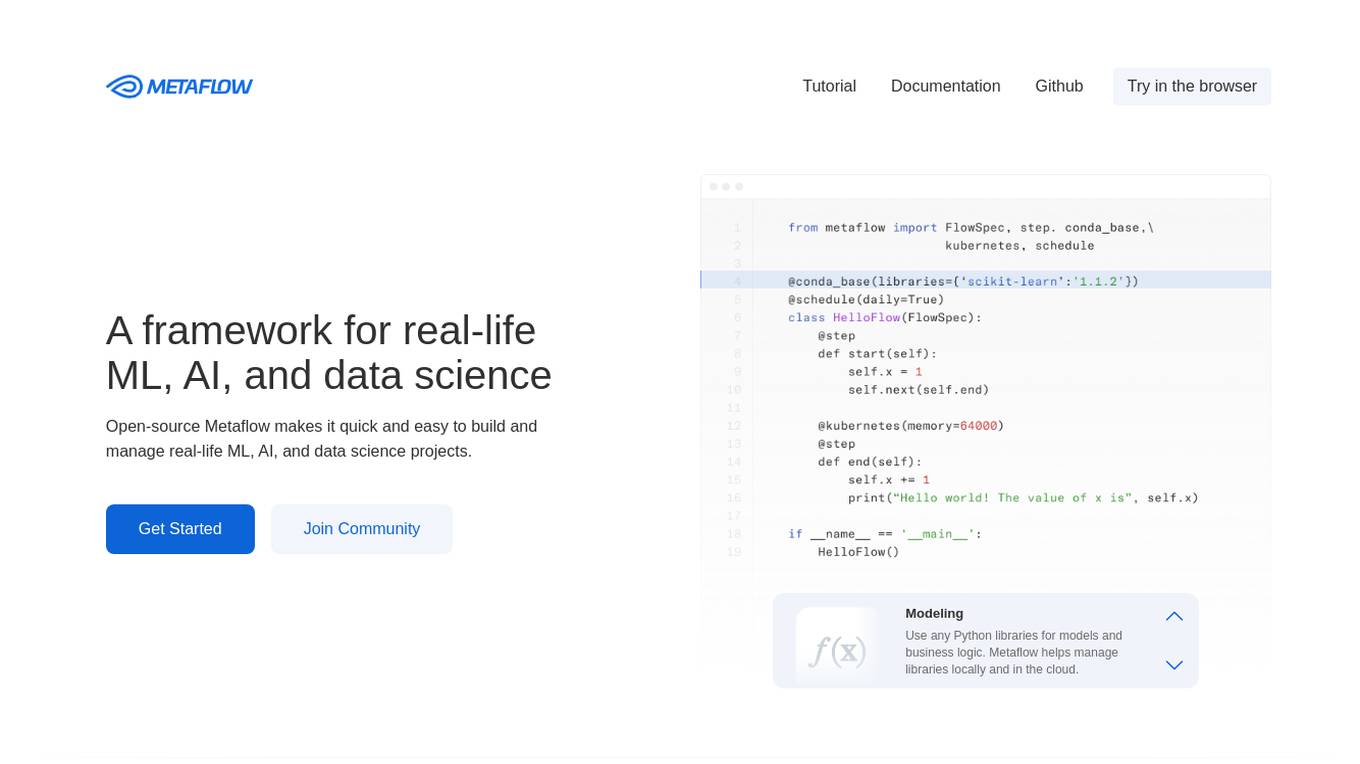

Metaflow

Metaflow is an open-source framework for building and managing real-life ML, AI, and data science projects. It makes it easy to use any Python libraries for models and business logic, deploy workflows to production with a single command, track and store variables inside the flow automatically for easy experiment tracking and debugging, and create robust workflows in plain Python. Metaflow is used by hundreds of companies, including Netflix, 23andMe, and Realtor.com.

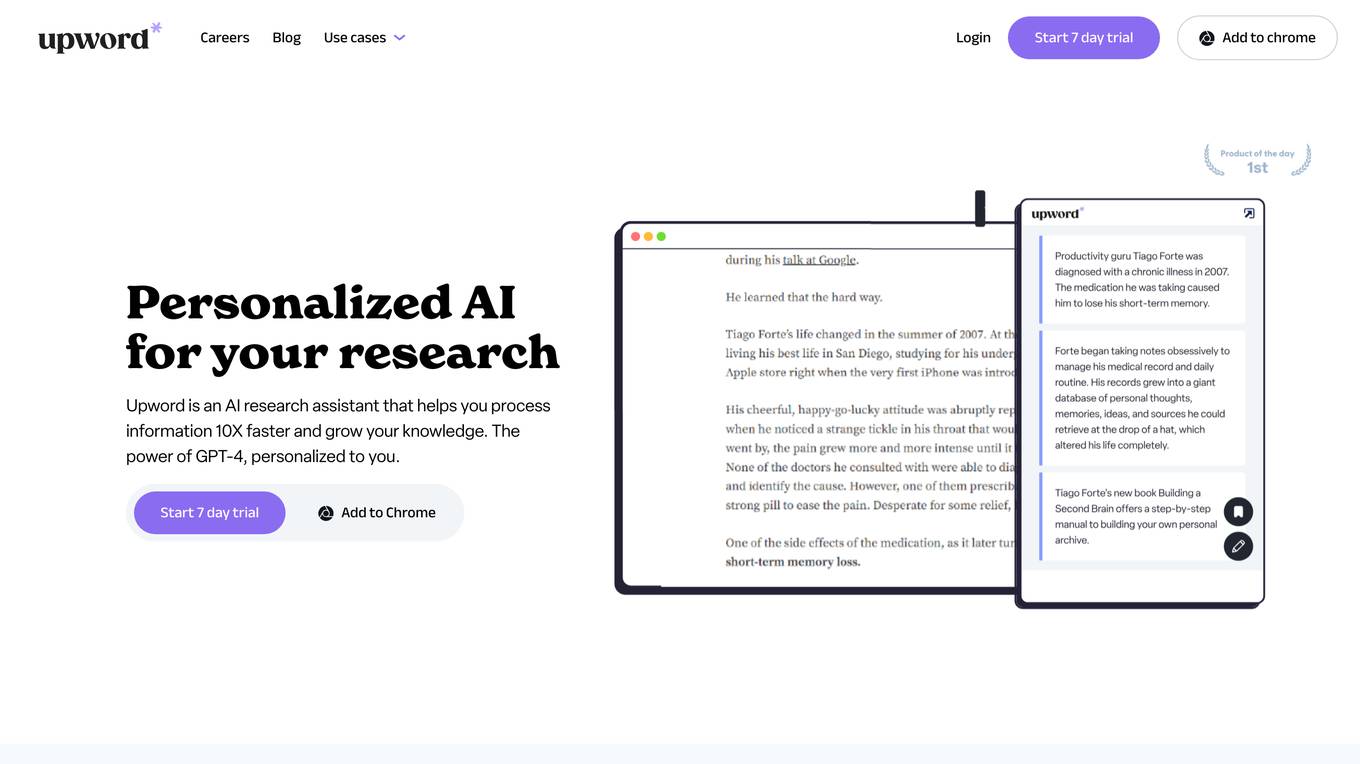

Upword

Upword is an AI-powered research assistant that lets users control and customize every step of the AI research process so they can trust the results. Users can summarize various content types, create reports, manage research papers, and collaborate on research projects. Upword offers personalized AI chatbots, research templates, content curation, and a command center for managing research tasks efficiently.

KubeHelper

KubeHelper is an AI-powered tool designed to reduce Kubernetes downtime by providing troubleshooting solutions and command searches. It seamlessly integrates with Slack, allowing users to interact with their Kubernetes cluster in plain English without the need to remember complex commands. With features like troubleshooting steps, command search, infrastructure management, scaling capabilities, and service disruption detection, KubeHelper aims to simplify Kubernetes operations and enhance system reliability.

Razzle

Razzle is a messaging tool designed to help you stay focused and get more done. It is minimal and distraction-free, with a focus mode that is on by default. Razzle also offers quick search from your command bar and comes with two embedded AI models that can help with writing marketing copy or extracting data. It has first-party support for Zoom and Google Meet, so you can call your colleagues with one click.

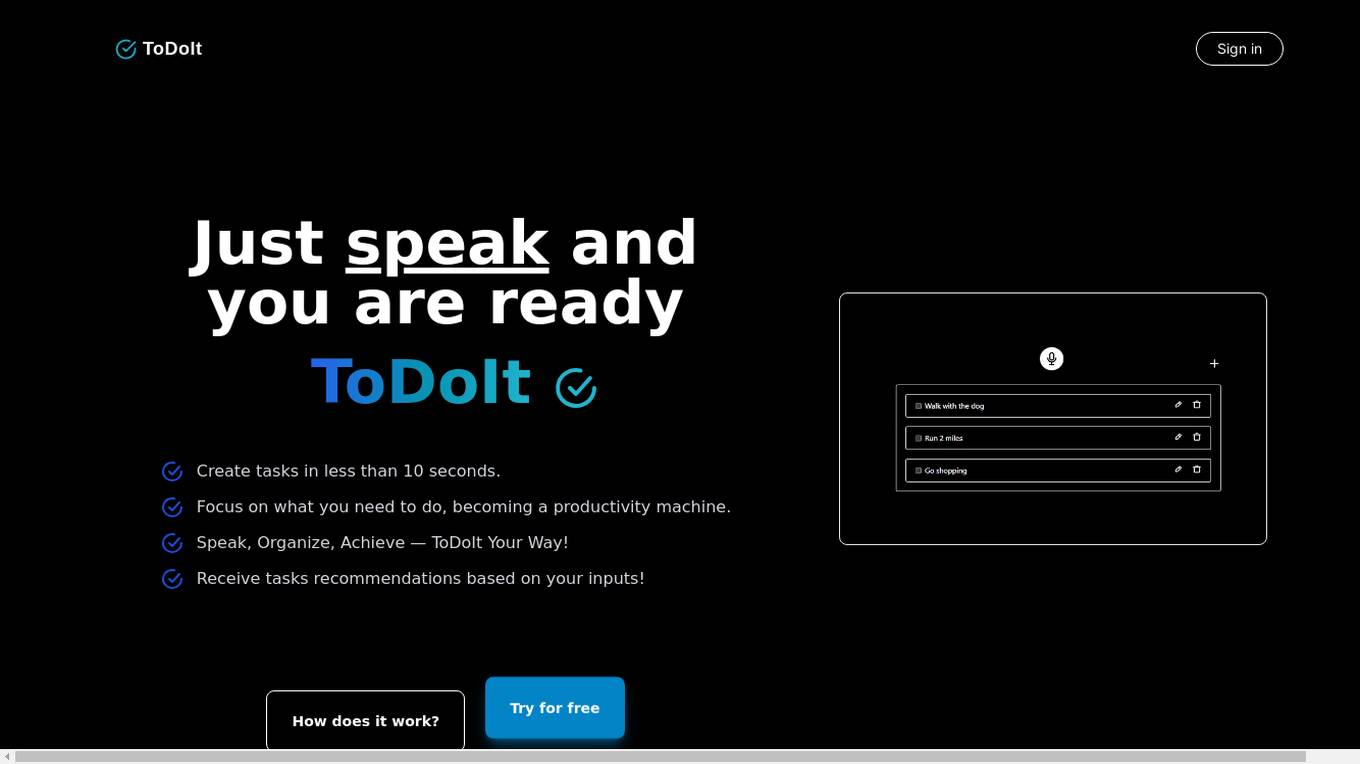

ToDoIt

ToDoIt is a voice and AI-powered to-do list application that helps users manage their tasks efficiently using natural language. Users can create tasks in less than 10 seconds by speaking, receive task recommendations based on their inputs, and enjoy smart task automation for improved productivity. The app offers different pricing plans with features like AI voice transcription, AI-powered task recommendations, and unlimited task recommendation refreshes. ToDoIt prioritizes user privacy and security by securely storing data and deleting audio files after transcription. Users can leave feedback through Insighto and benefit from the app's responsive web version.

Stream Chat A.I.

Stream Chat A.I. is an AI-powered Twitch chat bot that provides a smart and engaging chat experience for communities. It offers unique features such as a fully customizable chat-bot with a unique personality, bespoke overlays for multimedia editing, custom !commands for boosting interaction, and ongoing development with community input. The application is not affiliated with Twitch Interactive, Inc. and encourages user creativity and engagement.

CommandBar

CommandBar is an AI-powered user assistance platform designed to provide personalized support and guidance to product, marketing, and customer teams. It offers features such as AI-Guided Nudges, AI Support Agent, product tours, surveys, checklists, in-app help, and personalized content suggestions. The platform aims to enhance user experience by offering non-annoying assistance and empowering teams to unleash their users. CommandBar's user assistance philosophy emphasizes not annoying users, playing the long game, listening before speaking, and giving users options.

Sidekick AI

Sidekick AI is a powerful AI-powered tool that helps you stay on top of your work by tracking important information, setting reminders, sorting tasks, and summarizing long conversations. It integrates with various platforms and applications, including Slack, Salesforce, Jira, Zendesk, and Google Docs, to provide a comprehensive view of your work and streamline your workflow. With Sidekick AI, you can stay organized, efficient, and informed, ensuring that nothing falls through the cracks.

PayGenie

PayGenie is an AI-powered invoicing assistant that lets users create and manage invoices using voice commands and automation. It aims to save users time by automating invoice creation and reducing errors. The tool provides customizable templates, real-time insights, and smart time tracking features to streamline the invoicing process. Users can join the waitlist to try it.

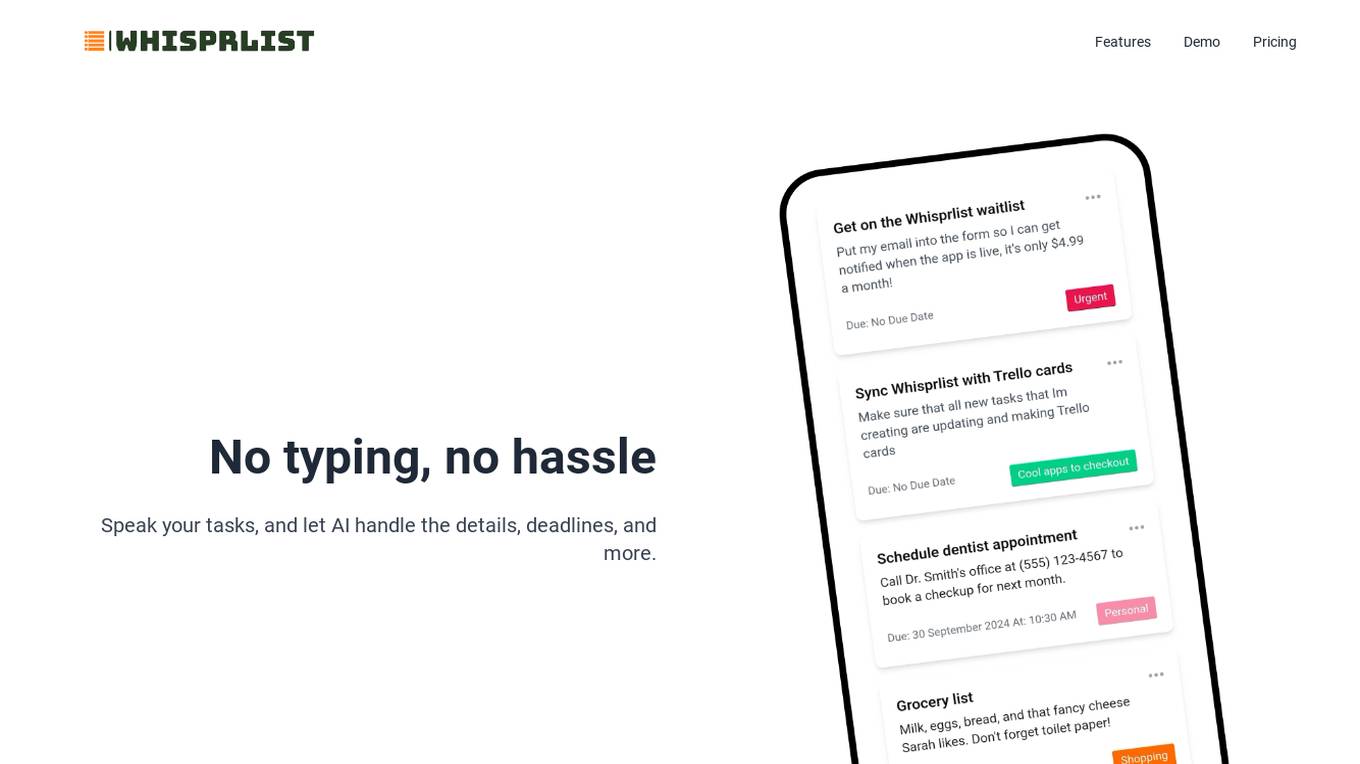

Whisprlist

Whisprlist is an AI-powered task management application that allows users to create and organize tasks effortlessly through voice commands. The application provides personalized assistance by understanding user commands and generating daily agenda emails to help users stay focused and productive. With a simple pricing structure and a range of features, Whisprlist aims to streamline task management for individuals and teams.

1 - Open Source AI Tools

ShellOracle

ShellOracle is a terminal utility for intelligent shell command generation. It generates shell commands from written descriptions, keeps a command history for easy reference, supports Unix pipes for command chaining, can be self-hosted for full control over your environment, and is highly configurable. It can be installed using pipx, upgraded with simple commands, and used as a BASH/ZSH widget activated by the CTRL+F keyboard shortcut. ShellOracle can also be run as a Python module or using its entrypoint 'shor'. The tool supports providers like Ollama, OpenAI, and LocalAI, with detailed instructions for each provider. ShellOracle is compatible with BASH and ZSH on macOS and Linux, with no specific hardware requirements for cloud providers like OpenAI.

20 - OpenAI GPTs

BashEmulator GPT

BashEmulator GPT: a virtualized Bash environment for Linux command-line interaction. It virtualizes all network interfaces and the local network.

BASHer GPT || Your Bash & Linux Shell Tutor!

Adaptive and clear Bash guide with command execution. Learn by poking around in the code interpreter's isolated Kubernetes container!

Instant Command GPT

Executes tasks via short commands instantly, using a single session to customize commands.

RFP Proposal Pro (IT / Software Sales assistant)

Step 1: Upload the RFP.

Step 2: Prompt: I need a comprehensive summary of the RFP. Split the summary into multiple blocks/sections. After giving me one section, wait for my command to move to the next section.

Step 3: Prompt: Move to the next section, please :)

DALL· 3 Ultra: image generator & logo art mj

Advanced Dalle-3 engine for image creation. Generate 1 to 4 images using the command "/number your-image-request". v3.6

Angular GPT - Project Builder

Dream up an app and tell Cogo your packages and wishes. Cogo will outline, pseudocode, and code at your command.

![U.S. Acquisition Pro [GPT 4.5 Unofficial] Screenshot](/screenshots_gpts/g-0nYPkElr9.jpg)

U.S. Acquisition Pro [GPT 4.5 Unofficial]

Contracting, Legal, Program and Financial Management Expert. Type '/help' for commands