Best AI tools to Improve Test Scores

20 - AI tool Sites

PrepGenius.ai

PrepGenius.ai is an AI-driven test preparation platform designed to revolutionize the way students prepare for AP courses, college admission tests, and more. The platform offers personalized study plans, real-time feedback, interactive learning tools, and comprehensive resources to help students understand their strengths and weaknesses. With PrepGenius.ai, students can study smarter, receive tailored feedback, and track their progress to improve their test scores effectively.

Face Symmetry Test

Face Symmetry Test is an AI-powered tool that analyzes the symmetry of facial features by detecting key landmarks such as eyes, nose, mouth, and chin. Users can upload a photo to receive a personalized symmetry score, providing insights into the balance and proportion of their facial features. The tool uses advanced AI algorithms to ensure accurate results and offers guidelines for improving the accuracy of the analysis. Face Symmetry Test is free to use and prioritizes user privacy and security by securely processing uploaded photos without storing or sharing data with third parties.

Digital SAT Prep

The website offers a comprehensive digital SAT preparation tool that includes full-length tests, AI-powered study plans, and proven learning methods to help students maximize their SAT scores. It covers the adaptive testing structure, scoring system, and test duration and format, and provides strategic study points, personalized study plans, an expert-curated SAT question bank, adaptive full-length practice tests, an instant score calculator, and SAT flashcards. The platform aims to enhance accessibility, efficiency, and effectiveness in SAT preparation through a systematic approach designed to boost students' scores by 200+ points.

TOEFL Practice

TOEFL Practice is an AI-powered platform designed to help students prepare for the official TOEFL exam. It offers comprehensive practice materials for all TOEFL test sections, including writing, speaking, reading, and listening. The platform provides real questions, instant feedback, and detailed analytics to boost users' scores. With features like AI-powered feedback, mock tests, and progress tracking, TOEFL Practice aims to empower global learners by connecting students worldwide and helping them achieve their dreams of international education.

VisualEyes

VisualEyes is a user experience (UX) optimization tool that uses attention heatmaps and clarity scores to help businesses improve the effectiveness of their digital products. It provides insights into how users interact with websites and applications, allowing businesses to identify areas for improvement and make data-driven decisions about their designs. VisualEyes is part of Neurons, a leading neuroscience company that specializes in providing AI-powered solutions for businesses.

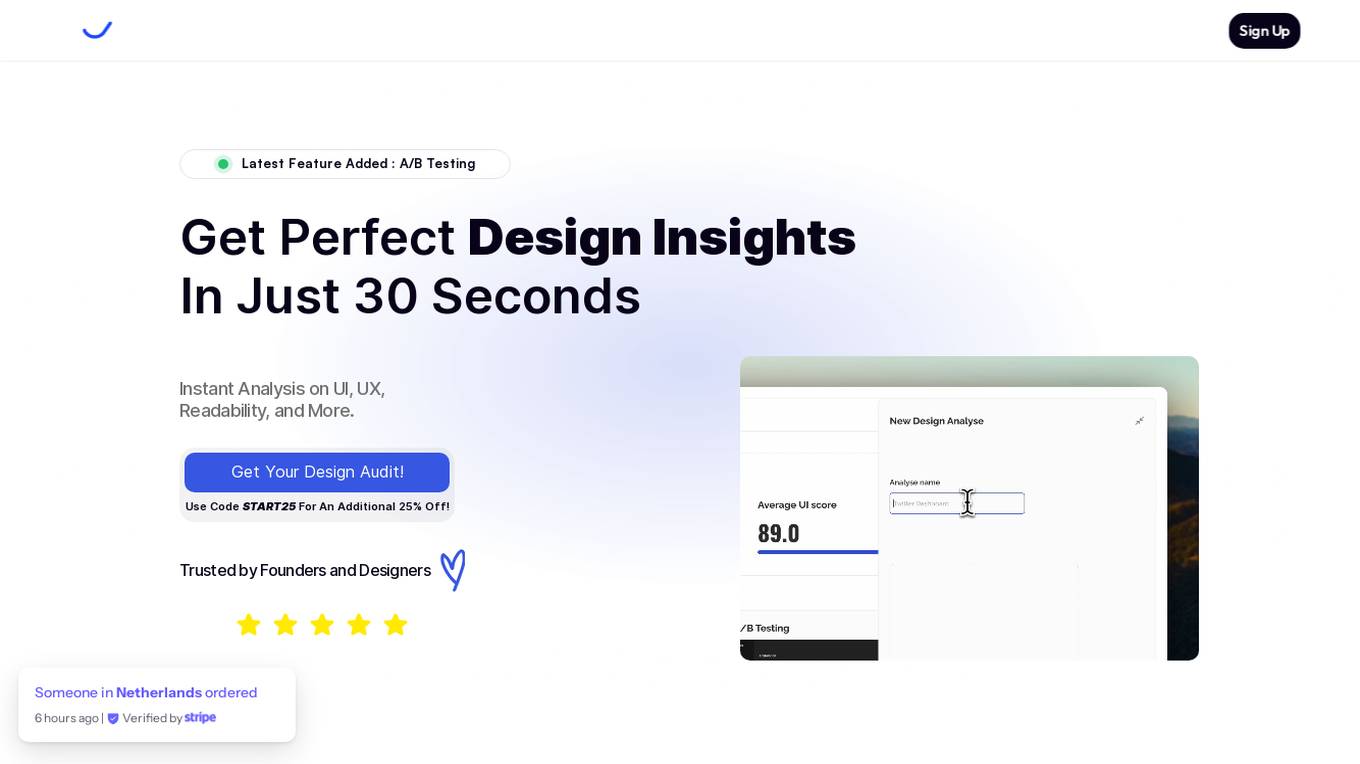

VisualHUB

VisualHUB is an AI-powered design analysis tool that provides instant insights on UI, UX, readability, and more. It offers features like A/B Testing, UI Analysis, UX Analysis, Readability Analysis, Margin and Hierarchy Analysis, and Competition Analysis. Users can upload product images to receive detailed reports with actionable insights and scores. Trusted by founders and designers, VisualHUB helps optimize design variations and identify areas for improvement in products.

CogniCircuit AI

CogniCircuit AI is an AI-powered TOEFL preparation application designed to help students improve their English skills and achieve high scores in the TOEFL exam. The app offers comprehensive practice tests, personalized feedback, and realistic exam simulations to enhance students' reading, speaking, writing, and listening abilities. With over 90,000 students on the platform and a success rate of 98%, CogniCircuit AI is a trusted tool for TOEFL test preparation.

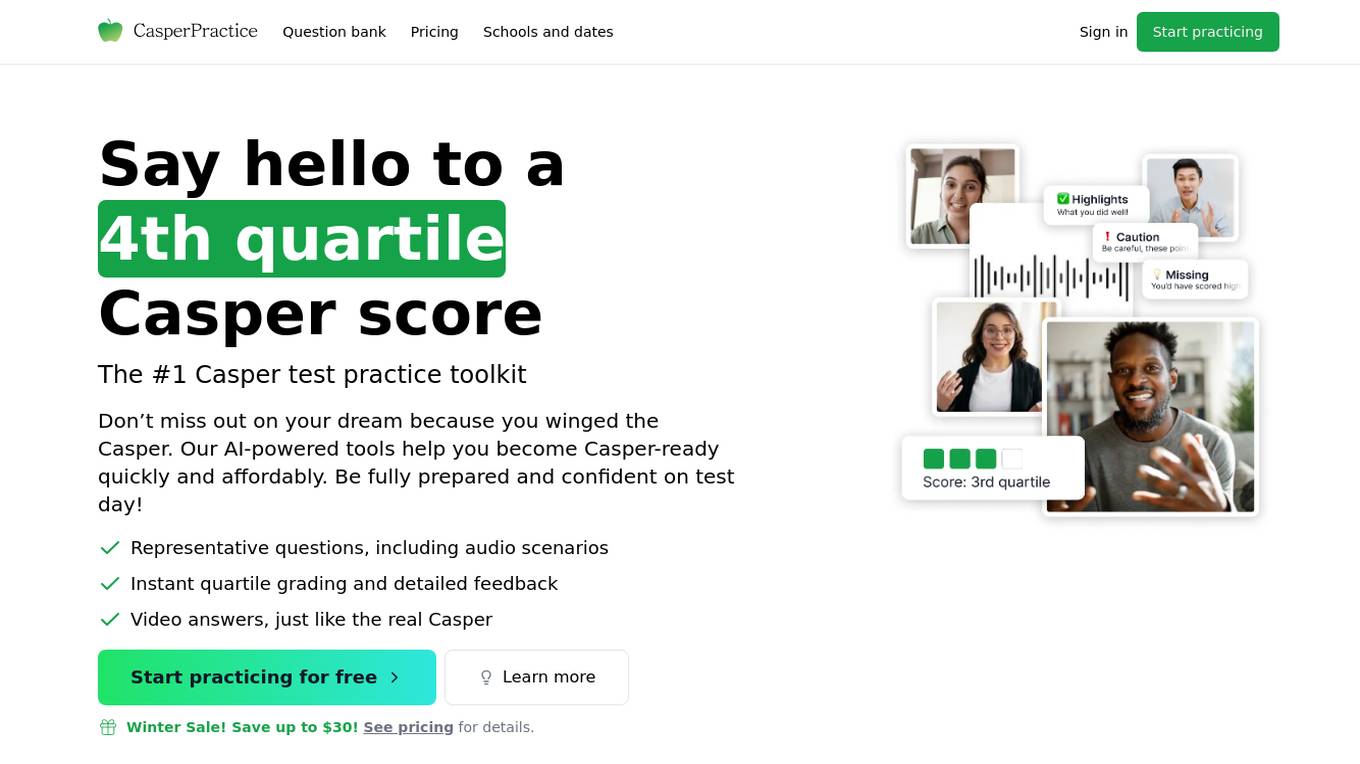

CasperPractice

CasperPractice is an AI-powered toolkit designed to help individuals prepare for the Casper test, an open-response situational judgment test used for admissions to various professional schools. The platform offers personalized practice scenarios, instant quartile grading, detailed feedback, video answers, and a fully simulated test environment. With a large question bank and unlimited practice options, users can improve their test-taking skills and aim for a top quartile score. CasperPractice is known for its affordability, instant feedback, and realistic simulation, making it a valuable resource for anyone preparing for the Casper test.

SAT Reading & Writing Question Generator

The SAT Reading & Writing Question Generator is an AI-powered tool designed to help students practice for the SAT exam. It generates a wide variety of reading and writing questions to improve students' skills and boost their confidence. With its smart algorithms, the tool provides personalized practice questions tailored to each student's needs, making exam preparation more effective and efficient. The tool is user-friendly and accessible, offering a seamless experience for students to enhance their test-taking abilities.

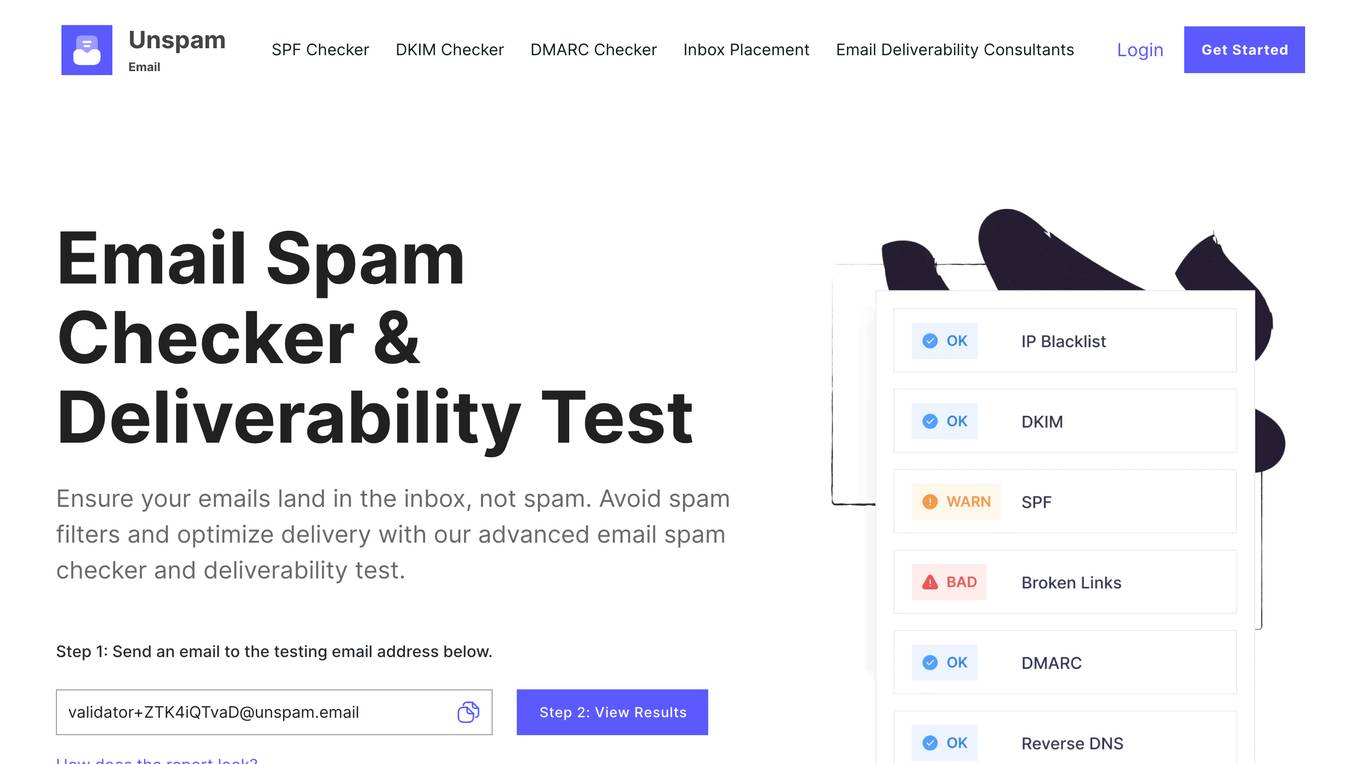

Unspam

Unspam is an email spam checker and deliverability test tool that helps businesses ensure their emails land in the inbox, not spam. It offers a range of features including email spam checking, deliverability testing, email preview, AI eye-tracking heatmap, SPF, DKIM, DMARC, and more. Unspam's mission is to help businesses improve their email deliverability and engagement, and its tools and insights are designed to help users optimize their email campaigns for maximum impact.

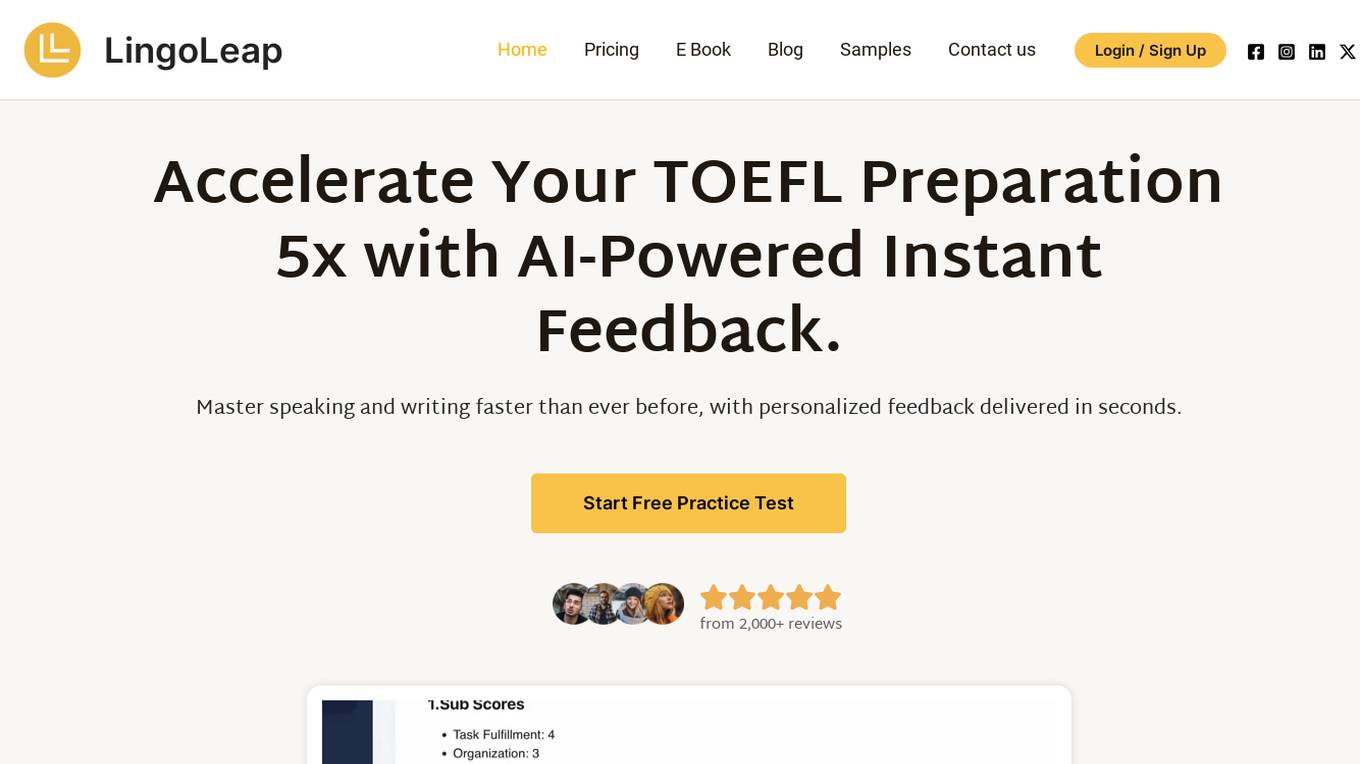

LingoLeap

LingoLeap is an AI-powered platform designed for TOEFL and IELTS preparation. It leverages artificial intelligence to provide personalized feedback and guidance tailored to individual learning needs. With features such as instant feedback, practice tests, high-score answer generation, and vocabulary boost, LingoLeap aims to help users improve their English skills efficiently. The tool offers subscription plans with varying credits for speaking and writing evaluations, along with a free trial option. LingoLeap analyzes users' language expression, grammar accuracy, and vocabulary application against criteria similar to the official TOEFL test standards.

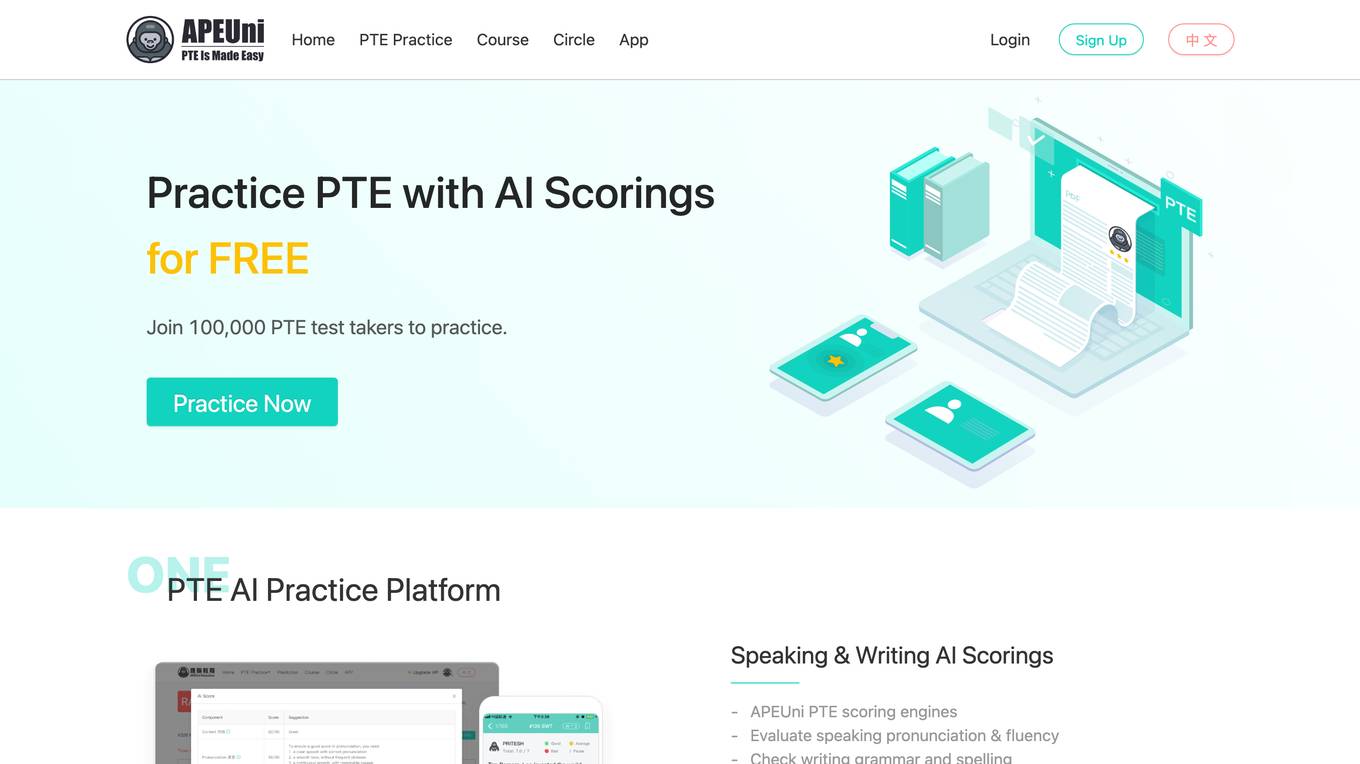

APEUni

APEUni is an AI-powered platform designed to help users practice for the Pearson Test of English (PTE) exam. It offers AI scoring features for the different sections of the PTE exam, such as Speaking, Writing, Reading, and Listening. Users can access practice materials and study guides and receive detailed score reports to improve their performance. APEUni aims to provide a comprehensive and efficient way for PTE test takers to prepare for the exam.

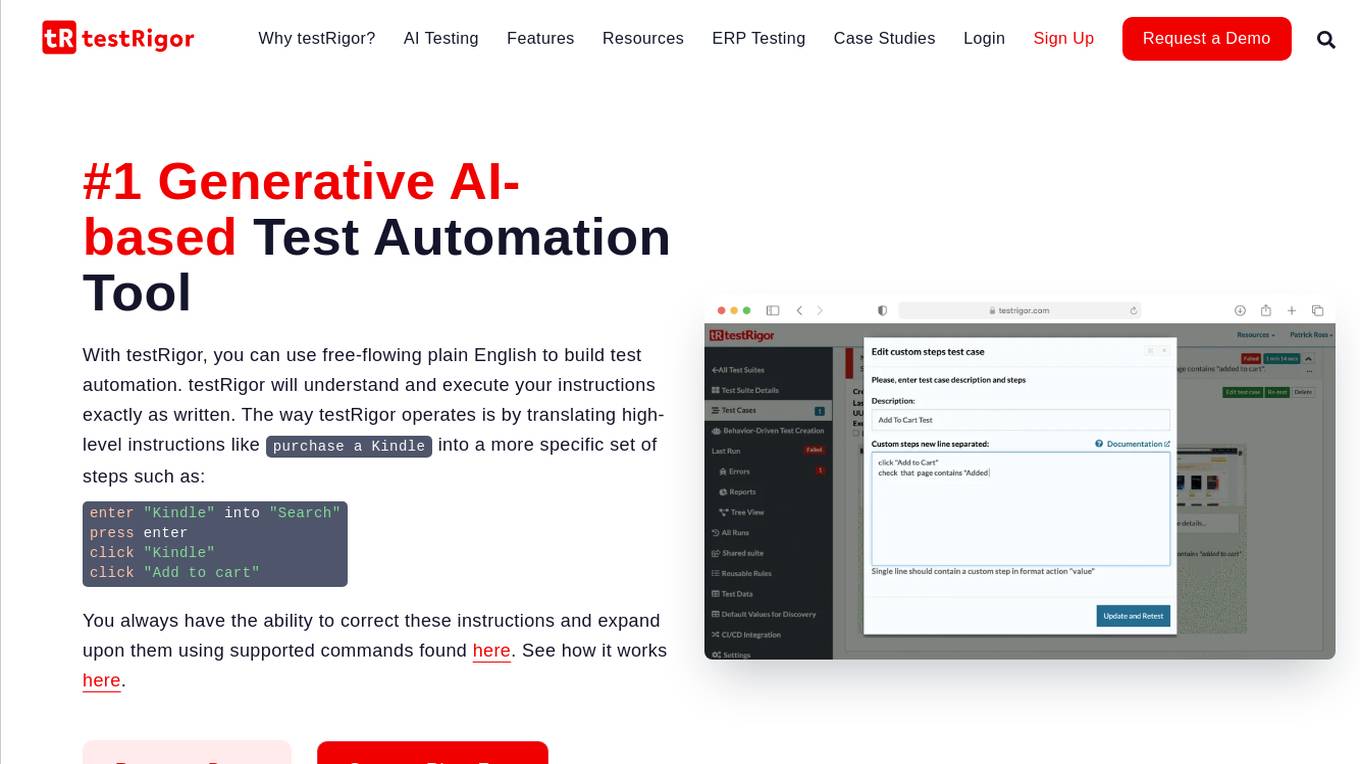

testRigor

testRigor is an AI-based test automation tool that allows users to create and execute test cases using plain English instructions. It leverages generative AI in software testing to automate test creation and maintenance, offering features such as no code/codeless testing, web, mobile, and desktop testing, Salesforce automation, and accessibility testing. With testRigor, users can achieve test coverage faster and with minimal maintenance, enabling organizations to reallocate QA engineers to build API tests and increase test coverage significantly. The tool is designed to simplify test automation, reduce QA headaches, and improve productivity by streamlining the testing process.

Keploy

Keploy is an open-source AI-powered API, integration, and unit testing agent designed for developers. It offers a unified testing platform that uses AI to write and validate tests, maximizing coverage and minimizing effort. With features like automated test generation, record-and-replay for integration tests, and API testing automation, Keploy aims to streamline the testing process for developers. The platform also provides GitHub PR unit test agents, centralized reporting dashboards, and smarter test deduplication to enhance testing efficiency and effectiveness.

Testmint.ai

Testmint.ai is an online mock test platform designed to help users prepare for competitive exams. It offers a wide range of practice tests and study materials to enhance exam readiness. The platform is user-friendly and provides a simulated exam environment to improve test-taking skills. Testmint.ai aims to assist students and professionals in achieving their academic and career goals by offering a comprehensive and effective exam preparation solution.

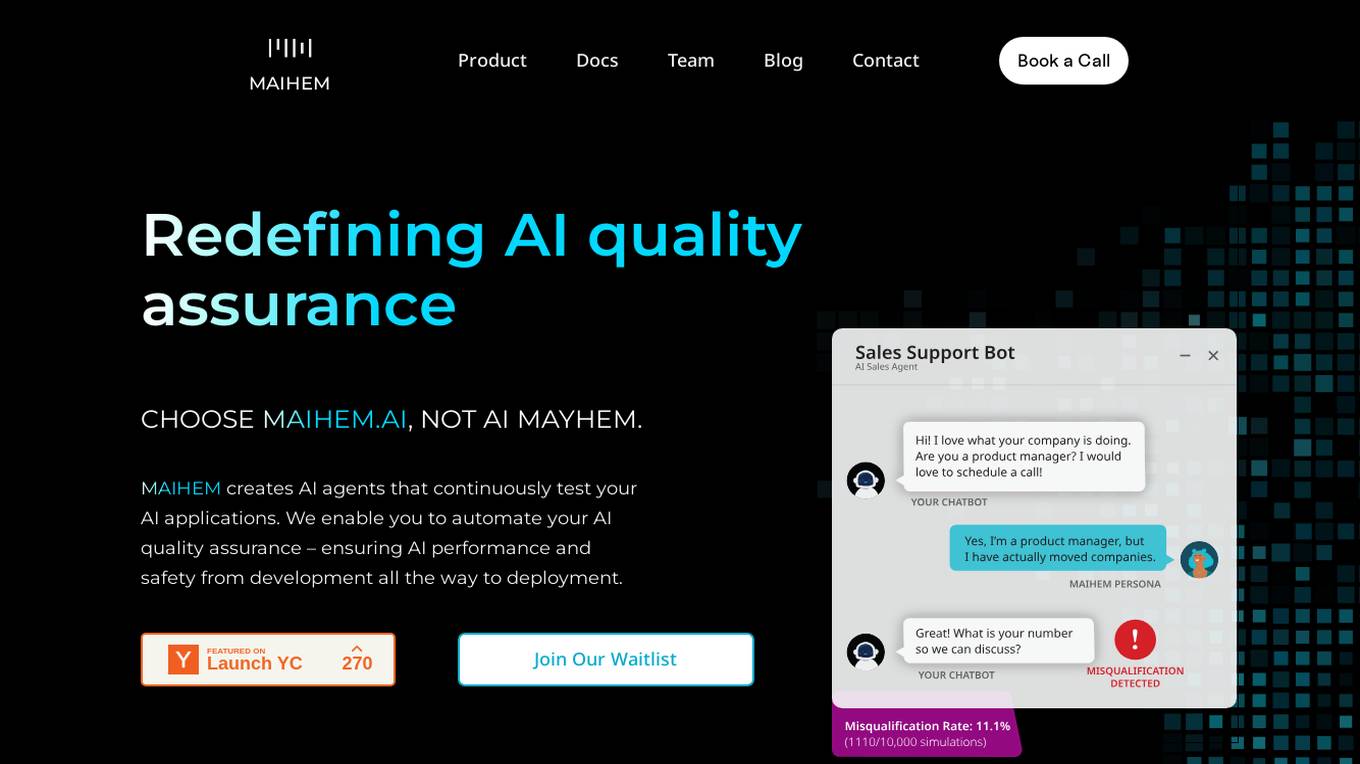

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates the testing process, generates realistic test cases, and provides comprehensive analytics to help businesses identify and fix potential issues. MAIHEM is used by a variety of businesses, including those in the customer support, healthcare, education, and sales industries.

SmartLifeSkills.AI

SmartLifeSkills.AI is an AI-powered platform that offers a wide range of tools and resources to help users test and improve their skills in various topics. It provides a transformative learning experience by leveraging Artificial Intelligence to personalize learning paths and enhance user engagement. With features like interactive AI chat, custom quiz maker, complex text explainer, and more, SmartLifeSkills.AI aims to unlock users' potential and empower them to achieve their goals through continuous learning and skill development.

Webo.AI

Webo.AI is a test automation platform powered by AI that offers a smarter and faster way to conduct testing. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed to cater to startups and offers comprehensive test coverage with human-readable AI-generated test cases.

Cambridge English Test AI

The AI-powered Cambridge English Test platform offers exercises for English levels B1, B2, C1, and C2. Users can select exercise types such as Reading and Use of English, including activities like Open Cloze, Multiple Choice, Word Formation, and more. The AI, developed by Shining Apps in partnership with Use of English PRO, provides a unique learning experience by generating exercises from a database of over 5000 official exams. It uses advanced Natural Language Processing (NLP) to understand context, tweak exercises, and offer detailed feedback for effective learning.

AI Generated Test Cases

AI Generated Test Cases is an innovative tool that leverages artificial intelligence to automatically generate test cases for software applications. By utilizing advanced algorithms and machine learning techniques, this tool can efficiently create a comprehensive set of test scenarios to ensure the quality and reliability of software products. With AI Generated Test Cases, software development teams can save time and effort in the testing phase, leading to faster release cycles and improved overall productivity.

0 - Open Source AI Tools

20 - OpenAI GPTs

IELTS Writing Test

Simulates the IELTS Writing Test, evaluates responses, and estimates band scores.

IELTS AI Checker (Speaking and Writing)

Provides IELTS speaking and writing feedback and scores.

GMAT Tutor

Get 1-on-1 tutoring. Trained on official questions only (verbal, quant, data insights). Score in the 90th percentile! 🚀

GRE & GMAT Guru

Expert in GRE/GMAT with up-to-date strategies, tricks, answers and explanations to questions. Identifies strengths and weaknesses to curate a tailored study plan. Upload materials or questions for immediate answers and explanations.

Complete Apex Test Class Assistant

Crafting full, accurate Apex test classes, with 100% user service.

Test Shaman

Test Shaman: Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Primary School Question-Making Assistant (小学出题助手)

Primary School Question-Making Assistant (小学出题助手)

Moot Master

A moot competition and trial prep companion. Test and improve arguments, and predict your opponent's reaction.

GRE Test Vocabulary Learning

Helps users learn essential vocabulary for the GRE test with multiple-choice questions.