Best AI Tools to Improve Test Performance

20 - AI Tool Sites

Kobiton

Kobiton is a mobile device testing platform designed to speed up app delivery and improve team productivity. It offers real-device testing, visual testing, performance testing, accessibility testing, and more. With AI-augmented testing and no-code validations, Kobiton helps enterprises streamline continuous delivery of mobile apps. The platform also provides secure, scalable device lab management, a mobile device cloud, and integrations with DevOps toolchains.

bottest.ai

bottest.ai is an AI-powered testing tool for AI-based chatbots, focused on ensuring their quality, reliability, and safety. It offers automated, no-code testing, so users can test their chatbots without writing scripts. Features include regression testing, performance testing, multi-language testing, and AI-powered coverage. Users can record tests, evaluate responses, and improve their chatbots using the tool's analytics. For enterprise use, it supports scaling, permissions management, and integration with existing workflows.

aqua

aqua is a Quality Assurance (QA) management tool designed to streamline testing processes. Its features include an AI Copilot, bug reporting, test management, requirements management, user acceptance testing, and automation management. aqua serves industries including banking, insurance, manufacturing, government, technology, and healthcare, helping organizations improve testing productivity, software quality, and defect detection rates. It integrates with platforms such as Jira, Jenkins, and JMeter, and is available for both cloud and on-premise deployment. With its AI-enhanced capabilities, aqua aims to make testing faster and less error-prone.

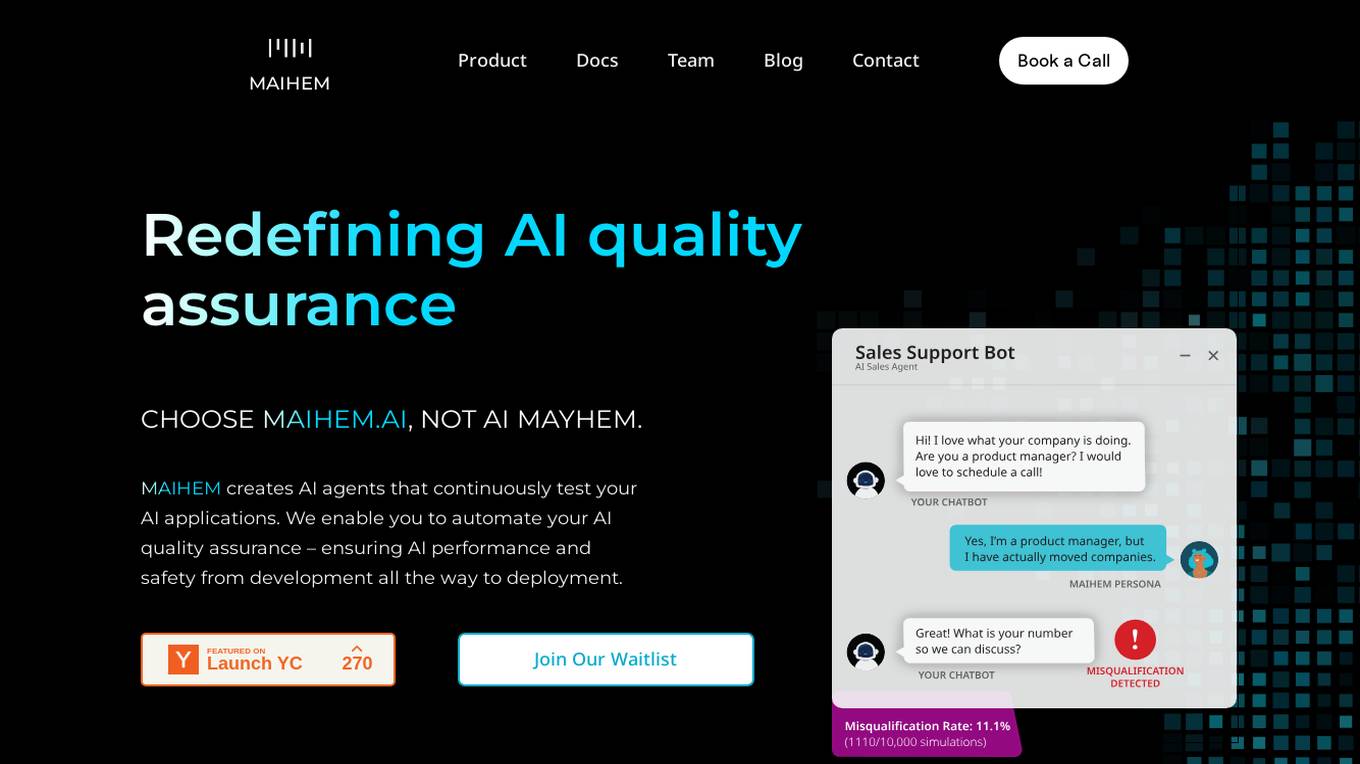

MAIHEM

MAIHEM is an AI-powered quality assurance platform that helps businesses test and improve the performance and safety of their AI applications. It automates testing, generates realistic test cases, and provides analytics to help identify and fix potential issues. MAIHEM is used by businesses in fields such as customer support, healthcare, education, and sales.

Webo.AI

Webo.AI is an AI-powered test automation platform that promises a smarter, faster way to test. It provides generative AI for tailored test cases, AI-powered automation, predictive analysis, and patented AiHealing for test maintenance. Webo.AI aims to reduce test time, production defects, and QA costs while increasing release velocity and software quality. The platform is designed for startups and offers broad test coverage with human-readable, AI-generated test cases.

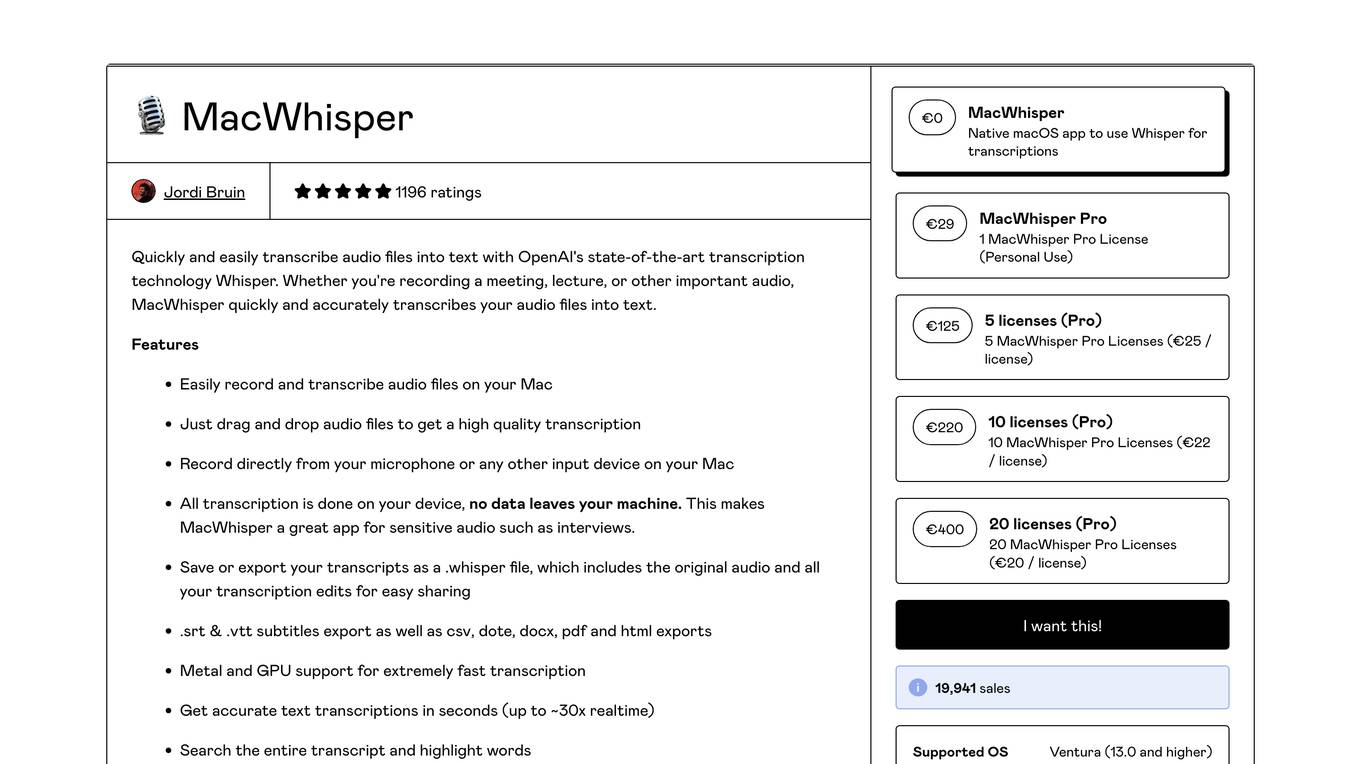

MacWhisper

MacWhisper is a native macOS application that uses OpenAI's Whisper technology to transcribe audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for transcribing meetings, lectures, interviews, and podcasts. To protect user privacy, it performs all transcription locally on the device, so no data leaves the user's machine.

SmallTalk2Me

SmallTalk2Me is an AI-powered simulator designed to help users improve their spoken English. It offers a range of features, including mock job interviews, IELTS speaking test simulations, and daily stories and courses. The platform uses AI to provide users with instant feedback on their performance, helping them to identify areas for improvement and track their progress over time.

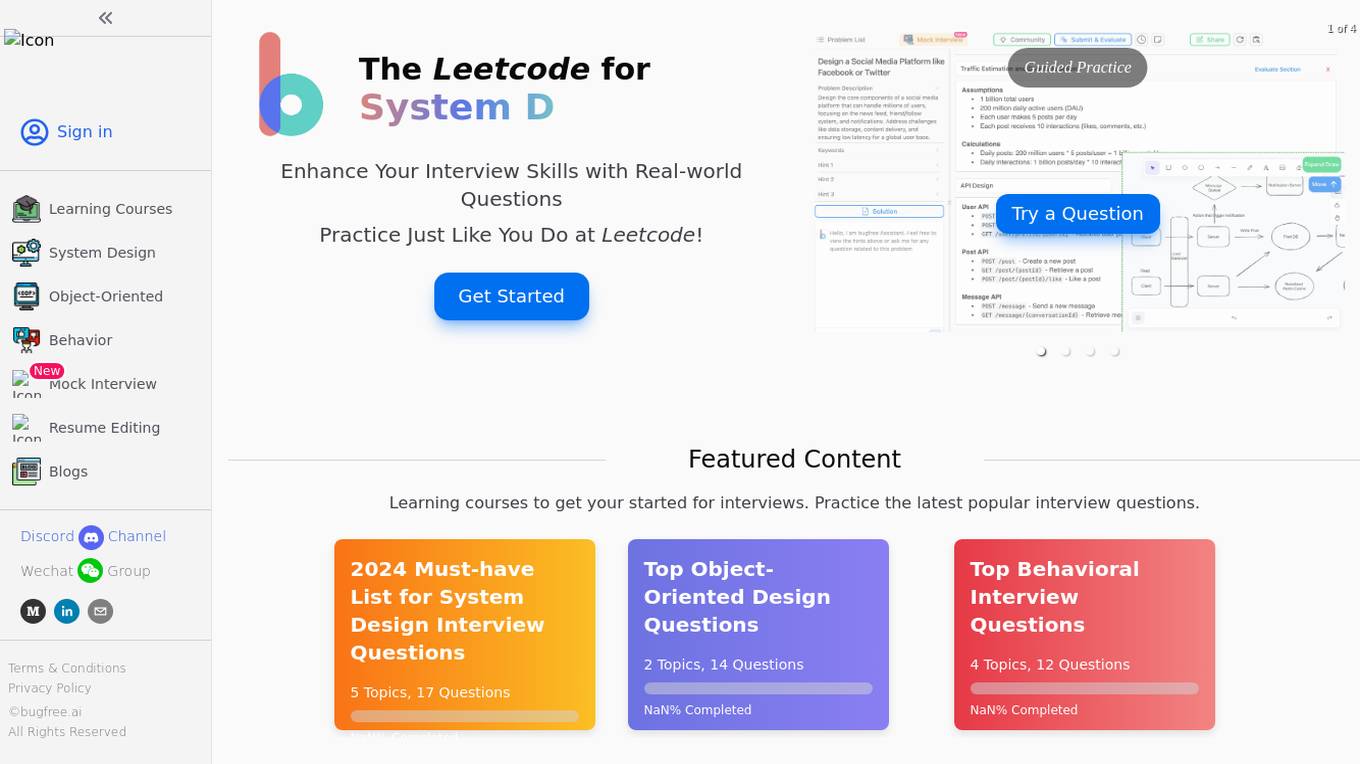

BugFree.ai

BugFree.ai is an AI-powered platform, similar to LeetCode, for practicing system design and behavioral interviews. It offers mock interviews, real-time feedback, and personalized study plans to help users prepare for technical interviews, sharpen their problem-solving skills, and gain confidence with complex interview questions.

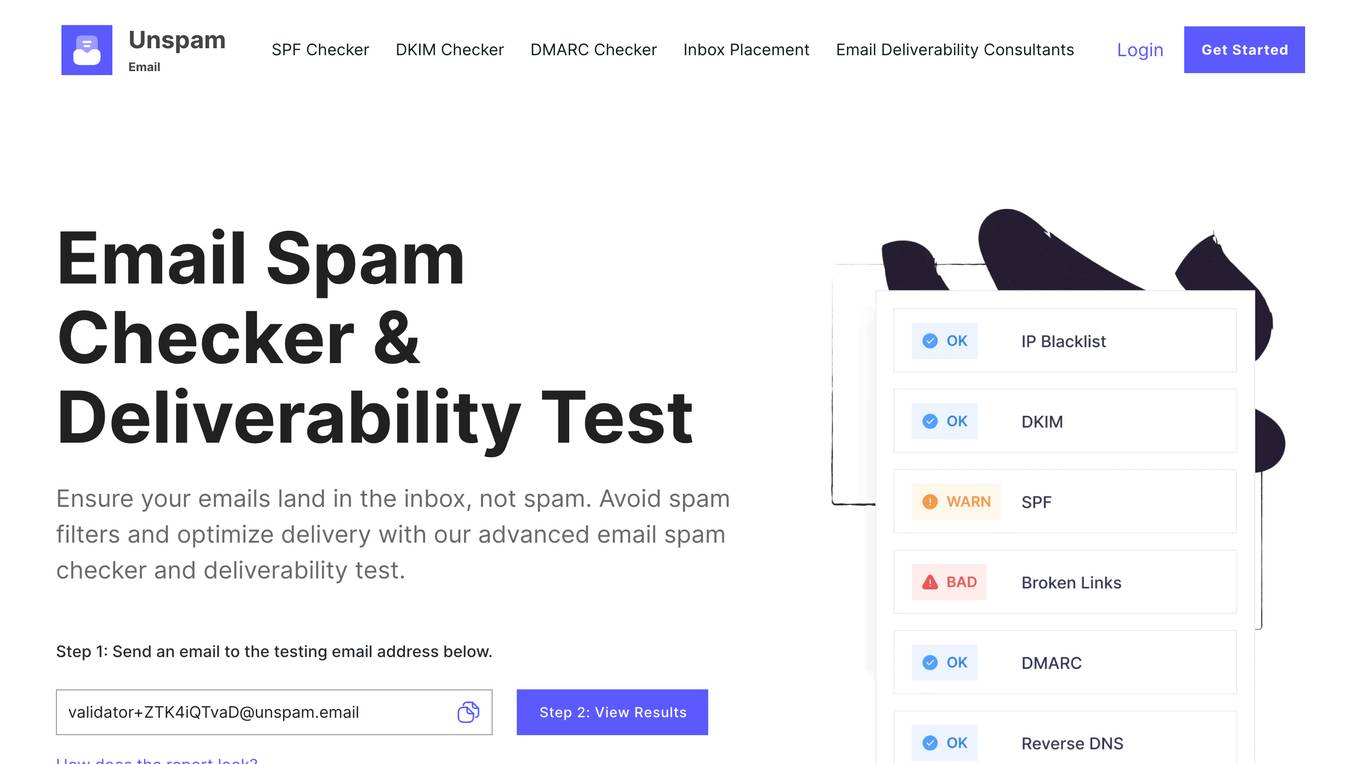

Unspam

Unspam is an email spam checker and deliverability testing tool that helps businesses get their emails into the inbox rather than the spam folder. Its features include spam checking, deliverability testing, email previews, an AI eye-tracking heatmap, SPF, DKIM, and DMARC checks, and more. Unspam's stated mission is to help businesses improve email deliverability and engagement, and its tools and insights are designed to help users optimize their email campaigns.

Lisapet.AI

Lisapet.AI is an AI prompt testing suite that helps product teams design, prototype, test, and ship AI features. Its features include an AI playground, variables for dynamic data inputs, structured outputs, side-by-side editing, function calling, image inputs, assertions and metrics, performance comparison, dataset organization, shareable reports, comments and feedback, token and cost statistics, and more. Through automated prompt testing, it aims to help teams save time, work more efficiently, and keep their AI features reliable.

A11YBoost

A11YBoost is an automated website accessibility monitoring and reporting tool that also helps businesses improve the performance, UX, design, and SEO of their websites. It provides instant, detailed reports covering key issues, their impact, and how to fix them, plus an analytics history for tracking progress over time. A11YBoost combines AI testing, traditional testing, and human expertise, and its expanding test suite includes 25+ tests across five categories.

Byterat

Byterat is a cloud-based platform for battery data management, visualization, and analytics. Its end-to-end data pipeline automatically synchronizes, processes, and visualizes materials, manufacturing, and test data from all labs. Byterat provides 24/7 access to experiments from anywhere in the world and integrates with existing workflows. It can be customized for specific cell chemistries and lets users build custom visualizations, dashboards, and analyses. Byterat's AI-powered battery research has been published in leading journals, and its team has pioneered a new class of models that extract tell-tale indicators of battery health from electrical signals to forecast future performance.

SAT Reading & Writing Question Generator

The SAT Reading & Writing Question Generator is an AI-powered tool that helps students practice for the SAT. It generates a wide variety of reading and writing questions and tailors practice to each student's needs, aiming to make exam preparation more effective and to boost students' confidence. The tool is designed to be easy to use.

TestMarket

TestMarket is an AI-powered sales optimization platform for online marketplace sellers. It helps sellers increase their visibility, boost sales, and improve their overall performance on marketplaces such as Amazon, Etsy, and Walmart. Its services include product promotion, keyword analysis, SEO, and advertising optimization, including Google Ads.

Prompt Dev Tool

Prompt Dev Tool is an AI application designed to make prompt engineering more efficient by helping users create, test, and optimize AI prompts. It offers an intuitive interface, real-time feedback, model comparison, variable testing, prompt iteration, and advanced analytics. The tool is suitable for both beginners and experts, providing detailed insights that help improve AI interactions and outcomes.

Elixir

Elixir is an AI tool for observability and testing of AI voice agents. Its features include automated testing, call review, monitoring, analytics, tracing, and scoring. Elixir simulates realistic test calls, analyzes conversations, identifies mistakes, and helps debug issues using audio snippets and call transcripts. It provides detailed traces for complex abstractions, streamlines manual review, and can simulate thousands of calls for full test coverage. The tool is suited to monitoring agent performance, detecting anomalies in real time, and improving conversational systems through human-in-the-loop feedback.

APEUni

APEUni is an AI-powered platform for practicing for the Pearson Test of English (PTE). It offers AI scoring for the exam's Speaking, Writing, Reading, and Listening sections. Users can access practice materials and study guides and receive detailed score reports to improve their performance. APEUni aims to give PTE test takers a comprehensive, efficient way to prepare for the exam.

ExamSamur.ai

ExamSamur.ai is an AI-powered platform designed to help students prepare for exams efficiently. It offers a wide range of study materials, practice tests, and personalized study plans to enhance learning outcomes. The platform utilizes advanced algorithms to analyze students' performance and provide targeted feedback for improvement. With a user-friendly interface and interactive features, ExamSamur.ai aims to make exam preparation engaging and effective for students of all levels.

Phrasee

Phrasee is a generative AI platform that helps enterprise marketers create and optimize marketing messages across various channels, including email, SMS, push notifications, web and app, and social media. It uses AI to generate billions of marketing messages tailored to specific audiences and brands, ensuring consistent experiences and maximizing customer engagement. Phrasee's platform provides marketers with tools for testing, optimizing, and personalizing content, enabling them to improve performance, conversions, and ROI.

Abstracta Solutions

Abstracta Solutions is an AI software development company that provides holistic solutions for software quality. They offer services such as AI software development, testing strategy, functional testing, test automation, performance testing, tool development, accessibility testing, security testing, and DevOps services. Abstracta Solutions empowers organizations with AI-driven solutions to streamline software development processes and enhance customer experiences. They focus on continuously delivering high-quality software by co-creating quality strategies and leveraging expertise in different areas of software development.

0 - Open Source AI Tools

20 - OpenAI GPTs

IELTS AI Checker (Speaking and Writing)

Provides IELTS speaking and writing feedback and scores.

Wordon, World's Worst Customer | Divergent AI

I simulate tough Customer Support scenarios for Agent Training.

Complete Apex Test Class Assistant

Crafting full, accurate Apex test classes, with 100% user service.

Test Shaman

Test Shaman: Guiding software testing with Grug wisdom and humor, balancing fun with practical advice.

Primary School Question-Making Assistant (小学出题助手)

Moot Master

A moot competition and trial prep companion. Test and improve your arguments, and predict your opponent's reaction.

GRE Test Vocabulary Learning

Helps users learn essential GRE vocabulary through multiple-choice questions.

Academic Hook Test

Upload your manuscript introduction and get 'Reviewer 2'-grade feedback in return. 😎

GED Math Test Prep

Your personal math coach for mastering GED Math, turning challenges into achievements with engaging, tailored support.

STAAR EOC U.S. History Test Prep

Dive into history with History Ace, blending interactive quizzes, personalized learning, and dynamic storytelling to master your U.S. history test!