Best AI tools for < Impose Temporal Constraints >

2 - AI tool Sites

EDGE

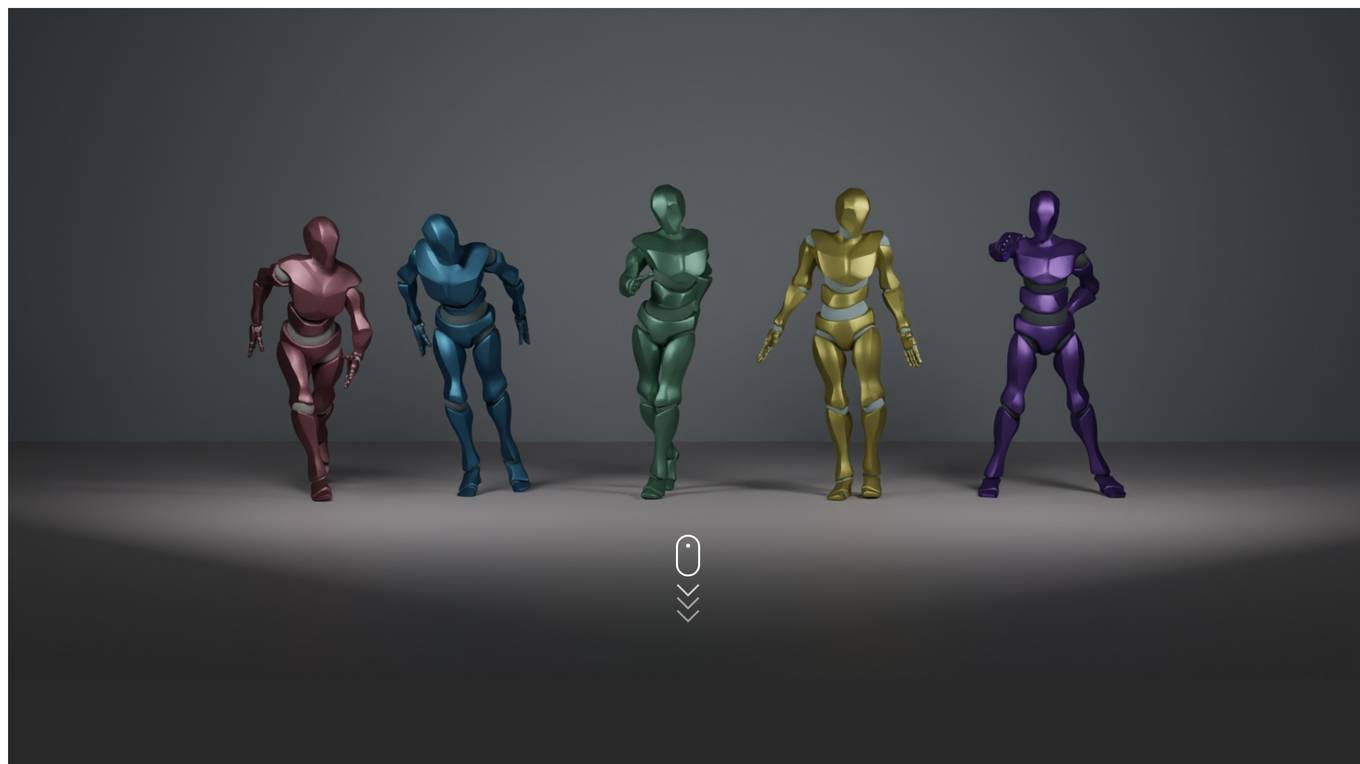

EDGE is a method for editable dance generation that creates realistic dances faithful to the input music. It uses a transformer-based diffusion model, with Jukebox for music feature extraction. EDGE offers editing capabilities such as joint-wise conditioning, motion in-betweening, and dance continuation. Human raters prefer dances generated by EDGE over those of other methods, owing to its physical realism and editing capabilities.

N/A

The website returns an 'Access Denied' error, which prevents users from accessing the specified page. The error message indicates that the user does not have permission to view the content on the server. The cause may be server configuration, security settings, or restrictions imposed by the website owner. The message includes a reference number that can be used for troubleshooting. This appears to be a technical issue rather than an AI tool or application.