Best AI tools for Identifying Security Risks

20 - AI Tool Sites

TeddyAI Web Security Checker

The website teddyai.oiedu.co.uk currently returns a privacy error indicating that the connection is not private. The browser warning states that attackers might be attempting to steal sensitive information such as passwords, messages, or credit card details. The security certificate presented by the site is from cpcalendars.bigcityrealty.net rather than teddyai.oiedu.co.uk, which suggests either a server misconfiguration or a security threat. Users are advised to proceed with caution.
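As a rough illustration of the mismatch described above, the sketch below checks whether a hostname is covered by the names listed on a certificate. It is a simplified stand-in for what browsers do, not their actual implementation: the helper names `hostname_matches` and `certificate_covers` are hypothetical, only a single leftmost wildcard label is supported, and the certificate is assumed to list just the one name mentioned in the error.

```python
def hostname_matches(pattern: str, hostname: str) -> bool:
    """Return True if a certificate name pattern covers the hostname.

    Simplified rules (an assumption for this sketch): case-insensitive
    comparison, exact match, or one wildcard in the leftmost label
    (for example "*.example.com").
    """
    pattern_labels = pattern.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    # A wildcard stands for exactly one label, so label counts must agree.
    if len(pattern_labels) != len(host_labels):
        return False
    # Every label after the first must match exactly.
    if pattern_labels[1:] != host_labels[1:]:
        return False
    return pattern_labels[0] == "*" or pattern_labels[0] == host_labels[0]


def certificate_covers(cert_names: list[str], hostname: str) -> bool:
    """Return True if any name on the certificate covers the hostname."""
    return any(hostname_matches(name, hostname) for name in cert_names)


# The single certificate name is taken from the error described above;
# a real certificate may list additional names.
cert_names = ["cpcalendars.bigcityrealty.net"]
print(certificate_covers(cert_names, "teddyai.oiedu.co.uk"))  # prints False
```

Because none of the certificate's names cover the requested hostname, a browser refuses to treat the connection as private, whether the cause is a shared-hosting misconfiguration or an interception attempt.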

MLSecOps

MLSecOps is an AI tool designed to drive the field of MLSecOps forward through high-quality educational resources and tools. It focuses on traditional cybersecurity principles, emphasizing people, processes, and technology. The MLSecOps Community educates and promotes the integration of security practices throughout the AI & machine learning lifecycle, empowering members to identify, understand, and manage risks associated with their AI systems.

DryRun Security

DryRun Security is an AI-driven application security tool that provides Contextual Security Analysis to detect and prevent logic flaws, authorization gaps, IDOR, and other code risks. It offers features like code insights, natural language code policies, and customizable notifications and reporting. The tool benefits CISOs, security leaders, and developers by enhancing code security, streamlining compliance, increasing developer engagement, and providing real-time feedback. DryRun Security supports various languages and frameworks and integrates with GitHub and Slack for seamless collaboration.

Concentric AI

Concentric AI is a Managed Data Security Posture Management tool that utilizes Semantic Intelligence to provide comprehensive data security solutions. The platform offers features such as autonomous data discovery, data risk identification, centralized remediation, easy deployment, and data security posture management. Concentric AI helps organizations protect sensitive data, prevent data loss, and ensure compliance with data security regulations. The tool is designed to simplify data governance and enhance data security across various data repositories, both in the cloud and on-premises.

Dataminr

Dataminr is a leading provider of real-time event and risk detection. Its AI platform processes billions of public data units daily to deliver real-time alerts on high-impact events and emerging risks. Dataminr's products are used by businesses, public sector organizations, and newsrooms to plan for and respond to crises, manage risks, and stay informed about the latest events.

Dataminr

Dataminr is a leading AI company that provides real-time event, risk, and threat detection. Its revolutionary real-time AI Platform discovers the earliest signals of events, risks, and threats from within public data. Dataminr's products deliver critical information first—so organizations can respond quickly and manage crises effectively.

Graphio

Graphio is an AI-driven employee scoring and scenario builder tool that uses continuous, real-time scoring by AI agents to assess potential, predict flight risks, and identify future leaders. It replaces subjective evaluations with data-driven insights, aiming to reduce bias in talent management decisions such as promotions, layoffs, and succession planning. It offers compliance features and user-controlled rules to keep assessments aligned with legal and regulatory requirements. The platform also focuses on security, privacy, and personalized coaching to enhance employee engagement and reduce turnover.

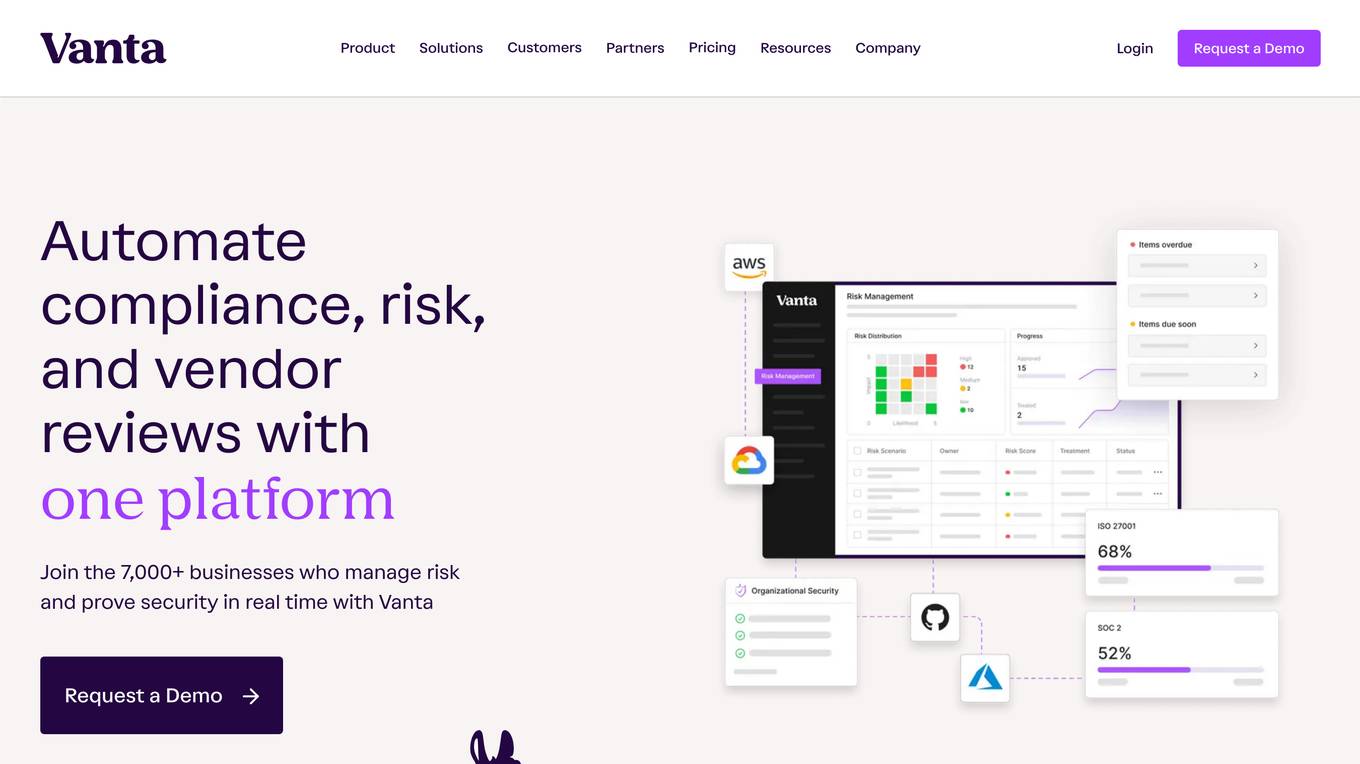

Vanta

Vanta is a trust management platform that helps businesses automate compliance, streamline security reviews, and build trust with customers. It offers a range of features to help businesses manage risk and prove security in real time, including:

* **Compliance automation:** Vanta automates up to 90% of the work for security and privacy frameworks, making it easy for businesses to achieve and maintain compliance.
* **Real-time monitoring:** Vanta provides real-time visibility into the state of a business's security posture, with hourly tests and alerts for any issues.
* **Holistic risk visibility:** Vanta offers a single view across key risk surfaces in a business, including employees, assets, and vendors, to help businesses identify and mitigate risks.
* **Efficient audits:** Vanta streamlines the audit process, making it easier for businesses to prepare for and complete audits.
* **Integrations:** Vanta integrates with a range of tools and platforms to help businesses automate security and compliance tasks.

CUBE3.AI

CUBE3.AI is a real-time crypto fraud prevention tool that utilizes AI technology to identify and prevent various types of fraudulent activities in the blockchain ecosystem. It offers features such as risk assessment, real-time transaction security, automated protection, instant alerts, and seamless compliance management. The tool helps users protect their assets, customers, and reputation by proactively detecting and blocking fraud in real-time.

Smaty.xyz

Smaty.xyz is a comprehensive platform that provides a suite of tools for code generation and security auditing. With Smaty.xyz, developers can quickly and easily generate high-quality code in multiple programming languages, ensuring consistency and reducing development time. Additionally, Smaty.xyz offers robust security auditing capabilities, enabling developers to identify and address vulnerabilities in their code, mitigating risks and enhancing the overall security of their applications.

Cyble

Cyble is a leading threat intelligence platform offering products and services recognized by top industry analysts. It provides AI-driven cyber threat intelligence solutions for enterprises, governments, and individuals. Cyble's offerings include attack surface management, brand intelligence, dark web monitoring, vulnerability management, takedown and disruption services, third-party risk management, incident management, and more. The platform leverages cutting-edge AI technology to enhance cybersecurity efforts and stay ahead of cyber adversaries.

Privado AI

Privado AI is a privacy engineering tool that bridges the gap between privacy compliance and software development. It automates personal data visibility and privacy governance, helping organizations to identify privacy risks, track data flows, and ensure compliance with regulations such as CPRA, MHMDA, FTC, and GDPR. The tool provides real-time visibility into how personal data is collected, used, shared, and stored by scanning the code of websites, user-facing applications, and backend systems. Privado offers features like Privacy Code Scanning, programmatic privacy governance, automated GDPR RoPA reports, risk identification without assessments, and developer-friendly privacy guidance.

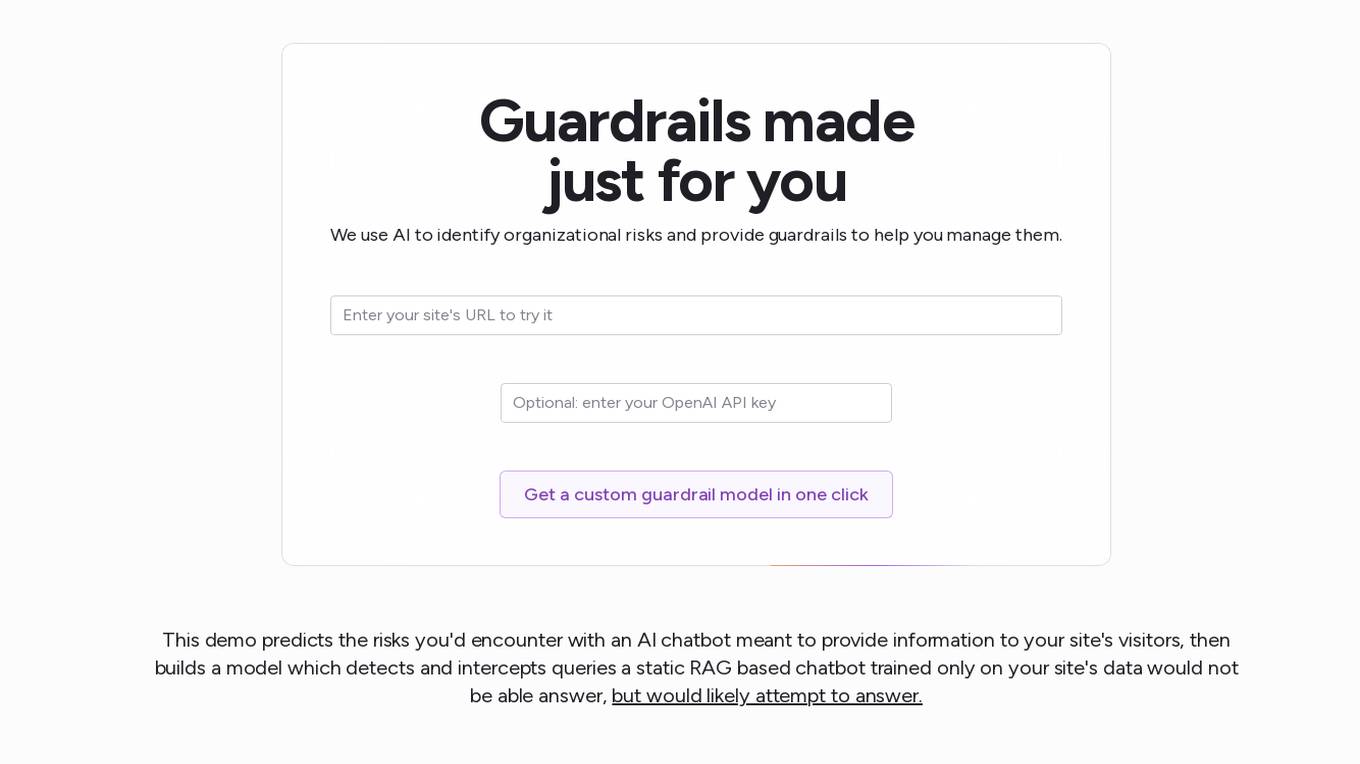

moderation.dev

moderation.dev is an AI tool that offers domain-specific guardrails to help organizations identify and manage risks efficiently. By leveraging AI technology, the tool provides custom guardrail models in just one click. It specializes in predicting risks associated with AI chatbots and creating models to intercept queries that a traditional chatbot might struggle to answer accurately.

Tenable AI Exposure

Tenable AI Exposure is an AI tool that helps organizations secure and understand their use of AI platforms. It provides visibility, context, and control to manage risks from enterprise AI platforms, enabling security leaders to govern AI usage, enforce policies, and prevent exposures. The tool allows users to track AI platform usage, identify and fix AI misconfigurations, protect against AI exploitation, and deploy quickly with industry-leading security for AI platform use.

Blackbird.AI

Blackbird.AI is a narrative and risk intelligence platform that helps organizations identify and protect against narrative attacks created by misinformation and disinformation. The platform offers a range of solutions tailored to different industries and roles, enabling users to analyze threats in text, images, and memes across various sources such as social media, news, and the dark web. By providing context and clarity for strategic decision-making, Blackbird.AI empowers organizations to proactively manage and mitigate the impact of narrative attacks on their reputation and financial stability.

Relyance AI

Relyance AI is a platform that offers 360 Data Governance and Trust solutions. It helps businesses safeguard against fines and reputation damage while enhancing customer trust to drive business growth. The platform provides visibility into enterprise-wide data processing, ensuring compliance with regulatory and customer obligations. Relyance AI uses AI-powered risk insights to proactively identify and address risks, offering a unified trust and governance infrastructure. It offers features such as data inventory and mapping, automated assessments, security posture management, and vendor risk management. The platform is designed to streamline data governance processes, reduce costs, and improve operational efficiency.

My Voice AI

My Voice AI is an advanced voice identity security infrastructure that provides privacy-preserving, real-time voice authentication and deepfake protection. It is designed to reduce fraud, impersonation, and identity risk in voice-based interactions by offering speaker verification, anti-spoofing, and deepfake detection capabilities. The platform operates as a voice identity layer integrated into existing infrastructure, offering enterprise-grade latency, privacy-first architecture, and deterministic behavior suitable for audits. My Voice AI is purpose-built for regulated environments, such as financial institutions, critical services, and governments, where identity assurance is crucial to mitigate operational risks.

Kodora AI

Kodora AI is an AI technology and advisory firm based in Australia that specializes in end-to-end AI services. It offers AI strategy development, use case identification, workforce AI training, and more. With a team of AI engineers and consultants, Kodora focuses on delivering practical outcomes for clients across various industries. The firm emphasizes deep expertise, a solution-focused approach, and a commitment to driving AI adoption and innovation.

Greip

Greip is an AI-powered fraud prevention tool that offers a range of services to detect and prevent fraudulent activities in payments, validate card and IBAN details, detect profanity in text, identify VPN/proxy connections, provide IP location intelligence, and more. It combines AI-driven transaction analysis with advanced technology to safeguard financial security and enhance data integrity. Greip's services are trusted by businesses worldwide for secure and reliable protection against fraud.

Netify

Netify provides network intelligence and visibility. Its solution stack starts with a Deep Packet Inspection (DPI) engine that passively collects data on the local network. This lightweight engine identifies applications, protocols, hostnames, encryption ciphers, and other network attributes. The software can be integrated into network devices for traffic identification, firewalling, QoS, and cybersecurity. Netify's Informatics engine collects data from local DPI engines and uses the power of a public or private cloud to transform it into network intelligence. From device identification to cybersecurity risk detection, Informatics provides a way to take a proactive approach to manage network threats, bottlenecks, and usage. Lastly, Netify's Data Feeds provide data to help vendors understand how applications behave on the Internet.

0 - Open Source AI Tools

20 - OpenAI GPTs

Security Testing Advisor

Ensures software security through comprehensive testing techniques.

Securia

AI-powered audit ally. Enhance cybersecurity effortlessly with intelligent, automated security analysis. Safe, swift, and smart.

SSLLMs Advisor

Helps you build logic security into your GPTs' custom instructions. Documentation: https://github.com/infotrix/SSLLMs---Semantic-Secuirty-for-LLM-GPTs

RobotGPT

Expert in ethical hacking, leveraging https://pentestbook.six2dez.com/ and https://book.hacktricks.xyz resources for CTFs and challenges.

STO Advisor Pro

Advisor on Security Token Offerings, providing insights without financial advice. Powered by Magic Circle.

Thinks and Links Digest

Archive of content shared in Randy Lariar's weekly "Thinks and Links" newsletter about AI, Risk, and Security.

USA Web3 Privacy & Data Law Master

Expert in answering Web3 privacy and data security law queries for small businesses in the USA.

Fluffy Risk Analyst

A cute sheep expert in risk analysis, providing downloadable checklists.