Best AI tools for Host Locally

20 - AI tool Sites

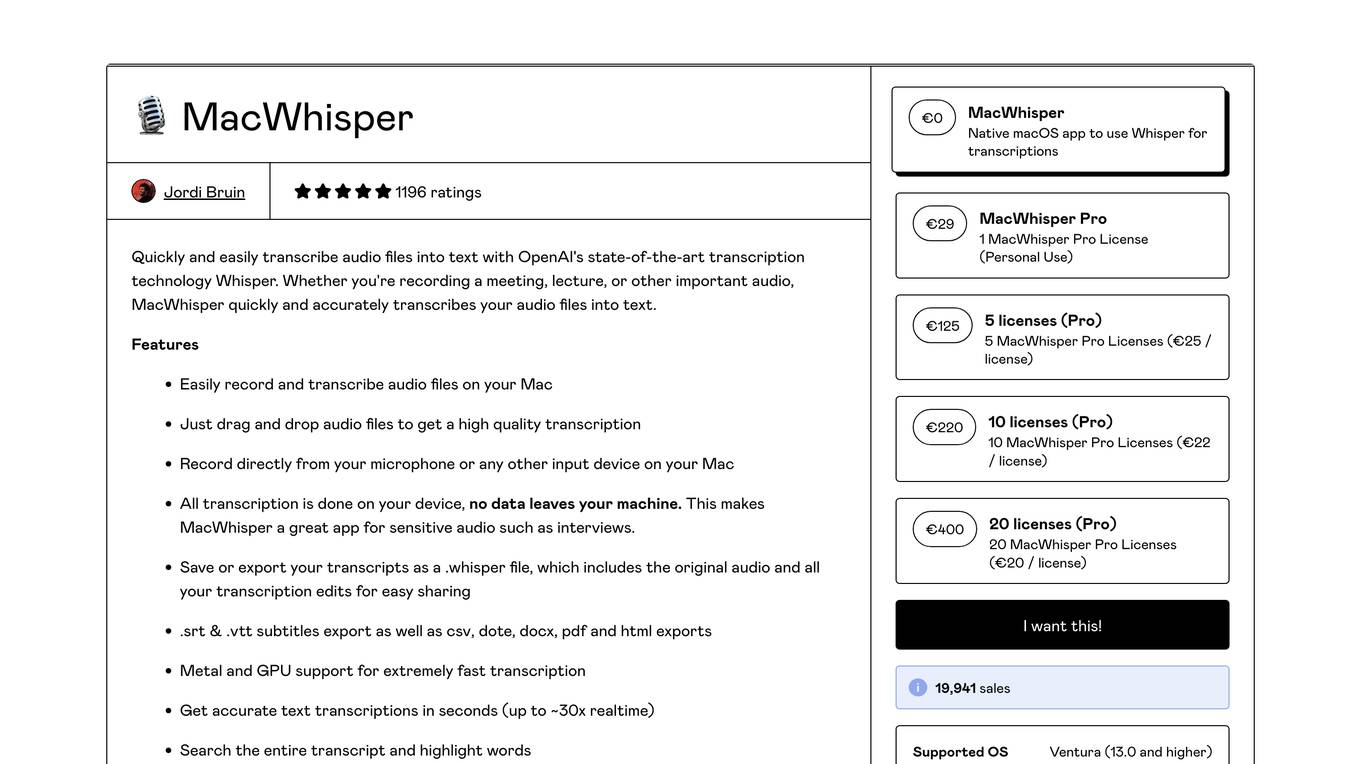

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

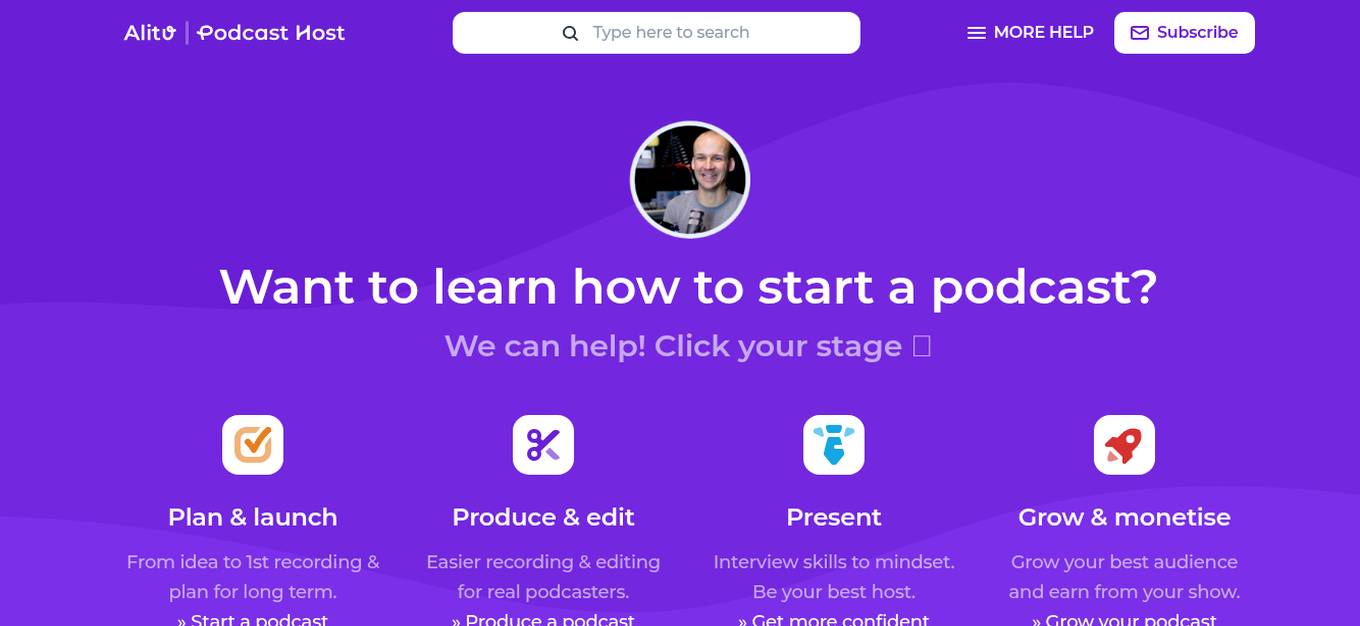

The Podcast Host

The Podcast Host is an AI-powered platform designed to assist users in launching, growing, and managing their podcasts efficiently. With features like Podcast Editor for quick editing, Podcast Planner for show planning assistance, and Podcraft Academy for personalized guidance, the platform aims to streamline the podcasting process. The website offers resources, tools, and expert advice to help podcasters at every stage of their journey, from recording and editing to audience growth and monetization.

Be.Live

Be.Live is a livestreaming studio that allows users to create beautiful livestreams and repurpose them into shorter videos and podcasts. It enables users to host live talk shows, invite guests on screen, and customize their streams with branding elements. With features like screen sharing, on-screen elements, and a mobile streaming app, Be.Live aims to help coaches, hosts, infopreneurs, and influencers consistently produce and repurpose video content to engage their audience effectively.

CodeDesign.ai

CodeDesign.ai is an AI-powered website builder that helps users create and host websites in minutes. It offers a range of features, including a drag-and-drop interface, AI-generated content, and responsive design. CodeDesign.ai is suitable for both beginners and experienced users, and it offers a free plan as well as paid plans with additional features.

10Web

10Web is an AI-powered website builder that helps businesses create professional websites in minutes. With 10Web, you can generate tailored content and images based on your answers to a few simple questions. You can also choose from a library of pre-made layouts and customize your website with its intuitive drag-and-drop editor. 10Web also offers a range of hosting services, so you don't have to worry about finding a separate hosting provider.

Wave.video

Wave.video is an online video editor and hosting platform that allows users to create, edit, and host videos. It offers a wide range of features, including a live streaming studio, video recorder, stock library, and video hosting. Wave.video is easy to use and affordable, making it a great option for businesses and individuals who need to create high-quality videos.

Elementor

Elementor is a leading website builder platform for professionals on WordPress. It empowers users to create, manage, and host stunning websites with ease. Elementor's drag-and-drop interface, extensive library of widgets and templates, and seamless integration with WordPress make it an ideal choice for web designers, developers, and marketers alike. With Elementor, users can build professional-grade websites without the need for coding or technical expertise.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

Contrast

Contrast is a webinar platform that uses AI to help you create engaging and effective webinars. With Contrast, you can easily create branded webinars, add interactive elements like polls and Q&A, and track your webinar analytics. Contrast also offers a variety of tools to help you repurpose your webinar content, such as a summary generator, blog post creator, and clip maker.

Key.ai

Key.ai is an AI-powered professional networking platform that leverages artificial intelligence to enhance the networking experience. It eliminates friction in professional networking by understanding users' goals and surfacing valuable connections and opportunities. Key.ai offers AI-powered conversation starters, timely follow-ups, and contextual insights to facilitate meaningful relationships and create impactful outcomes. The platform enables users to create branded communities, host events, manage members efficiently, and gain actionable insights to grow their network. With features like smart event setup, automated reminders, and real-time surveys, Key.ai aims to revolutionize professional networking by making it more personalized and efficient.

Ausha

Ausha is an advanced podcast marketing solution offering tools to distribute, promote, analyze, and rank higher on platforms like Apple Podcasts and Spotify. It provides features such as AI-powered transcription, promotion statistics, podcast search optimization, hosting, distribution, and monetization. Ausha caters to independent podcasters, companies, agencies, and podcast networks, helping creators grow their audience from the first listeners to the next thousand. With a focus on visibility and audience engagement, Ausha offers personalized insights, keyword optimization, episode tracking, and competitor analysis to enhance podcast performance and reach.

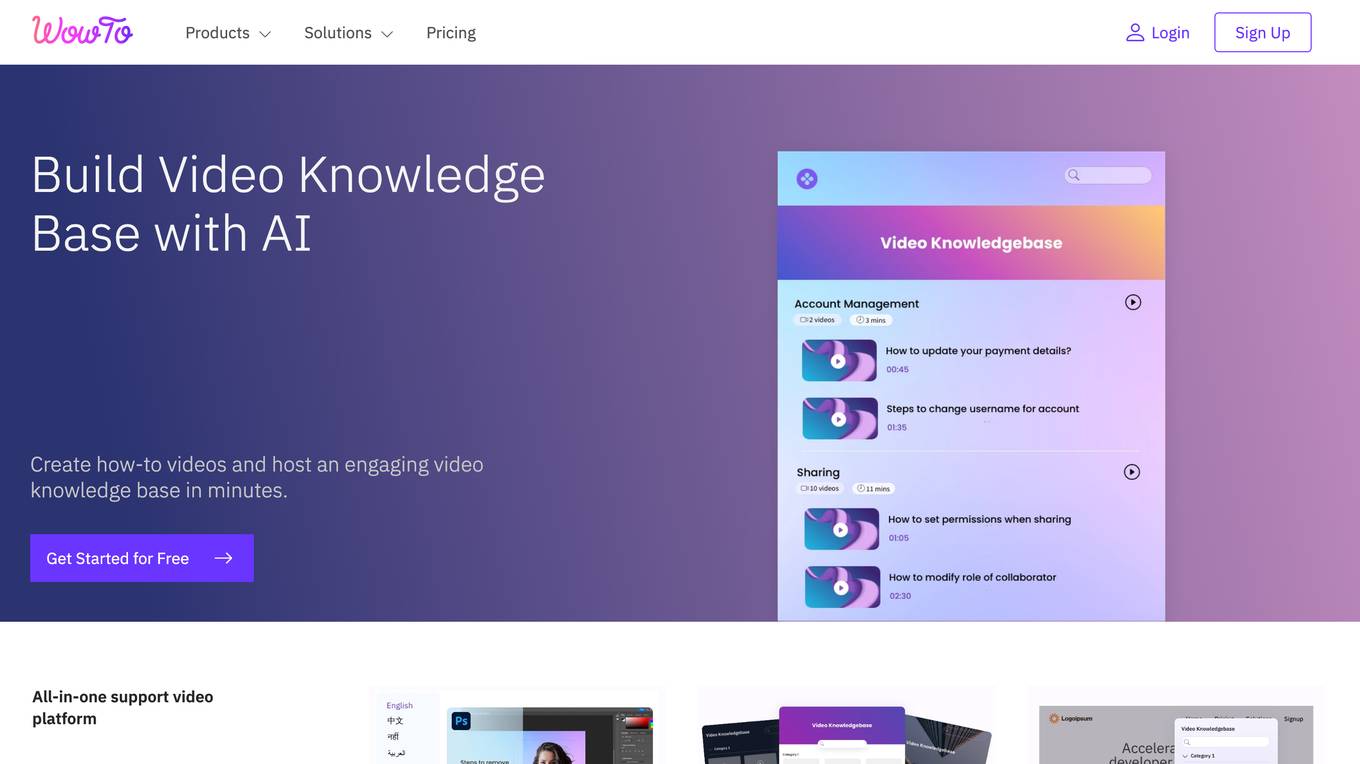

WowTo

WowTo is an all-in-one support video platform that helps businesses create how-to videos, host video knowledge bases, and provide in-app video help. With WowTo's AI-powered video creator, businesses can easily create step-by-step how-to videos without any prior design expertise. WowTo also offers a variety of pre-made video knowledge base layouts to choose from, making it easy to create a professional-looking video knowledge base that matches your brand. In addition, WowTo's in-app video widget allows businesses to provide contextual video help to their visitors, improving the customer support experience.

The Cognitive Revolution

The Cognitive Revolution is a weekly podcast hosted by Nathan Labenz that delves into the transformative impact AI will have in the near future. The show features in-depth expert interviews, 'AI Scouting Reports' on critical topics, and discussions with AI innovators. Covering a wide range of AI-related subjects, the podcast aims to provide exclusive insights from AI trailblazers and offer analysis on the forefront of the AI revolution.

The Video Calling App

The Video Calling App is an AI-powered platform designed to revolutionize meeting experiences by providing laser-focused, context-aware, and outcome-driven meetings. It aims to streamline post-meeting routines, enhance collaboration, and improve overall meeting efficiency. With powerful integrations and AI features, the app captures, organizes, and distills meeting content to provide users with a clearer perspective and free headspace. It offers seamless integration with popular tools like Slack, Linear, and Google Calendar, enabling users to automate tasks, manage schedules, and enhance productivity. The app's user-friendly interface, interactive features, and advanced search capabilities make it a valuable tool for global teams and remote workers seeking to optimize their meeting experiences.

AI Advances

AI Advances is a platform dedicated to democratizing access to artificial intelligence (AI) knowledge and tools. The website aims to empower individuals from all backgrounds to build their own AI systems, address unique challenges, and improve their lives. By bridging the gap and leveling the playing field, AI Advances envisions a future where AI is as ubiquitous and essential as reading and writing once were. The platform provides educational resources, tools, and a supportive community to make democratized AI a reality.

Satellitor

Satellitor is an AI-powered SEO tool that helps businesses create and manage SEO-optimized blogs. It automates the entire process of content creation, publishing, and ranking, freeing up business owners to focus on other aspects of their business. Satellitor's AI-generated content is of high quality and adheres to Google's best practices, ensuring that your blog ranks well in search results and attracts organic traffic to your website.

n8n

n8n is a powerful workflow automation tool that offers advanced AI capabilities. It is a popular platform for technical teams to automate workflows, integrate various services, and build autonomous agents. With over 400 integrations, n8n enables users to save time, streamline operations, and enhance security through AI-driven processes. The tool supports self-hosting and external libraries, and offers enterprise-ready solutions for scaling operations. n8n empowers users to code iteratively, explore advanced AI features, and create complex workflows with ease.

Podcastle

Podcastle is an all-in-one podcasting software that empowers creators of all backgrounds and experience levels with an intuitive, AI-powered platform. It offers a wide range of features, including a recording studio, audio editor, video editor, AI-generated voices, and hosting hub, making it easy to create, edit, and publish high-quality podcasts and videos. Podcastle is designed to be user-friendly and accessible, with no prior experience or technical expertise required.

GPT Engineer

GPT Engineer is an AI tool designed to help users build web applications 10x faster by chatting with AI. Users can sync their projects with GitHub and deploy them with a single click. The tool offers features like displaying top stories from Hacker News, creating landing pages for startups, tracking crypto portfolios, managing startup operations, and building front-end with React, Tailwind & Vite. GPT Engineer is currently in beta and aims to streamline the web development process for users.

n8n

n8n is a powerful workflow automation tool that offers advanced AI capabilities. It is a popular platform for technical teams to automate workflows, integrate various services, and build autonomous agents. With over 400 integrations, n8n enables users to save time, streamline operations, and enhance security through AI-powered solutions. The tool supports self-hosting and external libraries, and provides a user-friendly interface for both coding and non-coding users.

1 - Open Source AI Tools

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, a Discord community, guides, and contribution opportunities. It consists of a Flask app, a Chrome extension, a script for creating the similarity search index, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

20 - OpenAI GPTs

Escape Room Host

Let's go on an Escape Room adventure! Do you have what it takes to escape?

Impractical Jokers: Shark Tank Edition Game

Host a comedic game show of absurd inventions!

Game Night (After Dark)

Your custom adult game night host! It learns your group's details for a tailored, lively experience. With a focus on sophistication and humor, it creates a safe, fun atmosphere, keeping up with the latest trends in adult entertainment.

Sports Nerds Trivia MCQ

I host a diverse range of sports trivia. Prompt a difficulty to begin.

Homes Under The Hammer Bot

Consistent property auction game host with post-purchase renovation insights.