Best AI tools for formatting tables

20 AI tool sites

Tablesmith

Tablesmith is a free, privacy-first spreadsheet automation tool. Users can build reusable data flows and sort, filter, group, format, or split data across files and sheets based on cell values. It is designed to be easy to learn and use, with a focus on privacy and cross-platform compatibility. Tablesmith also offers an AI autofill feature that suggests and fills in information based on the user's prompt.

MD Editor

MD Editor is an AI-powered Markdown editor designed for technical writers. It offers intelligent suggestions, formatting assistance, and code highlighting to streamline the writing process. Its features include article management, a full-featured editor, syncing and sharing, and a customizable interface, all aimed at improving productivity and the quality of technical writing. Users can import articles, generate drafts with AI, write from scratch, add code snippets, tables, images, and media, dictate articles using speech recognition, and view article metrics. The platform supports exporting to multiple formats and publishing to various platforms.

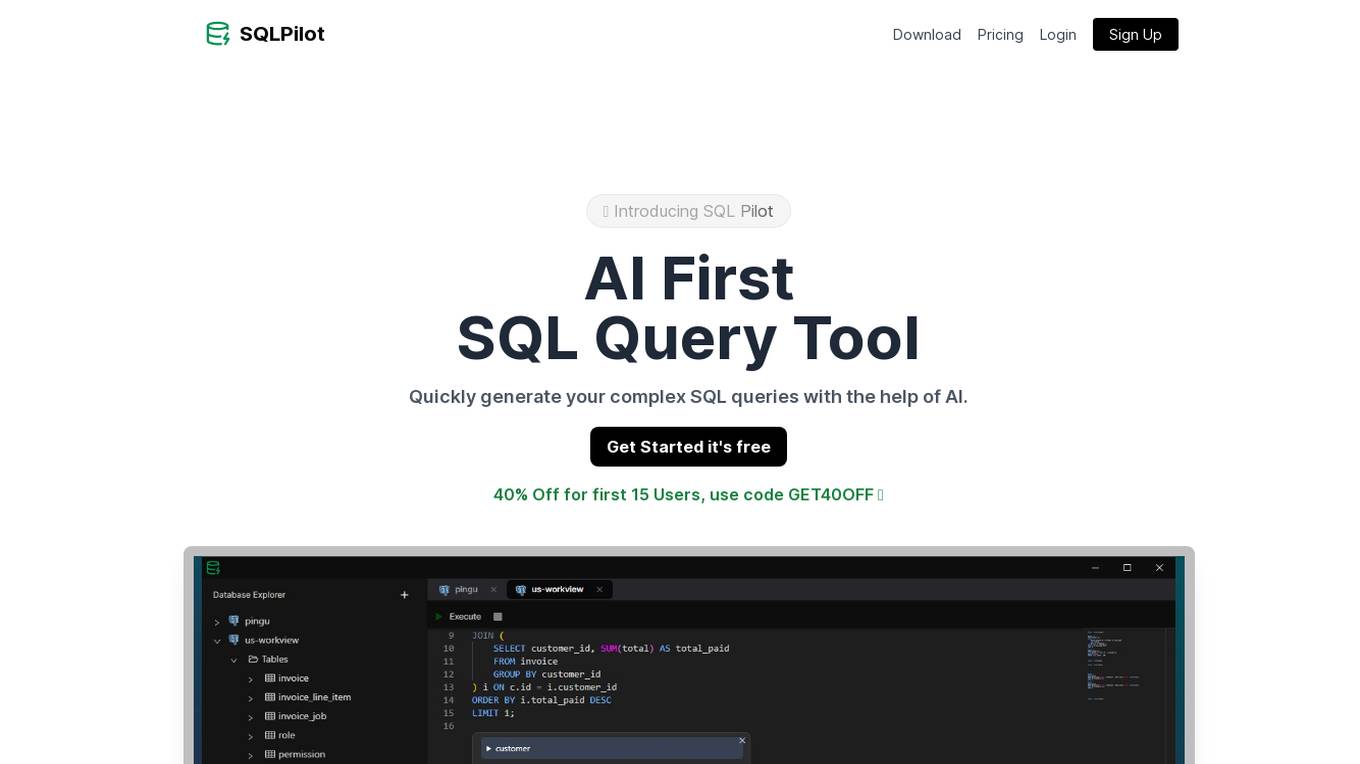

SQLPilot

SQLPilot is an AI-first SQL editor that helps users quickly generate complex SQL queries. The tool supports multiple GPT models, offers SQL autocomplete, states that it does not store user data, and lets users download query results as CSV files. Users write a prompt in natural language, mention the relevant tables, and the AI model generates the query with the necessary schema context. User testimonials highlight the tool's efficiency, accuracy, and time savings in database work.

Wonder Tales Blog

Wonder Tales Blog is a website that lets users create personalized fairy tales for children. It provides templates and illustrations for building unique stories, which can be listened to as audio or downloaded as PDFs. The site also offers resources for parents and educators, including tips on using fairy tales to teach children life lessons.

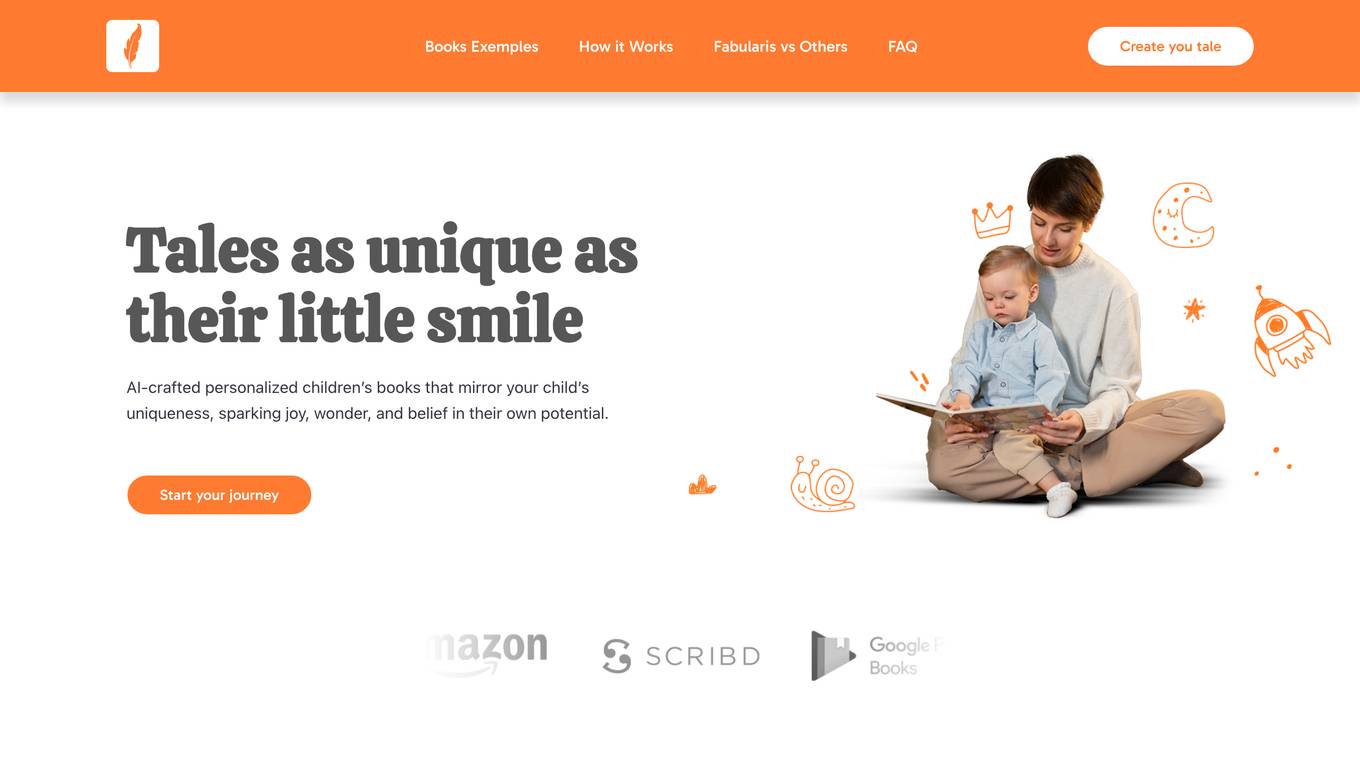

Fabularis

Fabularis is an AI-powered platform that creates personalized children's books tailored to each child's characteristics. From a few basic details entered by the user, the system writes a narrative built around the child, aiming to spark joy, wonder, and belief in their own potential. The platform offers a range of example AI-generated books; users can choose the values they want the story to emphasize and edit the text to add a personal touch. The finished storybook is delivered as a PDF, and a printed copy can be purchased. Fabularis emphasizes full personalization, premium illustrations, and educational value.

AI Subtraction Learning Helper

AI Subtraction Learning Helper is an AI tool that helps students learn subtraction through printable subtraction tables, charts, and worksheets. It provides free resources in PDF and JPG formats for children from kindergarten through 4th grade, including colored subtraction charts, number decomposition solutions, and subtraction games. Parents and teachers can use the tool to customize practice sessions and track students' progress in mastering basic subtraction.

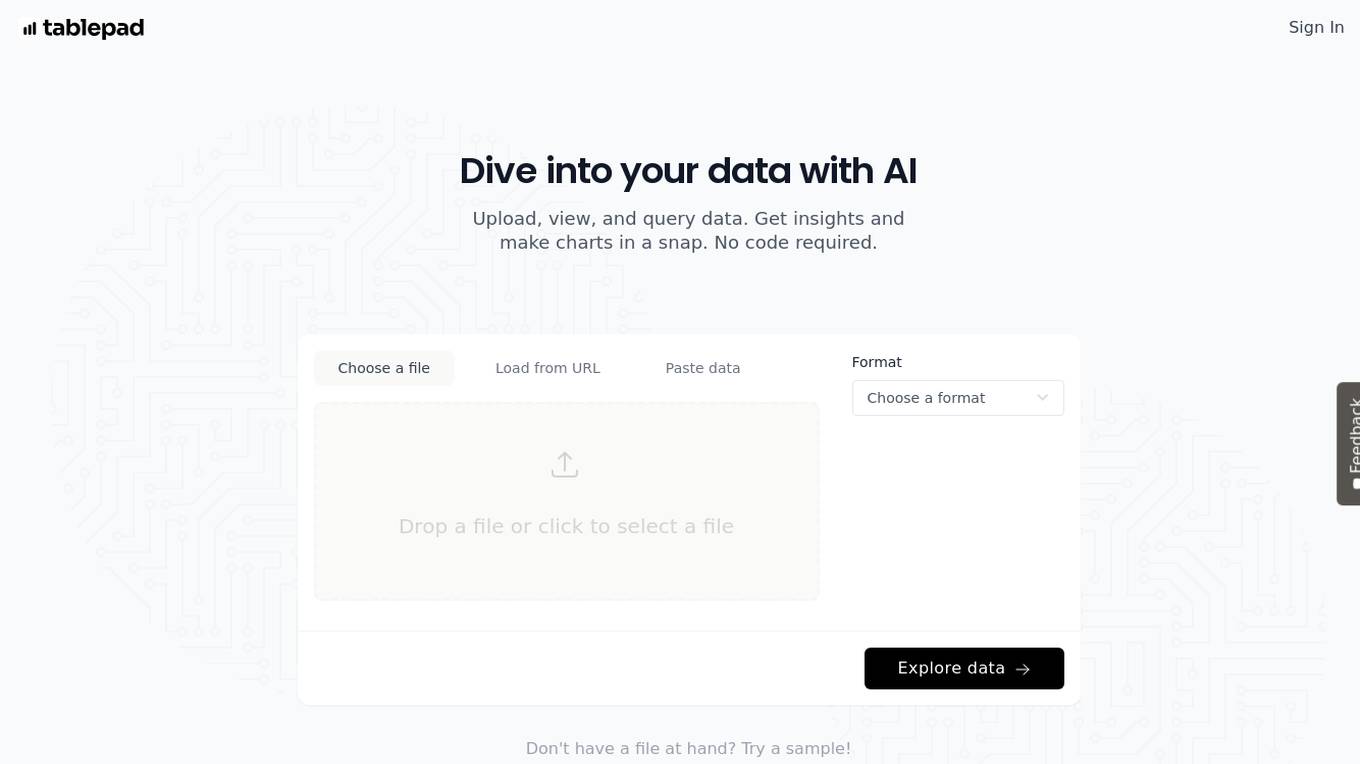

Tablepad

Tablepad is an AI-powered data analytics tool that lets users upload, view, and query data. Users ask questions in plain English, and Tablepad answers by generating graphs and charts, so no coding skills are needed. The tool supports various file formats and is designed to simplify data exploration and visualization.

Plnar

Plnar is an AI-powered smartphone imagery platform that turns smartphone photos into accurate 3D models, precise measurements, and fast estimates. Users can capture spatially accurate imagery without special equipment, which enables self-service for policyholders and provides field tools for adjusters. From a single smartphone image, Plnar produces data in a standardized format that can be used for claims, underwriting, and other workflows. The platform combines these services into one solution, reducing inconsistencies and manual data entry.

Format Magic

Format Magic is an AI-powered, one-click formatting platform that turns plain text into formatted documents within seconds. Users select a template, paste their text, and the AI automatically applies headings and styles to produce a professional resume or other document. It is aimed at people who want to improve the visual presentation of their writing without manual formatting.

Resumy

Resumy is an AI-powered resume builder that uses OpenAI's GPT-4 language model to generate polished resumes. It analyzes a user's work experience, skills, and achievements to create a professional-looking resume in minutes. Resumy also offers proven templates and personalized help from resume-writing experts.

AI Image Translator

AI Image Translator uses AI-powered OCR to translate the text in images while preserving the original formatting. It supports more than 130 languages and offers format preservation, background restoration, multi-language translation, intelligent text placement, and high-quality image export. Typical uses include translating e-commerce product images, app and software screenshots, marketing and advertising materials, technical documents, and educational content.

Letterfy

Letterfy is an AI-powered cover letter generator that helps job seekers create high-quality cover letters quickly and easily. With Letterfy, you can generate a professional cover letter in minutes, tailored to the specific job you're applying for. Letterfy's AI technology analyzes your resume and LinkedIn profile to identify your skills and experience, and then generates a cover letter that highlights your most relevant qualifications. You can also customize your cover letter with your own personal touch, and download it in PDF format.

Editby

Editby is an AI-powered content creation tool for producing SEO-optimized content intended to rank on Google and social media. Its features include AI-powered recommendations, trending content suggestions, and plagiarism detection. Editby also integrates with a variety of platforms for publishing content.

HooksAI

HooksAI is an AI-powered tool designed to help users get good prints on the first try. It analyzes and optimizes printer settings so that users can get high-quality results without manual adjustment and trial and error. It is aimed at professional photographers, graphic designers, and hobbyists.

BugFree.ai

BugFree.ai is an AI-powered platform for practicing system design and behavioral interviews, similar in spirit to LeetCode. To help users prepare for technical interviews, it offers mock interviews, real-time feedback, and personalized study plans, with the goal of improving problem-solving skills and confidence with complex interview questions.

Bibit AI

Bibit AI is an AI tool for real estate marketing and sales. It helps agents create listings, property descriptions, and other property content, among other features. Its makers describe it as the world's first AI for real estate, built to boost efficiency and simplify tasks such as listing creation and content generation.

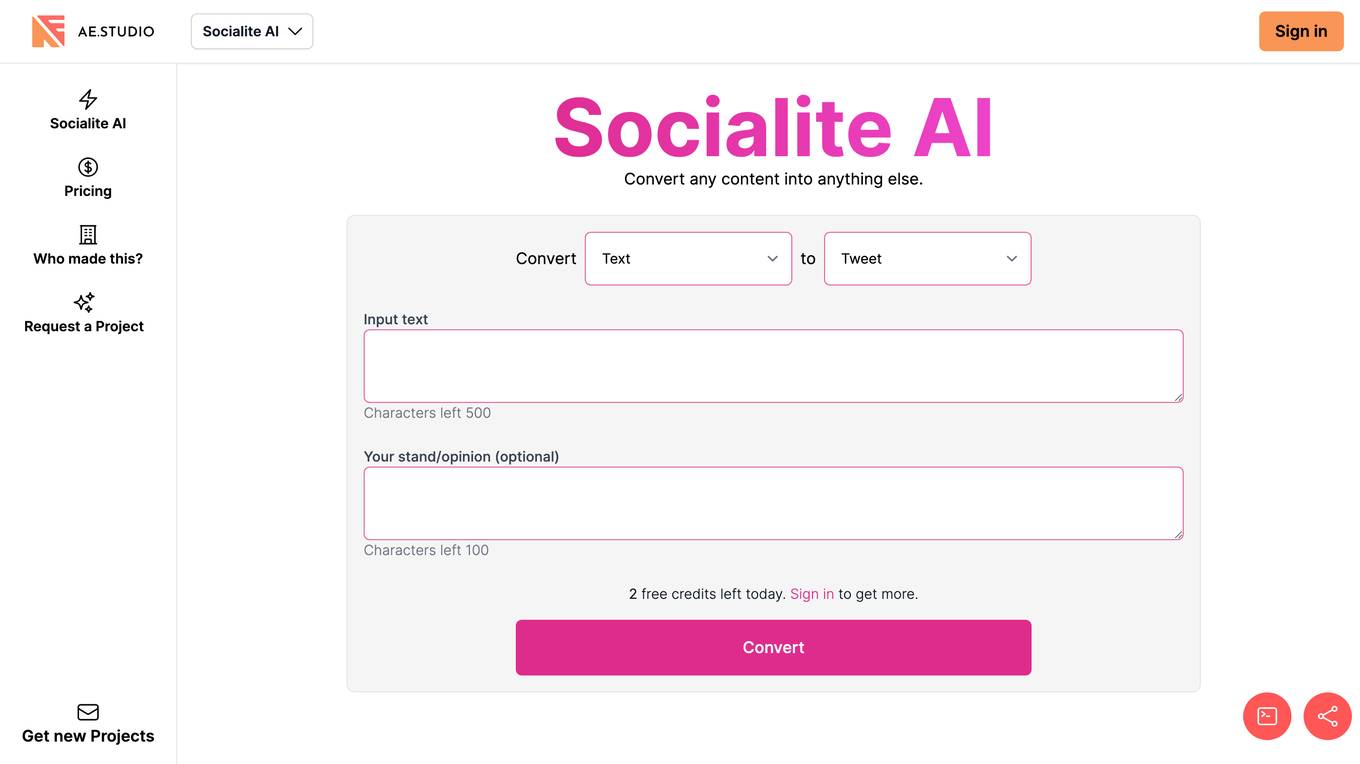

Socialite AI

Socialite AI is an AI tool that converts text from one form of content into another. Users enter text on the platform and transform it into different formats, either to create new content or to repurpose existing material. The tool aims to streamline content creation through a simple, user-friendly interface that also helps users generate new ideas.

PDFMerse

PDFMerse is an AI-powered tool for extracting data from PDF documents. It automates the extraction process, aims for high accuracy, and exports results in CSV, JSON, and Excel formats. The tool is designed to reduce document processing time and operational costs so that users can focus on higher-value tasks.

LogoliveryAI

LogoliveryAI is a free AI-powered logo generator that produces logos in SVG format. The platform is easy to use and offers a variety of customization options. It is aimed at entrepreneurs, small businesses, and anyone else who needs a professional-looking logo.

Watto AI

Watto AI is a platform that offers Conversational AI solutions to businesses, allowing them to build AI voice agents without the need for coding. The platform enables users to collect leads, automate customer support, and facilitate natural interactions through AI voice bots. Watto AI caters to various industries and scenarios, providing human-like conversational AI for mystery shopping, top-quality customer support, and restaurant assistance.

0 open-source AI tools

20 OpenAI GPTs

Assistente Codificação TUSS Exames com OCR

Uses OCR on Portuguese-language medical test documents to assign TUSS exam codes, and outputs the results in table format.

MarkDown変換くん

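Correctly converts the text you enter into Markdown, output as code. Just enter your text! It also arranges the layout so that it is easy for readers to follow. If it stops partway through, just say "Please continue."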

PDF and Template Formatter

Assists with PDF and template formatting for a professional look.

Classical Music Audition Finder

Finds classical music career opportunities and presents them in table format.

GLOBAL WAR INFO

Gathers information on wars around the world and presents it in table format, along with donation options.

Overleaf GPT

Overleaf GPT is an interactive assistant for writing detailed Overleaf documents. It writes complete LaTeX reports tailored to the user's requirements, working from an initial outline of the document structure through iterative development of the content, and applies best-practice LaTeX formatting throughout.