Best AI tools for: Find Datasets

20 - AI tool Sites

Prolific

Prolific is a platform that allows users to quickly find research participants they can trust. It offers a diverse participant pool that includes domain experts, along with API integration. Prolific delivers high-quality, human-powered datasets in less than 2 hours and is trusted by over 3,000 organizations. The platform is designed for ease of use, with self-serve options and scalability. It provides rich, accurate, and comprehensive responses from engaged participants, verified through manual and algorithmic quality checks.

iLovePhD

iLovePhD is a comprehensive research platform that serves as a one-stop solution for all research needs. It offers a wide range of services including access to journals, postdoc opportunities, scholarships, IoT security tools, job listings, datasets, and UGC-CARE journals. The platform also features ChatGPT for plagiarism detection and artificial intelligence applications. iLovePhD aims to assist researchers and academicians in publishing impactful work and staying updated with the latest trends in the academic world.

Prolific

Prolific is a platform that helps users quickly find research participants they can trust. It offers free representative samples, a participant pool of domain experts, the ability to bring your own participants, and an API for integration. Prolific ensures data quality through bank-grade ID checks, ongoing checks to identify bots, and a policy of no AI participants. The platform allows users to easily set up accounts, access rich and comprehensive responses, and scale research projects efficiently.

Voxel51

Voxel51 is an AI tool that provides open-source computer vision tools for machine learning. It offers solutions for various industries such as agriculture, aviation, driving, healthcare, manufacturing, retail, robotics, and security. Voxel51's main product, FiftyOne, helps users explore, visualize, and curate visual data to improve model performance and accelerate the development of visual AI applications. The platform is trusted by thousands of users and companies, offering both open-source and enterprise-ready solutions to manage and refine data and models for visual AI.

Dimensions AI

Dimensions AI is an advanced scientific research database that provides a suite of research applications and time-saving solutions for intelligent discovery and faster insight. It hosts the largest collection of interconnected global research data, including publications, clinical trials, patents, policy documents, grants, datasets, and online citations. The platform offers easy-to-understand visualizations, purpose-built applications, and integrated AI technology to speed up research interpretation and analysis. Dimensions is designed to propel research by connecting the dots across the research ecosystem and saving researchers hours of time.

Kanaries

Kanaries is an augmented analytics platform that uses AI to automate data exploration and visualization. Its features include:

* **RATH:** An AI-powered engine that automatically generates insights and recommendations based on your data.
* **Graphic Walker:** A visual analytics tool for exploring your data through charts, graphs, and maps.
* **Data Painter:** A data cleaning and transformation tool that makes it easy to prepare your data for analysis.
* **Causal Analysis:** A tool that helps you identify and understand the causal relationships between variables in your data.

Kanaries is designed to be easy to use, even for users with no prior experience in data analysis, and it scales to large datasets. It can be used by businesses of all sizes and is particularly well suited to organizations looking to improve their data-driven decision-making.

Datagrid

Datagrid is an AI-powered platform that acts as your co-worker, helping you find, enrich, and delegate information. It harnesses the power of AI to enrich datasets, access knowledge, execute tasks, and automate follow-ups. Datagrid AI Agents can free your team from the burden of enriching messy data, allowing them to focus on revenue-generating tasks. The platform offers features like AI enrichment, data processing, long-form content writing, generating insights, and creating a knowledge base.

NSFW AI Chat

NSFW AI Chat is a website that provides access to AI chatbots designed for adult audiences to engage in sexual or explicit conversations. These chatbots are trained on adult-themed data sets and are intended for sexual roleplay, sexting, and exploration of sexual fantasies. The website also includes a blog with articles on various topics related to NSFW AI chatbots, such as their benefits, risks, and how to use them.

Eightfold Talent Intelligence

Eightfold Talent Intelligence is an AI platform that offers a comprehensive suite of solutions for talent acquisition, talent management, workforce exchange, and resource management. Powered by deep-learning AI and global talent data sets, the platform helps organizations realize the full potential of their workforce by providing skills-driven insights and enabling better talent decisions. From finding and developing talent to matching employees with the right opportunities, Eightfold's AI technology revolutionizes the world of work by connecting people with possibilities.

Hackers.dev

Hackers.dev was an AI-powered platform that provided skill-based job recommendations for developers. The platform utilized an embeddings model fine-tuned on a dataset of 100,000 developer jobs to offer personalized job suggestions. Unfortunately, the website has been discontinued, but it previously connected developers with over 8,000 job opportunities from 500+ companies.

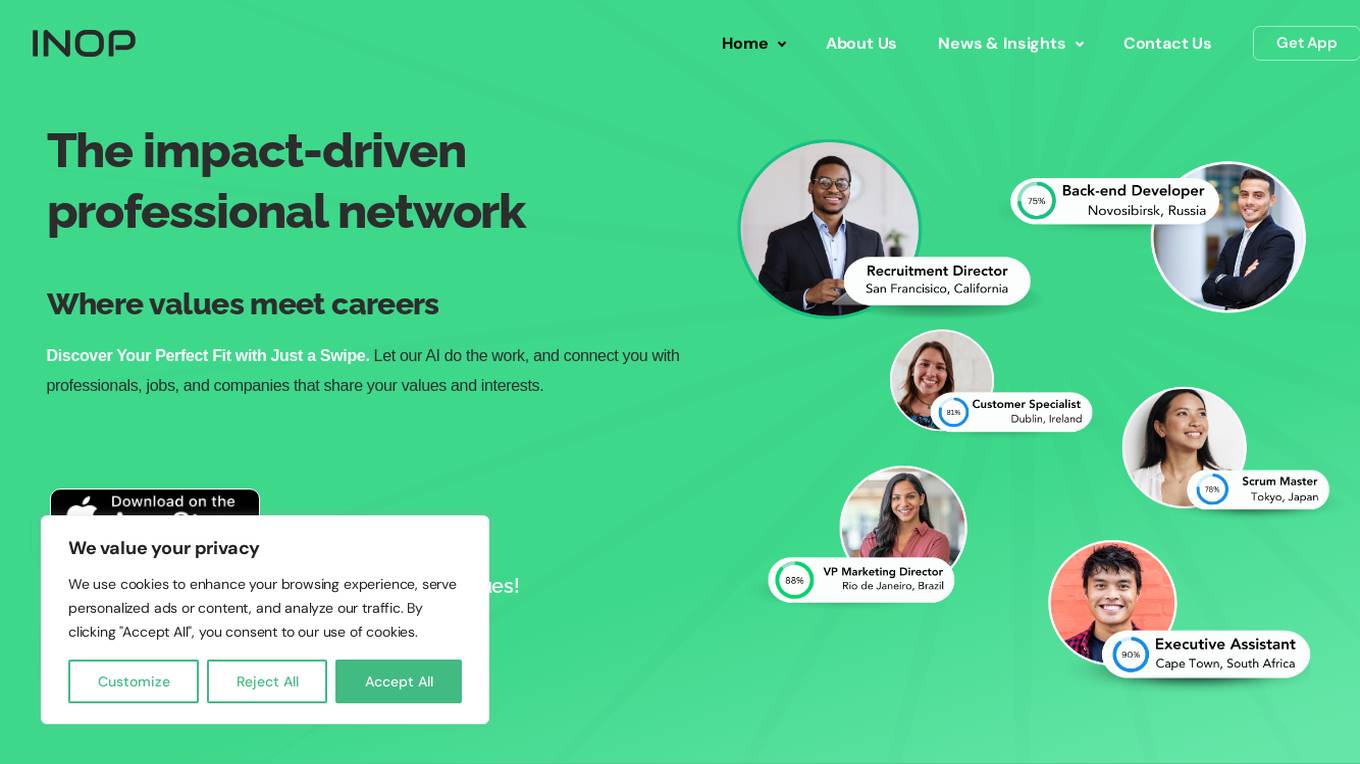

INOP

INOP is an impact-driven professional network that uses advanced AI matching algorithms to connect professionals with like-minded individuals, job opportunities, and companies that share their values and interests. The platform offers personalized job alerts, geolocation features, and actionable compensation insights. INOP goes beyond traditional networking platforms by providing rich enterprise-level insights on company culture, values, reputation, and ESG data sets. Users can access salary benchmarks, career path insights, and skills benchmarking to make informed career decisions.

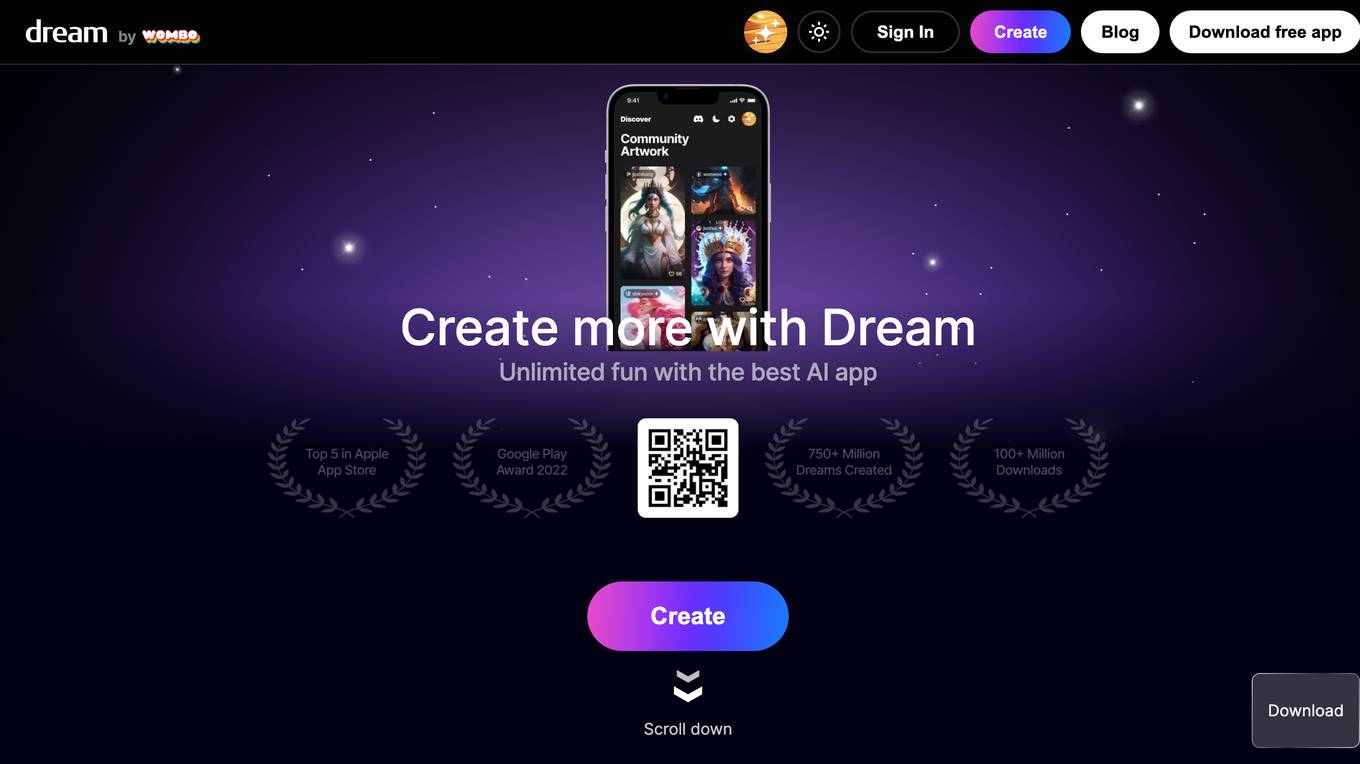

Dream by WOMBO

Dream by WOMBO is an AI-powered art generator that allows users to create unique and stunning images from text prompts. With its advanced algorithms and vast dataset of images, Dream by WOMBO can transform words into captivating visual masterpieces. Whether you're an artist, designer, or simply someone who appreciates the beauty of art, Dream by WOMBO empowers you to unleash your creativity and explore the limitless possibilities of AI-generated imagery.

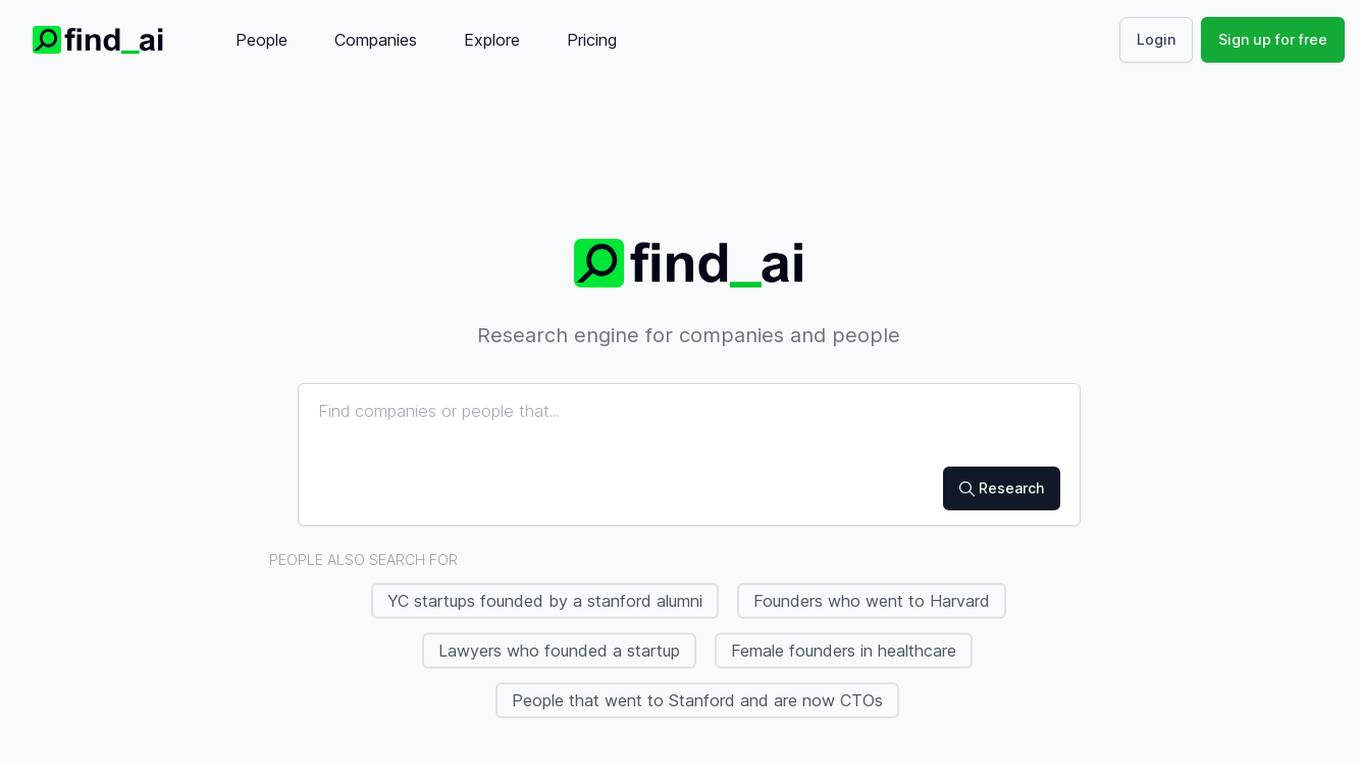

Find AI

Find AI is an AI-powered search engine that provides users with advanced search capabilities to unlock contact details and gain more accurate insights. The platform caters to individuals and companies looking to research people, companies, startups, founders, and more. Users can access email addresses and premium search features to explore a wide range of data related to various industries and sectors. Find AI offers a user-friendly interface and efficient search algorithms to deliver relevant results in a timely manner.

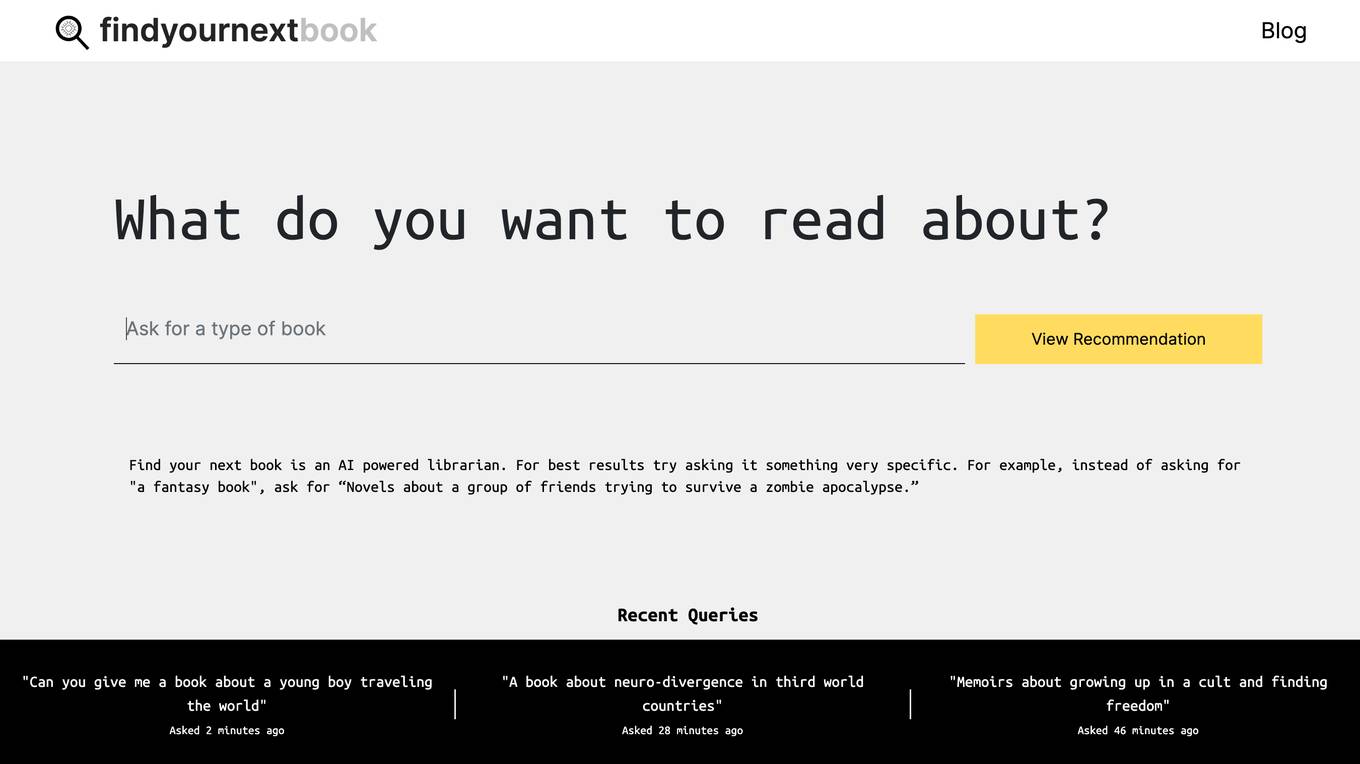

Find your next book

Find your next book is an AI-powered librarian that provides personalized book recommendations based on your preferences. It uses advanced algorithms to analyze your reading history, interests, and other factors to suggest books that you're likely to enjoy. The platform offers a wide range of genres and authors to choose from, making it easy to find your next favorite read.

Find Your AIs

Find Your AIs is an AI directory website that showcases a wide range of AI tools and applications. It offers a platform for users to explore and discover various AI-powered solutions across different categories such as digital wellness, marketing, text-to-image generation, resume customization, and more. The website aims to connect users with innovative AI technologies to enhance their daily lives and work efficiency.

Find My Remote

Find My Remote is an AI-powered job search platform that streamlines job hunting by using artificial intelligence to find and structure job postings from various ATS platforms. Users can set their job preferences, receive personalized job matches, and save time by applying to curated job listings. The platform offers exclusive job opportunities not typically found on popular job search websites like LinkedIn. With features such as job discovery, application tracking, and a faster application process, Find My Remote aims to revolutionize the way job seekers find and apply for jobs.

Find New AI

Find New AI is a comprehensive platform offering a variety of AI tools and efficiency solutions for different purposes such as SEO, content creation, marketing, link building, image manipulation, and more. The website provides reviews, tutorials, and guides on utilizing AI software effectively to enhance productivity and creativity in various domains.

Find My Size

Find My Size is a web application that provides personalized size recommendations for exclusive deals at hundreds of top retailers. Users can input their measurements and preferences to receive tailored suggestions for clothing items that will fit them perfectly. The platform aims to enhance the online shopping experience by helping customers find the right size and style without the need for multiple returns. Find My Size collaborates with various retailers to offer a wide range of products across different categories, including active & sportswear, young contemporary, business & workwear, lingerie & sleepwear, outerwear, maternity wear, plus size apparel, and swimwear.

PimEyes

PimEyes is an online face search engine that uses face recognition technology to find pictures containing given faces. It is a great tool to audit copyright infringement, protect your privacy, and find people.

Lexology

Lexology is a next-generation search tool designed to help users find the right lawyer for their needs. It offers a wide range of resources, including practical analysis, in-depth research tools, primary sources, and expert reports. The platform aims to be a go-to resource for legal professionals and individuals seeking legal expertise.

0 - Open Source AI Tools

20 - OpenAI GPTs

ResourceFinder

Assists in identifying and utilizing APIs and files effectively to enhance user-designed GPTs.

Chronic Disease Indicators Expert

This chatbot answers questions about the CDC’s Chronic Disease Indicators dataset.

Find a Lawyer

Assists in finding suitable lawyers based on user needs. Disclaimer: always do your own additional research.

Find First CS Job

A job assistant for CS grads, managing job applications and tracking in Excel.

Find Your Terminal

A specialist in recognizing flight tickets and providing terminal information.

RSS Finder | Find the RSS in any website

Finds and provides RSS feed URLs for given website links.

Yellowpages Navigator - Find Local Businesses Info

I assist with finding businesses on Yellowpages, providing factual and updated information.

Find Any GPT In The World

I help you find the perfect GPT model for your needs. Whether you need GPTs for design, business, SEO, content creation, or social media, we have you covered.

Find Top CPA Accountant Near You

This GPT assists in finding a top-rated CPA, local or virtual. We account for their qualifications, experience, testimonials, and reviews. Whether your needs are business or personal, provide a short description of the services you want and your city or state.