Best AI tools for <Extract Data From Bank Statements>

20 - AI tool Sites

AI Bank Statement Converter

The AI Bank Statement Converter is an industry-leading tool designed for accountants and bookkeepers to extract data from financial documents using artificial intelligence technology. It offers features such as automated data extraction, integration with accounting software, enhanced security, streamlined workflow, and multi-format conversion capabilities. The tool revolutionizes financial document processing by providing high-precision data extraction, tailored for accounting businesses, and ensuring data security through bank-level encryption. It also offers Intelligent Document Processing (IDP) using AI and machine learning techniques to process structured, semi-structured, and unstructured documents.

Rocket Statement

Rocket Statement is a leading bank statement conversion tool that helps users convert their PDF bank statements into Excel, CSV, or JSON formats quickly, securely, and easily. It supports over 100 major banks worldwide and can handle multilingual statements. The tool is trusted by professionals worldwide and offers a range of features, including bulk processing, clean data formatting, multiple export options, and an AI Copilot for smooth and flawless conversions.

Docsumo

Docsumo is an advanced Document AI platform designed for scalability and efficiency. It covers the full document workflow: pre-processing documents, extracting data, and reviewing and analyzing the results. The platform provides features like document classification, touchless processing, ready-to-use AI models, auto-split functionality, and smart table extraction. Docsumo is a leader in intelligent document processing and is trusted by various industries for its accurate data extraction capabilities. The platform enables enterprises to digitize their document processing workflows, reduce manual effort, and maximize data accuracy through its AI-powered solutions.

FormX.ai

FormX.ai is an AI-powered data extraction and conversion tool that automates the process of extracting data from physical documents and converting it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. FormX.ai's pre-configured data extraction models and effortless API integration make it easy for businesses to integrate data extraction into their existing systems and workflows. With FormX.ai, businesses can save time and money on manual data entry and improve the accuracy and efficiency of their data processing.

Evolution AI

Evolution AI is an AI data extraction tool that specializes in extracting data from financial documents such as financial statements, bank statements, invoices, and other related documents. The tool uses generative AI technology to automate the data extraction process, eliminating the need for manual entry. Evolution AI is trusted by global industry leaders and offers exceptional customer service, advanced technology, and a one-stop shop for data extraction.

AlgoDocs

AlgoDocs is an AI platform that streamlines document workflows and frees teams from tedious, error-prone manual data entry by offering fast, secure, and accurate document data extraction.

DocsLoop

DocsLoop is a document extraction tool designed to simplify and automate document processing tasks for businesses, freelancers, and accountants. It offers a user-friendly interface, high accuracy in data extraction, and fully automated processing without the need for technical skills or human intervention. With DocsLoop, users can save hours every week by effortlessly extracting structured data from various document types, such as invoices and bank statements, and export it in their preferred format. The platform provides pay-as-you-go pricing plans with credits that never expire, catering to different user needs and business sizes.

Nanonets

Nanonets is an AI-powered intelligent document processing and workflow automation platform that offers data capture for various documents like invoices, bills of lading, purchase orders, passports, ID cards, bank statements, and receipts. It provides document and email workflows, AP automation, financial reconciliation, and AI agents across different functions such as finance & accounting, supply chain & operations, human resources, customer support, and legal, catering to industries like banking & finance, insurance, healthcare, logistics, and commercial real estate. Nanonets helps automate complex business processes with AI, extract valuable information from unstructured data, and make faster, more informed decisions.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

Tablize

Tablize is a powerful data extraction tool that helps you turn unstructured data into structured, tabular format. With Tablize, you can easily extract data from PDFs, images, and websites, and export it to Excel, CSV, or JSON. Tablize uses artificial intelligence to automate the data extraction process, making it fast and easy to get the data you need.

Extracta.ai

Extracta.ai is an AI data extraction tool for documents and images that automates data extraction processes with easy integration. It allows users to define custom templates for extracting structured data without the need for training. The platform can extract data from various document types, including invoices, resumes, contracts, receipts, and more, providing accurate and efficient results. Extracta.ai ensures data security, encryption, and GDPR compliance, making it a reliable solution for businesses looking to streamline document processing.

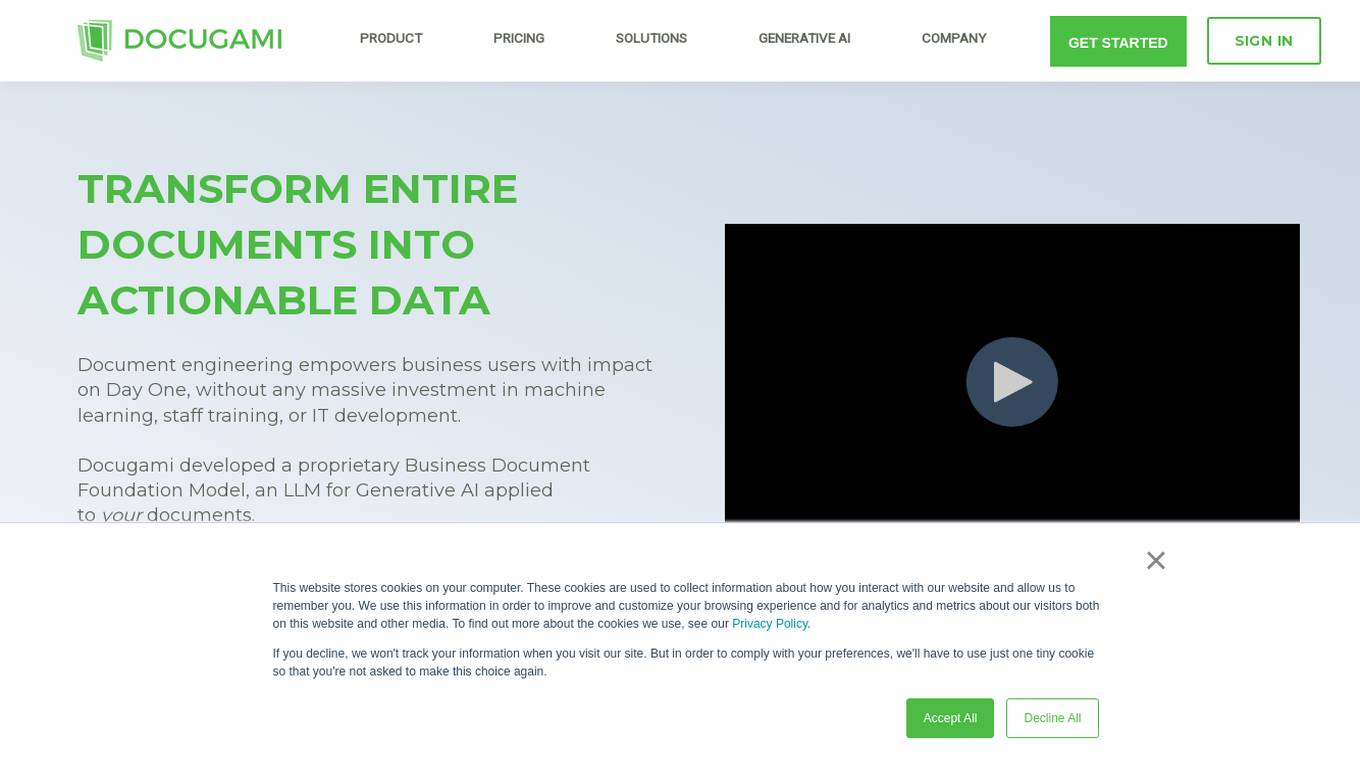

Docugami

Docugami is an AI-powered document engineering platform that enables business users to extract, analyze, and automate data from various types of documents. It empowers users with immediate impact without the need for extensive machine learning investments or IT development. Docugami's proprietary Business Document Foundation Model leverages Generative AI to transform unstructured text into structured information, allowing users to unlock insights and drive business processes efficiently.

super.AI

Super.AI provides Intelligent Document Processing (IDP) solutions powered by Large Language Models (LLMs) and human-in-the-loop (HITL) capabilities. It automates document processing tasks such as data extraction, classification, and redaction, enabling businesses to streamline their workflows and improve accuracy. Super.AI's platform leverages cutting-edge AI models from providers like Amazon, Google, and OpenAI to handle complex documents, ensuring high-quality outputs. With its focus on accuracy, flexibility, and scalability, Super.AI caters to various industries, including financial services, insurance, logistics, and healthcare.

Webscrape AI

Webscrape AI is a no-code web scraping tool that allows users to collect data from websites without writing any code. It is easy to use, accurate, and affordable, making it a great option for businesses of all sizes. With Webscrape AI, you can automate your data collection process and free up your time to focus on other tasks.

PDFMerse

PDFMerse is an AI-powered data extraction tool that revolutionizes how users handle document data. It allows users to effortlessly extract information from PDFs with precision, saving time and enhancing workflow. With cutting-edge AI technology, PDFMerse automates data extraction, ensures data accuracy, and offers versatile output formats like CSV, JSON, and Excel. The tool is designed to dramatically reduce processing time and operational costs, enabling users to focus on higher-value tasks.

Elicit

Elicit is an AI research assistant that helps researchers analyze research papers at superhuman speed. It automates time-consuming research tasks such as summarizing papers, extracting data, and synthesizing findings. Trusted by researchers, Elicit offers a plethora of features to speed up the research process and is particularly beneficial for empirical domains like biomedicine and machine learning.

Octoparse

Octoparse is an AI web scraping tool that offers a no-coding solution for turning web pages into structured data with just a few clicks. It provides users with the ability to build reliable web scrapers without any coding knowledge, thanks to its intuitive workflow designer. With features like AI assistance, automation, and template libraries, Octoparse is a powerful tool for data extraction and analysis across various industries.

Docugami

Docugami is an AI-powered document engineering platform that enables business users to extract, analyze, and automate data from various types of documents. It empowers users with immediate impact without the need for extensive machine learning investments or IT development. Docugami's proprietary Business Document Foundation Model and Generative AI technology transform unstructured text and tables into structured information, allowing users to unlock insights, increase productivity, and ensure compliance.

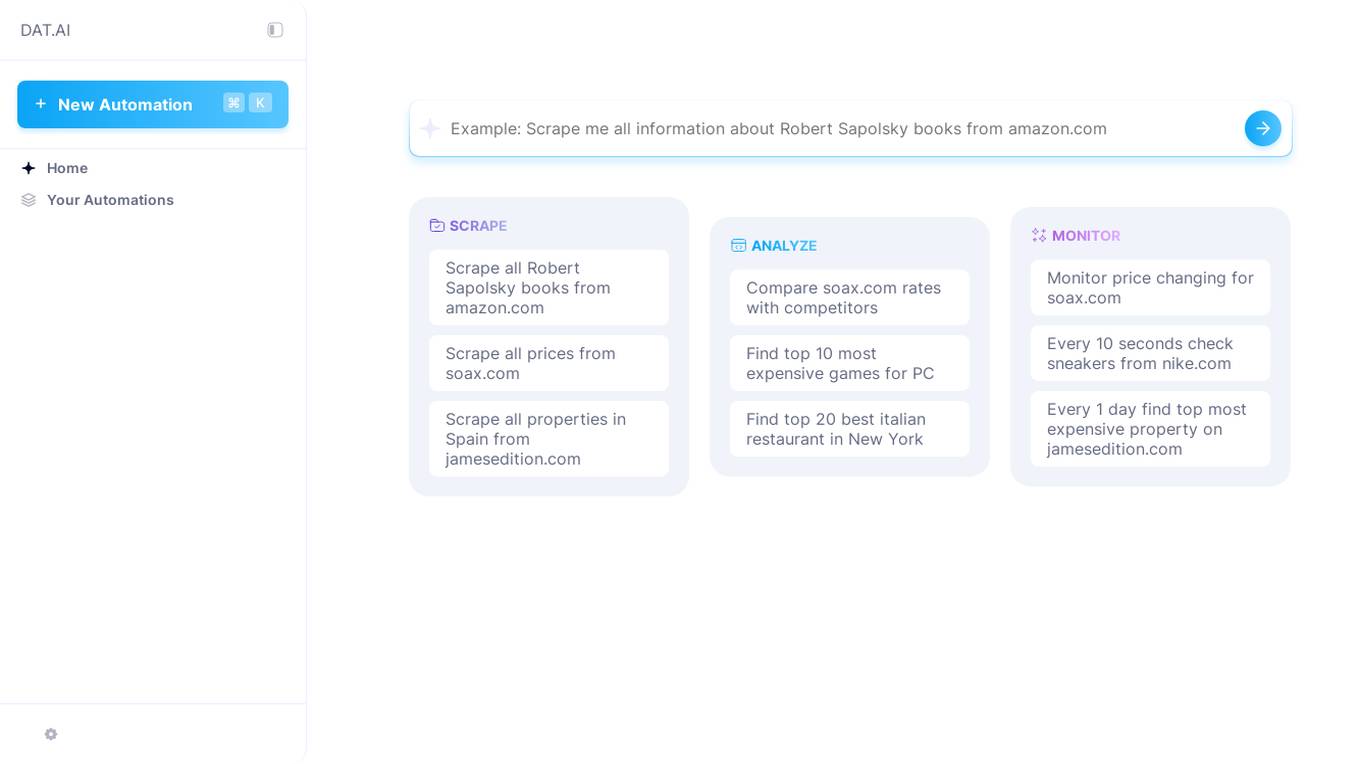

SOAX AI data collection

SOAX AI data collection is a powerful tool that utilizes artificial intelligence to gather and analyze data from various online sources. It automates the process of data collection, saving time and effort for users. The tool is designed to extract relevant information efficiently and accurately, providing valuable insights for businesses and researchers. With its advanced algorithms, SOAX AI data collection can handle large volumes of data quickly and effectively, making it a valuable asset for anyone in need of data-driven decision-making.

ScrapeComfort

ScrapeComfort is an AI-driven web scraping tool that offers an effortless and intuitive data mining solution. It leverages AI technology to extract data from websites without the need for complex coding or technical expertise. Users can easily input URLs, download data, set up extractors, and save extracted data for immediate use. The tool is designed to cater to various needs such as data analytics, market investigation, and lead acquisition, making it a versatile solution for businesses and individuals looking to streamline their data collection process.

0 - Open Source AI Tools

20 - OpenAI GPTs

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Spreadsheet Composer

Magically turning text from emails, lists and website content into spreadsheet tables

Property Manager Document Assistant

Provides analysis and data extraction of Property Management documents and contracts for managers

Fill PDF Forms

Fill legal forms & complex PDF documents easily! Upload a file, provide data sources and I'll handle the rest.

Email Thread GPT

I'm EmailThreadAnalyzer, here to help you with your email thread analysis.

Regex Wizard

Generate and explain regex patterns from your description. It supports English and Chinese.

Metaphor API Guide - Python SDK

Teaches you how to use the Metaphor Search API using our Python SDK

Receipt CSV Formatter

Extract from receipts to CSV: Date of Purchase, Item Purchased, Quantity Purchased, Units

Dissertation & Thesis GPT

An Ivy League Scholar GPT equipped to understand your research needs, formulate comprehensive literature review strategies, and extract pertinent information from a plethora of academic databases and journals. I'll then compose a peer-review-quality paper with citations.