Best AI tools for < Extract >

20 - AI tool Sites

Extracta.ai

Extracta.ai is an AI data extraction tool for documents and images that automates data extraction processes with easy integration. It allows users to define custom templates for extracting structured data without the need for training. The platform can extract data from various document types, including invoices, resumes, contracts, receipts, and more, providing accurate and efficient results. Extracta.ai ensures data security, encryption, and GDPR compliance, making it a reliable solution for businesses looking to streamline document processing.

Extractify.co

Extractify.co is a website that offers a variety of tools and services for extracting information from different sources. The platform provides users with the ability to extract data from websites, documents, and other sources in a quick and efficient manner. With a user-friendly interface, Extractify.co aims to simplify the process of data extraction for individuals and businesses alike. Whether you need to extract text, images, or other types of data, Extractify.co has the tools to help you get the job done. The platform is designed to be intuitive and easy to use, making it accessible to users of all skill levels.

Extracto.bot

Extracto.bot is an AI web scraping tool that automates the process of extracting data from websites. It is a no-configuration, intelligent web scraper that allows users to collect data from any site using Google Sheets and AI technology. The tool is designed to be simple, instant, and intelligent, enabling users to save time and effort in collecting and organizing data for various purposes.

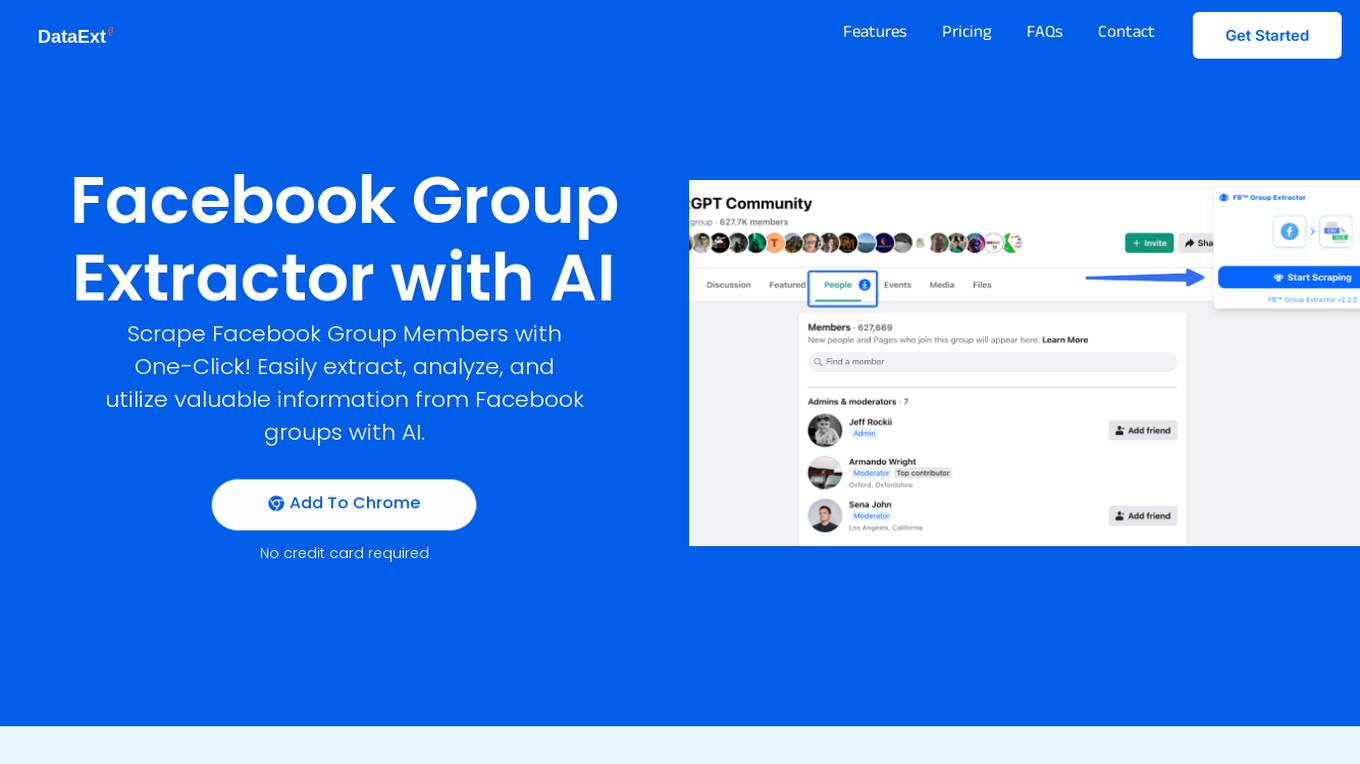

FB Group Extractor

FB Group Extractor is an AI-powered tool designed to scrape Facebook group members' data with one click. It allows users to easily extract, analyze, and utilize valuable information from Facebook groups using artificial intelligence technology. The tool provides features such as data extraction, behavioral analytics for personalized ads, content enhancement, user research, and more. With over 10k satisfied users, FB Group Extractor offers a seamless experience for businesses to enhance their marketing strategies and customer insights.

Reworkd

Reworkd is a web data extraction tool that uses AI to generate and repair web extractors on the fly. It allows users to retrieve data from hundreds of websites without the need for developers. Reworkd is used by businesses in a variety of industries, including manufacturing, e-commerce, recruiting, lead generation, and real estate.

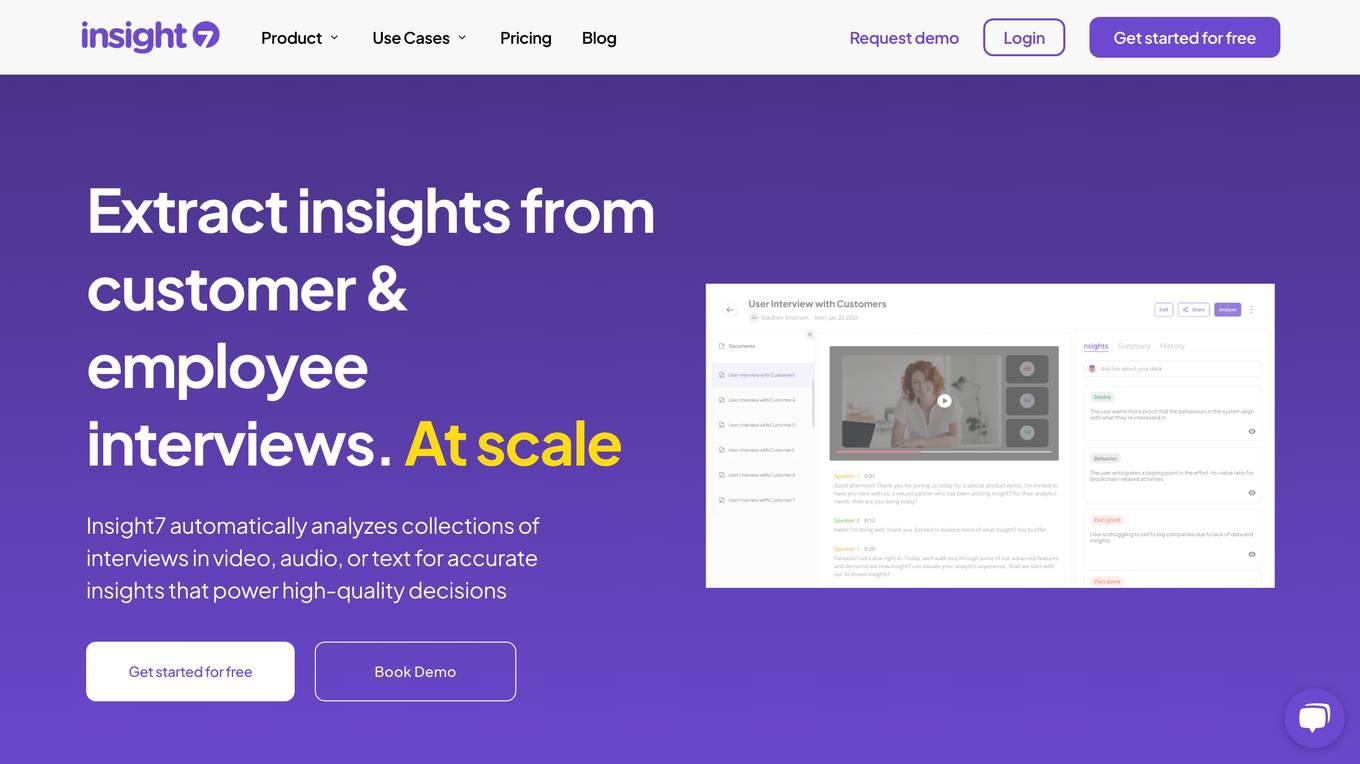

Insight7

Insight7 is a powerful AI-powered tool that helps businesses extract insights from customer and employee interviews. It uses natural language processing and machine learning to analyze large volumes of unstructured data, such as transcripts, audio recordings, and videos. Insight7 can identify key themes, trends, and sentiment, which can then be used to improve products, services, and customer experiences.

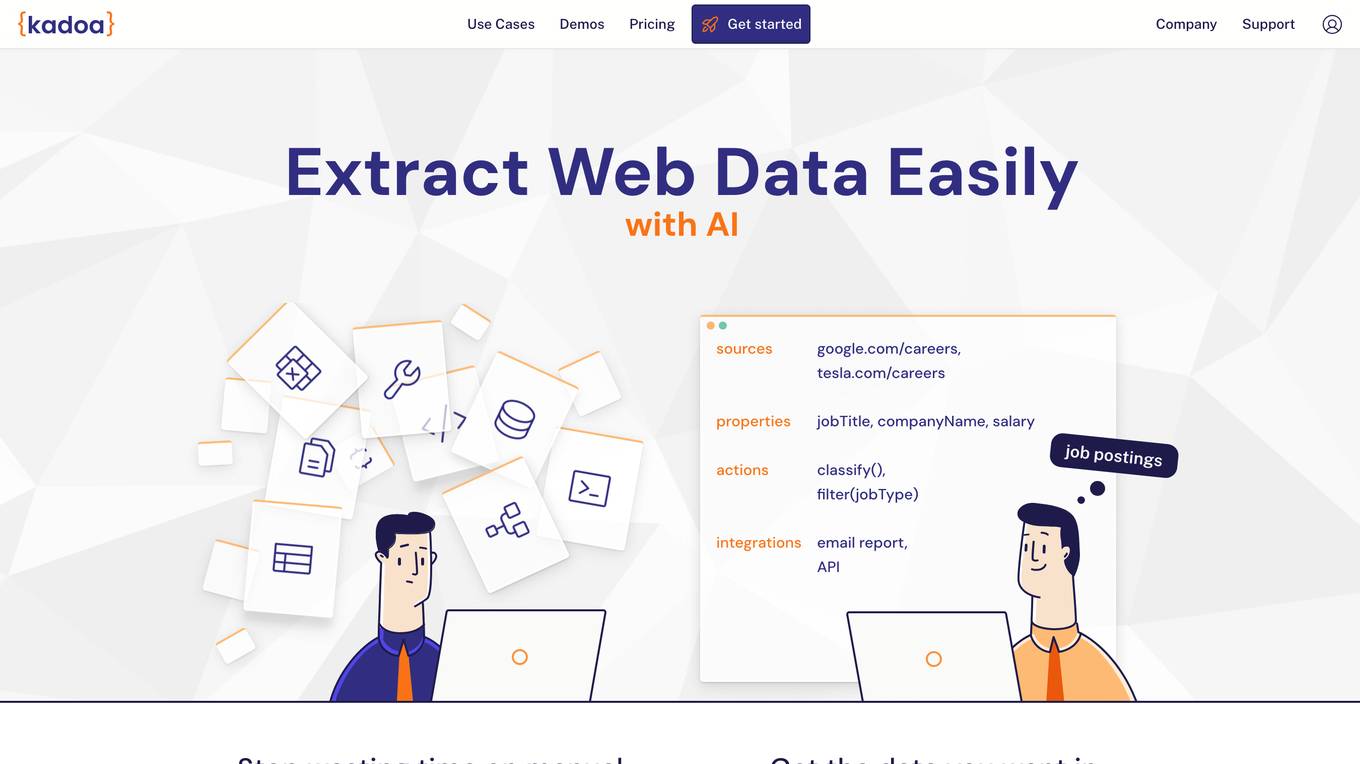

Kadoa

Kadoa is an AI web scraper tool that extracts unstructured web data at scale automatically, without the need for coding. It offers a fast and easy way to integrate web data into applications, providing high accuracy, scalability, and automation in data extraction and transformation. Kadoa is trusted by various industries for real-time monitoring, lead generation, media monitoring, and more, offering zero setup or maintenance effort and smart navigation capabilities.

Evolution AI

Evolution AI is an AI data extraction tool that specializes in extracting data from financial documents such as financial statements, bank statements, invoices, and other related documents. The tool uses generative AI technology to automate the data extraction process, eliminating the need for manual entry. Evolution AI is trusted by global industry leaders and offers exceptional customer service, advanced technology, and a one-stop shop for data extraction.

FormX.ai

FormX.ai is an AI-powered data extraction and conversion tool that automates the process of extracting data from physical documents and converting it into digital formats. It supports a wide range of document types, including invoices, receipts, purchase orders, bank statements, contracts, HR forms, shipping orders, loyalty member applications, annual reports, business certificates, personnel licenses, and more. FormX.ai's pre-configured data extraction models and effortless API integration make it easy for businesses to integrate data extraction into their existing systems and workflows. With FormX.ai, businesses can save time and money on manual data entry and improve the accuracy and efficiency of their data processing.

Parsio

Parsio is an AI-powered document parser that can extract structured data from PDFs, emails, and other documents. It uses natural language processing to understand the context of the document and identify the relevant data points. Parsio can be used to automate a variety of tasks, such as extracting data from invoices, receipts, and emails.

Jsonify

Jsonify is an AI tool that automates the process of exploring and understanding websites to find, filter, and extract structured data at scale. It uses AI-powered agents to navigate web content, replacing traditional data scrapers and providing data insights with speed and precision. Jsonify integrates with leading data analysis and business intelligence suites, allowing users to visualize and gain insights into their data easily. The tool offers a no-code dashboard for creating workflows and easily iterating on data tasks. Jsonify is trusted by companies worldwide for its ability to adapt to page changes, learn as it runs, and provide technical and non-technical integrations.

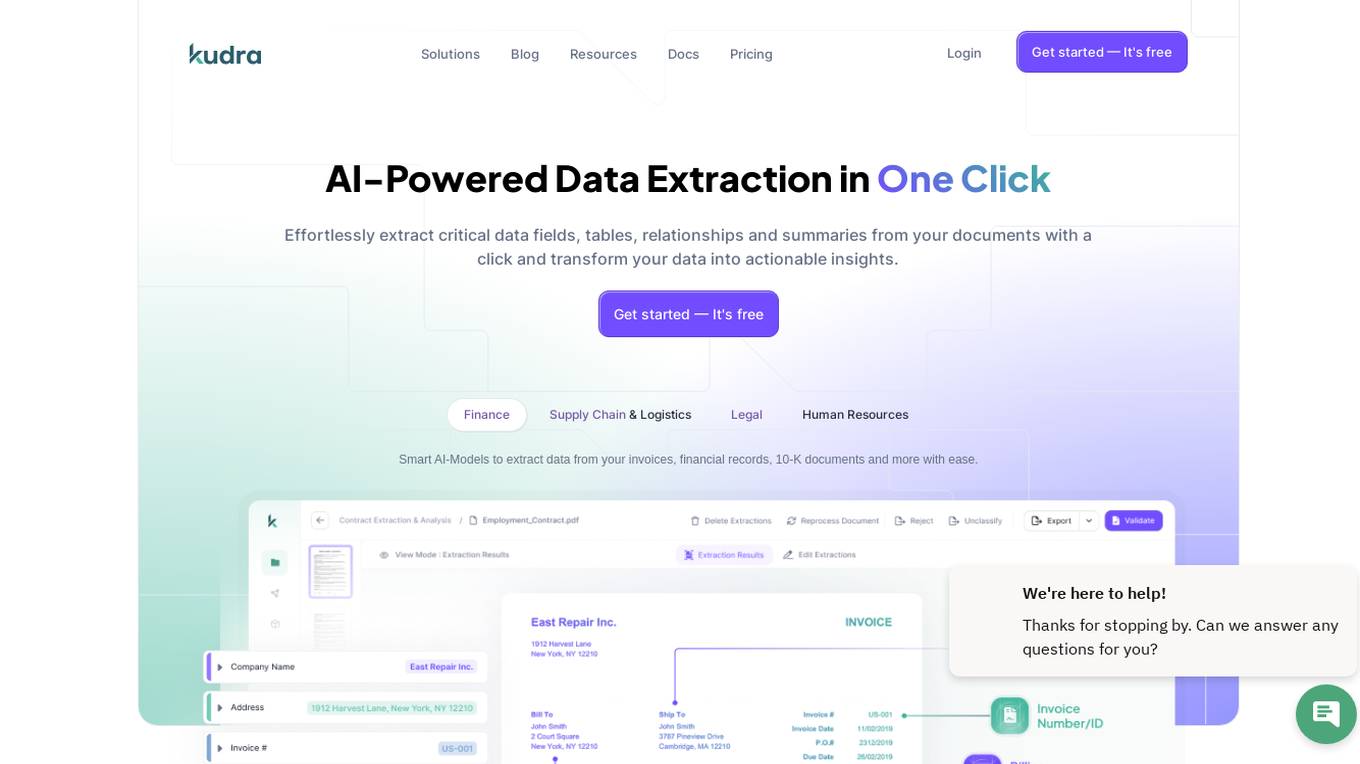

Kudra

Kudra is an AI-powered data extraction tool that offers dedicated solutions for finance, human resources, logistics, legal, and more. It effortlessly extracts critical data fields, tables, relationships, and summaries from various documents, transforming unstructured data into actionable insights. Kudra provides customizable AI models, seamless integrations, and secure document processing while supporting over 20 languages. With features like custom workflows, model training, API integration, and workflow builder, Kudra aims to streamline document processing for businesses of all sizes.

RIDO Protocol

RIDO Protocol is a decentralized data protocol that allows users to extract value from their personal data in Web2 and Web3. It provides users with a variety of features, including programmable data generation, programmable access control, and cross-application data sharing. RIDO also has a data marketplace where users can list or offer their data and its ownership. Additionally, RIDO has a DataFi protocol that promotes the flow of data and value.

AgentQL

AgentQL is an AI-powered tool for painless data extraction and web automation. It eliminates the need for fragile XPath or DOM selectors by using semantic selectors and natural language descriptions to find web elements reliably. With controlled output and deterministic behavior, AgentQL allows users to shape data exactly as needed. The tool offers features such as extracting data, filling forms automatically, and streamlining testing processes. It is designed to be user-friendly and efficient for developers and data engineers.

Tablize

Tablize is a powerful data extraction tool that helps you turn unstructured data into structured, tabular format. With Tablize, you can easily extract data from PDFs, images, and websites, and export it to Excel, CSV, or JSON. Tablize uses artificial intelligence to automate the data extraction process, making it fast and easy to get the data you need.

MapsScraperAI

MapsScraperAI is an AI-powered tool designed to extract leads and data from Maps. It offers businesses the ability to generate local B2B leads, conduct research, monitor competition, and obtain business contact details. With features like batch lookup, lightning-fast results, and the unique ability to extract email addresses, MapsScraperAI streamlines the process of data extraction without the need for coding. The tool mimics real user behavior to reduce the risk of being blocked by Maps and ensures timely updates to accommodate any changes on the Maps website.

Dataku.ai

Dataku.ai is an advanced data extraction and analysis tool powered by AI technology. It offers seamless extraction of valuable insights from documents and texts, transforming unstructured data into structured, actionable information. The tool provides tailored data extraction solutions for various needs, such as resume extraction for streamlined recruitment processes, review insights for decoding customer sentiments, and leveraging customer data to personalize experiences. With features like market trend analysis and financial document analysis, Dataku.ai empowers users to make strategic decisions based on accurate data. The tool ensures precision, efficiency, and scalability in data processing, offering different pricing plans to cater to different user needs.

Receipt OCR API

Receipt OCR API by ReceiptUp is a tool that uses OCR and AI technology to extract structured data from receipt and invoice images. The API offers high accuracy and multilingual support, making it suitable for businesses worldwide that want to streamline financial operations. It also supports multiple formats and accounting downloads, and is positioned as an affordable option for receipt management and data extraction.

Podwise

Podwise is an AI-powered podcast tool designed for podcast lovers to extract structured knowledge from episodes at 10x speed. It offers features such as AI-powered summarization, mind mapping, content outlining, transcription, and seamless integration with knowledge management workflows. Users can subscribe to favorite content, get lightning-speed access to structured knowledge, and discover episodes of interest. Podwise aims to address a common problem for listeners, who enjoy podcasts but recall little and forget quickly, by providing a meticulous, accurate, and impactful tool for efficient podcast referencing and note consolidation.

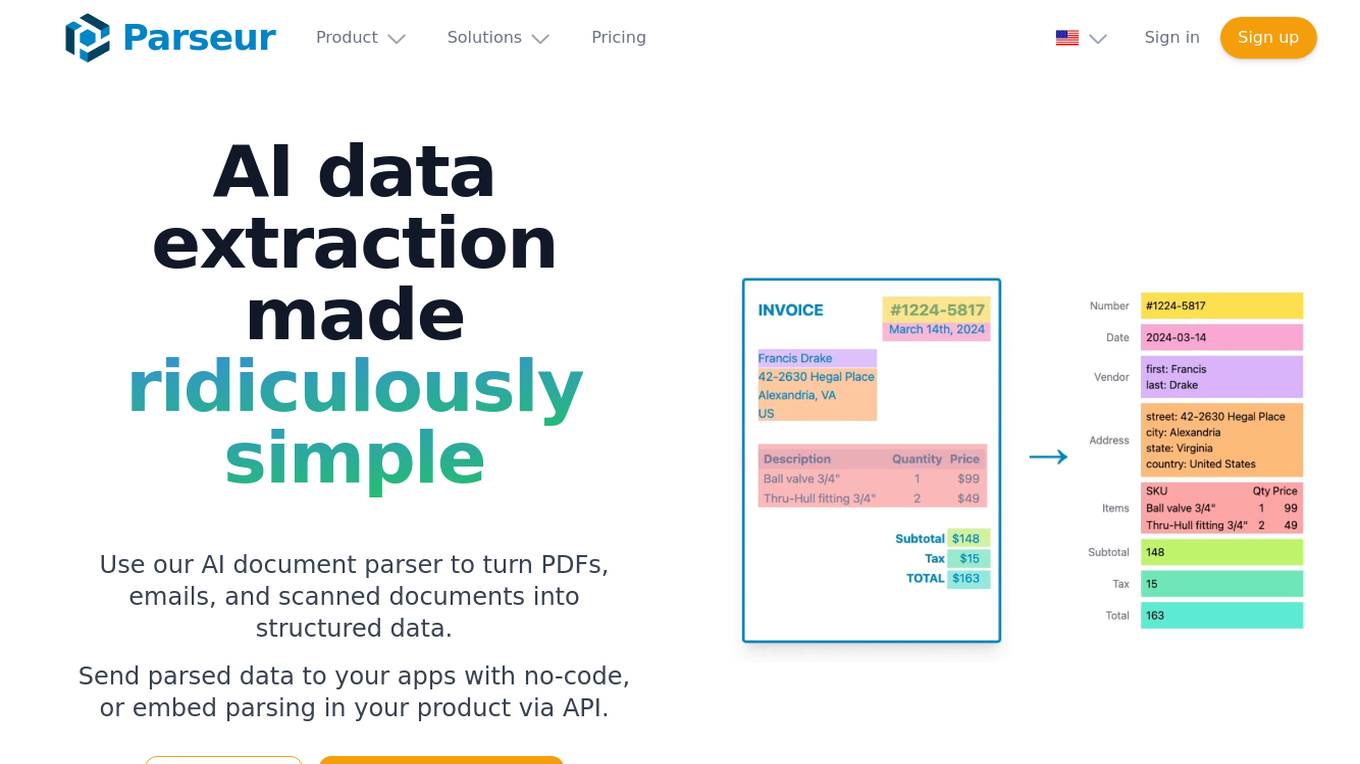

Parseur

Parseur is an AI data extraction software that uses artificial intelligence to extract structured data from various types of documents such as PDFs, emails, and scanned documents. It offers features like template-based data extraction, OCR software for character recognition, and dynamic OCR for extracting fields that move or change size. Parseur is trusted by businesses in finance, tech, logistics, healthcare, real estate, e-commerce, marketing, and human resources industries to automate data extraction processes, saving time and reducing manual errors.

1 - Open Source AI Tools

baml

BAML is a config file format for declaring LLM functions that you can then use in TypeScript or Python. With BAML you can Classify or Extract any structured data using Anthropic, OpenAI or local models (using Ollama).

## Resources

[Discord Community](https://discord.gg/boundaryml) [Follow us on Twitter](https://twitter.com/boundaryml)

* Discord Office Hours - Come ask us anything! We hold office hours most days (9am - 12pm PST).
* Documentation - Learn BAML
* Documentation - BAML Syntax Reference
* Documentation - Prompt engineering tips
* Boundary Studio - Observability and more

#### Starter projects

* BAML + NextJS 14
* BAML + FastAPI + Streaming

## Motivation

Calling LLMs in your code is frustrating:

* your code uses types everywhere: classes, enums, and arrays
* but LLMs speak English, not types

BAML makes calling LLMs easy by taking a type-first approach that lives fully in your codebase:

1. Define what your LLM output type is in a .baml file, with rich syntax to describe any field (even enum values)
2. Declare your prompt in the .baml config using those types
3. Add additional LLM config like retries or redundancy
4. Transpile the .baml files to a callable Python or TS function with a type-safe interface. (VSCode extension does this for you automatically).

We were inspired by similar patterns for type safety: protobuf and OpenAPI for RPCs, Prisma and SQLAlchemy for databases.

BAML guarantees type safety for LLMs and comes with tools to give you a great developer experience: Jump to BAML code or how Flexible Parsing works without additional LLM calls.

| BAML Tooling | Capabilities |
| --- | --- |
| BAML Compiler install | Transpiles BAML code to a native Python / TypeScript library (you only need it for development, never for releases). Works on Mac, Windows, Linux |
| VSCode Extension install | Syntax highlighting for BAML files. Real-time prompt preview. Testing UI |
| Boundary Studio open (not open source) | Type-safe observability. Labeling |
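The type-first idea described above can be illustrated with a minimal sketch in plain Python. This is not BAML syntax or the BAML-generated API; it uses only the standard library, and the names `Category`, `Extracted`, and `parse_llm_output` are hypothetical, chosen for illustration. It assumes the model has been asked to return JSON and shows how declaring the output type up front lets the calling code reject anything that does not match.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import List


class Category(Enum):
    """Hypothetical enum of document kinds the model may return."""
    INVOICE = "invoice"
    RECEIPT = "receipt"


@dataclass
class Extracted:
    """Hypothetical output type, declared before any prompt is written."""
    category: Category
    vendor: str
    items: List[str]


def parse_llm_output(raw: str) -> Extracted:
    """Parse a raw JSON string (as a model might return it) into a typed object.

    Raises ValueError if the payload does not match the declared type.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    # Enum lookup raises ValueError for values outside the declared set.
    category = Category(data.get("category"))

    vendor = data.get("vendor")
    if not isinstance(vendor, str):
        raise ValueError("vendor must be a string")

    items = data.get("items")
    if not isinstance(items, list) or not all(isinstance(i, str) for i in items):
        raise ValueError("items must be a list of strings")

    return Extracted(category=category, vendor=vendor, items=items)


if __name__ == "__main__":
    # Stand-in for a model response; no LLM is called in this sketch.
    sample = '{"category": "receipt", "vendor": "Acme", "items": ["pen", "ink"]}'
    print(parse_llm_output(sample))
```

In this sketch the validation is written by hand; the description above suggests BAML generates the equivalent type-safe interface from the `.baml` files instead.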

20 - OpenAI GPTs

ExtractWisdom

Takes in any text and extracts the wisdom from it like you spent 3 hours taking handwritten notes.

Data Extractor Pro

Expert in data extraction and context-driven analysis. Can read most file types, including PDF, XLSX, Word, TXT, CSV, EML, etc.

Procedure Extraction and Formatting

Extracts and formats procedures from manuals into templates

Wisdom Witch Extractor

A conversational and engaging extractor of profound wisdom from texts, tailored to life's deeper themes.

FREE Keyword Extraction Tool

Keyword Extraction Tool: Efficiently extracts keywords from various texts, social media, and customer feedback with our user-friendly, scalable tool.

Acid Extractor Assistant

Hello Acid Extractor! I'm your dedicated Acid Extractor Assistant here to assist you with all your acid extraction needs. Let's work together for successful extractions!

PDF Ninja

I extract data and tables from PDFs to CSV, focusing on data privacy and precision.

Visual Storyteller

Extract the essence of a novel's story according to the quantity requirements and generate corresponding images. The images can be used directly to create novel videos. Automatically batch-generates images for novel promotion posts, keeping a consistent style across images.

Receipt CSV Formatter

Extract from receipts to CSV: Date of Purchase, Item Purchased, Quantity Purchased, Units

E&L and Pharmaceutical Regulatory Compliance AI

This GPT chat AI is specialized in understanding Extractables and Leachables studies, aligning with pharmaceutical guidelines, and aiding in the design and interpretation of relevant experiments.