Best AI tools for Evaluating the Effectiveness of Chronic Disease Programs

20 - AI tool Sites

PyjamaHR

PyjamaHR is a leading AI-powered Applicant Tracking System (ATS) and recruitment software designed to streamline the hiring process for businesses of all sizes. It offers advanced features such as source management, candidate evaluation, collaboration tools, and AI-powered candidate tests to enhance the efficiency and effectiveness of the recruitment process. With a user-friendly interface and robust security measures, PyjamaHR is a trusted solution for managing talent acquisition and improving hiring outcomes.

Mangus

Mangus is an AI-powered learning platform that provides personalized learning paths for employees and students. It offers a wide range of courses and programs in various disciplines, including business, education, technology, and more. Mangus uses gamification and artificial intelligence to create an engaging and effective learning experience.

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of your LLM applications, both during development and in production. It provides a workflow for continuous testing and evaluation as you develop, so that you always know your LLM app’s quality. Systematically improve quality and cost-effectiveness by understanding your LLM app’s behavior and quickly testing different app variants. Rigorously assess your LLM app’s behavior before you deploy, in order to ensure quality and cost-effectiveness when you’re live. Easily monitor your live traffic: detect and resolve issues, analyze usage in order to improve, and seamlessly feed back into your development process. Inductor makes it easy for engineering and other roles to collaborate: get critical human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure that your LLM app is user-ready.

edu720

edu720 is a science-backed learning platform that uses AI and nanolearning to redefine how workforces learn and achieve their goals. It provides pre-built learning modules on various topics, including cybersecurity, privacy, and AI ethics. edu720's 360-degree approach ensures that all employees, regardless of their status or location, fully understand and absorb the knowledge conveyed.

Should I Hire AI

Should I Hire AI is an AI application that helps businesses determine if investing in AI tools is the right decision for them. By answering a few questions, the application provides a personalized recommendation on whether AI could be a cost-effective solution. The tool is designed to assist businesses in making informed decisions about integrating AI into their operations, ultimately aiming to enhance efficiency and productivity.

Traceable

Traceable is an intelligent API security platform designed for enterprise-scale security. It offers API discovery, attack detection, threat hunting, and scalability. The platform provides comprehensive protection against API attacks, fraud, and bots, along with API testing capabilities. Powered by Traceable's OmniTrace Engine, it supports security outcomes, remediation, and pre-production testing. Security teams trust Traceable for its speed and effectiveness in protecting API infrastructures.

Skeptic Reader

Skeptic Reader is a Chrome plugin that detects biases and logical fallacies in real time. It's powered by GPT-4 and helps users critically evaluate the information they consume online. The plugin highlights biases and fallacies, provides counter-arguments, and suggests alternative perspectives. It's designed to promote informed skepticism and encourage users to question the information they encounter online.

AI Tools Masters

AI Tools Masters is a comprehensive platform that empowers users to discover and evaluate the latest and most exceptional AI tools. Catering to diverse needs, from education to personal advancement, AI Tools Masters offers a curated collection of top-notch solutions tailored to specific requirements. With a user-friendly interface and extensive filtering options, users can effortlessly navigate through a wide range of AI tools, ensuring they find the perfect fit for their projects and goals.

InstantPersonas

InstantPersonas is an AI-powered SWOT Analysis Generator that helps organizations and individuals evaluate their Strengths, Weaknesses, Opportunities, and Threats. From a company description, the tool generates a comprehensive SWOT Analysis, providing insights for strategic planning. Users can edit the analysis and download it as an image. InstantPersonas aims to help users understand their target audience and market for more successful marketing strategies.

PubCompare

PubCompare is an AI-powered tool that helps scientists search, compare, and evaluate experimental protocols. With over 40 million protocols in its database, PubCompare is the largest repository of trusted experimental protocols. Its search features allow users to find similar protocols, highlight critical steps, and evaluate the reproducibility of protocols based on in-protocol citations. PubCompare is available from any computer and requires no download.

OpinioAI

OpinioAI is an AI-powered market research tool that allows users to gain business critical insights from data without the need for costly polls, surveys, or interviews. With OpinioAI, users can create AI personas and market segments to understand customer preferences, affinities, and opinions. The platform democratizes research by providing efficient, effective, and budget-friendly solutions for businesses, students, and individuals seeking valuable insights. OpinioAI leverages Large Language Models to simulate humans and extract opinions in detail, enabling users to analyze existing data, synthesize new insights, and evaluate content from the perspective of their target audience.

Evidently AI

Evidently AI is an open-source machine learning (ML) monitoring and observability platform that helps data scientists and ML engineers evaluate, test, and monitor ML models from validation to production. It provides a centralized hub for ML in production, including data quality monitoring, data drift monitoring, ML model performance monitoring, and NLP and LLM monitoring. Evidently AI's features include customizable reports, structured checks for data and models, and a Python library for ML monitoring. It is designed to be easy to use, with a simple setup process and a user-friendly interface. Evidently AI is used by over 2,500 data scientists and ML engineers worldwide, and it has been featured in publications such as Forbes, VentureBeat, and TechCrunch.

BenchLLM

BenchLLM is an AI tool designed for AI engineers to evaluate LLM-powered apps by running and evaluating models with a powerful CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, LangChain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

ELSA

ELSA is an AI-powered English speaking coach that helps you improve your pronunciation, fluency, and confidence. With ELSA, you can practice speaking English in short, fun dialogues and get instant feedback from its proprietary artificial intelligence technology. ELSA also offers a variety of other features, such as personalized lesson plans, progress tracking, and games to help you stay motivated.

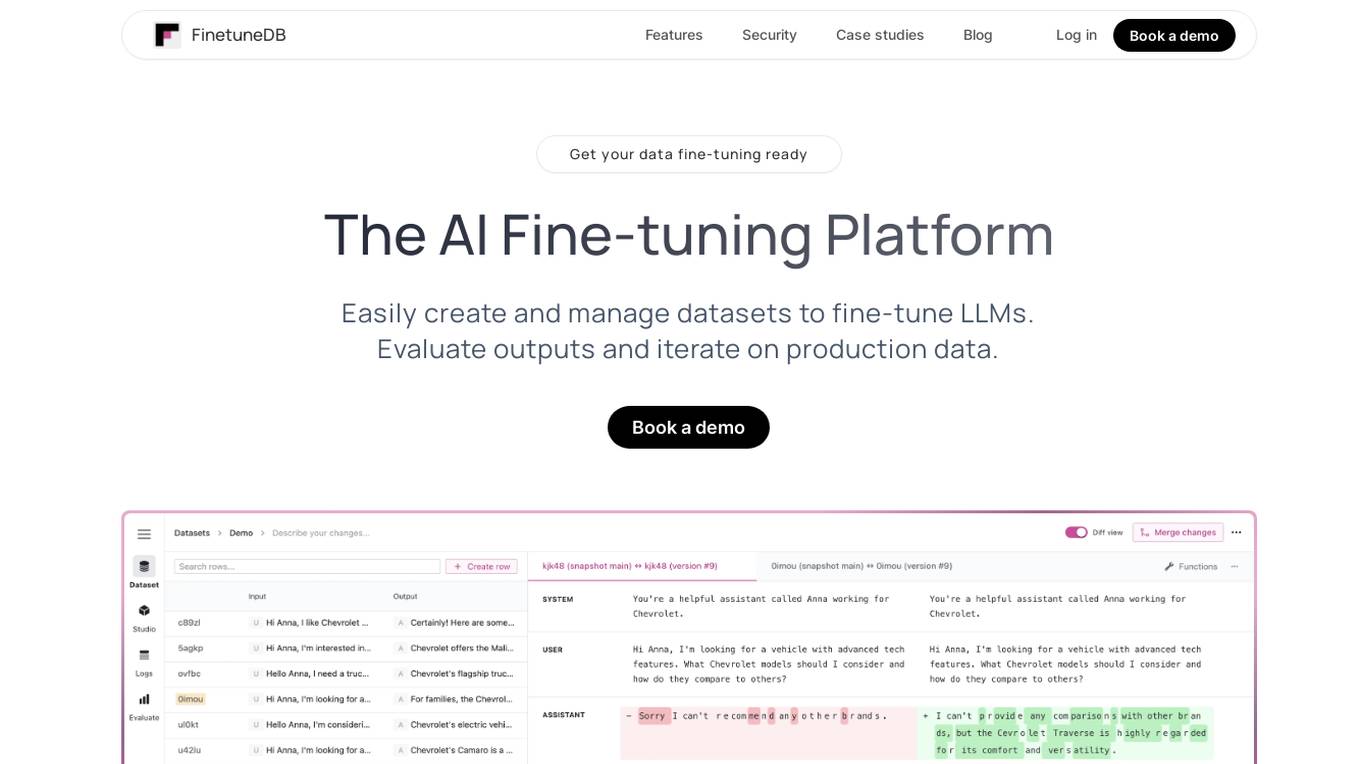

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.

Galileo AI

Galileo AI is a platform that automates evaluations for AI applications so teams can ship reliably and with confidence. It helps eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, achieving real-time protection, and providing end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

ThinkTask

ThinkTask is a project and team management tool that utilizes ChatGPT's capabilities to enhance productivity and streamline task management. It offers AI-generated reports and insights, AI usage tracking, Team Pulse for visualizing task types and status, Project Progress Table for monitoring project timelines and budgets, Task Insights for illustrating task interdependencies, and a comprehensive Overview for visualizing progress and managing dependencies. Additionally, ThinkTask features one-click auto-task creation with notes from ChatGPT, auto-tagging for task organization, and AI-suggested task assignments based on past experience and skills. It provides a unified workspace for notes, tasks, databases, collaboration, and customization.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

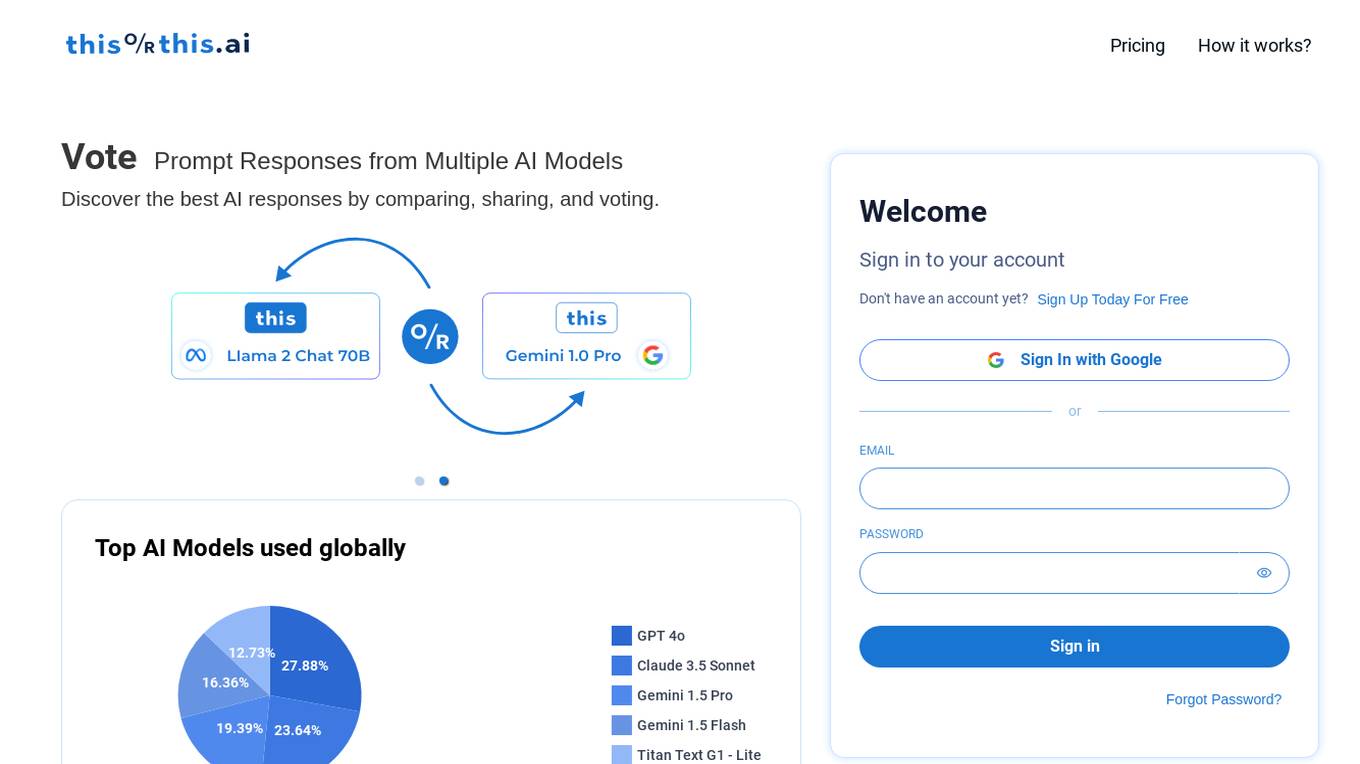

thisorthis.ai

thisorthis.ai is an AI tool that allows users to compare generative AI models and AI model responses. It helps users analyze and evaluate different AI models to make informed decisions.

Robust Intelligence

Robust Intelligence is an end-to-end solution for securing AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

0 - Open Source AI Tools

20 - OpenAI GPTs

Chronic Disease Indicators Expert

This chatbot answers questions about the CDC’s Chronic Disease Indicators dataset.

Calidad en Educación Superior

I can advise on topics related to quality in higher education institutions (planning, self-assessment, accreditation, continuous improvement).

Policy Communication Advisor

Communicates policy processes and changes effectively within the organization.

HCDP - برنامج تنمية القدرات البشرية

An expert that provides information about the Human Capability Development Program, one of the programs of the Kingdom's Vision 2030, which aims to build an ambitious national strategy for developing citizens' capabilities.

Stick to the Point

I'll help you evaluate your writing to make sure it's engaging, informative, and flows well. Uses principles from "Made to Stick".

LabGPT

A personalized ChatGPT for reading laboratory tests. It evaluates laboratory test results and creates a spreadsheet with the evaluation results and possible solutions.

Investing in Biotechnology and Pharma

🔬💊 Navigate the high-risk, high-reward world of biotech and pharma investing! Discover breakthrough therapies 🧬📈, understand drug development 🧪📊, and evaluate investment opportunities 🚀💰. Invest wisely in innovation! 💡🌐 Not a financial advisor. 🚫💼

SearchQualityGPT

As a Search Quality Rater, you will help evaluate search engine quality around the world.

I4T Assessor - UNESCO Tech Platform Trust Helper

Helps you evaluate whether or not tech platforms match UNESCO's Internet for Trust Guidelines for the Governance of Digital Platforms

Education AI Strategist

I provide a structured way of using AI to support teaching and learning. I use the CHOICE method (i.e., Clarify, Harness, Originate, Iterate, Communicate, Evaluate) to ensure that your use of AI can help you meet your educational goals.

IELTS Writing Test

Simulates the IELTS Writing Test, evaluates responses, and estimates band scores.

The IPO Strategy

Expert in IPO Strategy, offers detailed guidance on business ideas, market paths, and opportunities. Created by Christopher Perceptions

Recruiting Coach by The Players Circle

A scouting resource on high school basketball recruiting

The Learning Architect

An all-in-one, consultative L&D expert AI helping you build impactful, customized learning solutions for your organization.

Search Quality Evaluator GPT

Analyse content through the official Google Search Quality Rater Guidelines.

Charity Impact Assessor

Assesses and evaluates the impact and trustworthiness of various charity organizations.