Best AI tools for Enforce Rate Limits

20 - AI tool Sites

Backmesh

Backmesh is an AI tool that serves as a proxy on edge CDN servers, enabling secure and direct access to LLM APIs without the need for a backend or SDK. It allows users to call LLM APIs from their apps, ensuring protection through JWT verification and rate limits. Backmesh also offers user analytics for LLM API calls, helping identify usage patterns and enhance user satisfaction within AI applications.

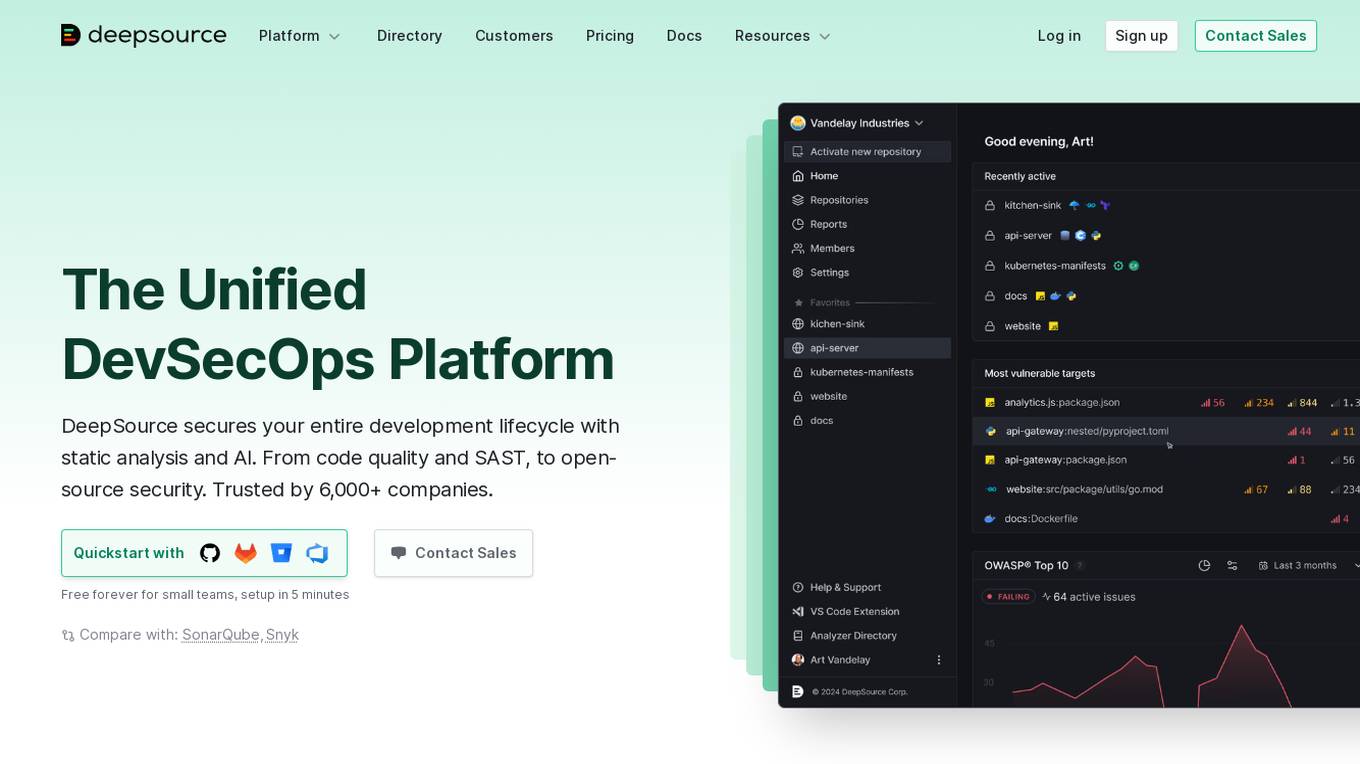
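
To make the rate-limiting idea in the Backmesh entry concrete, here is a minimal sketch of a per-user token bucket, one common way a proxy can cap how often each user calls an upstream API. It is an illustration only, not Backmesh's implementation: the class name `PerUserRateLimiter`, its parameters, and the assumption that `user_id` was already taken from a verified JWT are all hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class TokenBucket:
    tokens: float       # requests the user may still make right now
    updated_at: float   # clock reading when the bucket was last refilled


class PerUserRateLimiter:
    """Hypothetical per-user token bucket (not any vendor's real API)."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = float(capacity)
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, user_id: str) -> bool:
        """Return True if this user may make one more request now."""
        now = self.clock()
        bucket = self.buckets.get(user_id)
        if bucket is None:
            # New users start with a full bucket.
            bucket = TokenBucket(tokens=self.capacity, updated_at=now)
            self.buckets[user_id] = bucket

        # Refill in proportion to elapsed time, never above capacity.
        elapsed = now - bucket.updated_at
        bucket.tokens = min(self.capacity,
                            bucket.tokens + elapsed * self.refill_per_second)
        bucket.updated_at = now

        if bucket.tokens >= 1.0:
            bucket.tokens -= 1.0
            return True
        return False
```

In this sketch a proxy would call `allow(user_id)` before forwarding each request and reject the call (for example with HTTP 429) when it returns `False`. The bucket capacity sets the allowed burst size, and the refill rate sets the sustained request rate.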

DeepSource

DeepSource is a Unified DevSecOps Platform that secures the entire development lifecycle with static analysis and AI. It offers code quality and SAST, open-source security, and is trusted by over 6,000 companies. The platform helps in finding and fixing security vulnerabilities before code is merged, with a low false-positive rate and customizable security gates for pull requests. DeepSource is built for modern software development, providing features like Autofix™ AI, code coverage, and integrations with popular tools like Jira and GitHub Issues. It offers detailed reports, issue suppression, and metric thresholds to ensure clean and secure code shipping.

DocDriven

DocDriven is an AI-powered documentation-driven API development tool that provides a shared workspace for optimizing the API development process. It helps teams design APIs faster, collaborate on API changes in real time, explore all APIs in one workspace, generate AI code, and maintain API documentation. DocDriven aims to streamline communication and coordination among backend developers, frontend developers, UI designers, and product managers, ensuring high-quality API design and development.

Transparency Coalition

The Transparency Coalition is a platform dedicated to advocating for legislation and transparency in the field of artificial intelligence. It aims to create AI safeguards for the greater good by focusing on training data, accountability, and ethical practices in AI development and deployment. The platform emphasizes the importance of regulating training data to prevent misuse and harm caused by AI systems. Through advocacy and education, the Transparency Coalition seeks to promote responsible AI innovation and protect personal privacy.

ModelOp

ModelOp is the leading AI Governance software for enterprises, providing a single source of truth for all AI systems, automated process workflows, real-time insights, and integrations to extend the value of existing technology investments. It helps organizations safeguard AI initiatives without stifling innovation, ensuring compliance, accelerating innovation, and improving key performance indicators. ModelOp supports generative AI, Large Language Models (LLMs), in-house, third-party vendor, and embedded systems. The software enables visibility, accountability, risk tiering, systemic tracking, enforceable controls, workflow automation, reporting, and rapid establishment of AI governance.

Writer

Writer is a full-stack generative AI platform that offers industry-leading models Palmyra-Med and Palmyra-Fin. It provides a secure enterprise platform to embed generative AI into any business process, enforce legal and brand compliance, and gain insights through analysis. Writer's platform abstracts complexity, allowing users to focus on AI-first workflows without the need to maintain infrastructure. The platform includes Palmyra LLMs, Knowledge Graph, and AI guardrails to ensure quality, control, transparency, accuracy, and security in AI applications.

Loti

Loti is an online protection tool designed for public figures, including major artists, athletes, executives, and creators. It scans the internet daily to identify instances where the user's face or voice appears, takes down infringing accounts and content, and recaptures revenue. Loti offers features such as protecting against fake accounts and deepfakes, enforcing licensing agreements, and detecting and eliminating fake social media accounts. It is a valuable tool for managing and safeguarding a public figure's online presence and brand image.

DryRun Security

DryRun Security is an AI-driven application security tool that provides Contextual Security Analysis to detect and prevent logic flaws, authorization gaps, IDOR, and other code risks. It offers features like code insights, natural language code policies, and customizable notifications and reporting. The tool benefits CISOs, security leaders, and developers by enhancing code security, streamlining compliance, increasing developer engagement, and providing real-time feedback. DryRun Security supports various languages and frameworks and integrates with GitHub and Slack for seamless collaboration.

DevOps Security Platform

DevOps Security Platform is an AI-native security tool designed to automate security requirements definition, enforcement, risk assessments, and threat modeling. It helps companies secure their applications by identifying risks early in the Software Development Lifecycle and enforcing security measures before go-live. The platform offers innovative features, customization options, and integrations with existing tools to streamline security processes.

Watchdog

Watchdog is an AI-powered chat moderation tool designed to fully automate chat moderation for Telegram communities. It helps community owners tackle rulebreakers, trolls, and spambots effortlessly, ensuring consistent rule enforcement and user retention. With features like automatic monitoring, customizable rule enforcement, and quick setup, Watchdog offers significant cost savings and eliminates the need for manual moderation. The tool is developed by Ben, a solo developer, who created it to address the challenges he faced in managing his own community. Watchdog aims to save time and money and to enhance user experience by swiftly identifying and handling rule violations.

Pulumi

Pulumi is an AI-powered infrastructure as code tool that allows engineers to manage cloud infrastructure using various programming languages like Node.js, Python, Go, .NET, Java, and YAML. It offers features such as generative AI-powered cloud management, security enforcement through policies, automated deployment workflows, asset management, compliance remediation, and AI insights over the cloud. Pulumi helps teams provision, automate, and evolve cloud infrastructure, centralize and secure secrets management, and gain security, compliance, and cost insights across all cloud assets.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

Tusk

Tusk is an AI-powered automated testing platform that helps engineering teams generate high-quality unit and integration tests with codebase and business context. It runs on pull requests to suggest verified test cases, enabling faster and safer code shipping. Tusk offers features like shift-left testing, autonomous test generation, self-healing tests, and seamless integration with CI/CD pipelines. Trusted by engineering leaders at fast-growing companies, Tusk aims to improve test coverage and code quality while reducing the release cycle time.

Varonis

Varonis is an AI-powered data security platform that provides end-to-end data security solutions for organizations. It offers automated outcomes to reduce risk, enforce policies, and stop active threats. Varonis helps in data discovery & classification, data security posture management, data-centric UEBA, data access governance, and data loss prevention. The platform is designed to protect critical data across multi-cloud, SaaS, hybrid, and AI environments.

Yokoy

Yokoy is an AI-powered spend management suite that helps midsize companies and global enterprises save money on every dollar spent. It automates accounts payable and expense management tasks, streamlines global data, keeps spend under control, and enforces compliance. Yokoy's API facilitates seamless integrations with enterprise-level systems for end-to-end automation across entities and geographies.

MineOS

MineOS is an automation-driven platform that focuses on privacy, security, and compliance. It offers a comprehensive suite of tools and solutions to help businesses manage their data privacy needs efficiently. By leveraging AI and special discovery methods, MineOS adapts unique data processes to universal privacy standards seamlessly. The platform provides features such as data mapping, AI governance, DSR automations, consent management, and security & compliance solutions to ensure data visibility and governance. MineOS is recognized as the industry's #1 rated data governance platform, offering cost-effective control of data systems and centralizing data subject request handling.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open source and commercial tools, and infrastructure, allowing users to accelerate and scale AI impact. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

Kubiya

Kubiya is an AI-powered platform designed to reduce Time-To-Automation for Developer and Infrastructure Operations teams. It allows users to focus on high-impact work by automating routine tasks and processes, powered by an Agentic-Native Platform. Kubiya is trusted by world-class engineering and operations teams, offering features such as AI teammates, JIT Permissions, Infrastructure Provisioning, Help Desk support, and Incident Response automation.

42Signals

42Signals is an AI-driven eCommerce analytics software that provides real-time insights and analytics for consumer brands. It empowers businesses to anticipate market trends, monitor competitors, optimize digital shelves, and drive sales growth with unmatched accuracy and speed. The platform offers a suite of tools including Digital Shelf Analytics, Competitor Analysis, Voice of Customer Analytics, and more to help businesses dominate the digital marketplace.

Operant

Operant is a cloud-native runtime protection platform that offers instant visibility and control from infrastructure to APIs. It provides an AI security shield for applications, API threat protection, Kubernetes security, automatic microsegmentation, and DevSecOps solutions. Operant helps defend APIs, protect Kubernetes, and shield AI applications by detecting and blocking various attacks in real time. It simplifies security for cloud-native environments with zero instrumentation, application code changes, or integrations.

0 - Open Source AI Tools

11 - OpenAI Gpts

IAC Code Guardian

Introducing IAC Code Guardian: Your Trusted IaC Security Expert in Scanning OpenTofu, Terraform, AWS CloudFormation, Pulumi, K8s YAML & Dockerfile

Boundary Coach

Boundary Coach is now fine-tuned and ready for use! It's an advanced guide for assertive boundary setting, offering nuanced advice, practical tips, and interactive exercises. It will provide tailored guidance, avoiding medical or legal advice and suggesting professional help when needed.

Term of Service Drafting Master

Legal Expert in drafting Terms of Service (Powered by LegalNow ai.legalnow.xyz)

Trademarks GPT

Trademark Process Assistant, Not an Attorney & Definitely Not Legal Advice (independently verify info received). Gain insights on U.S. trademark process & concepts, USPTO resources, application steps & more, all while being reminded of the importance of consulting legal pros for specific guidance.

Seabiscuit IP Guardian

Secure Your Intellectual Property Innovations: Specializes in IP creation, management, and protection, offering expert guidance in U.S. copyright, trademark, patent, and trade secret laws, ensuring your intellectual property is well-protected and leveraged effectively. (v1.15)