Best AI tools for: Do Summarization

20 - AI tool Sites

Summate.it

Summate.it is a tool that uses OpenAI to quickly summarize web articles. The interface is simple and clean: paste an article's URL into the text box and it returns a summary. Summate.it is a quick way to get the gist of an article without reading the entire thing.

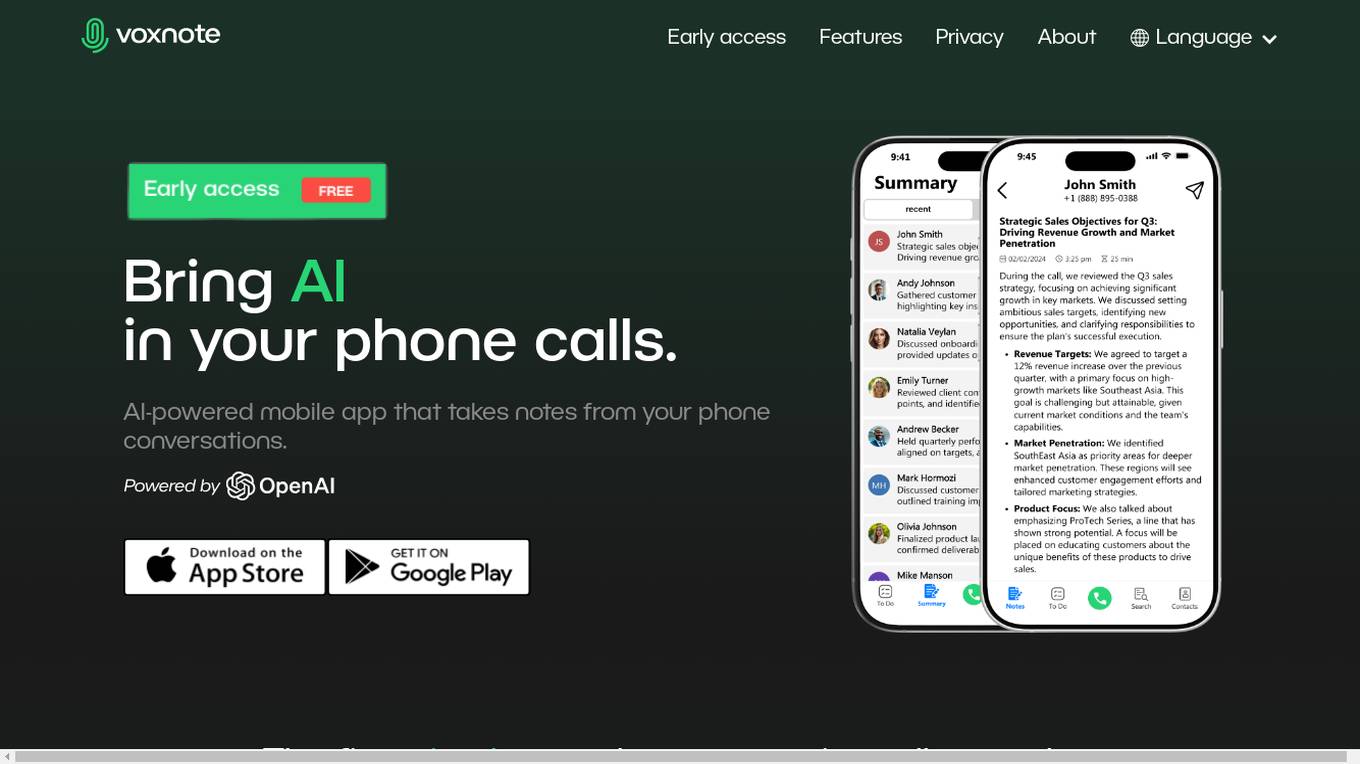

VoxNote

VoxNote is an AI-powered mobile app designed to bring AI into your phone calls by capturing and summarizing conversations. It automatically generates action items and tasks from your phone conversations, helping to boost productivity. With accurate call transcriptions and summaries, VoxNote ensures that no detail is missed. The app offers features like easily shareable summaries, customizable phone numbers, and a seamless user interface for a native-like experience. VoxNote is available in multiple languages and aims to streamline communication and organization through AI technology.

InspNote

InspNote is an AI-powered platform designed to help users capture and organize their fleeting ideas efficiently. It allows users to record their thoughts, which are then processed by AI to extract summaries and highlight key points. Users can generate structured content like to-do lists, blog posts, tweets, and emails effortlessly. InspNote offers privacy protection by not saving recordings, making it a convenient and secure tool for managing inspiration.

Noiz Video Summarizer

Noiz Video Summarizer is an AI-powered tool designed to summarize YouTube videos efficiently. With just one click, users can get expert-level summaries for videos on any topic and of any length. The tool offers smart summaries in 41 languages, transforms videos into readable text, and helps users save time by providing concise summaries. Noiz has received positive feedback from over 500,000 users who appreciate its time-saving features and efficiency in summarizing video content.

Audionotes

Audionotes is an AI-powered note-taking app that uses speech-to-text technology to transcribe and summarize audio recordings. It also offers a variety of features to help users organize and manage their notes, including the ability to create to-do lists, set reminders, and share notes with others. Audionotes is available as a web app, a mobile app, and a Chrome extension.

Otio

Otio is an AI research and writing partner powered by o3-mini, Claude 3.7, and Gemini 2.0. It offers a fast and efficient way to do research by summarizing and chatting with documents, writing and editing in an AI text editor, and automating workflows. Otio is trusted by over 200,000 researchers and students, providing detailed, structured AI summaries, automatic summaries for various types of content, chat capabilities, and workflow automation. Users can extract insights from research quickly, automate repetitive tasks, and edit their writing with AI assistance.

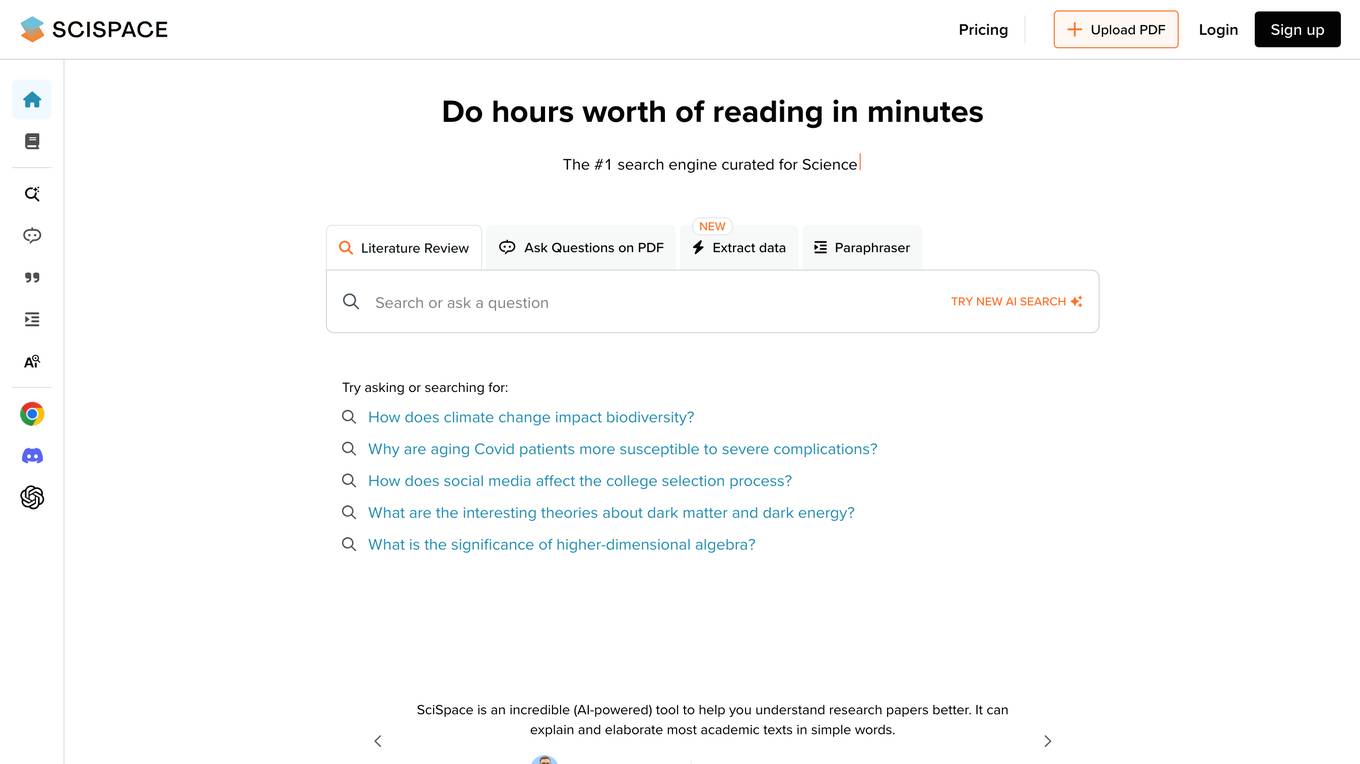

SciSpace

SciSpace is an AI-powered tool that helps researchers understand research papers better. It can explain and elaborate on most academic texts in simple words, which makes it useful for students, researchers, and anyone who wants to learn more about a particular topic. SciSpace has a user-friendly interface: simply upload a research paper or enter a URL, and it will highlight key concepts, provide definitions, and generate a summary of the paper. SciSpace can also be used to generate citations and find related papers.

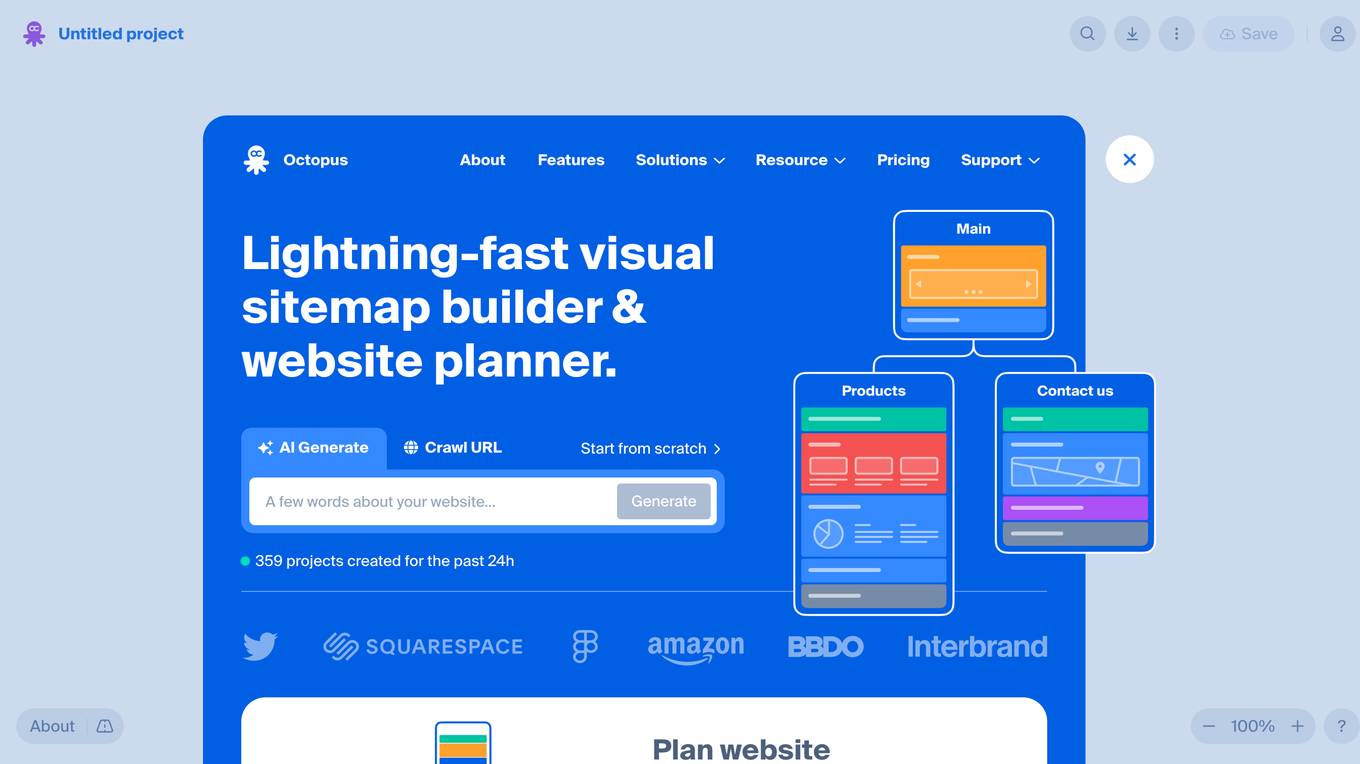

Octopus.do

Octopus.do is a lightning-fast visual sitemap builder and website planner that offers a seamless experience for website architecture planning. With the help of AI technology, users can easily generate colorful visual sitemaps and low-fidelity wireframes to visualize website content and layout. The platform allows users to prepare, manage, and collaborate on website content and SEO, making website planning fast, easy, and enjoyable. Octopus.do also provides a variety of sitemap templates for different types of websites, along with features for real-time collaboration, onsite SEO improvement, and integration with Figma designs.

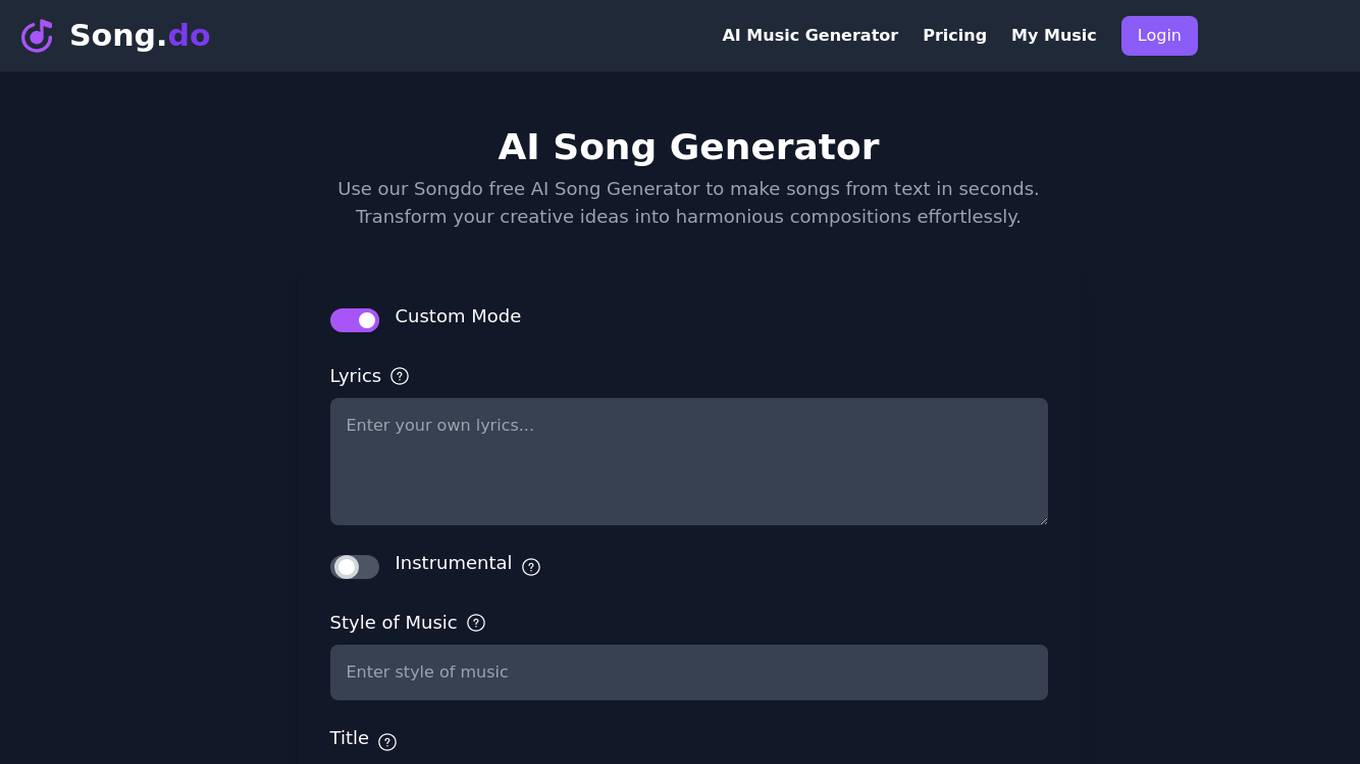

Song.do

Song.do is a free AI Song Generator tool that allows users to create beautiful and melodious songs from text in seconds. Users can input keywords, phrases, or sentences to customize lyrics and music styles, or enter just a few simple words to generate a unique song. The tool eliminates the need for expertise in music theory, melody creation, instrument playing, arrangement, recording, or lyric writing, making song creation accessible to everyone. With Song.do, users can make songs as gifts for various occasions or for personal enjoyment. The AI Song Generator supports a variety of music styles, including Blues, Classical, Rock, Pop, EDM, Funk, Instrumental, Metal, Jazz, and Rap.

Robots Do Marketing

Robots Do Marketing is an AI marketing platform designed for SaaS founders to plan, create, and optimize marketing campaigns 24/7. It provides the power of a growth marketing team without the agency fees and startup burnout. The platform aims to help businesses achieve marketing success efficiently and cost-effectively through AI-powered solutions.

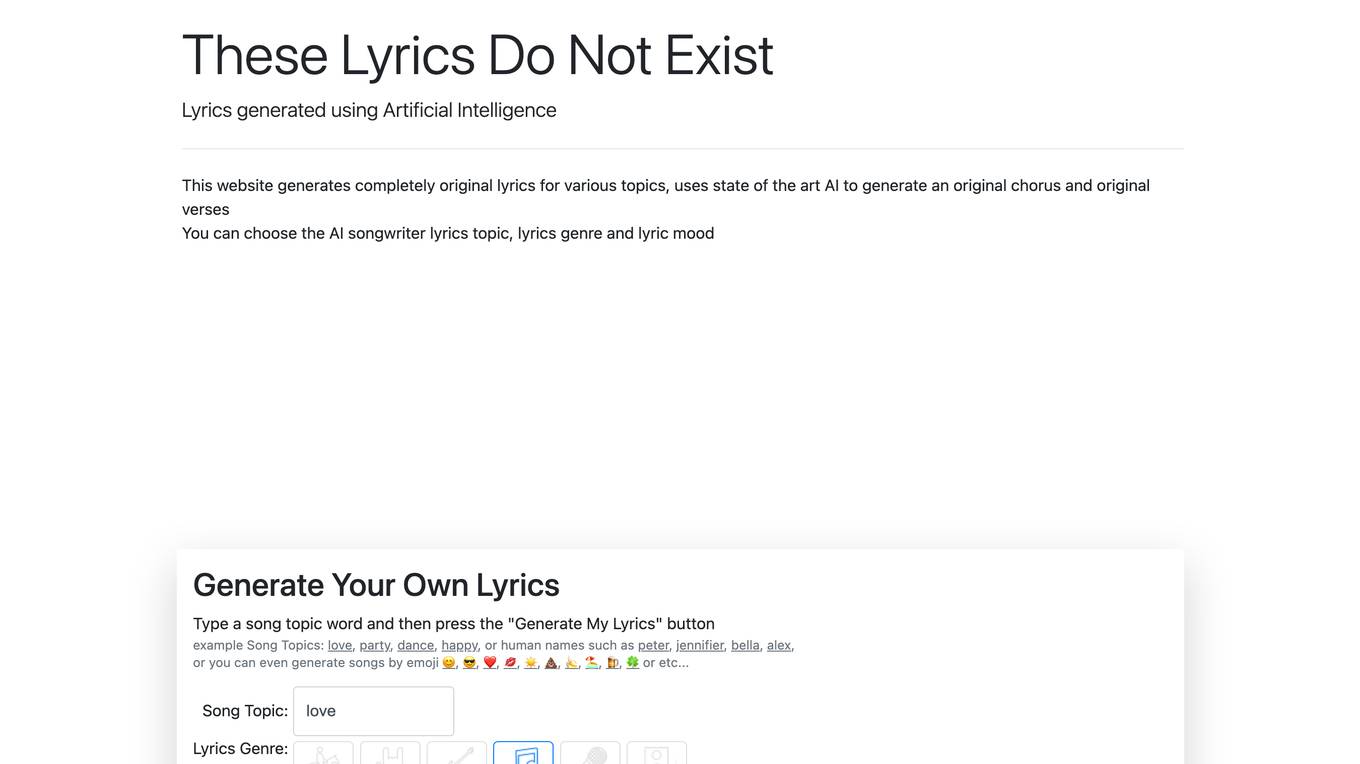

These Lyrics Do Not Exist

These Lyrics Do Not Exist is an AI-powered songwriter tool that generates completely original lyrics for various topics. Users can choose the topic, genre, and mood of the lyrics, and the tool uses state-of-the-art AI to create original verses and choruses. It aims to stimulate creative writing processes for songwriters, rappers, and freestylers, providing unlimited access to fresh and relevant lyrical ideas to overcome writer's block and enhance songwriting creativity and productivity.

How Old Do I Look?

This AI-powered age detection tool analyzes your photo to estimate how old you look. It utilizes advanced artificial intelligence technology to assess facial characteristics such as wrinkles, skin texture, and facial features, comparing them against a vast dataset to provide an approximation of your age. The tool is free to use and ensures privacy by automatically deleting uploaded photos after analysis.

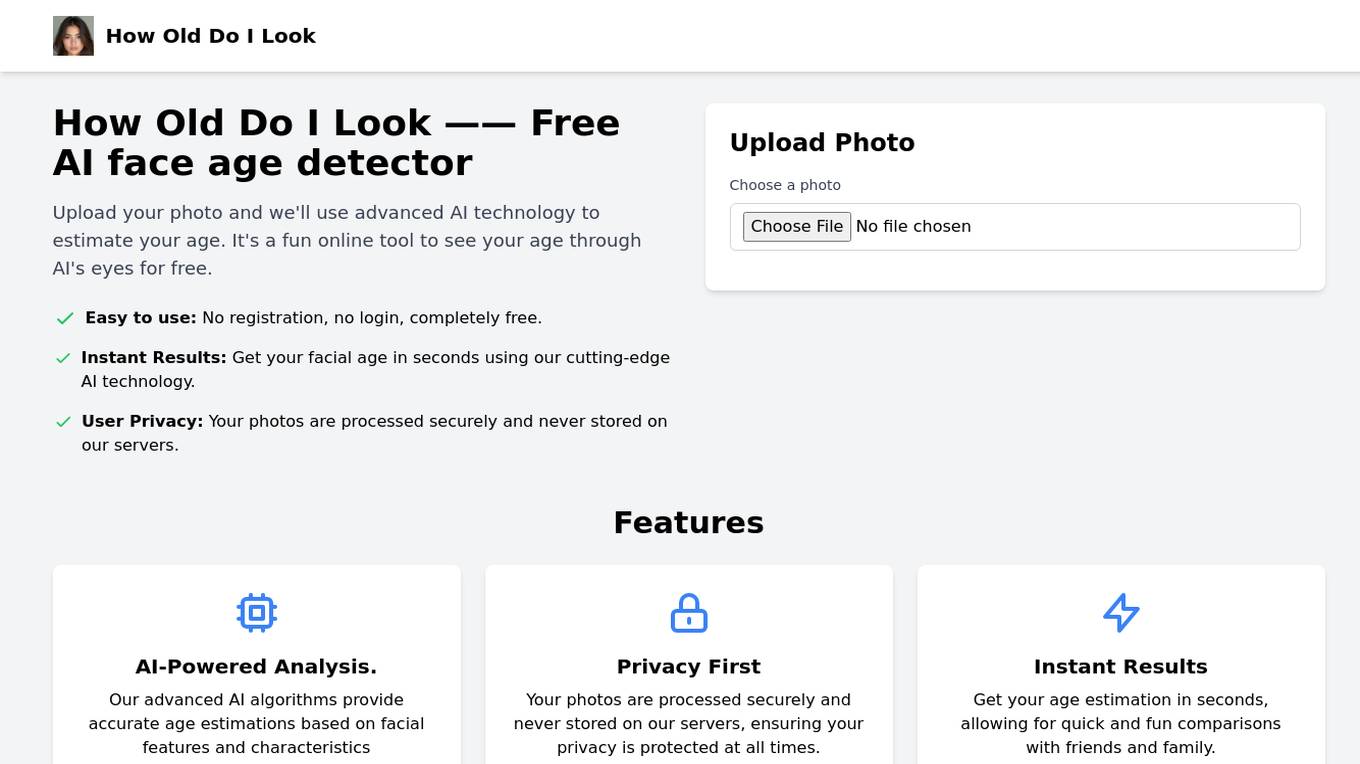

How Old Do I Look

How Old Do I Look is a free online AI face age detector that utilizes advanced AI technology to estimate the age of a person based on their uploaded photo. Users can easily upload a photo without the need for registration or login, ensuring a quick and fun experience. The tool provides instant results by analyzing facial features and characteristics through AI-powered algorithms. User privacy is prioritized as photos are securely processed and not stored on the servers. How Old Do I Look offers a unique way to see one's age through the eyes of AI, allowing for entertaining comparisons with friends and family.

Craft

Craft is a versatile and intuitive docs and notes editor designed to help users capture ideas, organize tasks, and bring big ideas to life. It offers a seamless writing experience across all devices, allowing users to transform quick notes into polished documents. Craft also provides features like templates, whiteboards, and AI-powered writing assistance. Users from various professions such as writers, educators, students, producers, and photographers utilize Craft for a wide range of tasks, from show notes and project outlines to shoot plans and travel itineraries.

Village

Village is an AI-powered platform that helps you build and manage your social capital. It provides you with the tools and insights you need to connect with the right people, build strong relationships, and achieve your goals.

Actor AI Assistant

Actor AI Assistant is an innovative AI tool designed to assist users in various tasks. It utilizes advanced artificial intelligence algorithms to provide personalized recommendations and streamline workflows. The tool is user-friendly and offers a seamless experience for individuals and businesses looking to enhance productivity and efficiency.

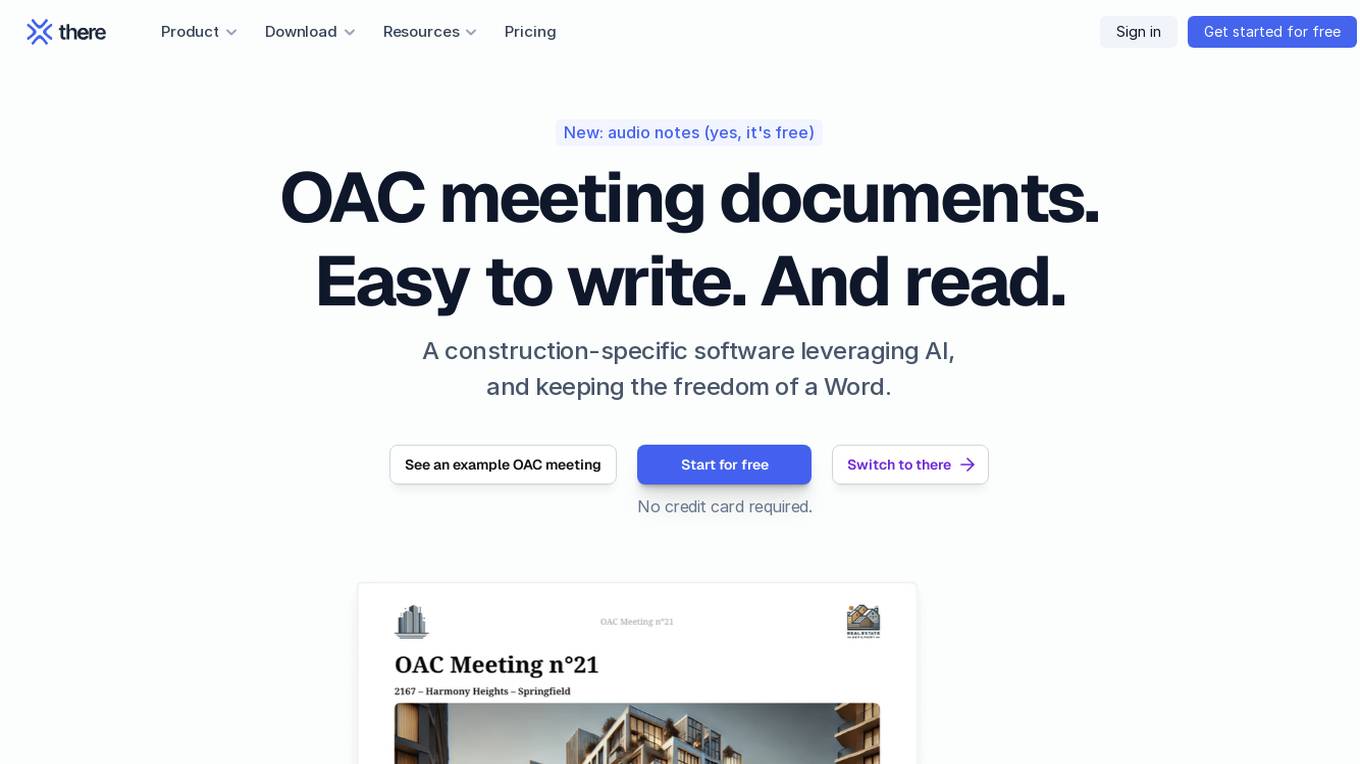

there

there is an AI-powered application designed for construction professionals to easily write and read meeting documents. It offers features such as taking notes with voice-to-text capabilities, structuring documents with AI, effortless sharing, and AI-powered summaries. Users can create professional documents quickly and efficiently, benefiting from a seamless workflow and enhanced productivity. The application aims to streamline the document creation process and provide a user-friendly experience for construction industry professionals.

Shadow

Shadow is a bot-free AI autopilot tool designed to assist users with post-meeting tasks. It listens to and understands conversations without a bot joining the meeting, helping users complete follow-up tasks 20x faster. Shadow generates transcripts, performs tasks discussed during meetings, and handles follow-up emails and CRM updates. The tool prioritizes privacy by storing recordings locally and offers features like extracting action items, key insights, follow-up emails, and performance feedback. Shadow is constantly learning new skills and aims to streamline meeting workflows for users.

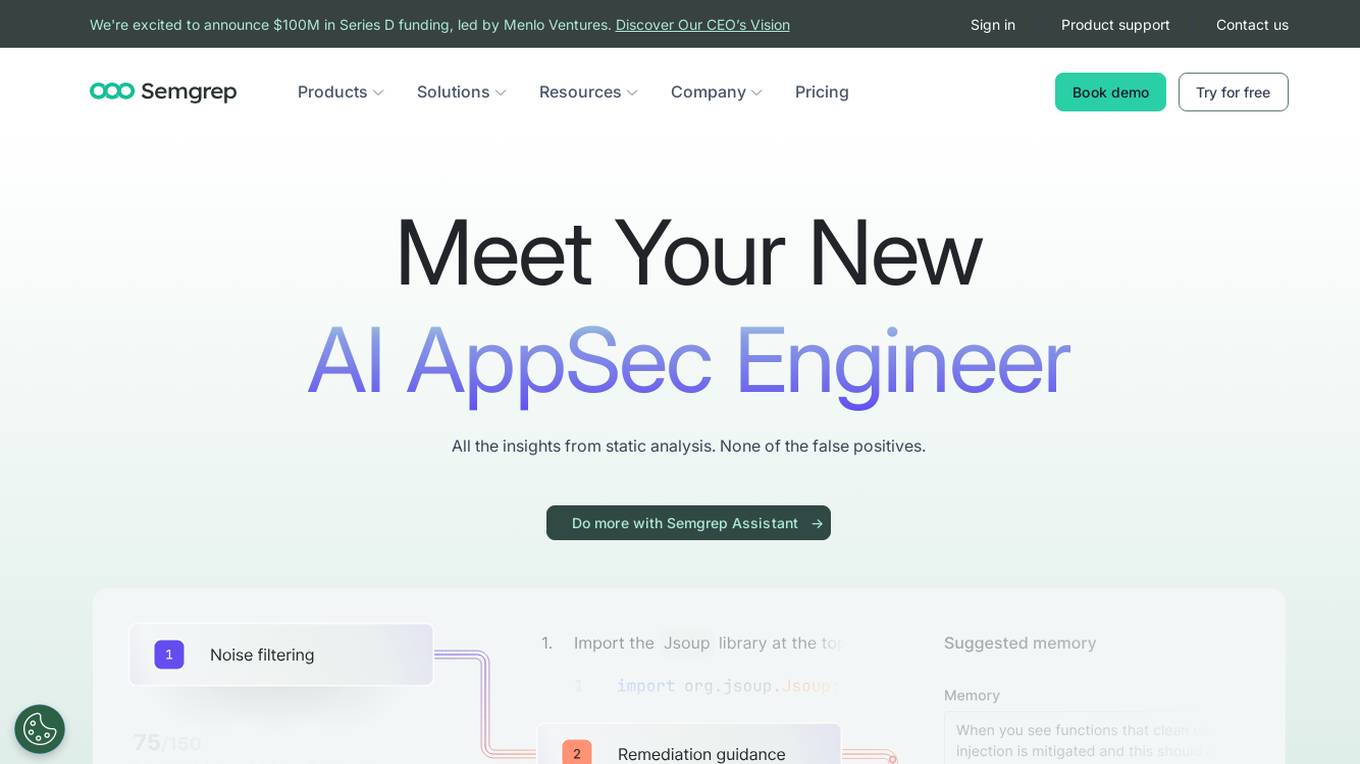

Semgrep

Semgrep is an AI-powered application designed for static analysis and security testing of code. It helps developers find and fix issues in their code, detect vulnerabilities in the software supply chain, and identify hardcoded secrets. Semgrep offers features such as AI-powered noise filtering, dataflow analysis, and tailored remediation guidance. It is known for its speed, transparency, and extensibility, making it a valuable tool for AppSec teams of all sizes.

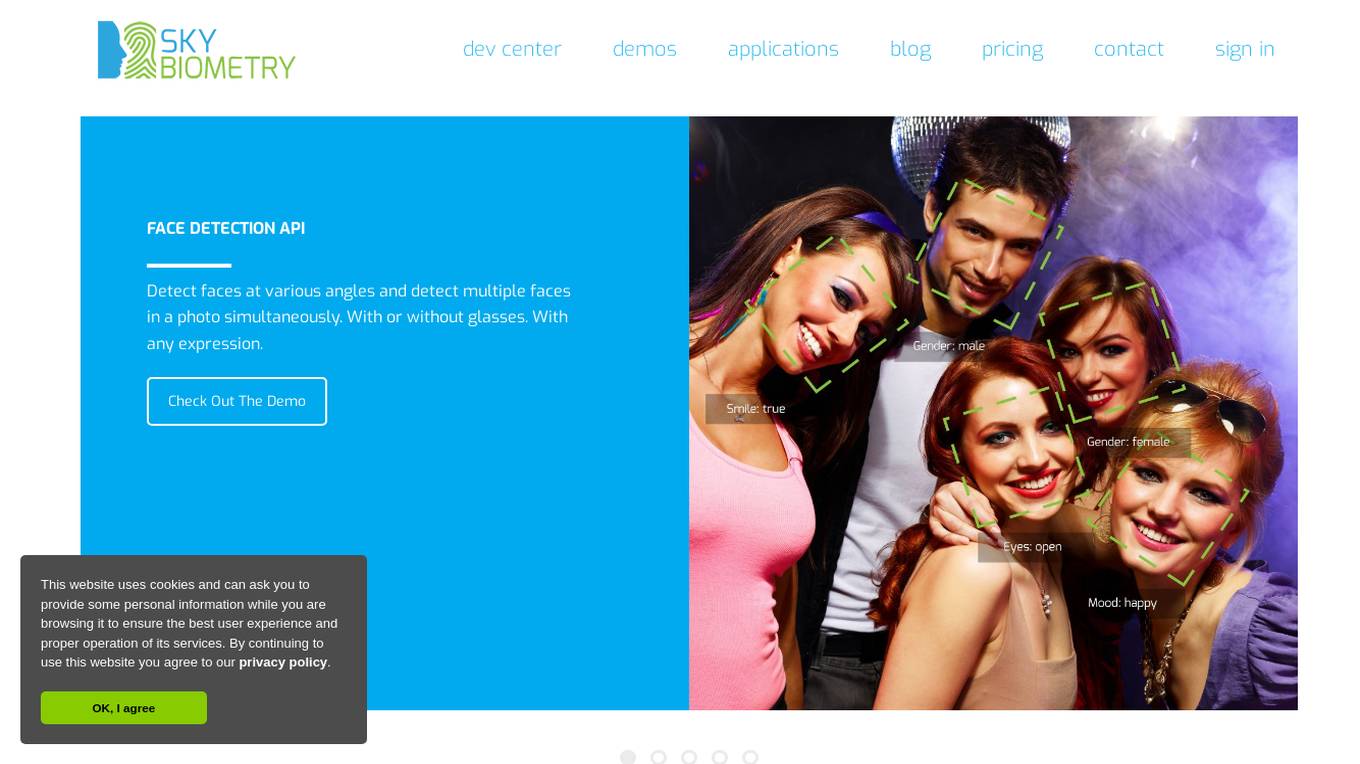

SkyBiometry

SkyBiometry is a cloud-based face recognition API service that offers advanced features such as face detection, face recognition, face grouping, and attribute determination. It provides high-quality face recognition algorithms and can detect faces at various angles, with or without glasses, and determine expressions and other attributes. The service is suitable for applications in advertising campaigns, photo management, user authentication, community moderation, and specific projects. SkyBiometry allows developers and marketers to integrate face recognition technology into their projects easily, enhancing customization and execution capabilities.

1 - Open Source AI Tools

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, and object detection. Images for sample applications and models are available on `quay.io`, and the repository also supports bootable containers for AI training on Linux.
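As a rough illustration of what prototyping against a locally running model server might look like, here is a minimal Python sketch for a summarization request. It assumes the server exposes an OpenAI-style chat completions endpoint; the URL, port, payload fields, and the function names `build_summary_request` and `summarize` are all assumptions made for this example and are not taken from the repository, so check the recipe you are running for the actual interface.

```python
import json
import urllib.request

# Assumption: a local model server with an OpenAI-style chat endpoint.
# The host, port, and path are placeholders, not documented values.
ASSUMED_ENDPOINT = "http://localhost:8001/v1/chat/completions"


def build_summary_request(text: str, max_words: int = 50) -> dict:
    """Build a chat-style payload asking the model to summarize `text`."""
    return {
        "messages": [
            {
                "role": "system",
                "content": f"Summarize the user's text in at most {max_words} words.",
            },
            {"role": "user", "content": text},
        ],
        "temperature": 0.2,
    }


def summarize(text: str, endpoint: str = ASSUMED_ENDPOINT) -> str:
    """POST the payload and return the first reply (needs a running server)."""
    body = json.dumps(build_summary_request(text)).encode("utf-8")
    request = urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    # Assumed response shape: OpenAI-style "choices" list.
    return reply["choices"][0]["message"]["content"]
```

Building the payload is kept separate from sending it, so the request shape can be inspected or adjusted without a server running; `summarize("...")` only works once a compatible model server is listening at the assumed address.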

20 - OpenAI Gpts

Do you want fries with that?

This GPT is designed to act as a fast food customer service manager, analyzing customer feedback and crafting appropriate responses for both the customer and the employee(s) involved.

How Do I Play Any Game?

Free gameplay advice for any game on the internet! Brought to you by Gaming-Fans.com.

Why do I live here?

I'm here to remind you of all the great things that exist where you live.

I do

Crafts personalized wedding vows, toasts, and anniversary letters based on user details.

Guru do Marketing de Velas

Marketing strategist for online sales of scented candles.

Let do Projurista

Analyst for public tenders and contracts, and attorney for writ of mandamus (mandado de segurança) cases. V.1.1.

How do I get a job in IT? (not career advice)

Expert-led IT career guide with interactive coaching and real-world simulations.

Cria roteiro da vida do cara

Tell me a person's name and I'll write a movie script about them.

O cara do som

Expert in residential speaker systems, offering detailed advice and product recommendations.

Find Things To Do & Events in Bangkok

Discover the best of Bangkok with our AI-powered guide! Personalized, up-to-date recommendations from trusted sources to enhance your Bangkok experience.