Best AI tools for< Detect Phishing Links >

20 - AI tool Sites

Link Shield

Link Shield is an AI-powered malicious URL detection API platform that helps protect online security. It utilizes advanced machine learning algorithms to analyze URLs and identify suspicious activity, safeguarding users from phishing scams, malware, and other harmful threats. The API is designed for ease of integration, affordability, and flexibility, making it accessible to developers of all levels. Link Shield empowers businesses to ensure the safety and security of their applications and online communities.

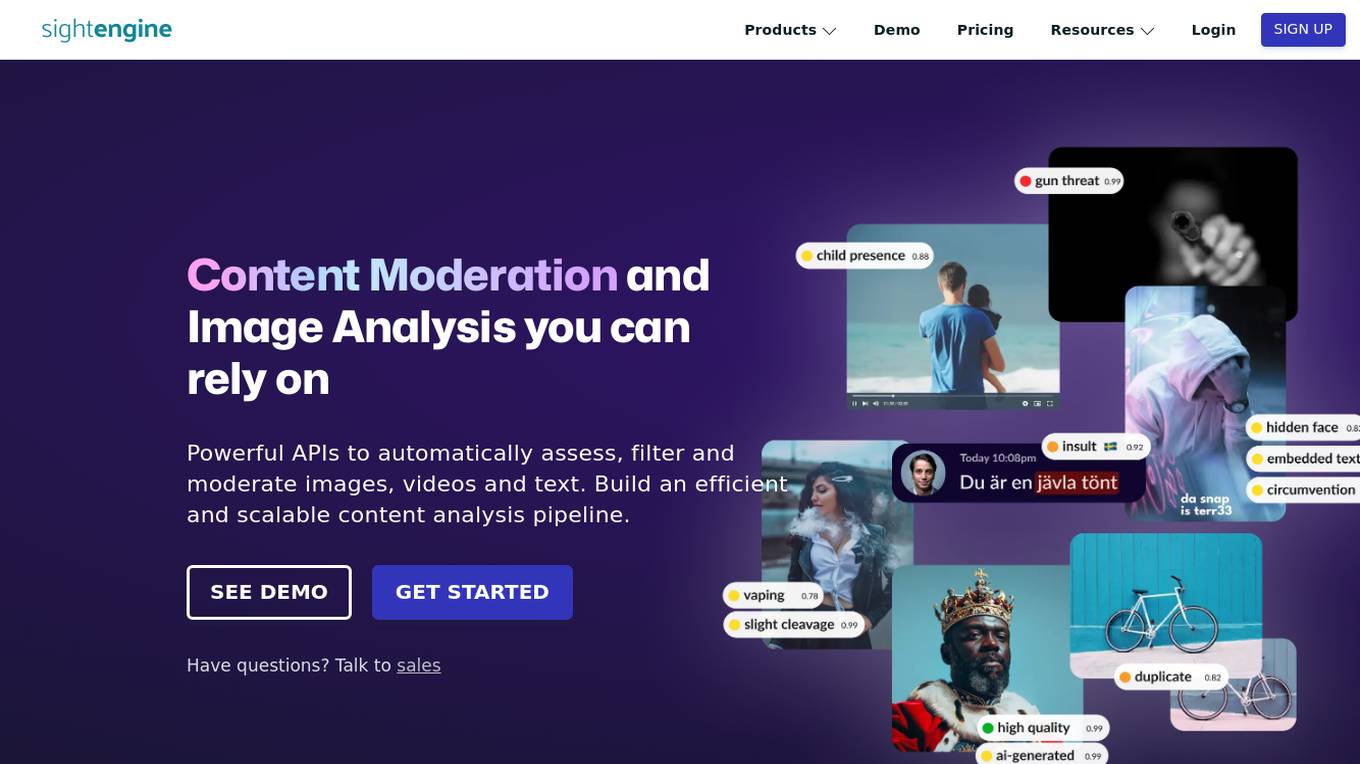

Sightengine

The website offers content moderation and image analysis products using powerful APIs to automatically assess, filter, and moderate images, videos, and text. It provides features such as image moderation, video moderation, text moderation, AI image detection, and video anonymization. The application helps in detecting unwanted content, AI-generated images, and personal information in videos. It also offers tools to identify near-duplicates, spam, and abusive links, and prevent phishing and circumvention attempts. The platform is fast, scalable, accurate, easy to integrate, and privacy compliant, making it suitable for various industries like marketplaces, dating apps, and news platforms.

Abnormal

Abnormal is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email attacks such as phishing, social engineering, and account takeovers. The platform offers unified protection across email and cloud applications, behavioral anomaly detection, account compromise detection, data security, and autonomous AI agents for security operations. Abnormal is recognized as a leader in email security and AI-native security, trusted by over 3,000 customers, including 20% of the Fortune 500. The platform aims to autonomously protect humans, reduce risks, save costs, accelerate AI adoption, and provide industry-leading security solutions.

Abnormal Security

Abnormal Security is an AI-powered platform that leverages superhuman understanding of human behavior to protect against email threats such as phishing, social engineering, and account takeovers. The platform is trusted by over 3,000 customers, including 25% of the Fortune 500 companies. Abnormal Security offers a comprehensive cloud email security solution, behavioral anomaly detection, SaaS security, and autonomous AI security agents to provide multi-layered protection against advanced email attacks. The platform is recognized as a leader in email security and AI-native security, delivering unmatched protection and reducing the risk of phishing attacks by 90%.

Breacher.ai

Breacher.ai is an AI-powered cybersecurity solution that specializes in deepfake detection and protection. It offers a range of services to help organizations guard against deepfake attacks, including deepfake phishing simulations, awareness training, micro-curriculum, educational videos, and certification. The platform combines advanced AI technology with expert knowledge to detect, educate, and protect against deepfake threats, ensuring the security of employees, assets, and reputation. Breacher.ai's fully managed service and seamless integration with existing security measures provide a comprehensive defense strategy against deepfake attacks.

Darktrace

Darktrace is an essential AI cybersecurity platform that offers proactive protection, cloud-native AI security, comprehensive risk management, and user protection across various devices. It accelerates triage by 10x, defends with confidence, and connects with various integrations. Darktrace ActiveAI Security Platform spots novel threats across organizations, providing solutions for ransomware, APTs, phishing, data loss, and more. With a focus on defense, Darktrace aims to transform cybersecurity by detecting and responding to known and novel threats in real-time.

Darktrace

Darktrace is a cybersecurity platform that leverages AI technology to provide proactive protection against cyber threats. It offers cloud-native AI security solutions for networks, emails, cloud environments, identity protection, and endpoint security. Darktrace's AI Analyst investigates alerts at the speed and scale of AI, mimicking human analyst behavior. The platform also includes services such as 24/7 expert support and incident management. Darktrace's AI is built on a unique approach where it learns from the organization's data to detect and respond to threats effectively. The platform caters to organizations of all sizes and industries, offering real-time detection and autonomous response to known and novel threats.

Varonis

Varonis is an AI-powered data security platform that provides end-to-end data security solutions for organizations. It offers automated outcomes to reduce risk, enforce policies, and stop active threats. Varonis helps in data discovery & classification, data security posture management, data-centric UEBA, data access governance, and data loss prevention. The platform is designed to protect critical data across multi-cloud, SaaS, hybrid, and AI environments.

Face Shape Detect

Face Shape Detect is an AI-powered tool that allows users to analyze their unique facial structure and determine their face shape for personalized recommendations. Users can upload a photo to receive accurate face shape analysis and styling tips. The tool prioritizes privacy by securely processing images without storing them. It helps users understand their face shape for better fashion and beauty choices.

ZeroGPT

ZeroGPT is a trusted AI detector tool that specializes in detecting AI-generated content like ChatGPT, GPT4, and Gemini. It offers advanced features such as AI summarization, paraphrasing, grammar and spell checking, translation, word counting, and citation generation. The tool is designed to provide highly accurate results and supports multiple languages. ZeroGPT stands out for its highlighted sentences feature, batch file upload capability, high accuracy model, and automatically generated reports. It utilizes DeepAnalyse™ Technology, a multi-stage methodology that optimizes accuracy while minimizing false positives and negatives. Users can unlock premium features and API access to enhance their writing skills and integrate the tool on a large scale.

AI or Not

AI or Not is an AI-powered tool that helps businesses and individuals detect AI-generated images and audio. It uses advanced machine learning algorithms to analyze content and determine the likelihood of AI manipulation. With AI or Not, users can protect themselves from fraud, misinformation, and other malicious activities involving AI-generated content.

AIDP

AIDP is a comprehensive platform that helps you find and remove the fingerprints of AI in documents. It includes automatic and manual tools for revising content that was written by ChatGPT and other AI models. With AIDP, you can: * Detect and wipe the traces of AI instantly. * See what triggers AI detection. * Get suggestions for wording changes and rewrites. * Make AI sound human. * Get a tone analysis to determine how your document sounds. * Find and wipe AI from any document.

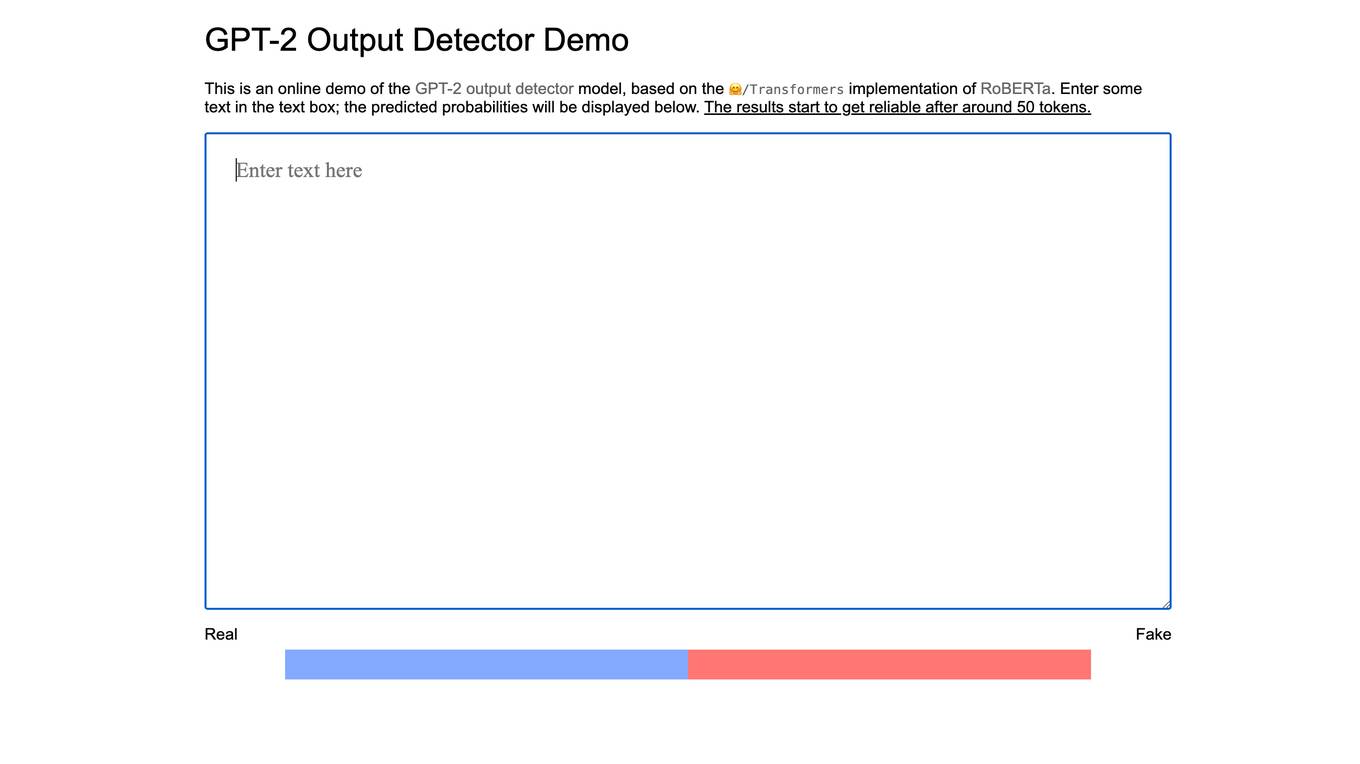

GPT-2 Output Detector

The GPT-2 Output Detector is an online tool that helps users identify whether a given text was generated by the GPT-2 language model. The tool is based on the RoBERTa implementation of Transformers, a popular natural language processing library. Users can enter text into the text box, and the tool will predict the probability that the text was generated by GPT-2. The results start to get reliable after around 50 tokens.

GRAIL

GRAIL is a healthcare company innovating to solve medicine’s most important challenges. Our team of leading scientists, engineers and clinicians are on an urgent mission to detect cancer early, when it is more treatable and potentially curable. GRAIL's Galleri® test is a first-of-its-kind multi-cancer early detection (MCED) test that can detect a signal shared by more than 50 cancer types and predict the tissue type or organ associated with the signal to help healthcare providers determine next steps.

AIDetect

AIDetect is a powerful AI content detector tool that allows users to identify AI-generated writing within any text. It offers cutting-edge features and high accuracy, comparable to Turnitin, to help users verify the authenticity of content. With advanced technology, AIDetect ensures that users can distinguish between human and AI-generated content effortlessly.

AI Detector

AI Detector is an online tool that uses advanced algorithms and machine learning to check if your written text is generated by AI or a human writer. It analyzes the writing style, sentence structure, and other linguistic patterns to determine the likelihood of AI authorship. The tool provides a percentage score indicating the probability of AI-generated content, helping users identify potential plagiarism or AI-assisted writing.

AI Checker

AI Checker is a free tool and plagiarism detector that accurately identifies if a text is generated by AI tools like GPT-3, GPT-4, Gemini, OpenAI, and others. It helps users protect their content by detecting AI-generated text and human-written content. The tool uses advanced algorithms to provide accurate results and percentage analysis of AI-generated content within a text. AI Checker is beneficial for writers, students, educators, content marketers, freelancers, editors, publishers, researchers, and content consumers across different languages and contexts.

HEALWELL AI

HEALWELL AI is a healthcare technology company focusing on preventative care through AI and data science. Their mission is to improve healthcare and save lives by early disease detection. HEALWELL provides AI tools for healthcare providers to screen and detect rare, complex, and chronic diseases. They have developed AI clinical co-pilot technologies to assist physicians in early disease detection, ultimately accelerating time to diagnosis and saving lives.

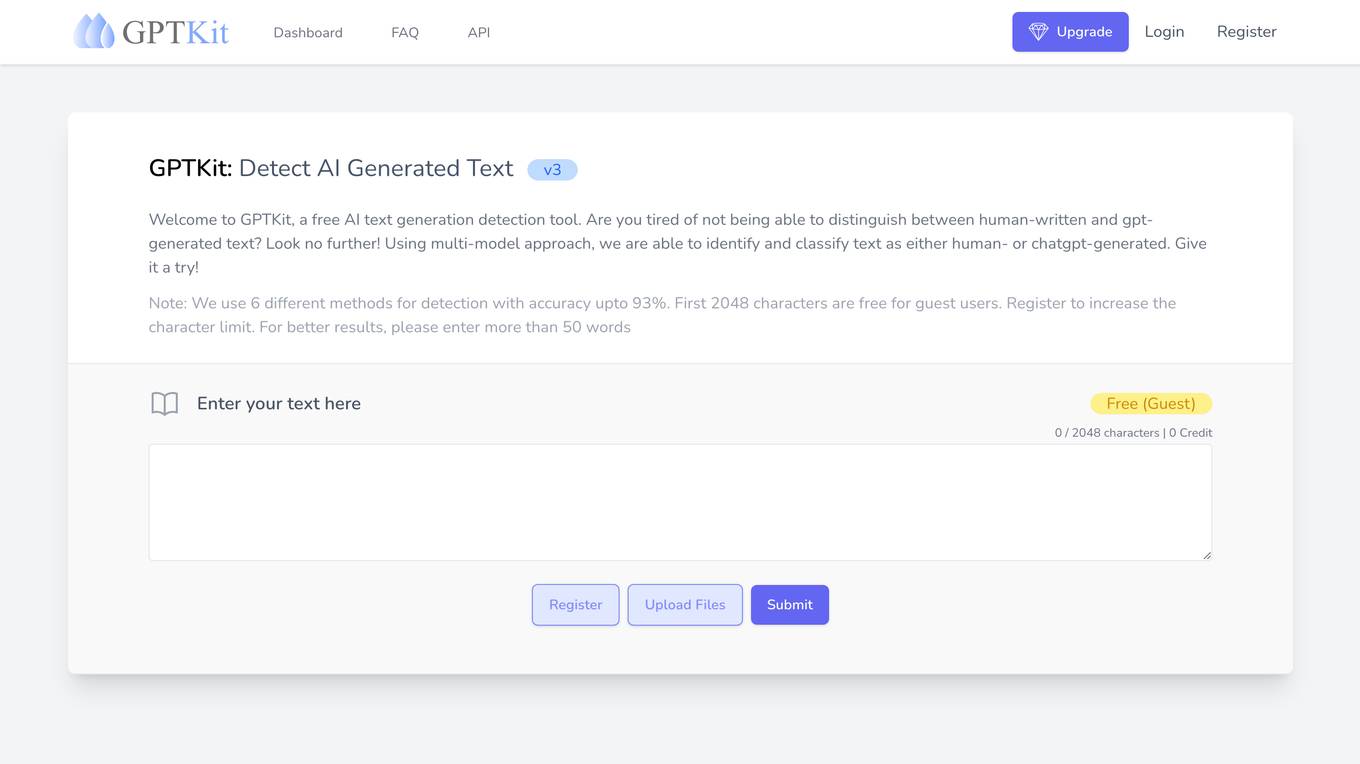

GPTKit

GPTKit is a free AI text generation detection tool that utilizes six different AI-based content detection techniques to identify and classify text as either human- or AI-generated. It provides reports on the authenticity and reality of the analyzed content, with an accuracy of approximately 93%. The first 2048 characters in every request are free, and users can register for free to get 2048 characters/request.

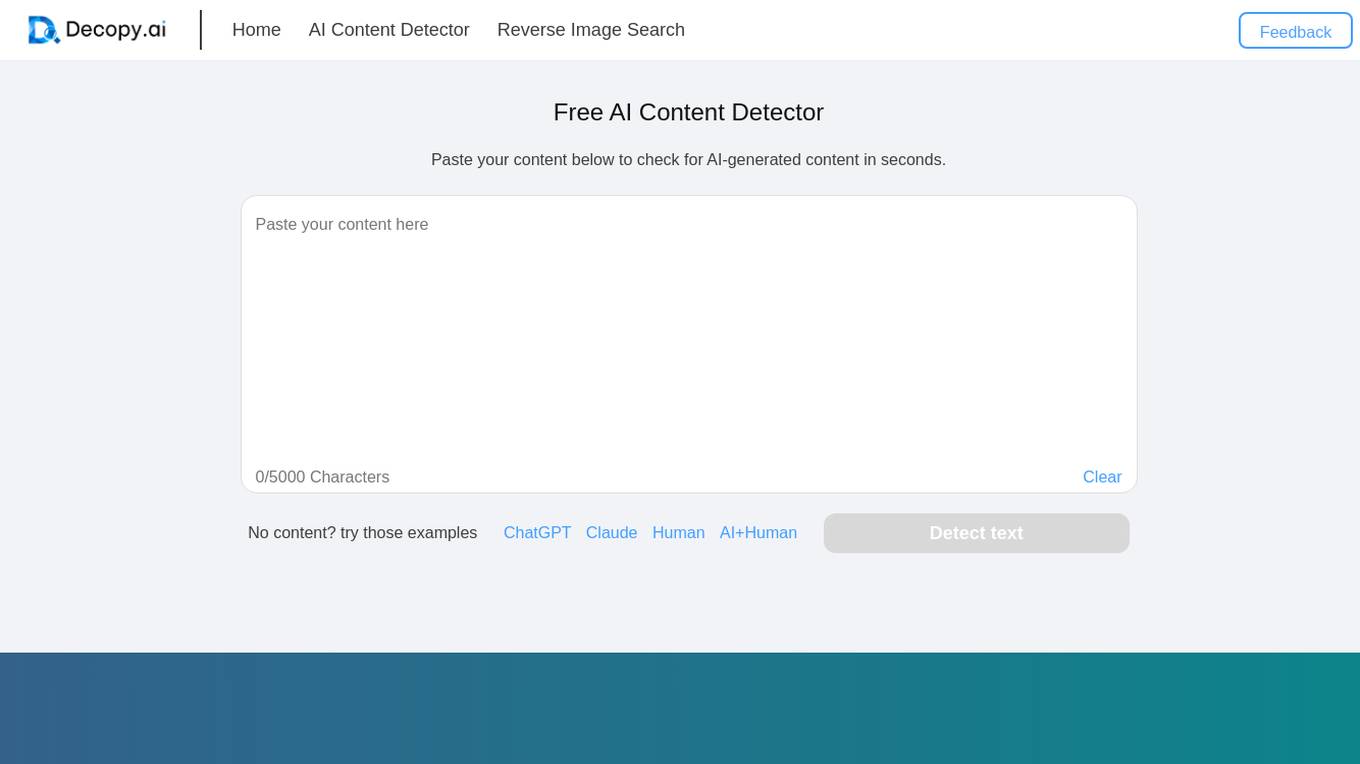

Decopy AI Content Detector

Decopy AI Content Detector is an AI tool designed to help users determine if a given text was written by a human or generated by AI. It accurately identifies AI-generated, paraphrased, and human-written content. The tool offers features such as AI content highlighting, superior detection accuracy, user-friendly interface, free AI detection, instant access without sign-up, and guaranteed privacy. Users can utilize the AI Detector for tasks like academic integrity checks, content creation, journalism verification, publishing standards maintenance, SEO content uniqueness, social media reliability checks, legal document originality verification, and corporate training material quality assurance.

0 - Open Source AI Tools

20 - OpenAI Gpts

Phish or No Phish Trainer

Hone your phishing detection skills! Analyze emails, texts, and calls to spot deception. Become a security pro!

FallacyGPT

Detect logical fallacies and lapses in critical thinking to help avoid misinformation in the style of Socrates

AI Detector

AI Detector GPT is powered by Winston AI and created to help identify AI generated content. It is designed to help you detect use of AI Writing Chatbots such as ChatGPT, Claude and Bard and maintain integrity in academia and publishing. Winston AI is the most trusted AI content detector.

Plagiarism Checker

Plagiarism Checker GPT is powered by Winston AI and created to help identify plagiarized content. It is designed to help you detect instances of plagiarism and maintain integrity in academia and publishing. Winston AI is the most trusted AI and Plagiarism Checker.

BS Meter Realtime

Detects and measures information credibility. Provides a "BS Score" (0-100) based on content analysis for misinformation signs, including factual inaccuracies and sensationalist language. Real-time feedback.

Wowza Bias Detective

I analyze cognitive biases in scenarios and thoughts, providing neutral, educational insights.

Defender for Endpoint Guardian

To assist individuals seeking to learn about or work with Microsoft's Defender for Endpoint. I provide detailed explanations, step-by-step guides, troubleshooting advice, cybersecurity best practices, and demonstrations, all specifically tailored to Microsoft Defender for Endpoint.

Prompt Injection Detector

GPT used to classify prompts as valid inputs or injection attempts. Json output.

Blue Team Guide

it is a meticulously crafted arsenal of knowledge, insights, and guidelines that is shaped to empower organizations in crafting, enhancing, and refining their cybersecurity defenses