Best AI tools for < Describe Images >

12 - AI tool Sites

Seeing AI

Seeing AI is a free app designed for the blind and low vision community. It utilizes AI technology to narrate the world around users, assisting with tasks such as reading, describing photos, and identifying products. The app is an ongoing research project that evolves based on feedback from the community and advancements in AI research.

3Play Media

3Play Media is a leading provider of AI-powered media accessibility solutions. Its mission is to make the world's media accessible to everyone, regardless of ability. It offers a suite of products and services that make it easy to add captions, transcripts, audio descriptions, and other accessibility features to video and audio content.

Be My Eyes

Be My Eyes is an AI-powered visual assistance application that connects blind and low-vision users with volunteers and companies worldwide. Users can request live video support, receive assistance through artificial intelligence, and access professional support from partners. The app aims to improve accessibility for individuals with visual impairments by providing a platform for real-time assistance and support.

Image In Words

Image In Words is a generative model designed for scenarios that require generating ultra-detailed text from images. It leverages cutting-edge image recognition technology to provide high-quality and natural image descriptions. The framework ensures detailed and accurate descriptions, improves model performance, reduces fictional content, enhances visual-language reasoning capabilities, and has wide applications across various fields. Image In Words supports English and has been trained using approximately 100,000 hours of English data. It has demonstrated high quality and naturalness in various tests.

CaptionBot

CaptionBot is an AI tool developed by Microsoft Cognitive Services that provides automated image captioning. It uses advanced artificial intelligence algorithms to analyze images and generate descriptive captions. Users can upload images to the platform and receive accurate and detailed descriptions of the content within the images. CaptionBot.ai aims to assist users in understanding and interpreting visual content more effectively through the power of AI technology.

AITag.Photo

AITag.Photo is an AI tool that helps users quickly generate tags, descriptions, and other keywords for their photos. It uses advanced image understanding technology to accurately generate content descriptions for each photo, making it easy to organize and manage photos efficiently. Users can create stories based on images, featuring dialogues or monologues of characters. AITag.Photo simplifies the process of describing photos, saving users time and effort in photo management.

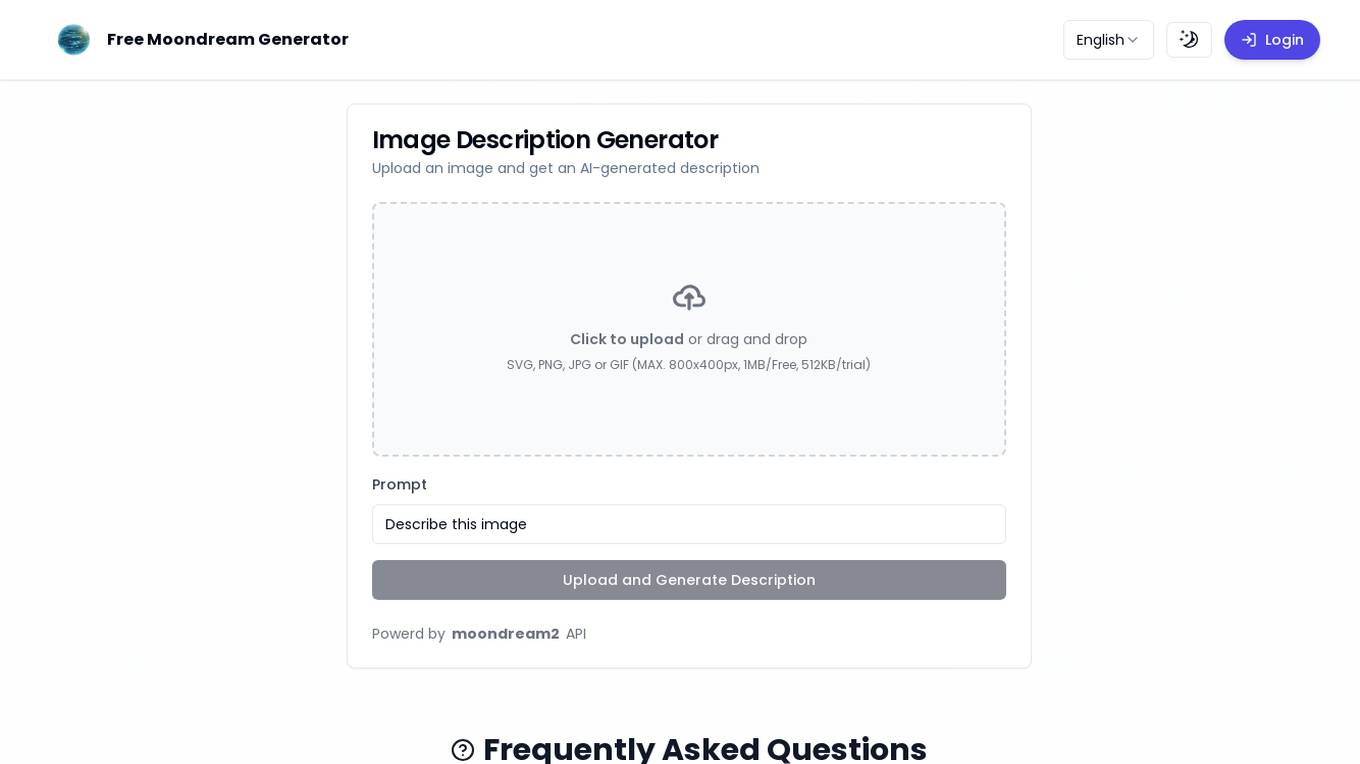

Free Moondream Generator

Free Moondream Generator is an AI tool that allows users to upload an image and receive an AI-generated description. The tool supports various image file types such as SVG, PNG, JPG, or GIF with specific size limitations. It is powered by the Moondream2 API, providing users with accurate and detailed image descriptions. The tool aims to simplify the process of generating descriptions for images through AI technology.

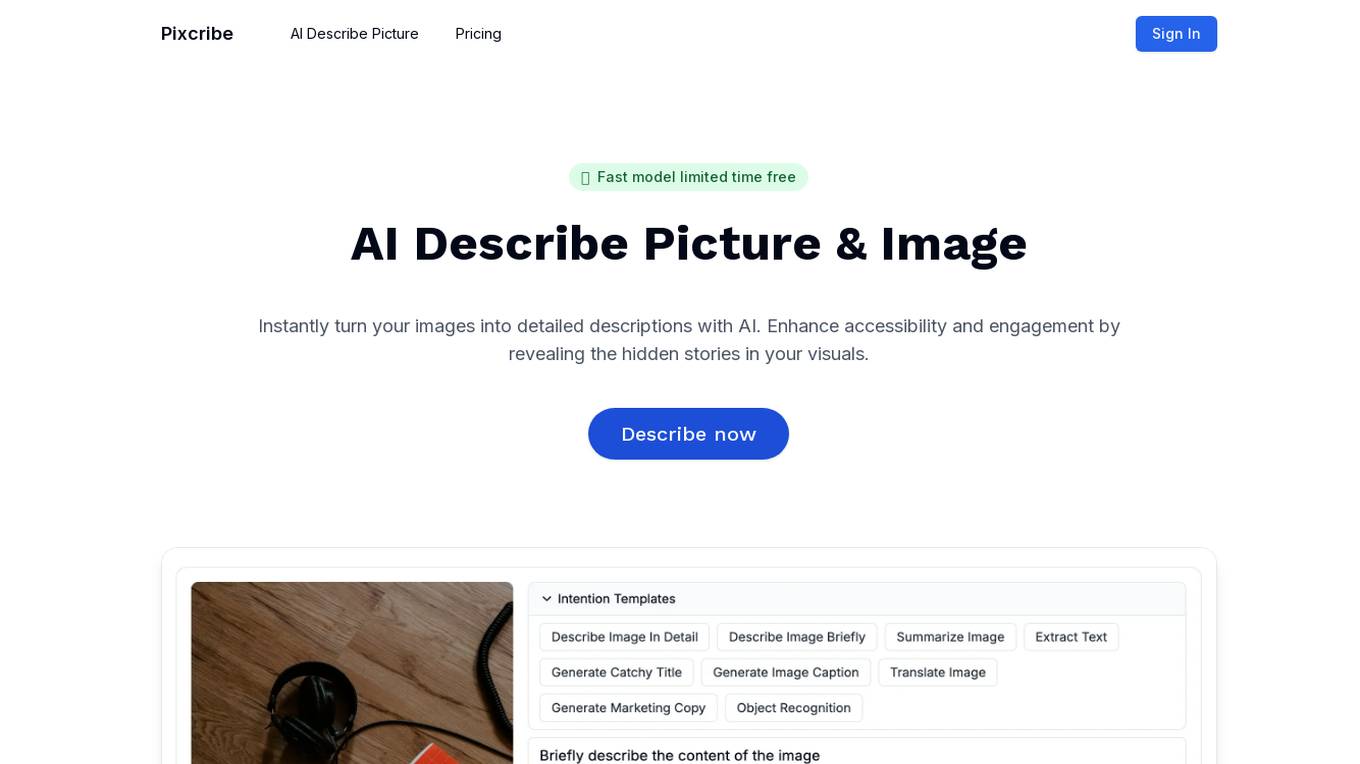

Pixcribe

Pixcribe is an AI-powered tool that instantly turns images into detailed descriptions, enhancing accessibility and engagement by revealing hidden stories in visuals. Users can harness AI to describe pictures and images, saving time and captivating audiences with rich visual narratives. The tool generates accurate, SEO-friendly descriptions in seconds, freeing users to focus on creating great content. Additionally, Pixcribe adapts to any industry, tailoring descriptions to specific fields and boosting relevance and conversions with industry-specific insights.

Describe.pictures

Describe.pictures is an AI tool designed to generate detailed descriptions of images. By utilizing advanced AI models, users can quickly obtain complete descriptions of various images. The tool allows users to select an image and input the desired way of describing it, such as providing detailed or brief descriptions. The generated descriptions are detailed and vivid, capturing the essence and details of the image. With a focus on enhancing user experience and providing accurate image descriptions, Describe.pictures is a valuable tool for various applications.

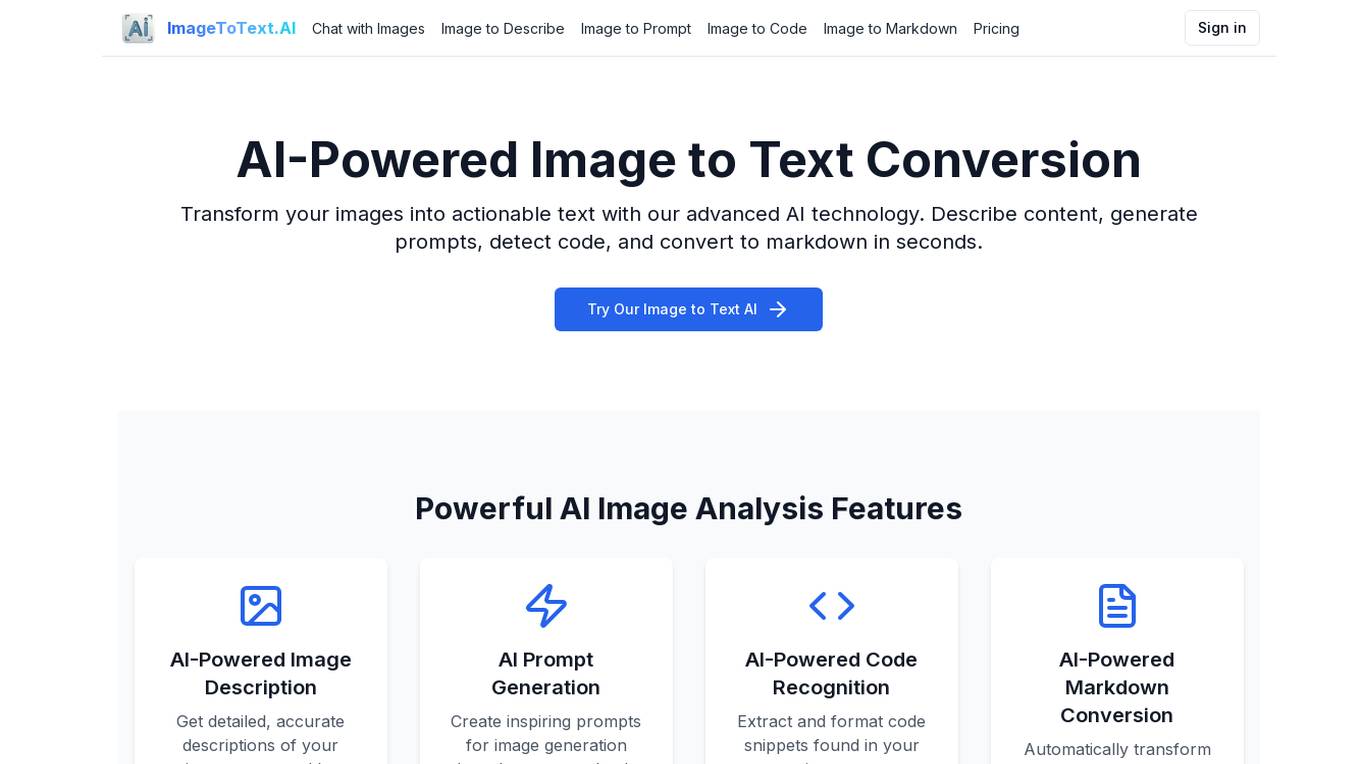

ImageToText.AI

ImageToText.AI is an AI-powered tool that allows users to convert images into actionable text using advanced AI technology. Users can describe image content, generate prompts, detect code, and convert to markdown in seconds. The tool offers powerful AI image analysis features such as image description, prompt generation, code recognition, and markdown conversion. With simple and transparent pricing options, users can choose between a one-time purchase or a monthly subscription plan. ImageToText.AI aims to provide users with a seamless experience in transforming images into text with the help of AI technology.

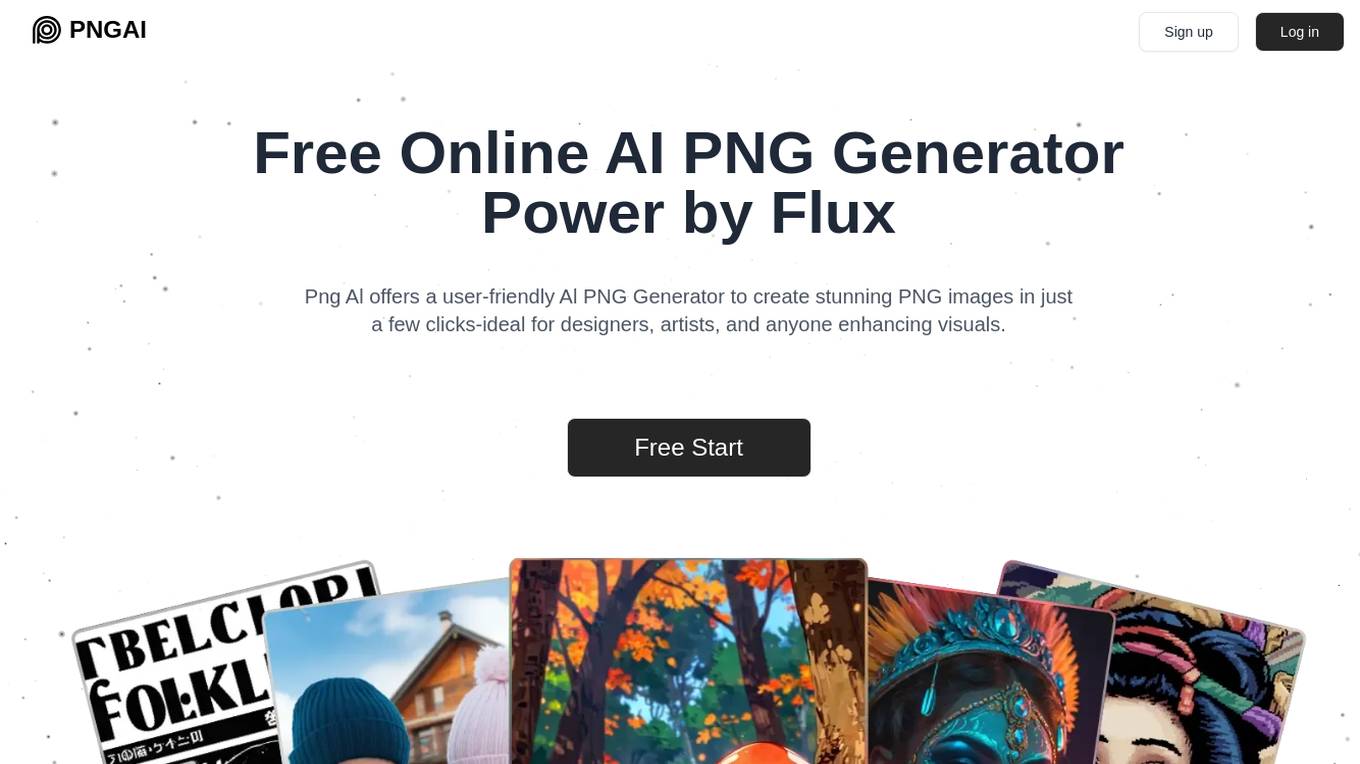

PNGAI

PNGAI is a free online AI PNG generator powered by Flux that creates PNG images in a few clicks. Users describe the image they want, and the tool quickly generates diverse visuals, making it well suited to designers, artists, and content creators. Features include Text to PNG Generator, Image Remix, Image to Describe, and an easy-to-use interface. PNGAI uses Flux as its core model for image generation.

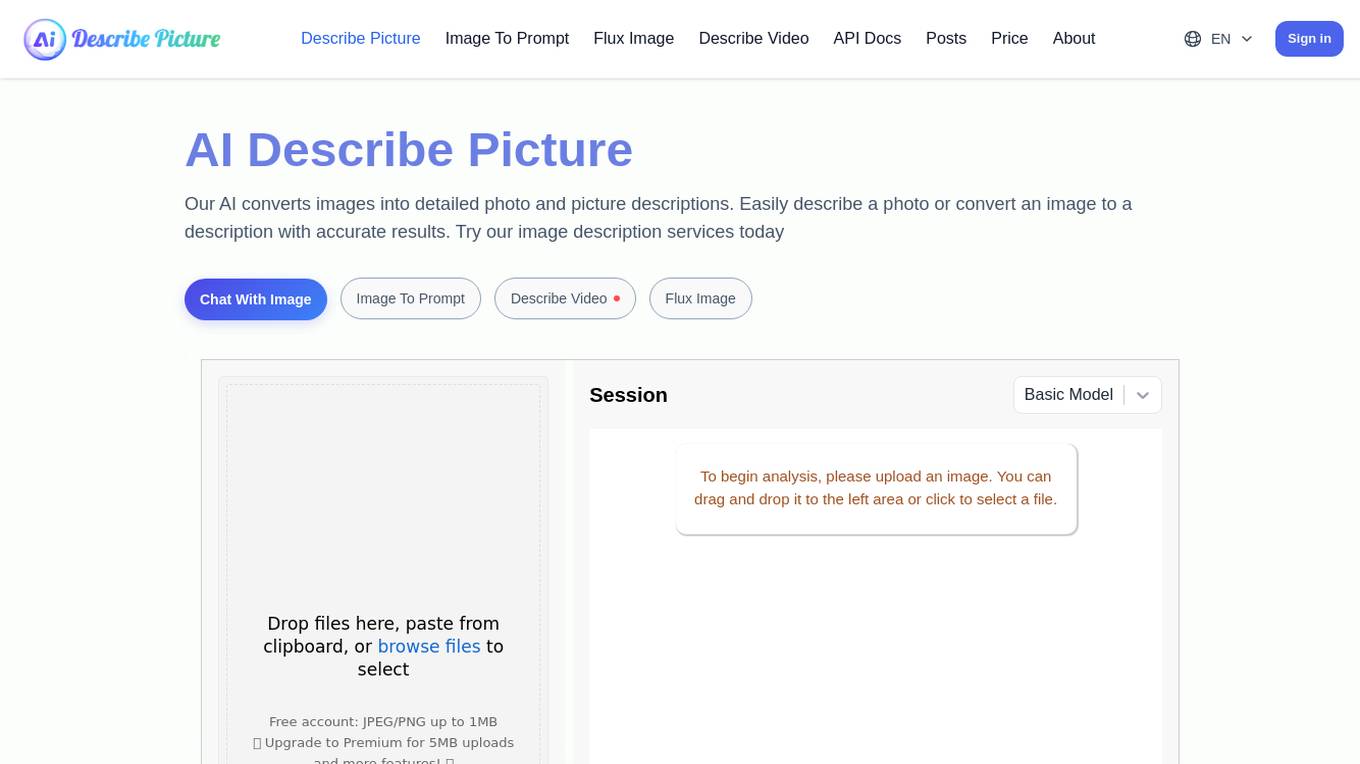

AI Describe Picture

AI Describe Picture is a free online tool that offers image description services, image-to-text conversion, and code conversion. The AI-powered platform allows users to easily describe photos, convert images to detailed descriptions, extract text from images, and convert screenshots into HTML, CSS, or JavaScript code. It also provides content extraction in Markdown format and personalized content creation. With features like intelligent image recognition, single-click code copying, and efficient text extraction, AI Describe Picture aims to enhance users' productivity and creativity in image processing tasks.

2 - Open Source AI Tools

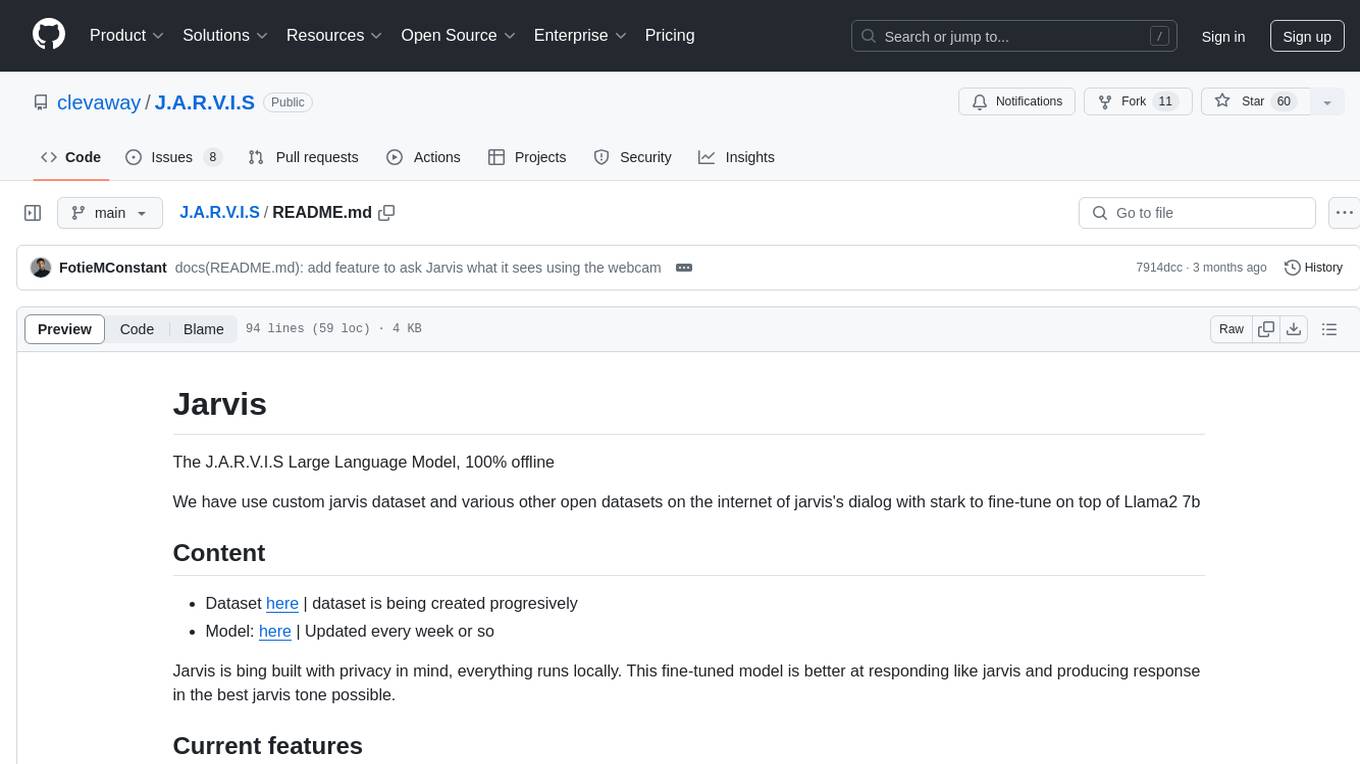

J.A.R.V.I.S

J.A.R.V.I.S. is an offline large language model fine-tuned on custom and open datasets to mimic Jarvis's dialog with Stark. It prioritizes privacy by running locally and excels in responding like Jarvis with a similar tone. Current features include time/date queries, web searches, playing YouTube videos, and webcam image descriptions. Users can interact with Jarvis via command line after installing the model locally using Ollama. Future plans involve voice cloning, voice-to-text input, and deploying the voice model as an API.
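For readers curious what a locally run image description looks like with Ollama, here is a minimal sketch. It is not taken from the J.A.R.V.I.S. project: it assumes a local Ollama server on its default port and a vision-capable model already pulled (`llava` is used here purely as an example), and the function names are hypothetical.

```python
import base64
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_describe_request(image_bytes, model="llava", prompt="Describe this image."):
    """Build the JSON payload for Ollama's /api/generate endpoint.

    The model name is an assumption; any vision-capable model that has
    been pulled locally could be substituted.
    """
    return {
        "model": model,
        "prompt": prompt,
        # Ollama expects images as a list of base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        # Ask for one complete JSON response instead of a token stream.
        "stream": False,
    }


def describe_image(path, **kwargs):
    """Send an image file to the local Ollama server and return the description."""
    with open(path, "rb") as f:
        payload = build_describe_request(f.read(), **kwargs)
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Calling `describe_image("frame.jpg")` with a saved webcam frame (a hypothetical file name) would return the model's text description, provided the server is running. Nothing leaves the machine, which matches the privacy goal described above.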

generative-ai-cdk-constructs-samples

This repository contains sample applications showcasing the use of AWS Generative AI CDK Constructs to build solutions for document exploration, content generation, image description, and deploying various models on SageMaker. It also includes samples for deploying Amazon Bedrock Agents and automating contract compliance analysis. The samples cover a range of backend and frontend technologies such as TypeScript, Python, and React.

10 - OpenAI GPTs

Journal Recognizer OCR

OCR optimized for handwritten notebooks. Transcribes up to 10 images, with one-click copying of the transcript. No text prompt is necessary. Reads journals, reports, and notes: all handwriting is transcribed verbatim, then the text is summarized and graphic features of the image are described. Ask it to change any behavior.

Alt Tag Ace for Products

Professional, welcoming creator of detailed, SEO-optimized Alt Tags, specifically for products.

CP-Picture(看图说话)

This tool describes the content and emotions of images and writes refined monologues to add personality to your shares. It supports both Chinese and English and suits a variety of occasions.