Best AI Tools for Deployment

20 AI Tool Sites

Deployment Manager

The website is a platform for deploying applications and managing deployment processes. Users can temporarily pause deployments, control deployment status, and make adjustments as needed to keep application releases running smoothly.

Deployment Manager

The website is a platform for deploying software applications and managing the deployment process. Users can pause and resume deployments as needed, and a user-friendly interface lets them monitor and manage deployment tasks.

Deployment Manager

The website was temporarily paused when this listing was written, apparently because of a technical issue tied to a specific deployment code. It may be undergoing maintenance or dealing with a technical fault. Users should wait for further updates or contact the site's administrators for assistance.

Deployment Manager

The website is a platform for managing software deployments. It allows users to control the deployment process, ensuring smooth and efficient delivery of software updates and changes. Users can monitor the status of deployments, pause or resume them as needed, and troubleshoot any issues that may arise during the deployment process.

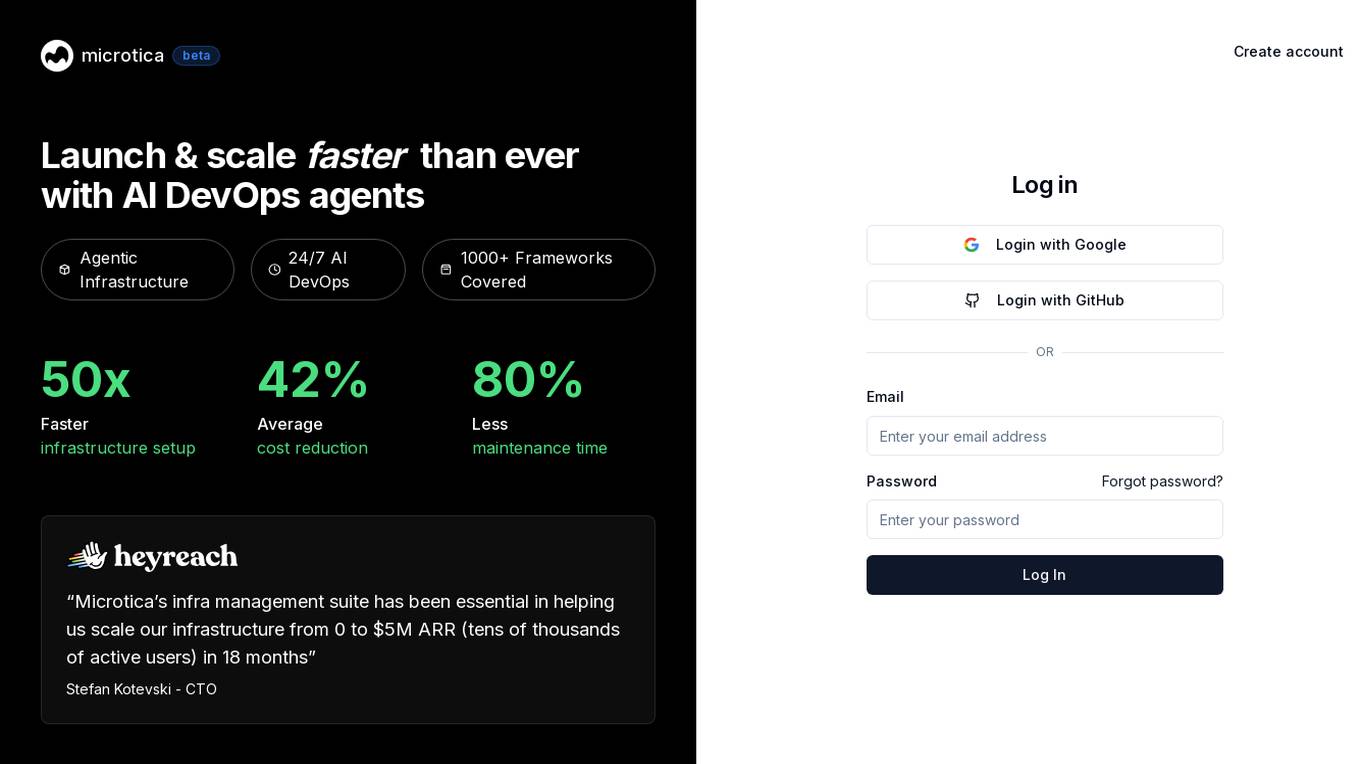

Microtica AI Deployment Tool

Microtica AI Deployment Tool is a platform that uses artificial intelligence to streamline the deployment of cloud infrastructure. Its features include AI-assisted incident investigation, designing and deploying cloud infrastructure from natural-language descriptions, and automated deployments. Users can analyze logs, metrics, and system state to identify root causes and solutions, and can generate infrastructure-as-code and architecture diagrams. The platform aims to simplify deployment and improve productivity by building AI into the workflow.

Render

Render is a platform that simplifies deploying and scaling web applications and services. Developers can launch applications quickly, manage their infrastructure, monitor performance, and keep their applications highly available, using a range of features for streamlining deployment and optimizing performance.

DeployMaster

DeployMaster is a platform for managing software deployments. It automates the delivery of software updates and changes to servers and applications, and provides version control, rollback options, and monitoring for tracking and managing each deployment. The platform aims to reduce errors and downtime and improve overall productivity.

Arthur

Arthur is an MLOps platform that simplifies the deployment, monitoring, and management of traditional and generative AI models, with a focus on scalability, security, and compliance for enterprise use. Its turnkey solutions help companies integrate the latest generative AI technologies into their operations and make informed, data-driven decisions. The platform offers open-source evaluation products, model-agnostic monitoring, deployment alongside leading data science tools, and model risk management, and it emphasizes collaboration and compliance with industry standards.

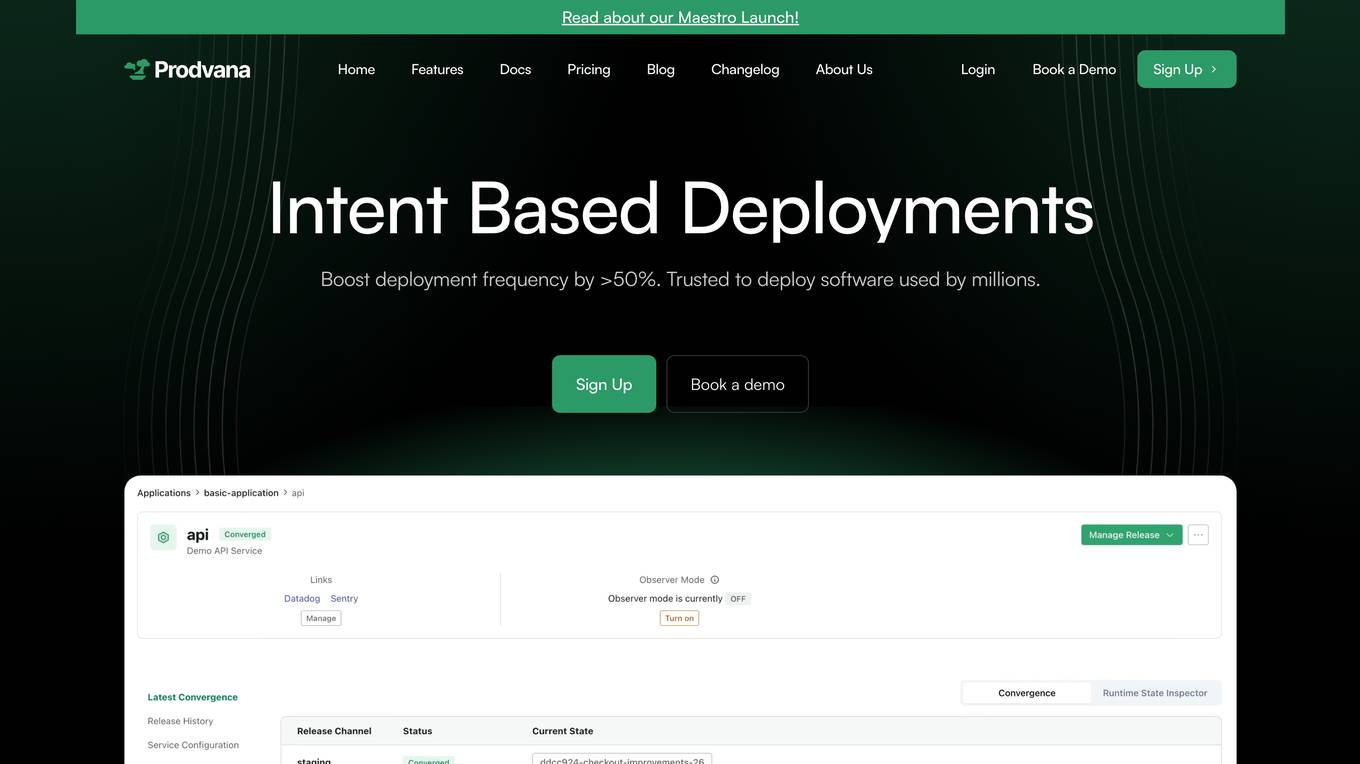

Prodvana

Prodvana is an intelligent deployment platform that helps businesses automate and streamline software deployment, with features aimed at improving the speed, reliability, and security of releases. It is cloud-based but works with any type of infrastructure, including on-premises, hybrid, and multi-cloud environments, and it is compatible with a wide range of DevOps tools and technologies. Its key features include:

- Intent-based deployments: businesses specify their deployment goals, and Prodvana automatically generates and executes the steps needed to reach them, saving time and effort.
- Guardrails for deployments: approvals, database validations, automatic deployment validation, and simple interfaces for adding custom guardrails help prevent errors and reduce the risk of outages.
- Frictionless DevEx: Prodvana tracks commits through the infrastructure, giving visibility beyond Docker images so developers can identify and resolve issues quickly and collaborate more easily.
- Intelligence with Clairvoyance: the Clairvoyance feature shows the likely impact of a deployment before it is executed, so teams can make informed decisions and avoid potential problems.
- Easy integrations: Prodvana integrates with a variety of DevOps tools and technologies, so it fits into existing workflows and processes.

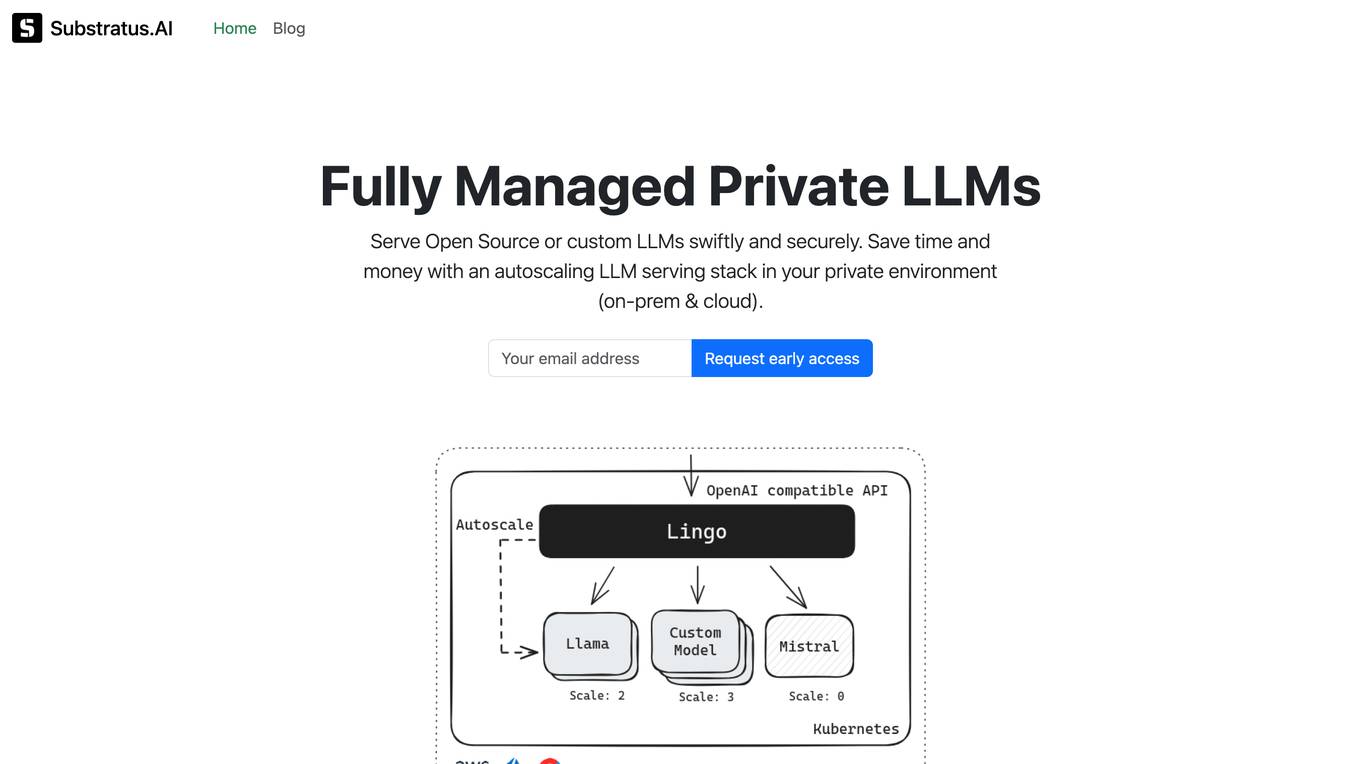

Substratus.AI

Substratus.AI is a fully managed platform for serving private LLMs (Llama and Mistral) in the user's own cloud account. Users keep control of their data, and the platform states that it can reduce OpenAI costs by up to 10x. With Substratus.AI, users can put LLMs into production in hours instead of weeks.

Modular

Modular is a fast, scalable generative AI inference platform with tools and resources for AI development and deployment: model development, deployment options, inference, research, and resources such as documentation, models, tutorials, and step-by-step guides. It runs on both GPUs and CPUs, scales intelligently to any cluster, and offers deployment options across several editions. Users can build agent workflows, use retrieval and controlled generation, develop chatbots, generate code, and improve resource utilization through batch processing.

OnOut

OnOut is a platform that offers a variety of tools for developers to deploy web3 apps on their own domain with ease. It provides deployment tools for blockchain apps, DEX, farming, DAO, cross-chain setups, IDOFactory, NFT staking, and AI applications like Chate and AiGram. The platform allows users to customize their apps, earn commissions, and manage various aspects of their projects without the need for coding skills. OnOut aims to simplify the process of launching and managing decentralized applications for both developers and non-technical users.

BotGPT

BotGPT is a custom AI chatbot assistant that serves website visitors 24/7. It offers a ChatGPT-based assistant trained on the user's own data: users upload files or crawl their website, start asking questions, and deploy the custom chatbot on their site within minutes. The platform provides a simple way to improve customer engagement with AI-powered chatbots.

Deploya

Deploya is an AI-powered platform for creating production-ready websites in seconds. Users describe their requirements, and Deploya generates a website, using AI models to optimize it for performance and user experience. The platform also offers automatic image selection, quick publishing, and flexible pricing options.

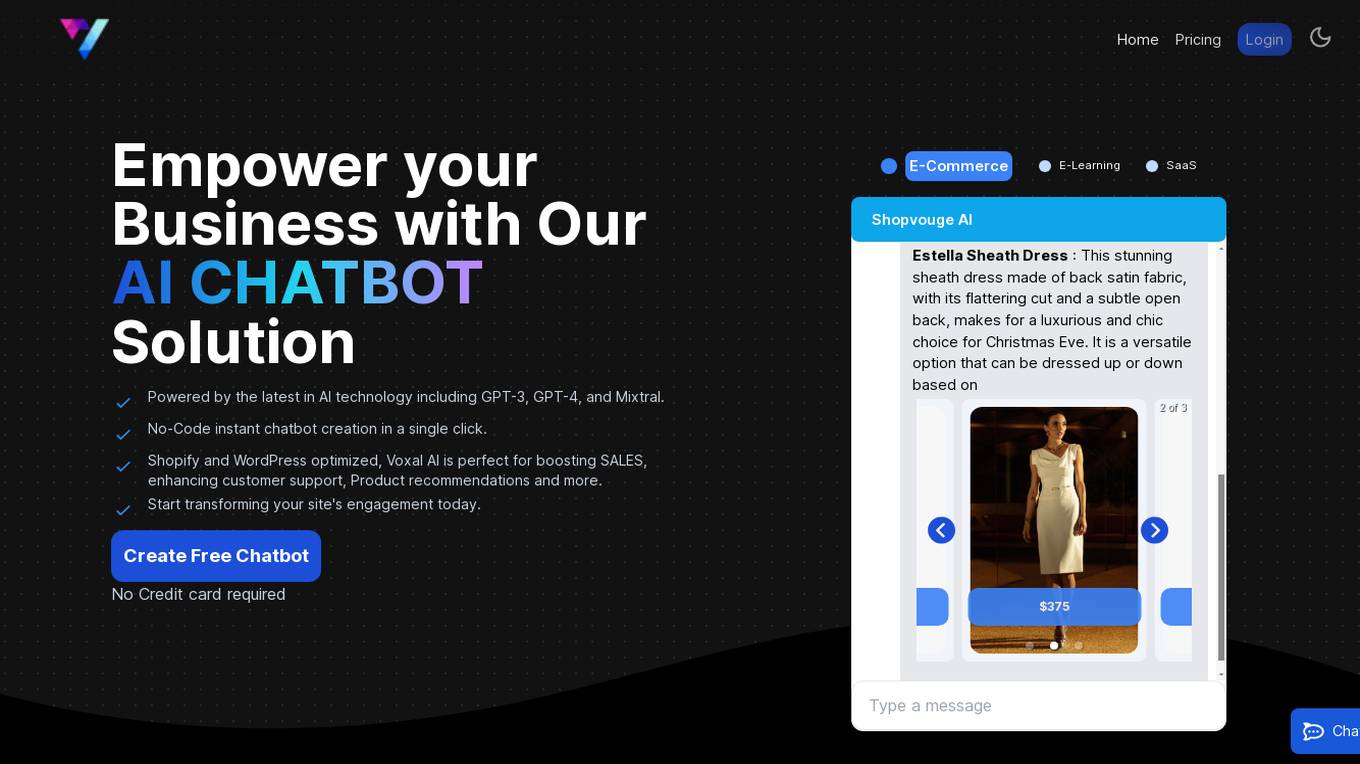

Voxal AI

Voxal AI is an AI-powered chatbot solution designed to enhance sales, customer support, and user engagement on websites. It offers a range of features including multiple AI models, A/B testing, cross-platform compatibility, multilingual support, advanced analytics, customization options, and multi-platform integration. Voxal AI is suitable for various industries such as e-commerce, e-learning, and SaaS, and can be used for tasks such as product recommendations, lead qualification, and automated customer support.

AIPage.dev

AIPage.dev is an AI-powered landing page generator that creates a landing page from a single prompt, replacing hours of coding and design work. Its features include AI-driven design, an intuitive editing interface, cloud deployment, rapid development, blog post creation with unlimited hosting for blog posts, lead collection, and integration with leading providers. The platform aims to help users turn ideas into working pages and showcase their projects and products.

ChatBuild AI

ChatBuild AI is a website for creating custom-trained AI chatbots for your own site in minutes, with no coding experience needed. Users upload files, and ChatBuild AI generates a chatbot trained on that data. It also offers multilingual support, so the chatbot can be used in any language.

Plansom

Plansom is an AI project management application that aims to double users' productivity by simplifying complex tasks and supporting operational excellence. Its AI-generated plans and smart algorithms help users create detailed business plans, prioritize tasks, reach goals quickly, and collaborate. Users can track team achievements in real time, roll out custom templates, and use ready-made strategy templates for various industries.

Genailia

Genailia is an AI platform offering translation, transcription, chatbots, LLM and GPT services, text-to-speech (TTS), automatic speech recognition (ASR), and social media insights. It combines generative AI, linguistic interfaces, accelerators, and more in a single platform, aiming to streamline a variety of tasks for businesses and individuals.

Microtica

Microtica is an AI-powered cloud delivery platform with a suite of DevOps tools for building, deploying, and optimizing infrastructure. Its features include an AI Incident Investigator, an AI Infrastructure Builder, simplified Kubernetes deployment, alert monitoring, pipeline automation, and cloud monitoring. The platform provides real-time insights, cost optimization suggestions, and guided deployments to help DevOps teams manage their cloud infrastructure.

1 Open Source AI Tool

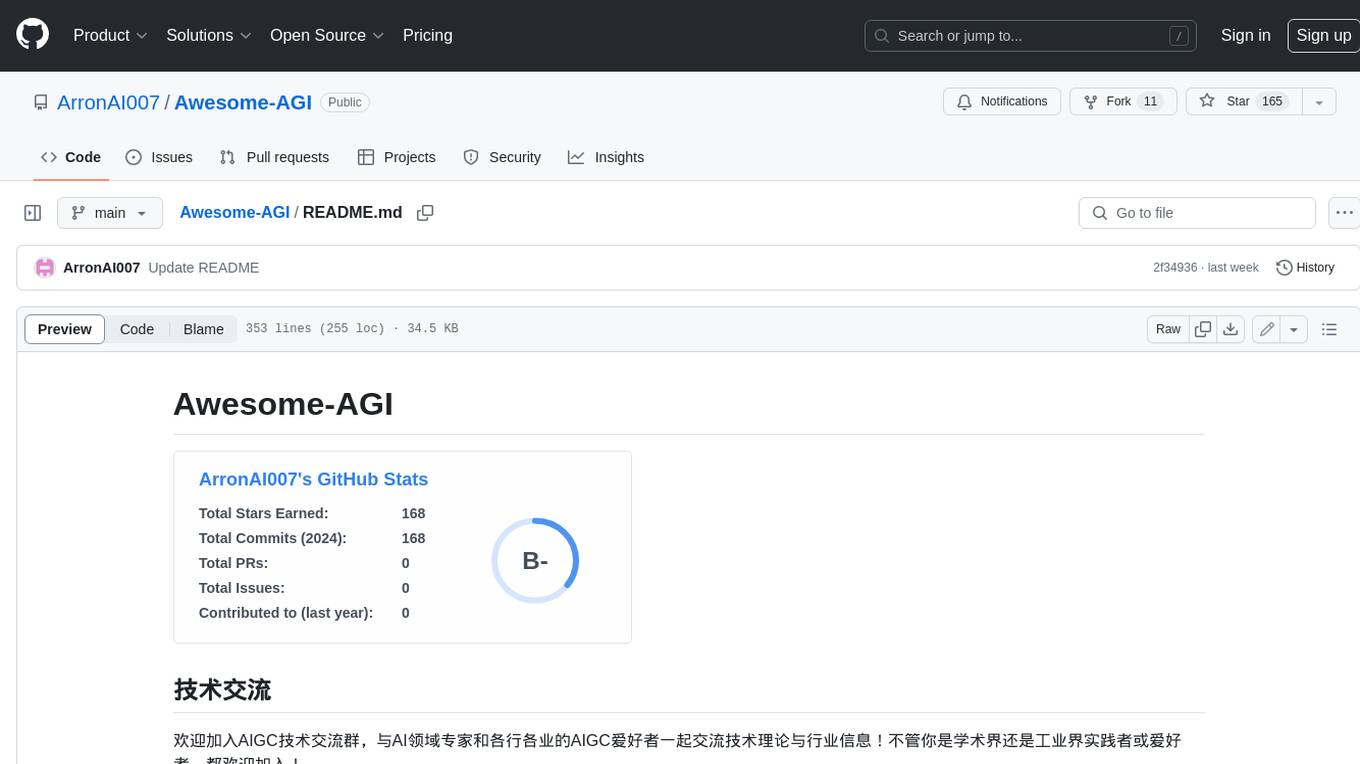

Awesome-AGI

Awesome-AGI is a curated list of resources related to Artificial General Intelligence (AGI), including models, pipelines, applications, and concepts. It provides a comprehensive overview of the current state of AGI research and development, covering various aspects such as model training, fine-tuning, deployment, and applications in different domains. The repository also includes resources on prompt engineering, RLHF, LLM vocabulary expansion, long text generation, hallucination mitigation, controllability and safety, and text detection. It serves as a valuable resource for researchers, practitioners, and anyone interested in the field of AGI.

20 OpenAI GPTs

Continuous Integration/Deployment Advisor

Ensures efficient software updates through continuous integration and deployment.

Content Strategy Advisor

Drives content creation and deployment to enhance brand visibility and engagement.

API Architect

Creates APIs from idea to deployment, with beginner-friendly instructions, a structured layout, recommendations, and more.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Mobile App Builder

An Android app developer that guides you from concept to deployment, with UX/UI expertise.

Rust on ESP32 Expert

Expert in Rust coding for ESP32, offering detailed programming and deployment guidance.

Tech Mentor

Expert software architect with experience in the design, construction, development, testing, and deployment of web, mobile, and standalone software architectures.

AppCrafty 🧰

Hello, I'm AppCrafty, your AI coding companion tailored for the creative and dynamic world of startups. I'm here to simplify the journey from concept to deployment across iOS, Android, and web platforms. Let's create something amazing together!

Contract Digitizer

Transforms regular contracts into digitized smart contracts. Responses include a diagram of the contract workflow and a link to easily auditable smart-contract source code ready for deployment.

SalesforceDevops.net

Guides users on Salesforce DevOps products and services in the voice of Vernon Keenan from SalesforceDevops.net.