Best AI tools for Deploy On Remote Machine

20 - AI tool Sites

fsck.ai

fsck.ai is an AI-powered software creation kit designed to help developers ship high-quality software faster. It offers AI tools that accelerate code reviews and identify potential problems in code. It is similar to Copilot, but fully open-source, and it can run locally or on a remote machine. Users can sign up for early access to bring AI into their development workflow.

Picovoice

Picovoice is an on-device Voice AI and local LLM platform designed for enterprises. It offers a range of voice AI and LLM solutions, including speech-to-text, noise suppression, speaker recognition, speech-to-index, wake word detection, and more. Picovoice empowers developers to build virtual assistants and AI-powered products with compliance, reliability, and scalability in mind. The platform allows enterprises to process data locally without relying on third-party remote servers, ensuring data privacy and security. With a focus on cutting-edge AI technology, Picovoice enables users to stay ahead of the curve and adapt quickly to changing customer needs.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

Cirrascale Cloud Services

Cirrascale Cloud Services is an AI tool that offers cloud solutions for Artificial Intelligence applications. The platform provides a range of cloud services and products tailored for AI innovation, including NVIDIA GPU Cloud, AMD Instinct Series Cloud, Qualcomm Cloud, Graphcore, Cerebras, and SambaNova. Cirrascale's AI Innovation Cloud enables users to test and deploy on leading AI accelerators in one cloud, democratizing AI by delivering high-performance AI compute and scalable deep learning solutions. The platform also offers professional and managed services, tailored multi-GPU server options, and high-throughput storage and networking solutions to accelerate development, training, and inference workloads.

Leapwork

Leapwork is an AI-powered test automation platform that enables users to build, manage, maintain, and analyze complex data-driven testing across various applications, including AI apps. It offers a democratized testing approach with an intuitive visual interface, composable architecture, and generative AI capabilities. Leapwork supports testing of diverse application types, including web, mobile, and desktop applications as well as APIs. It allows for scalable testing with reusable test flows that adapt to changes in the application under test. Leapwork can be deployed in the cloud or on-premises, giving users full control.

Caffe

Caffe is a deep learning framework developed by Berkeley AI Research (BAIR) and community contributors. It is designed for speed, modularity, and expressiveness, allowing users to define models and optimization through configuration without hard-coding. Caffe supports both CPU and GPU training, making it suitable for research experiments and industry deployment. The framework is extensible, actively developed, and tracks the state-of-the-art in code and models. Caffe is widely used in academic research, startup prototypes, and large-scale industrial applications in vision, speech, and multimedia.

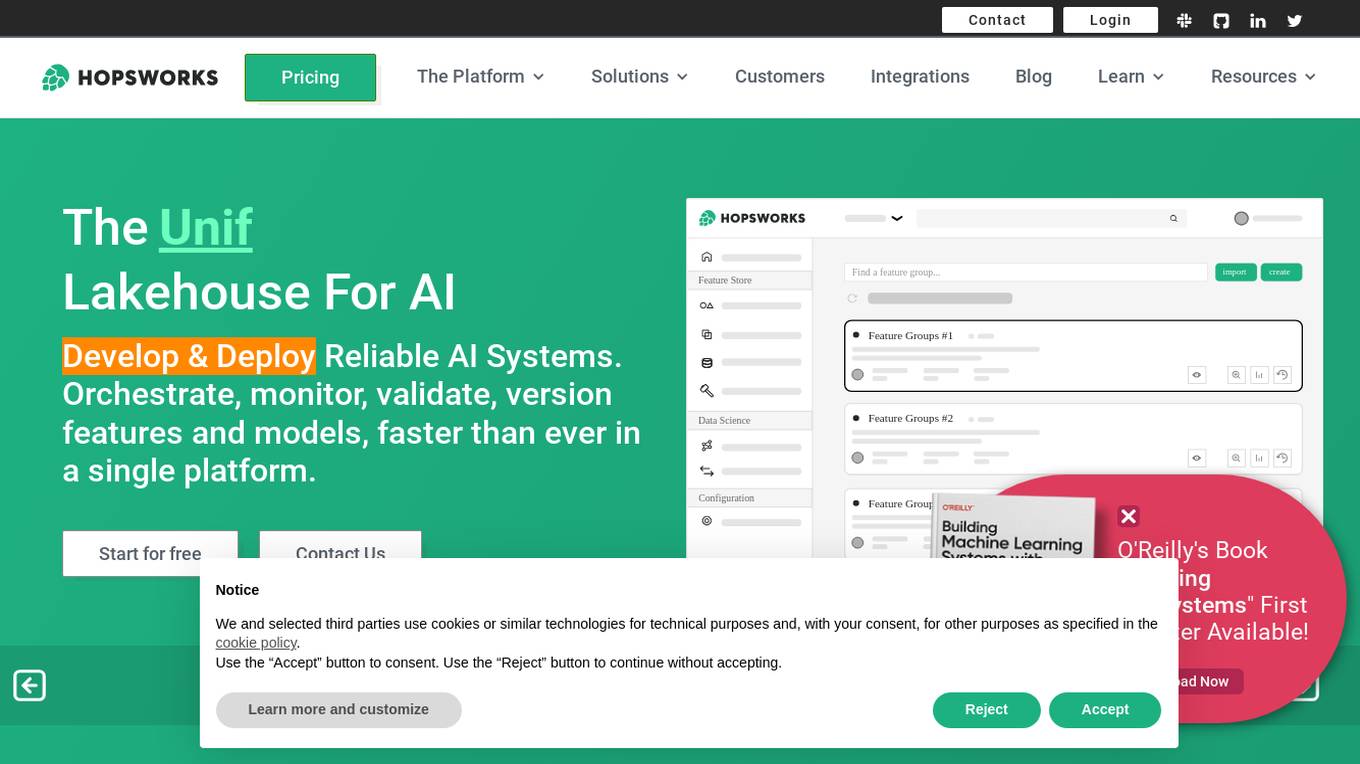

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

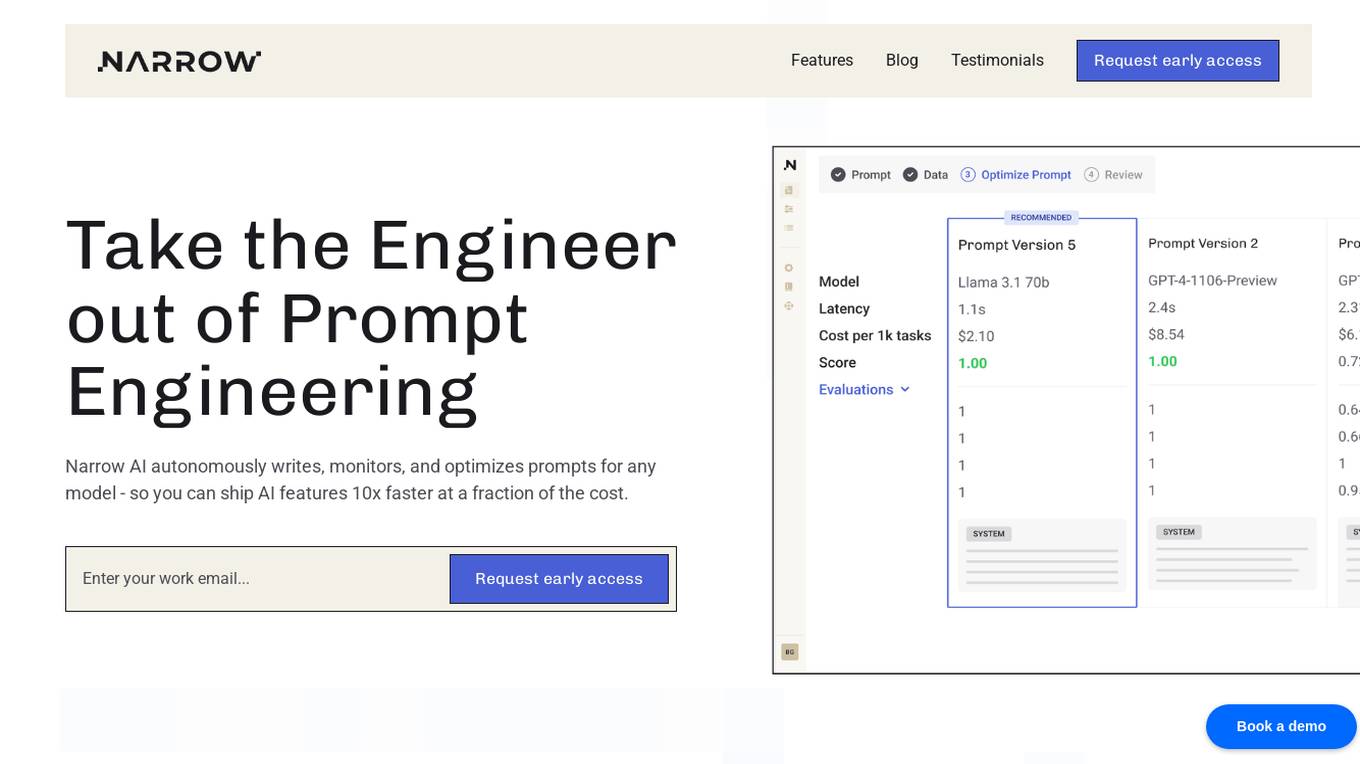

Narrow AI

Narrow AI is an AI application that autonomously writes, monitors, and optimizes prompts for any model, enabling users to ship AI features 10x faster at a fraction of the cost. It streamlines the workflow by allowing users to test new models in minutes, compare prompt performance, and deploy on the optimal model for their use case. Narrow AI helps users maximize efficiency by generating expert-level prompts, adapting prompts to new models, and optimizing prompts for quality, cost, and speed.

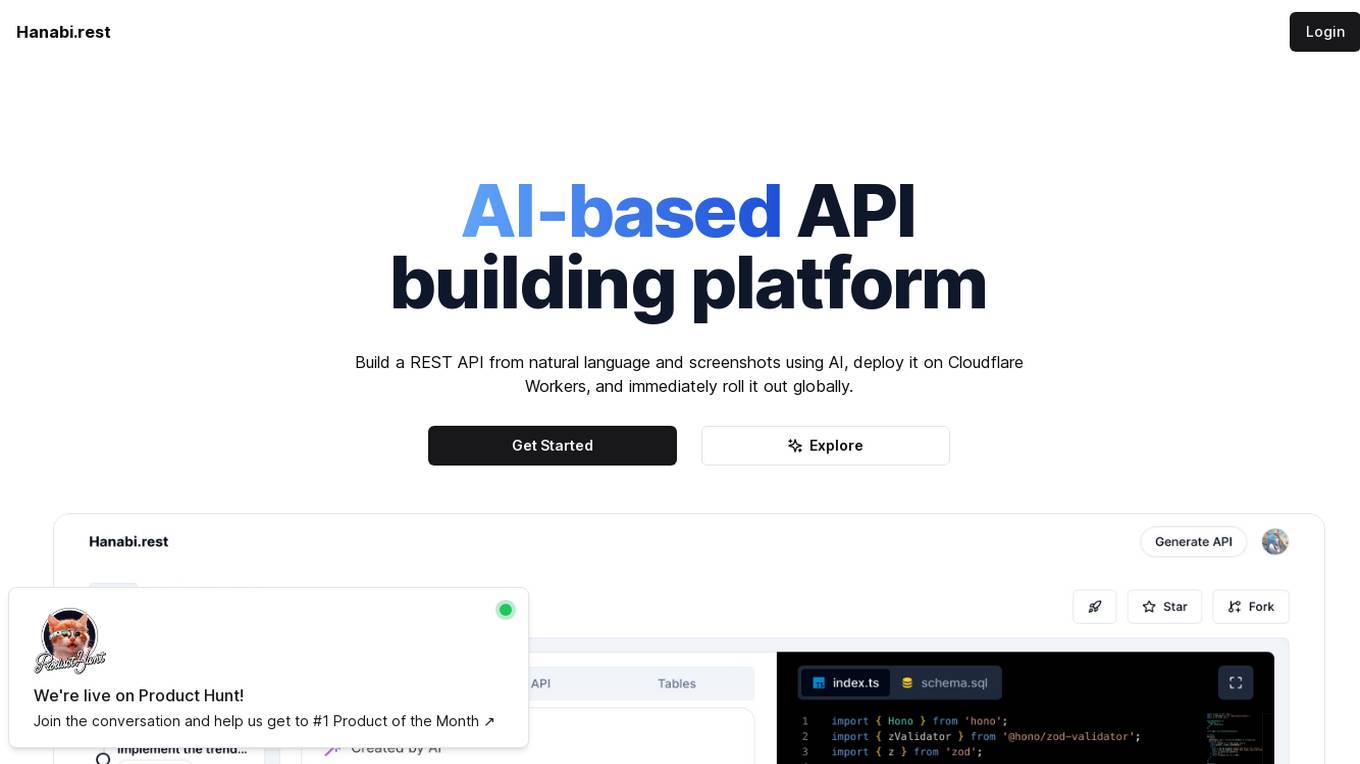

Hanabi.rest

Hanabi.rest is an AI-based API building platform that allows users to create REST APIs from natural language and screenshots. Users can deploy the APIs on Cloudflare Workers and roll them out globally. The platform offers a live editor for testing database access and API endpoints, generates code compatible with various runtimes, and provides features like sharing APIs via URL, npm package integration, and CLI dump functionality. Hanabi.rest simplifies API design and deployment by leveraging natural language processing, image recognition, and v0.dev components.

Quidget

Quidget is a no-code AI Agent platform designed to build, train, and deploy AI assistants for websites, apps, or as standalone chatbots. It offers features such as answering customer support questions, engaging leads for sales, automating bookings, orders, and inquiries, as well as assisting with HR, finance, and operations. Quidget AI Agents are trained virtual assistants that go beyond basic chatbots by understanding, learning, and intelligently assisting customers. The platform allows customization of AI behavior, deployment on multiple channels, and integration with various tools. Quidget supports 45+ languages and ensures data security with end-to-end encryption and GDPR compliance.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.

Tracecat

Tracecat is an open-source security automation platform that helps you automate security alerts, build AI-assisted workflows, orchestrate alerts, and close cases fast. It is an alternative to Tines and Splunk SOAR that is built for builders and free to experiment with. You can deploy Tracecat on your own infrastructure or use Tracecat Cloud with no maintenance overhead. Tracecat is Apache-2.0 licensed, with an open vision, open community, and open development, so you can have your say in the future of security automation. Tracecat is no-code first but also supports code: you can build automations fast with the click-and-drag workflow builder, which combines pre-built actions (API calls, webhooks, data transforms, AI tasks, and more) into SecOps workflows, and customize them in Python without vendor lock-in. A built-in case management system lets you open cases directly from workflows and track and manage security incidents in one platform.

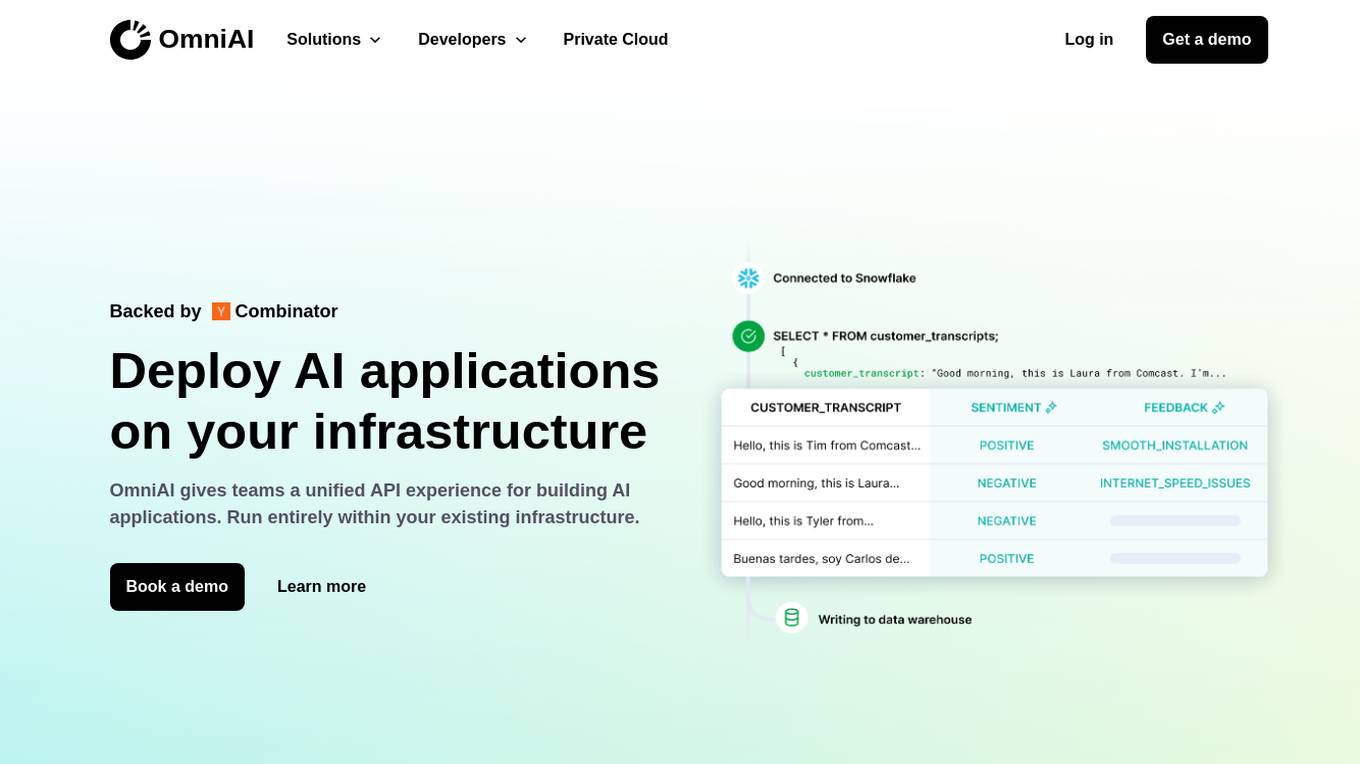

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With models like Llama 3, Claude 3, Mistral Large, and AWS Titan, OmniAI supports tasks such as natural language understanding, generation, safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

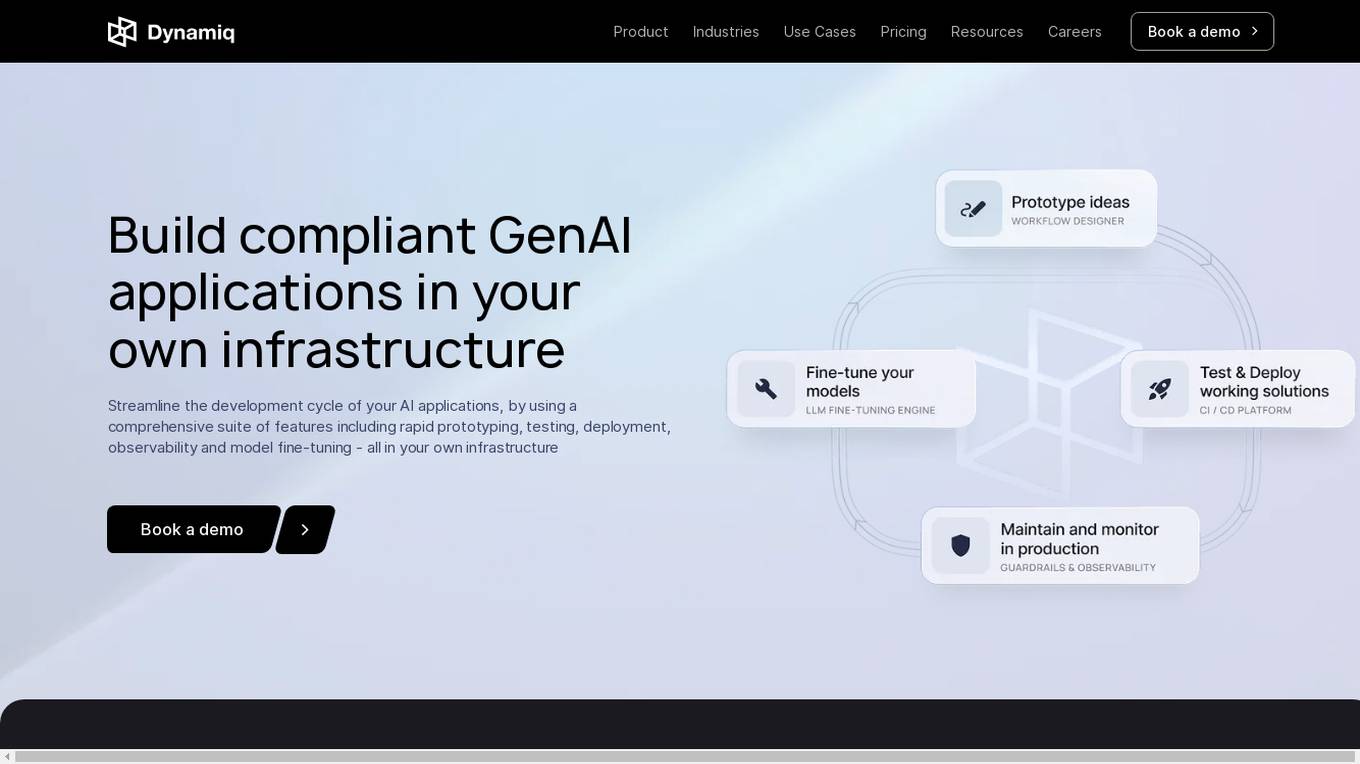

Dynamiq

Dynamiq is an operating platform for GenAI applications that enables users to build compliant GenAI applications in their own infrastructure. It offers a comprehensive suite of features including rapid prototyping, testing, deployment, observability, and model fine-tuning. The platform helps streamline the development cycle of AI applications and provides tools for workflow automations, knowledge base management, and collaboration. Dynamiq is designed to optimize productivity, reduce AI adoption costs, and empower organizations to establish AI ahead of schedule.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, an AI chat app, a code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

Telechat

Telechat is a platform that allows users to create and deploy custom chatbots on Telegram. With Telechat, users can upload their own data, fine-tune the knowledge base, and customize the chatbot's personality. Telechat also provides a range of features to help users connect their chatbots to Telegram and other channels. Telechat is suitable for a variety of use cases, including customer support, internal knowledge bases, and community engagement.

OnOut

OnOut is a platform that offers a variety of tools for developers to deploy web3 apps on their own domain with ease. It provides deployment tools for blockchain apps, DEX, farming, DAO, cross-chain setups, IDOFactory, NFT staking, and AI applications like Chate and AiGram. The platform allows users to customize their apps, earn commissions, and manage various aspects of their projects without the need for coding skills. OnOut aims to simplify the process of launching and managing decentralized applications for both developers and non-technical users.

Code Companion AI

Code Companion AI is a desktop application powered by OpenAI's ChatGPT, designed to aid developers with a wide range of coding tasks. Its chatbot interface streamlines project management: it can execute shell commands, generate code, handle database queries, and review your existing code. Tasks are as simple as sending a message. You could ask it to create a .gitignore file or deploy an app on AWS, and CodeCompanion.AI does it for you. Download CodeCompanion.AI from the website to use these features across various programming languages and platforms.

Memorly.AI

Memorly.AI is an AI tool designed to help businesses grow by using AI agents to manage sales and support operations. The tool offers personalized engagement and optimized processes through autonomous workflows, efficient customer interactions across multiple channels, and 24/7 human-like interactions. With Memorly.AI, businesses can handle high volumes without increasing costs, leading to improved conversion rates, customer engagement, and cost reduction. The tool is pay-per-use and allows businesses to deploy AI agents on various channels like WhatsApp, websites, VoIP, and more.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

0 - Open Source AI Tools

20 - OpenAI Gpts

Rust on ESP32 Expert

Expert in Rust coding for ESP32, offering detailed programming and deployment guidance.

React on Rails Pro

Expert in Rails & React, focusing on high-standard software development.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

XRPL GPT

Build on the XRP Ledger with assistance from this GPT trained on extensive documentation and code samples.

Javascript Cloud services coding assistant

Expert on Google Cloud services with JavaScript.

Apple CoreML Complete Code Expert

A detailed expert trained on all 3,018 pages of Apple CoreML, offering complete coding solutions. Saving time? https://www.buymeacoffee.com/parkerrex ☕️❤️

Auto Custom Actions GPT

This GPT helps you with one single task: generating valid OpenAI Schemas for Custom Actions in GPTs.