Best AI tools for< Deploy Models Safely >

20 - AI tool Sites

SkyDeck AI

SkyDeck AI is a secure business-first AI productivity platform that offers solutions for teams and individuals. It provides a range of AI-driven tools and services to enhance collaboration, development, education, and entrepreneurship. The platform focuses on security, customization, and automation to empower users in deploying AI across their businesses with ease and efficiency.

Dust

Dust is a customizable and secure AI assistant platform that helps businesses amplify their team's potential. It allows users to deploy the best Large Language Models to their company, connect Dust to their team's data, and empower their teams with assistants tailored to their specific needs. Dust is exceptionally modular and adaptable, tailoring to unique requirements and continuously evolving to meet changing needs. It supports multiple sources of data and models, including proprietary and open-source models from OpenAI, Anthropic, and Mistral. Dust also helps businesses identify their most creative and driven team members and share their experience with AI throughout the company. It promotes collaboration with shared conversations, @mentions in discussions, and Slackbot integration. Dust prioritizes security and data privacy, ensuring that data remains private and that enterprise-grade security measures are in place to manage data access policies.

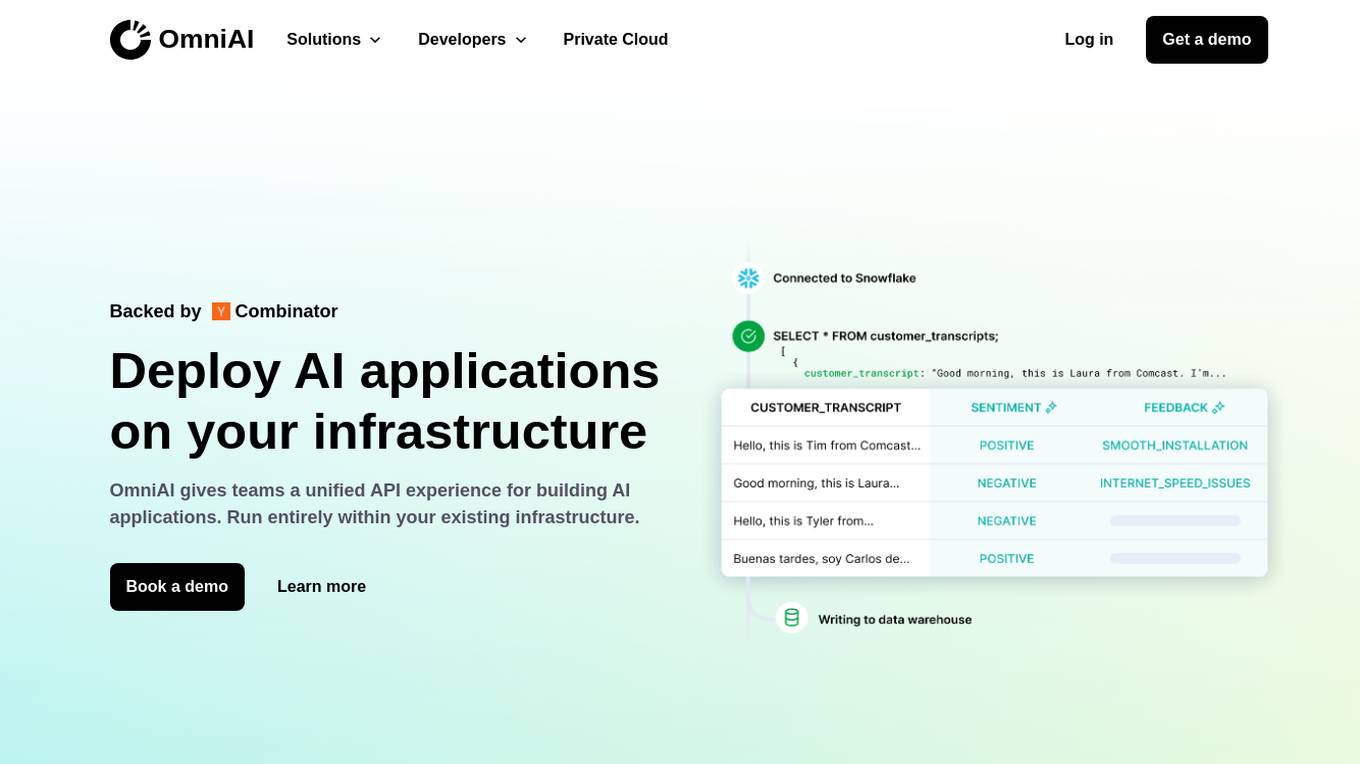

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With tools like Llama 3, Claude 3, Mistral Large, and AWS Titan, OmniAI excels in tasks such as natural language understanding, generation, safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

Azure AI Platform

Azure AI Platform by Microsoft offers a comprehensive suite of artificial intelligence services and tools for developers and businesses. It provides a unified platform for building, training, and deploying AI models, as well as integrating AI capabilities into applications. With a focus on generative AI, multimodal models, and large language models, Azure AI empowers users to create innovative AI-driven solutions across various industries. The platform also emphasizes content safety, scalability, and agility in managing AI projects, making it a valuable resource for organizations looking to leverage AI technologies.

Aify.co

Aify.co is a website that covers all things artificial intelligence. It provides news, analysis, and opinion on the latest developments in AI, as well as resources for developers and users. The site is written by a team of experts in AI, and it is committed to providing accurate and up-to-date information on the field.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework agnostic support. With PoplarML, users can seamlessly deploy ML models using a CLI tool to a fleet of GPUs and invoke their models through a REST API endpoint. The platform supports Tensorflow, Pytorch, and JAX models.

Outfit AI

Outfit AI is an AI tool that enables users to design and deploy AI models or workflows as user-ready applications in minutes. It allows users to create custom user interfaces for their AI-powered apps by dropping in an API key from Replicate or Hugging Face. With Outfit AI, users can have creative control over the design of their apps, build complex workflows without any code, and optimize prompts for better performance. The tool aims to help users launch their models faster, save time, and enhance their AI applications with a built-in product copilot.

Groq

Groq is a fast AI inference tool that offers GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It provides instant intelligence for openly-available models like Llama 3.1 and is known for its speed and compatibility with other AI providers. Groq powers leading openly-available AI models and has gained recognition in the AI chip industry. The tool has received significant funding and valuation, positioning itself as a strong challenger to established players like Nvidia.

Baseten

Baseten is a machine learning infrastructure that provides a unified platform for data scientists and engineers to build, train, and deploy machine learning models. It offers a range of features to simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can be used to accelerate the development of ML applications.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open source and commercial tools, and infrastructure, allowing users to accelerate and scale AI impact. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

Arcee AI

Arcee AI is a platform that offers a cost-effective, secure, end-to-end solution for building and deploying Small Language Models (SLMs). It allows users to merge and train custom language models by leveraging open source models and their own data. The platform is known for its Model Merging technique, which combines the power of pre-trained Large Language Models (LLMs) with user-specific data to create high-performing models across various industries.

Together AI

Together AI is an AI Acceleration Cloud platform that offers fast inference, fine-tuning, and training services. It provides self-service NVIDIA GPUs, model deployment on custom hardware, AI chat app, code execution sandbox, and tools to find the right model for specific use cases. The platform also includes a model library with open-source models, documentation for developers, and resources for advancing open-source AI. Together AI enables users to leverage pre-trained models, fine-tune them, or build custom models from scratch, catering to various generative AI needs.

FuriosaAI

FuriosaAI is an AI application that offers Hardware RNGD for LLM and Multimodality, as well as WARBOY for Computer Vision. It provides a comprehensive developer experience through the Furiosa SDK, Model Zoo, and Dev Support. The application focuses on efficient AI inference, high-performance LLM and multimodal deployment capabilities, and sustainable mass adoption of AI. FuriosaAI features the Tensor Contraction Processor architecture, software for streamlined LLM deployment, and a robust ecosystem support. It aims to deliver powerful and efficient deep learning acceleration while ensuring future-proof programmability and efficiency.

Striveworks

Striveworks is an AI application that offers a Machine Learning Operations Platform designed to help organizations build, deploy, maintain, monitor, and audit machine learning models efficiently. It provides features such as rapid model deployment, data and model auditability, low-code interface, flexible deployment options, and operationalizing AI data science with real returns. Striveworks aims to accelerate the ML lifecycle, save time and money in model creation, and enable non-experts to leverage AI for data-driven decisions.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Qualcomm AI Hub

Qualcomm AI Hub is a platform that allows users to run AI models on Snapdragon® 8 Elite devices. It provides a collaborative ecosystem for model makers, cloud providers, runtime, and SDK partners to deploy on-device AI solutions quickly and efficiently. Users can bring their own models, optimize for deployment, and access a variety of AI services and resources. The platform caters to various industries such as mobile, automotive, and IoT, offering a range of models and services for edge computing.

ClearML

ClearML is an open-source, end-to-end platform for continuous machine learning (ML). It provides a unified platform for data management, experiment tracking, model training, deployment, and monitoring. ClearML is designed to make it easy for teams to collaborate on ML projects and to ensure that models are deployed and maintained in a reliable and scalable way.

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features like Feature Store, LLMOps, and model monitoring, JFrog ML empowers AI teams to collaborate efficiently and optimize AI & ML models in production.

1 - Open Source AI Tools

fortuna

Fortuna is a library for uncertainty quantification that enables users to estimate predictive uncertainty, assess model reliability, trigger human intervention, and deploy models safely. It provides calibration and conformal methods for pre-trained models in any framework, supports Bayesian inference methods for deep learning models written in Flax, and is designed to be intuitive and highly configurable. Users can run benchmarks and bring uncertainty to production systems with ease.

20 - OpenAI Gpts

Instructor GCP ML

Formador para la certificación de ML Engineer en GCP, con respuestas y explicaciones detalladas.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

TensorFlow Oracle

I'm an expert in TensorFlow, providing detailed, accurate guidance for all skill levels.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.

![[latest] FastAPI GPT Screenshot](/screenshots_gpts/g-BhYCAfVXk.jpg)

[latest] FastAPI GPT

Up-to-date FastAPI coding assistant with knowledge of the latest version. Part of the [latest] GPTs family.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

Pytorch Trainer GPT

Your purpose is to create the pytorch code to train language models using pytorch