Best AI tools for Deploy Functions

20 - AI tool Sites

Modal

Modal is a high-performance cloud platform designed for developers and for AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI & ML workloads as reliable and secure microservices. It offers AI model serving and orchestration with a focus on simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a unique workflow management system.

Lyzr AI

Lyzr AI is a full-stack agent framework designed to build GenAI applications faster. It offers a range of AI agents for various tasks such as chatbots, knowledge search, summarization, content generation, and data analysis. The platform provides features like memory management, human-in-the-loop interaction, toxicity control, reinforcement learning, and custom RAG prompts. Lyzr AI ensures data privacy by processing data locally on the user's own cloud servers. Enterprises and developers can easily configure, deploy, and manage AI agents using Lyzr's platform.

OAK

OAK is an open-source platform for building and deploying custom AI agents quickly and easily. It offers a modular design, powerful plugins, and seamless integration with various AI models. OAK is scalable, flexible, and developer-friendly, allowing users to create AI agents in minutes without hassle.

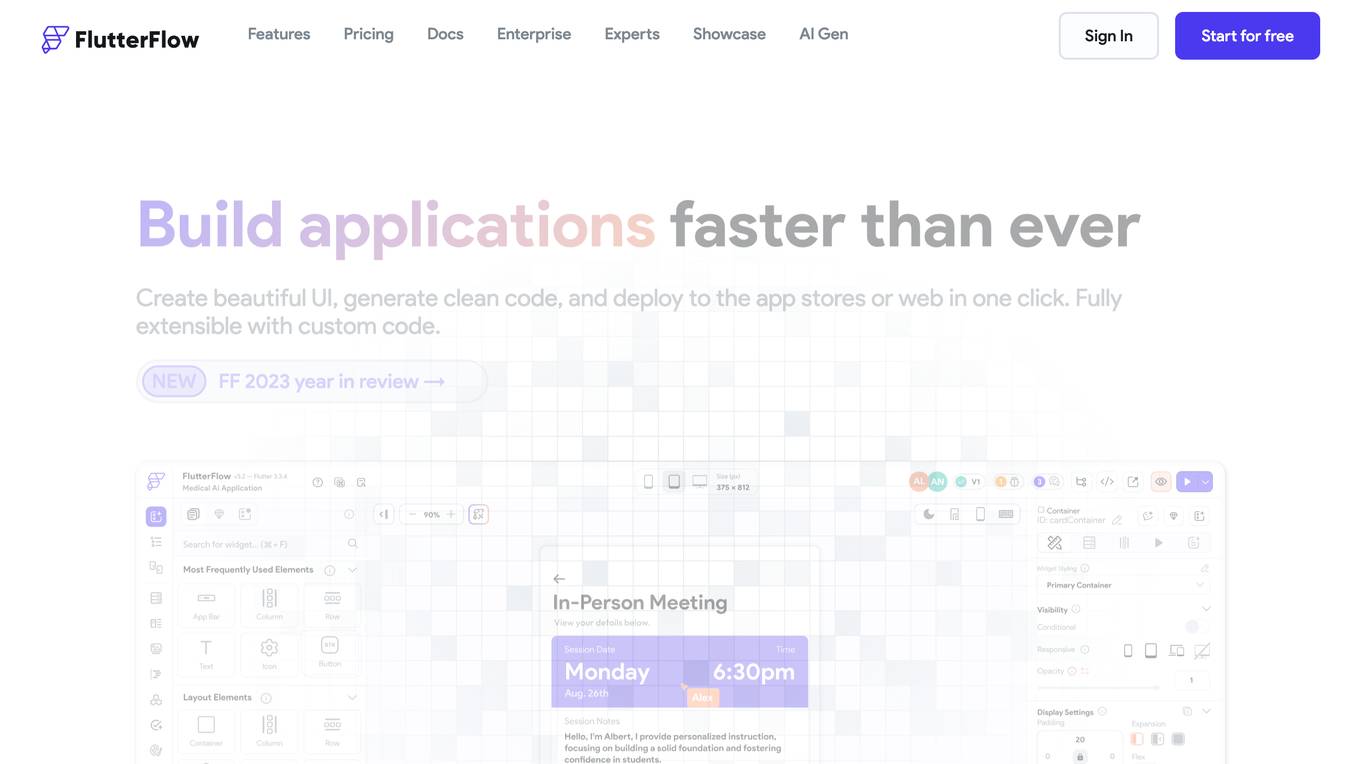

FlutterFlow

FlutterFlow is a low-code development platform that enables users to build cross-platform mobile and web applications with little or no hand-written code. It provides a visual interface for designing user interfaces, connecting data, and implementing complex logic. FlutterFlow is trusted by users at leading companies around the world and has been used to build a wide range of applications, from simple prototypes to complex enterprise solutions.

Dreamlab

Dreamlab is a multiplayer game creation platform that allows users to build and deploy great 2D multiplayer games quickly and effortlessly. It features an in-browser collaborative editor, easy integration of multiplayer functionality, and AI assistance for code generation. Dreamlab is designed for indie game developers, game jam participants, Discord server activities, and rapid prototyping. Users can start building their games instantly without the need for downloads or installations. The platform offers simple and transparent pricing with a free plan and a pro plan for serious game developers.

Hanabi.rest

Hanabi.rest is an AI-based API building platform that allows users to create REST APIs from natural language and screenshots using AI technology. Users can deploy the APIs on Cloudflare Workers and roll them out globally. The platform offers a live editor for testing database access and API endpoints, generates code compatible with various runtimes, and provides features like sharing APIs via URL, npm package integration, and CLI dump functionality. Hanabi.rest simplifies API design and deployment by leveraging natural language processing, image recognition, and v0.dev components.

SupBot

SupBot is an AI-powered support solution that enables businesses to handle customer support instantly. It offers the ability to train and deploy AI support bots with ease, allowing for seamless integration into any website. With a user-friendly interface, SupBot simplifies the process of setting up and customizing support bots to meet specific requirements. The platform is designed to enhance customer service efficiency and streamline communication processes through the use of AI technology.

NOCODING AI

NOCODING AI is an innovative AI tool that allows users to create advanced applications without the need for coding skills. The platform offers a user-friendly interface with drag-and-drop functionality, making it easy for individuals and businesses to develop custom solutions. With NOCODING AI, users can build chatbots, automate workflows, analyze data, and more, all without writing a single line of code. The tool leverages machine learning algorithms to streamline the development process and empower users to bring their ideas to life quickly and efficiently.

Leadzen.ai

Leadzen.ai is an AI-driven lead generation tool that empowers businesses to identify and engage decision-makers effectively. The platform offers advanced AI features such as bulk search functionality, intelligent filters, and real-time insights to enhance B2B lead acquisition. With Leadzen.ai, users can access vital contact details, personalize outreach, and maximize results in lead generation.

Magick

Magick is a cutting-edge Artificial Intelligence Development Environment (AIDE) that empowers users to rapidly prototype and deploy advanced AI agents and applications without coding. It provides a full-stack solution for building, deploying, maintaining, and scaling AI creations. Magick's open-source, platform-agnostic nature allows for full control and flexibility, making it suitable for users of all skill levels. With its visual node-graph editors, users can code visually and create intuitively. Magick also offers powerful document processing capabilities, enabling effortless embedding and access to complex data. Its real-time and event-driven agents respond to events right in the AIDE, ensuring prompt and efficient handling of tasks. Magick's scalable deployment feature allows agents to handle any number of users, making it suitable for large-scale applications. Additionally, its multi-platform integrations with tools like Discord, Unreal Blueprints, and Google AI provide seamless connectivity and enhanced functionality.

Helix AI

Helix AI is a private GenAI platform that enables users to build AI applications using open source models. The platform offers tools for RAG (Retrieval-Augmented Generation) and fine-tuning, allowing deployment on-premises or in a Virtual Private Cloud (VPC). Users can access curated models, utilize Helix API tools to connect internal and external APIs, embed Helix Assistants into websites/apps for chatbot functionality, write AI application logic in natural language, and benefit from the innovative RAG system for Q&A generation. Additionally, users can fine-tune models for domain-specific needs and deploy securely on Kubernetes or Docker in any cloud environment. Helix Cloud offers free and premium tiers with GPU priority, catering to individuals, students, educators, and companies of varying sizes.

Sendbird

Sendbird is a communication API platform that offers solutions for chat, AI chatbots, SMS, WhatsApp, KakaoTalk, voice, and video. It provides tools for live chat, video, and omnichannel business messaging to enhance customer engagement both within and outside of applications. With a focus on enterprise-level scale, security, and compliance, Sendbird's platform is trusted by over 4,000 apps globally. The platform offers intuitive APIs, sample apps, tutorials, and free trials to help developers easily integrate communication features into their applications.

Create Next App Chat With the Algorithm

The website 'Create Next App Chat With the Algorithm' is an AI tool that allows users to generate chat applications using algorithms. It is a work in progress with the latest algorithm code updated on April 14, 2023. Users can leverage this tool to quickly create chat applications with the help of advanced algorithms.

Wordware

Wordware is an AI toolkit that empowers cross-functional teams to build reliable high-quality agents through rapid iteration. It combines the best aspects of software with the power of natural language, freeing users from traditional no-code tool constraints. With advanced technical capabilities, multiple LLM providers, one-click API deployment, and multimodal support, Wordware offers a seamless experience for AI app development and deployment.

ConsoleX

ConsoleX is an AI tool for developing, testing, and deploying AI models. With cutting-edge features and an intuitive interface, ConsoleX is designed to cater to the needs of both beginners and experts in the AI domain. Whether you are a data scientist, researcher, or developer, ConsoleX empowers you to explore the full potential of AI technology and drive innovation in your projects.

SmythOS

SmythOS is an AI-powered platform that allows users to create and deploy AI agents in minutes. With a user-friendly interface and drag-and-drop functionality, SmythOS enables users to build custom agents for various tasks without the need for manual coding. The platform offers pre-built agent templates, universal integration with AI models and APIs, and the flexibility to deploy agents locally or to the cloud. SmythOS is designed to streamline workflow automation, enhance productivity, and provide a seamless experience for developers and businesses looking to leverage AI technology.

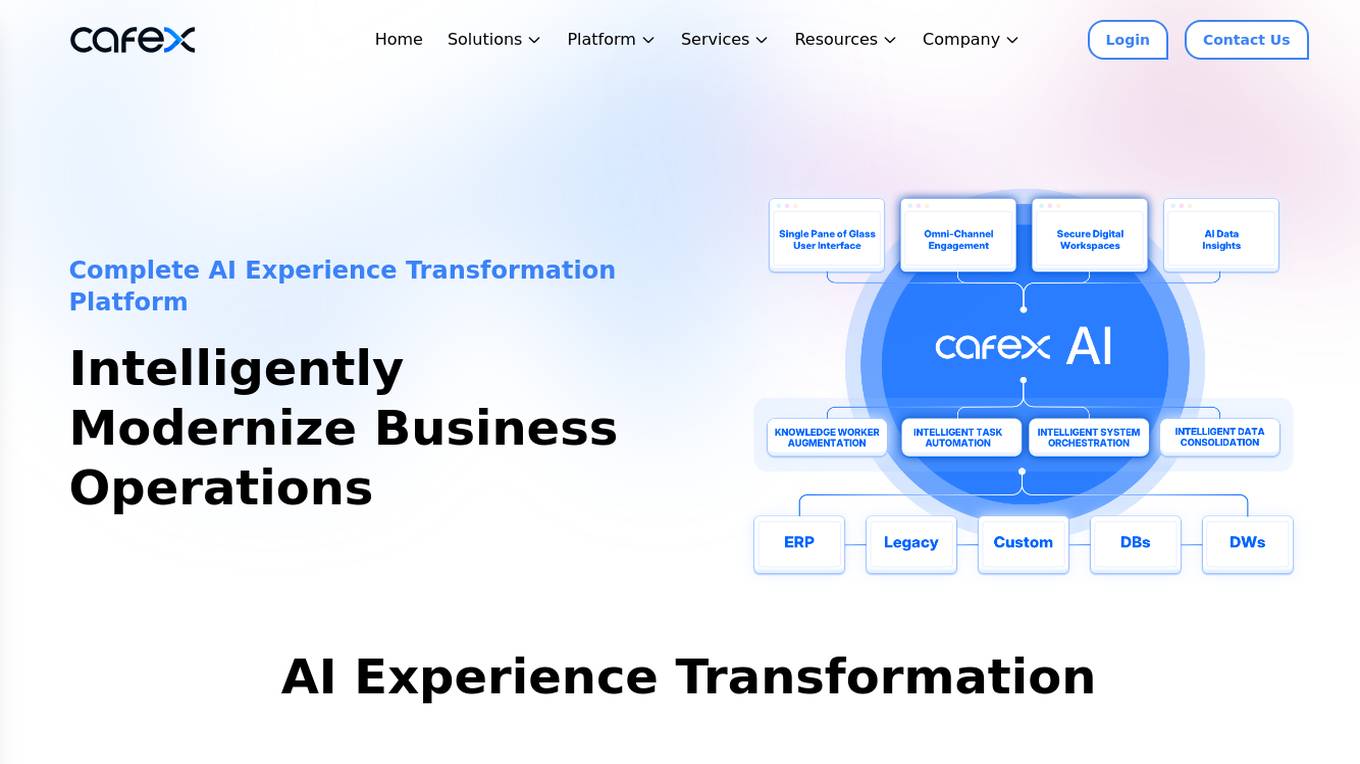

CafeX

CafeX is an AI-powered platform that offers AI Experience Transformation solutions for businesses. It helps in modernizing business operations, integrating AI and automation to simplify complex challenges, and enhancing customer interactions with plug-and-play solutions. CafeX enables organizations in regulated industries to optimize digital engagement with employees, customers, and partners by leveraging existing investments and unifying fragmented solutions. The platform provides unified intelligence, seamless integration, developer empowerment, efficient deployment, and audit & compliance functionalities.

ThinkRoot

ThinkRoot is an AI Compiler that empowers users to transform their ideas into fully functional applications within minutes. By leveraging advanced artificial intelligence algorithms, ThinkRoot streamlines the app development process, eliminating the need for extensive coding knowledge. With a user-friendly interface and intuitive design, ThinkRoot caters to both novice and experienced developers, offering a seamless experience from concept to deployment. Whether you're a startup looking to prototype quickly or an individual with a creative vision, ThinkRoot provides the tools and resources to bring your ideas to life effortlessly.

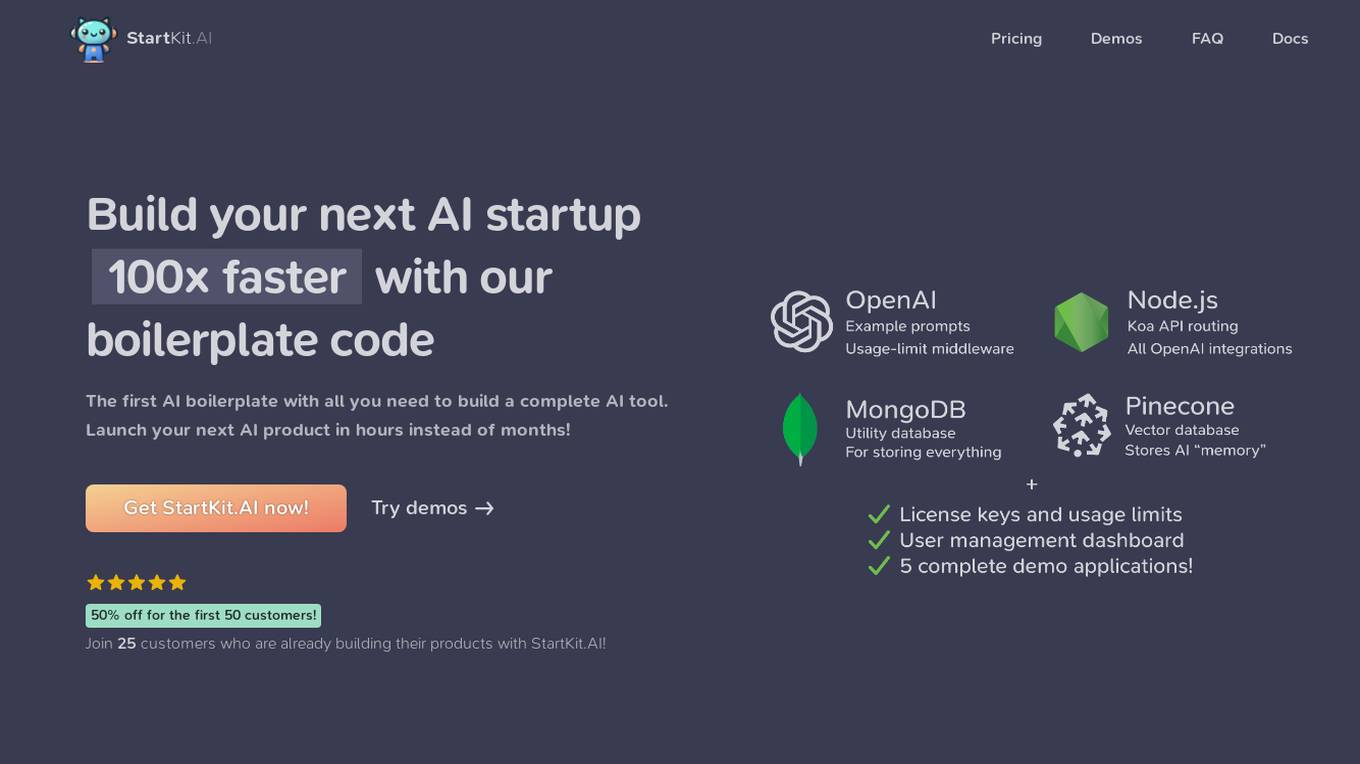

StartKit.AI

StartKit.AI is boilerplate code for AI products that is marketed as helping users build their AI startups 100x faster. It includes pre-built REST API routes for all common AI functionality, a pre-configured Pinecone setup for text embeddings and Retrieval-Augmented Generation (RAG) for chat endpoints, and five React demo apps to help users get started quickly. StartKit.AI also provides license key and magic link authentication, user & API limit management, and full documentation for all its code. Additionally, users get access to setup guides and one year of updates.

1 - Open Source AI Tools

Inngest

Inngest is a platform that offers durable functions to replace queues, state management, and scheduling for developers. It allows writing reliable step functions faster without dealing with infrastructure. Developers can create durable functions using various language SDKs, run a local development server, deploy functions to their infrastructure, sync functions with the Inngest Platform, and securely trigger functions via HTTPS. Inngest Functions support retrying, scheduling, and coordinating operations through triggers, flow control, and steps, enabling developers to build reliable workflows with robust support for various operations.
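The idea of a durable step function described above can be illustrated with a minimal sketch in plain Python. This is not the Inngest SDK: `StepRunner`, `order_workflow`, and the two example steps are hypothetical names chosen for illustration, and the in-memory `state` dictionary stands in for whatever persistent store a real platform would use. The sketch only shows the general pattern, in which each named step is retried on failure and its result is saved so that a re-run of the workflow skips steps that already succeeded.

```python
import time
from typing import Any, Callable, Dict


class StepRunner:
    """Toy stand-in for a durable-function runtime (hypothetical, not the Inngest SDK)."""

    def __init__(self, state: Dict[str, Any], max_attempts: int = 3, delay: float = 0.0):
        self.state = state              # saved step results, keyed by step id
        self.max_attempts = max_attempts
        self.delay = delay              # pause between retries, in seconds

    def run(self, step_id: str, fn: Callable[[], Any]) -> Any:
        # A step that already succeeded is not executed again; its saved result is reused.
        if step_id in self.state:
            return self.state[step_id]
        last_error = None
        for _ in range(self.max_attempts):
            try:
                result = fn()
            except Exception as exc:    # retry on any failure in this toy version
                last_error = exc
                time.sleep(self.delay)
            else:
                self.state[step_id] = result
                return result
        raise RuntimeError(
            f"step {step_id!r} failed after {self.max_attempts} attempts"
        ) from last_error


# Counters so we can observe how often each step body really runs.
calls = {"charge": 0, "email": 0}


def charge_card() -> str:
    calls["charge"] += 1
    return "charge-ok"


def send_email() -> str:
    calls["email"] += 1
    if calls["email"] < 2:              # fail once to simulate a transient error
        raise ConnectionError("transient failure")
    return "email-sent"


def order_workflow(step: StepRunner):
    receipt = step.run("charge-card", charge_card)
    status = step.run("send-email", send_email)
    return receipt, status


state: Dict[str, Any] = {}
result = order_workflow(StepRunner(state))
# Re-running against the same saved state replays results instead of re-executing steps.
result_again = order_workflow(StepRunner(state))
```

In this sketch the card is charged exactly once even though the email step had to be retried and the whole workflow was invoked twice, which is the property that lets step functions replace hand-written queue and state-management code.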

20 - OpenAI GPTs

Frontend Developer

AI front-end developer, expert in coding React, Next.js, Vue, Svelte, TypeScript, Gatsby, Angular, HTML, CSS, JavaScript & advanced in Flexbox, Tailwind & Material Design. Mentors junior, intermediate & senior front-end developers alike in coding & debugging. Let’s code, build & deploy a SaaS app.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.